When you get to a certain age, and you buy a lot of albums, you sorta inevitably end up with several versions of the albums you like the most, don’t you? Or is that just me?

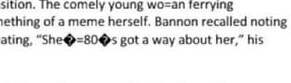

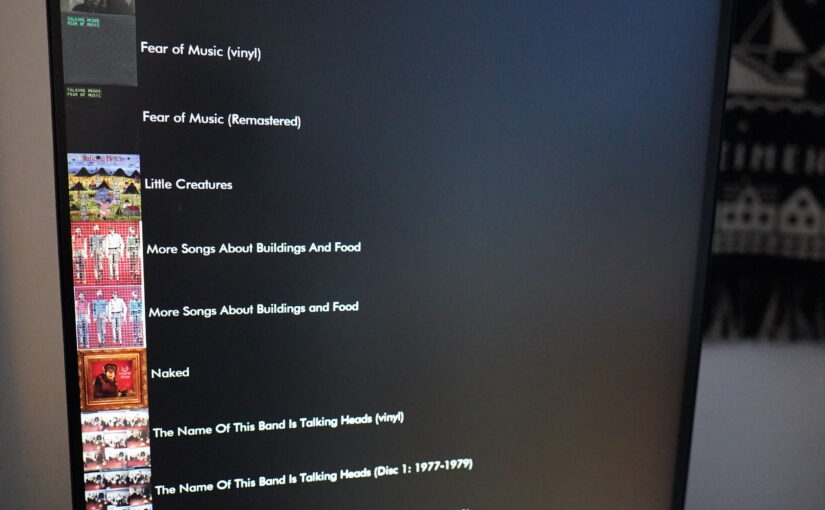

For instance, Talking Heads released eight studio albums in their time, and I have… 24 things from them in /music/repository/Talking Heads/. The situation is even worse with David Bowie, who released 26 studio albums, and I have 166 things in /music/repository/David Bowie/.

(Yes, I rip everything to flac and play from the computer.)

This over-shopping happens for a variety of reasons — with Talking Heads it’s because later versions of the albums have been fucked around with so much in “remastering” that I find them annoying, so I’ve ended up buying several old versions to find a good one.

(For the ’77 album, you have to buy a vinyl from the first few years; in later versions, banjos suddenly appear on several tracks. I’ve read that people have similar problems on streaming services, too? They have a favourite album, but then all of a sudden it’s swapped out with a remaster? What a total, absolute nightmare! *gasp* *shock*)

For David Bowie, it’s just that I’ve got a ton of live albums, and box sets, and singles and singles and singles…

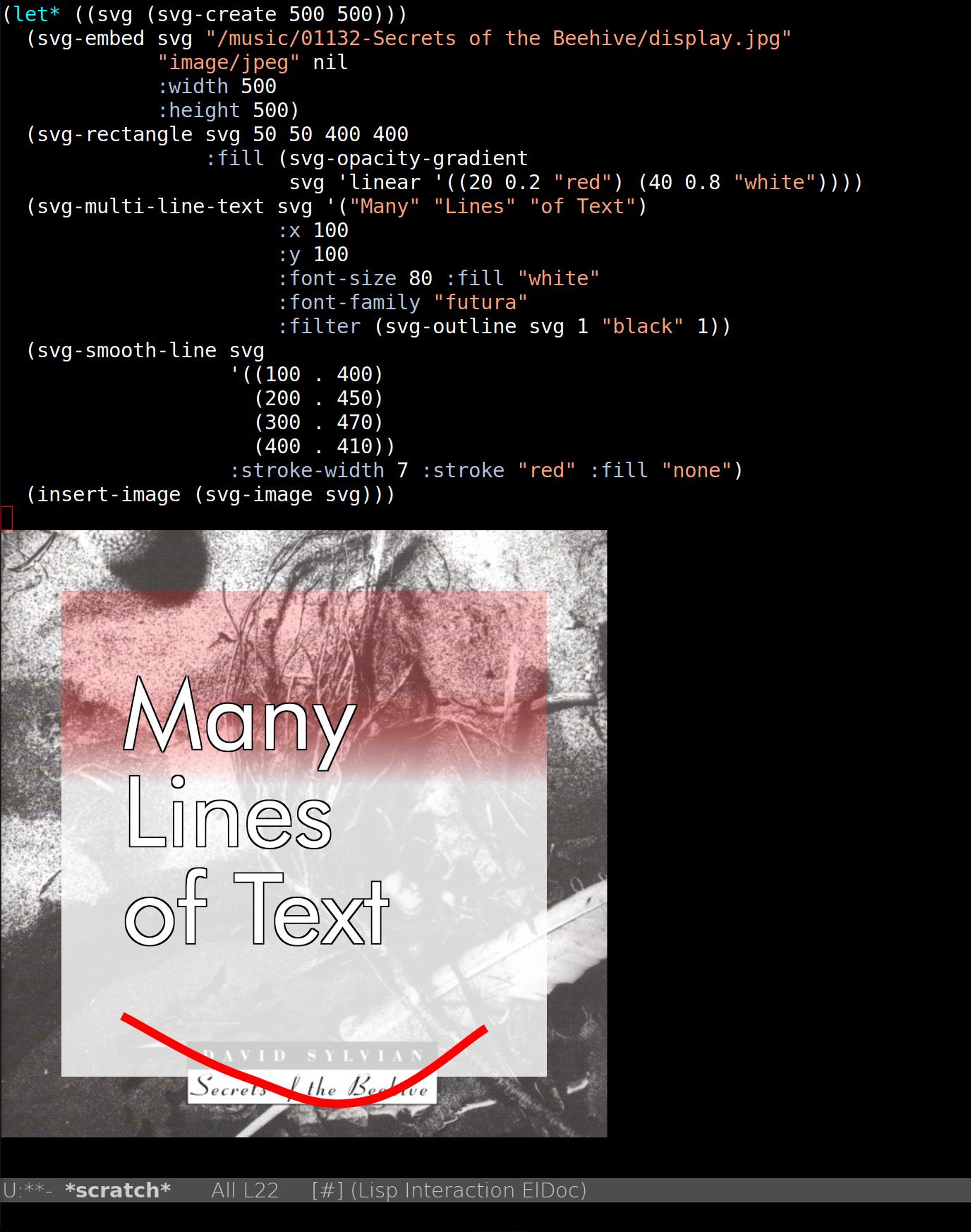

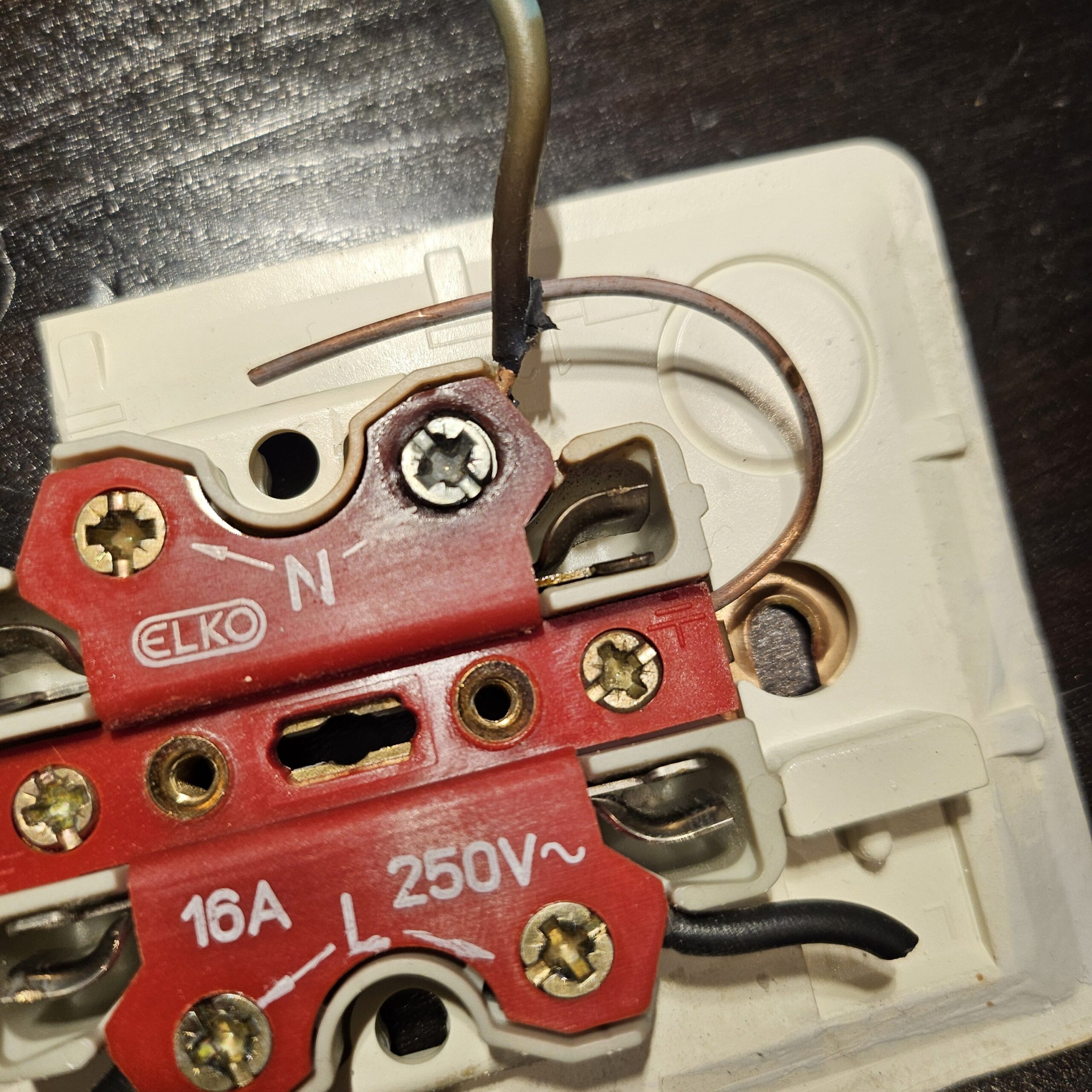

Anyway, the end result is that I’m spending an inordinate amount of time finding the “real” albums for these artists… so I wondered whether I could just quickly hack up a categorisation system that would cover my use case here? Here’s what I came up with:

I typed away a bit, and:

Tada! Now I can listen to the first five Talking Heads albums without futzing around. (I pretend the last three albums don’t exist.)

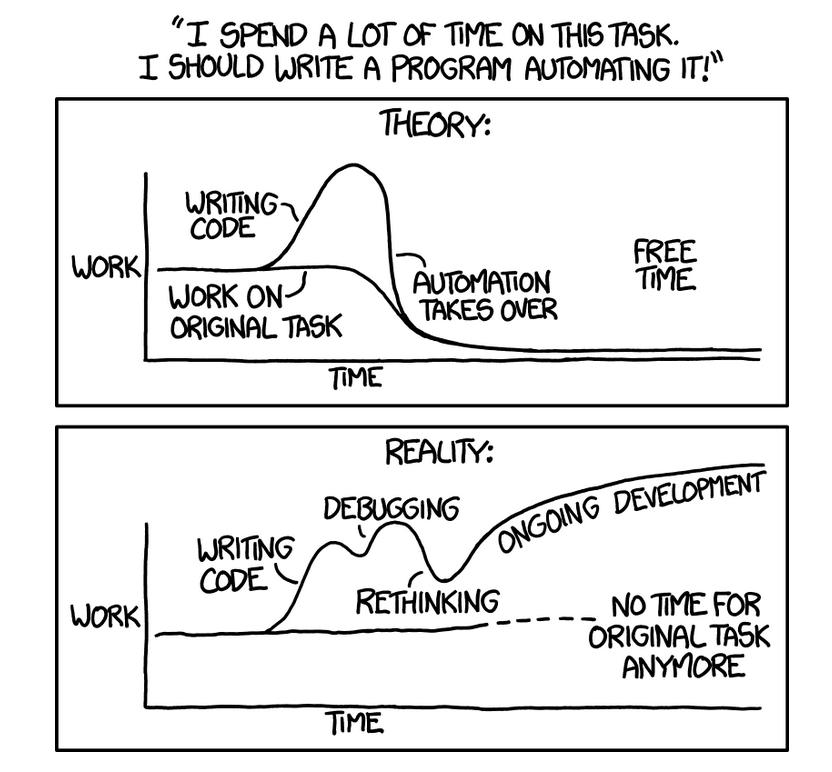

(Yeah yeah yeah.)

But what about ol’ Bowie?

WHAT A NIGHTMARE

That’s better. But I found while tagging up the albums that there are certain EPs and live albums that I consider essential, and yet I didn’t tag up David Live here, for instance, so I’m a bit inconsistent. But whatevs! I’m gonna save hours and hours!
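(If you want to play along at home: the idea is just "put a tag on the album directories you care about, then only list the tagged ones". Here's a minimal Python sketch of that idea. It's not the code I'm actually running, and the `.tags.json` marker file, the `essential` tag name and both function names are made up for illustration.)

```python
import json
from pathlib import Path

# Hypothetical marker file stored inside each album directory.
TAG_FILE = ".tags.json"


def tag_album(album_dir: Path, tag: str) -> None:
    """Add a tag to an album directory, keeping any tags already there."""
    tag_path = album_dir / TAG_FILE
    tags = set(json.loads(tag_path.read_text())) if tag_path.exists() else set()
    tags.add(tag)
    tag_path.write_text(json.dumps(sorted(tags)))


def albums_with_tag(artist_dir: Path, tag: str) -> list[str]:
    """Return the names of the album directories under artist_dir carrying the tag."""
    result = []
    for album_dir in sorted(p for p in artist_dir.iterdir() if p.is_dir()):
        tag_path = album_dir / TAG_FILE
        if tag_path.exists() and tag in json.loads(tag_path.read_text()):
            result.append(album_dir.name)
    return result
```

Calling `tag_album()` on the handful of directories you consider the "real" albums and then `albums_with_tag(artist_dir, "essential")` gives you the short list; everything else stays on disk, just out of sight.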

Fortunately, there’s only a couple of handfuls of artists that I have this problem with, so I don’t have to tag a lot…

Heh, I’ve got 91 Coil things — with an extended bootleg “archive” version of each EP, for instance… OK, there’s more than a handful of artists that need tagging. *rolls up t-shirt sleeves*

Fortunately, this new system means that I can buy even more totally marginal albums, and then just hide them away after listening to them a couple of times.

Didn’t Peter Gabriel also do a German version of another album!? Now that I don’t have to skip them every time I want to play the first four albums… *gasp*