I was looking for an old draft blog post, and I realised that I had a whole bunch of abandoned blog posts here. Perhaps I should just make an omnibus of abandoned drafts, mostly abandoned for being too boring to post? Sure! Makes sense! This’ll be the most entertaining blog post ever!

I’ll include them all below, no matter how inane, in reverse chronological order:

2026-02-08: February Books

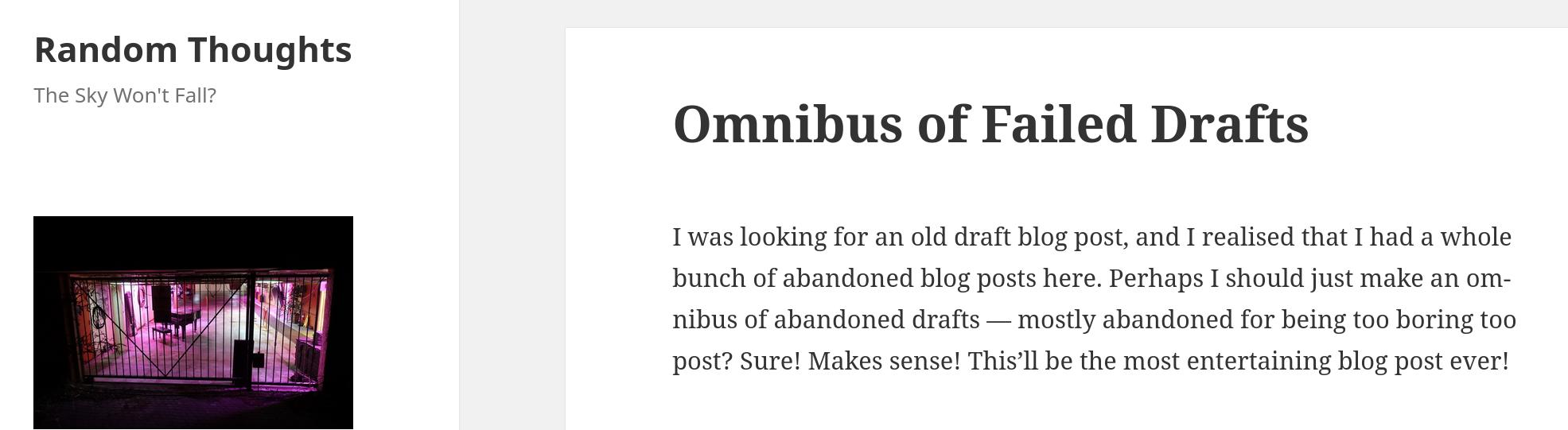

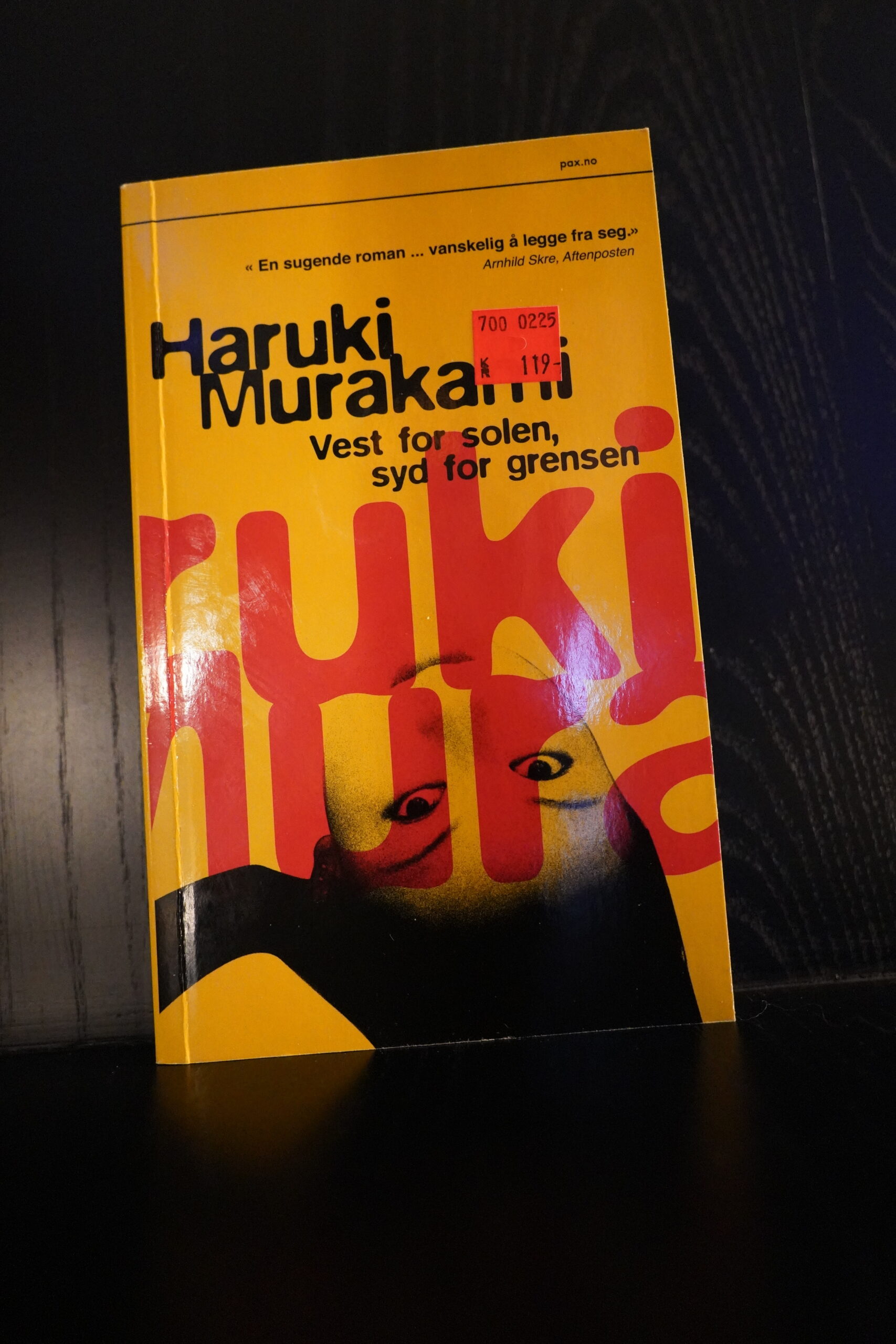

I’ve read plenty of Murakami books, and I’ve enjoyed them all. Er… like… fifteen? One of the last ones, 1Q84, I read in 2017 and I thought “well, OK, perhaps this is enough” — even though I enjoyed reading it, his schtick had just become so familiar that… well… it’s enough already. The protagonist who likes swimming or running, who enjoys jazz and cigarettes and is something like an author, who has a girlfriend that goes mysteriously missing… it’s all fine. It’s OK. And I like the lackadaisical straightforward way everything is written, but c’mon.

So I thought “this is fine, but it’s enough”, but I apparently picked this book up at a sale last year… and I started reading it and got to page 40 and I went “I was right when I said ‘that’s enough’”, because while this seems like a perfectly enjoyable book, I’m just not into this any more, and I ditched it.

I’ve got two more Murakami books on my shelves that I haven’t read yet, and I don’t think I’ll attempt to for quite a few years. Perhaps I’ll get the urge again one of these years.

I diffidently started a monthly blog post series about books I read, but I realised that I didn’t really find that entertaining enough, so the second one was abandoned. But the above had been written. There you go.

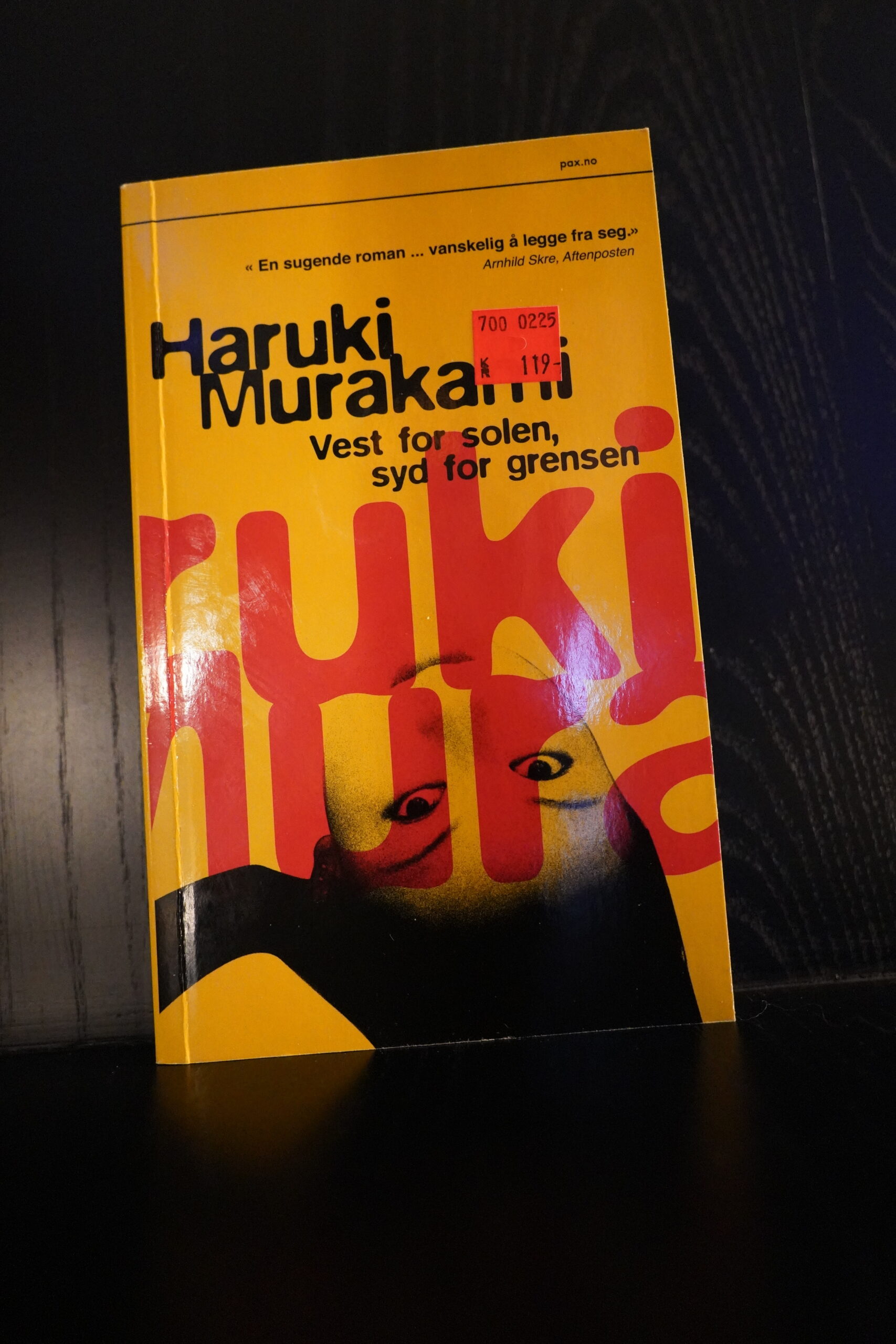

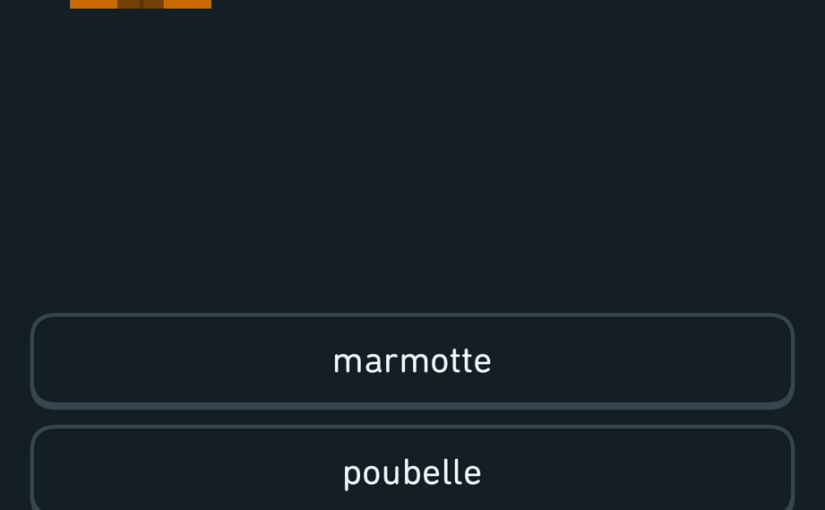

2025-07-28: Today’s confusing tracking page

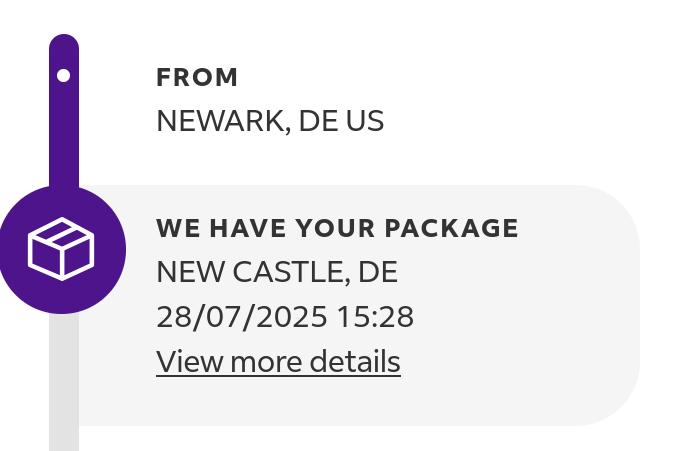

Newark, DE US!? New Castle, DE!?

I’m on the edge of my seat about what the next location is going to be.

This is indeed confusing — “DE” is presumably Delaware? But Newark is in New Jersey, isn’t it? Anyway, not really funny.

2025-04-30: Small Screens At Night

That’s what a bench in my kitchen looks like at night now, and I thought that was vaguely amusing, but it’s a head-scratcher for sure.

2025-04-29: Draft

I’ve been trying to wean myself off Twitter (and Bluesky). If only they were more entertaining, but they’re not, and it just feels like a waste of time to keep scrolling. I don’t have any big theory of why the feeds don’t work better (I get lots of posts repeated, and lots of posts with 7 views that go like “I know!” and that’s it, or “Yeah, the SKD with the PMCs flurdle Thomas”, totally oblique stuff), but I’m wondering whether it’s to trigger some kind of hunting instinct. If there was just one fun thing after another, you’d grow tired of all the interesting posts? It would become an obligation and a chore to go through all the posts? But since there’s twenty bad posts for every good one, you get a kind of rush when you get to that one good one?

I dunno. You shouldn’t ascribe cleverness to something where the more likely explanation is that the people who implemented the algos suck. But the end result is that I feel I can’t justify spending time hunting for pearls. It’s just not a sane way to spend time.

So I try to limit it to doing it one time per day only — after I get up, before eating breakfast.

It was hard to de-train my fingers. If I have Firefox up, reading something, if my mind starts wandering for a second, my fingers will automatically, by reflex, type Ctrl+t t RET, which takes me to Twitter. The trick is then to Ctrl+w before the page loads — and since Twitter is so embarrassingly slow, there’s plenty of time.

This was just too too boring. And, of course, I failed miserably — I’m back to reading Twitter again now. I’m still trying to cut down.

2025-04-26: The most elaborate bird perches

I thought the image was vaguely amusing, but I just couldn’t come up with a funny title.

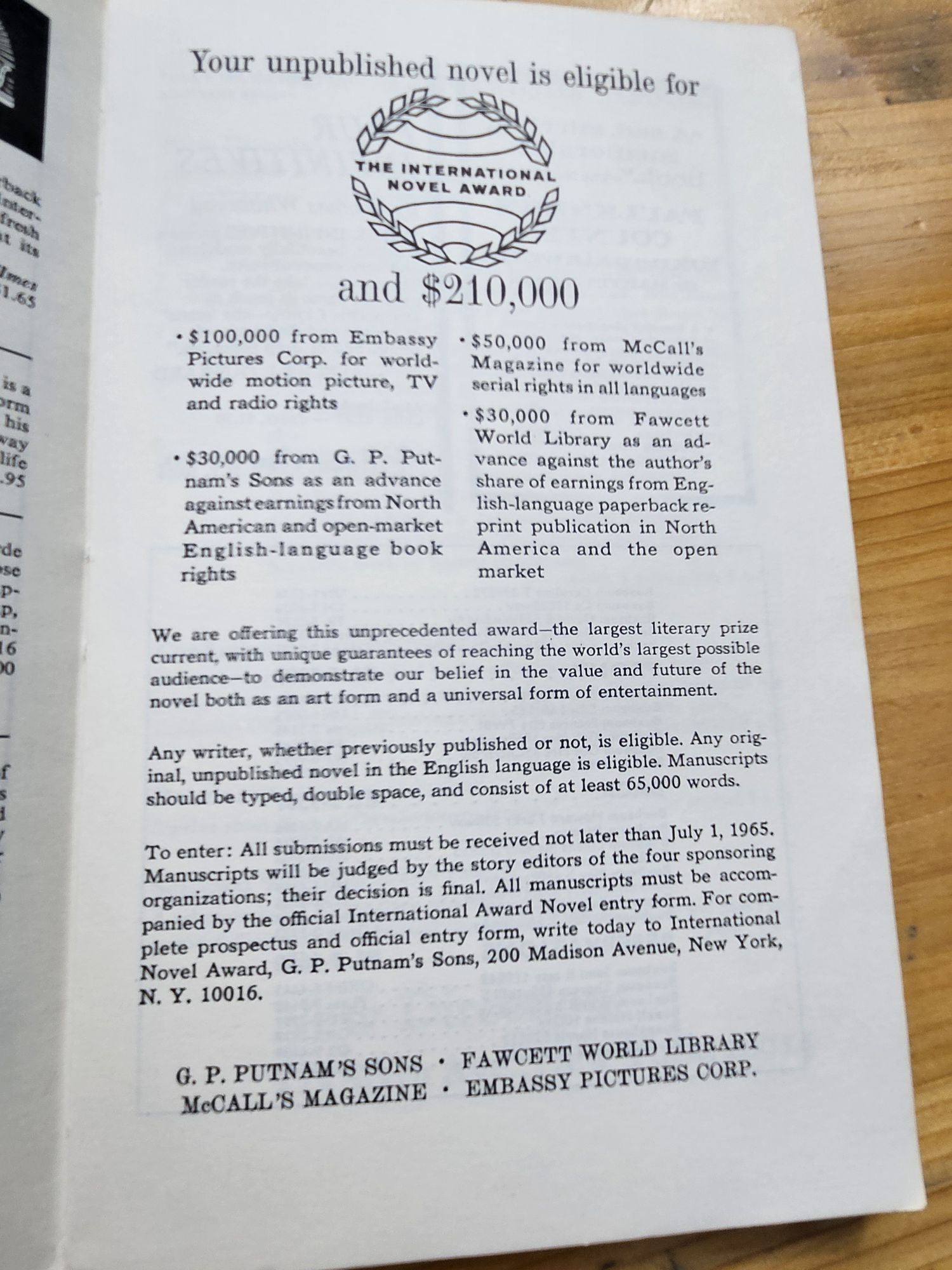

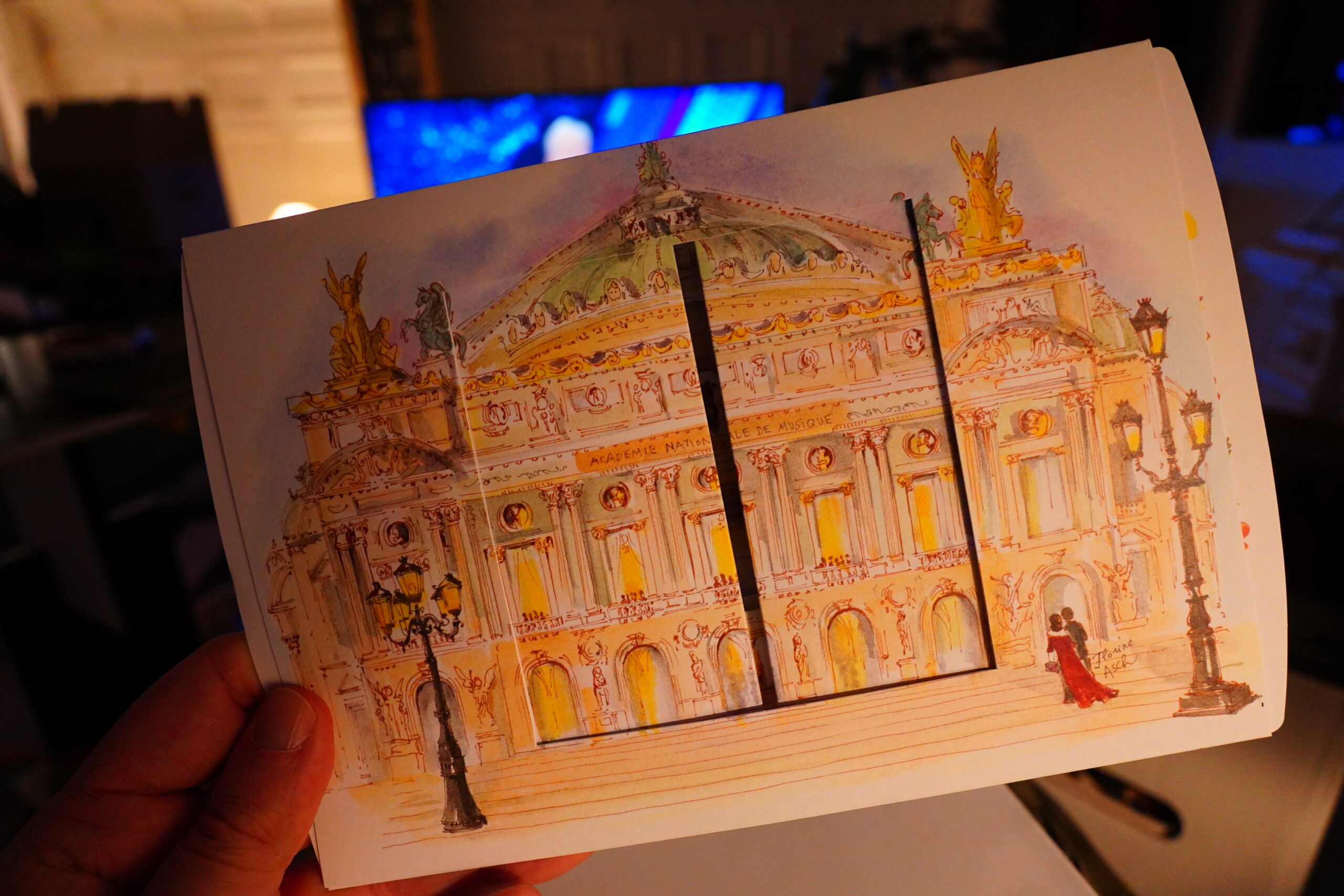

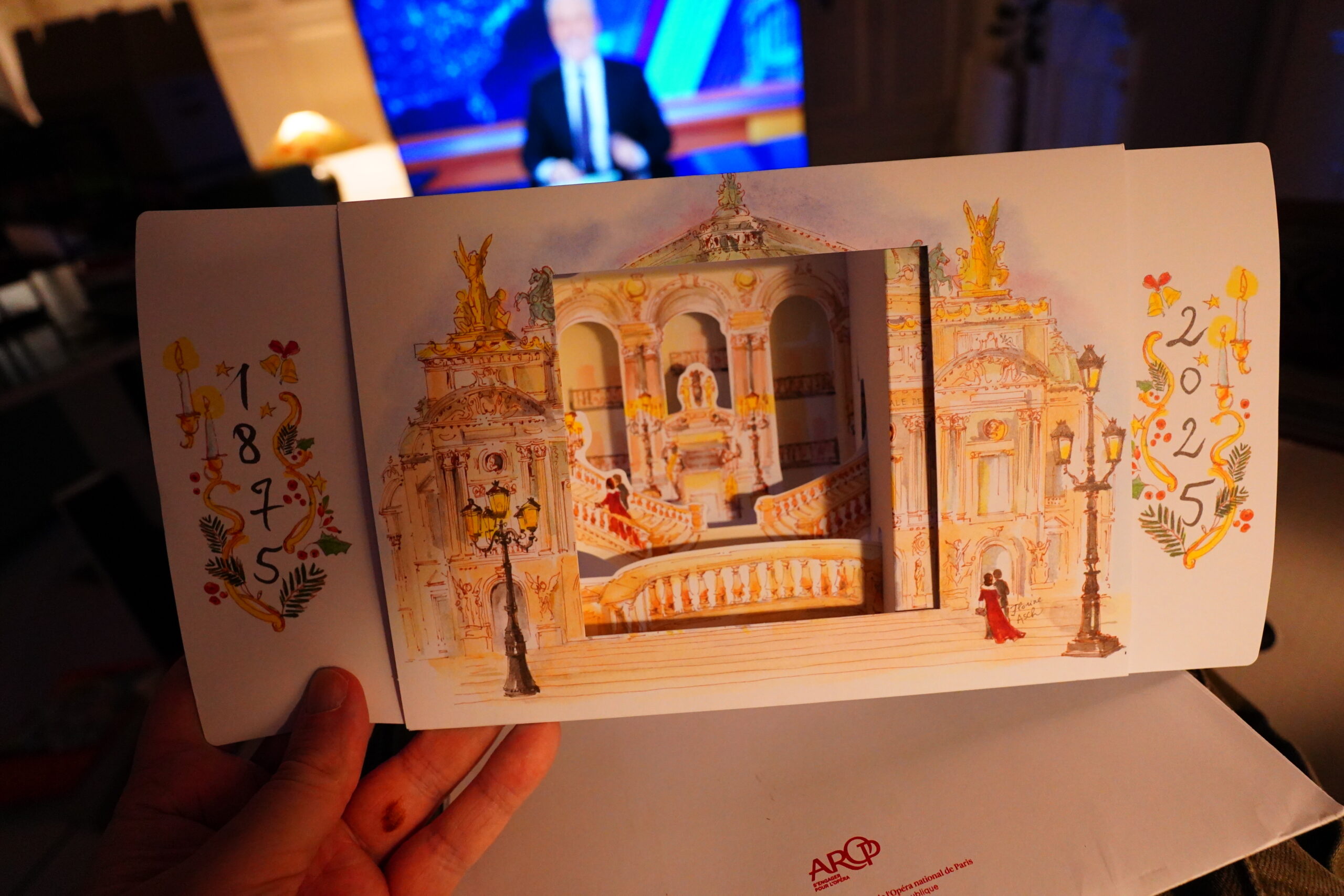

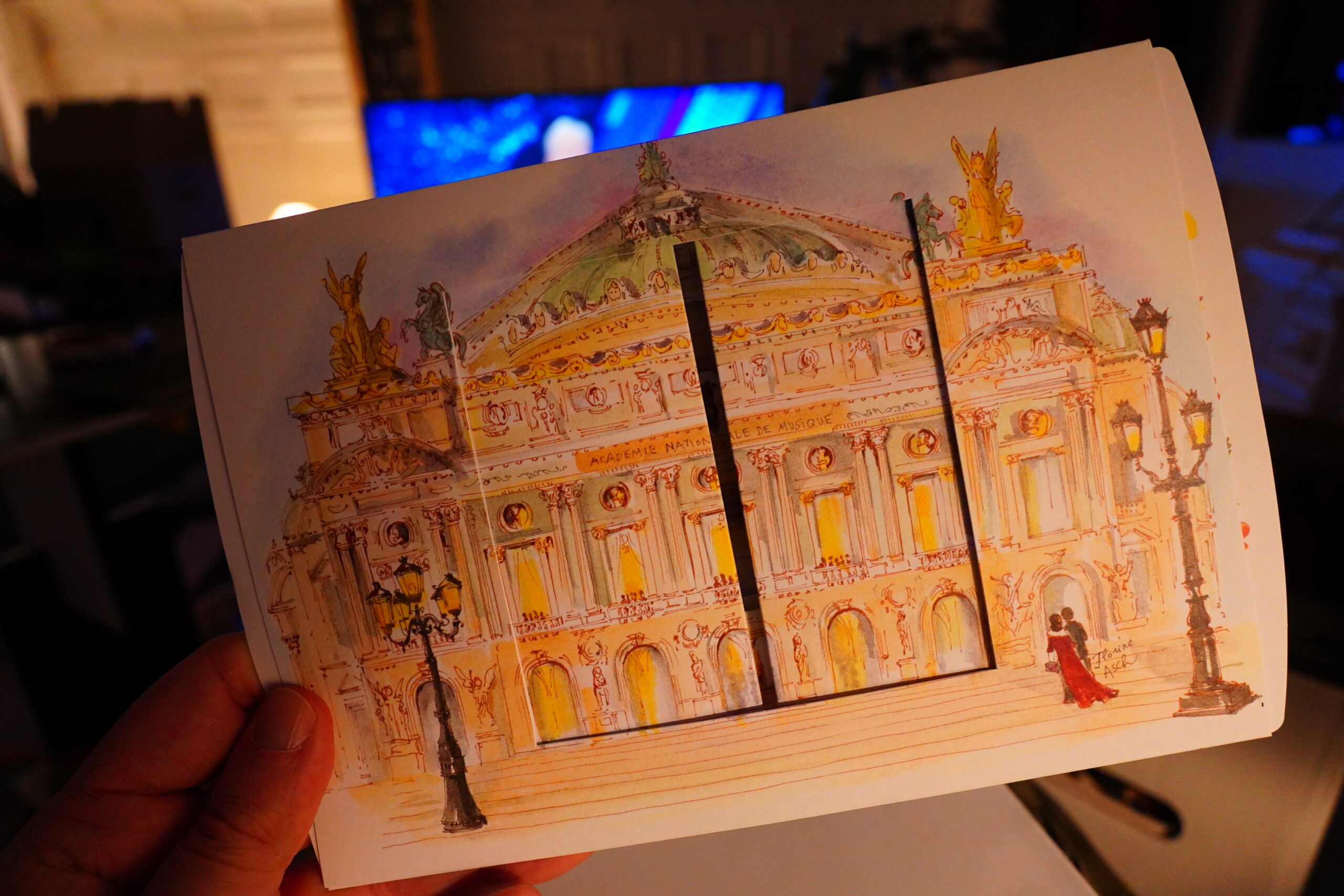

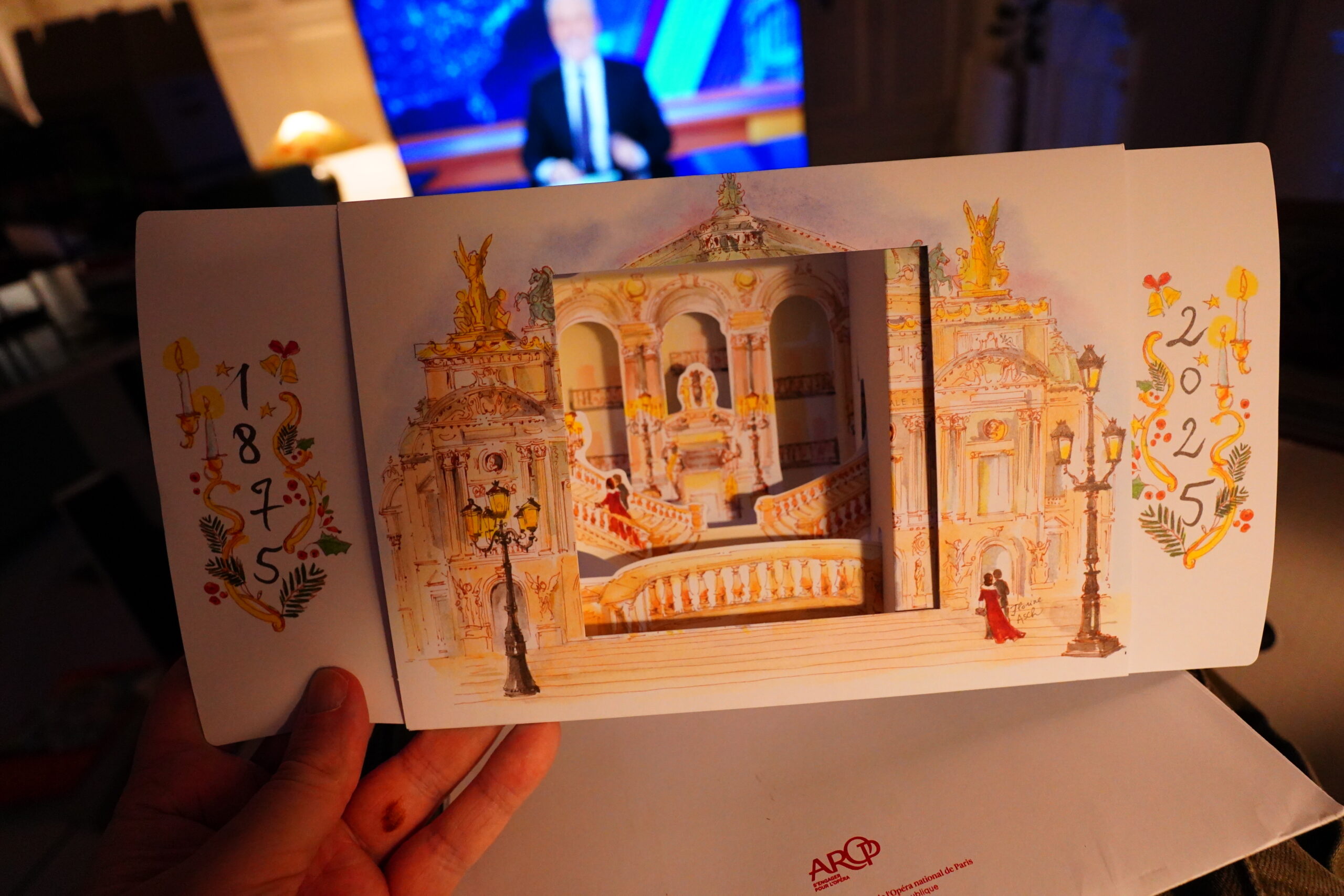

2025-01-28: French Opera has the best mail

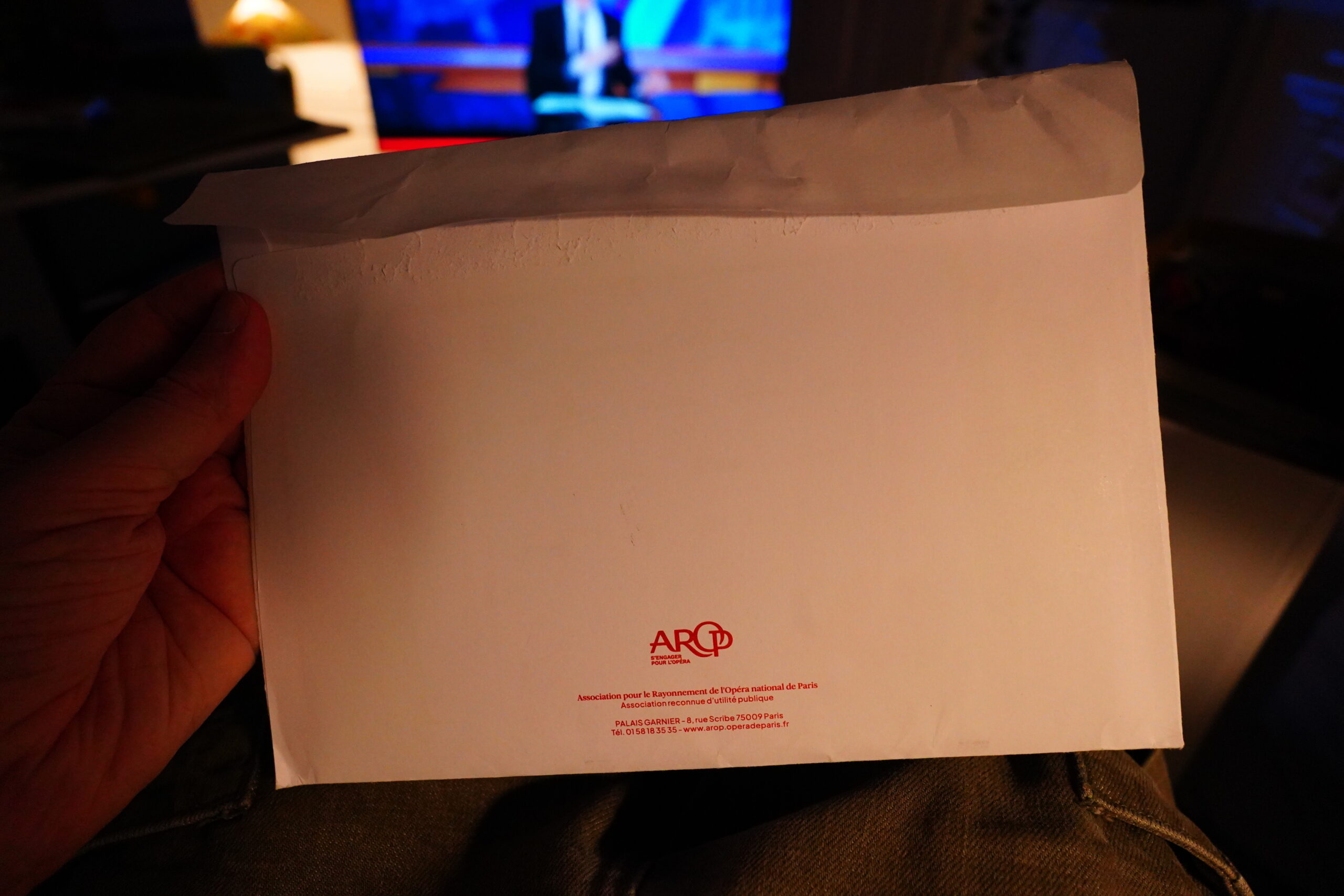

I got mail.

See… it’s a normal postcard…

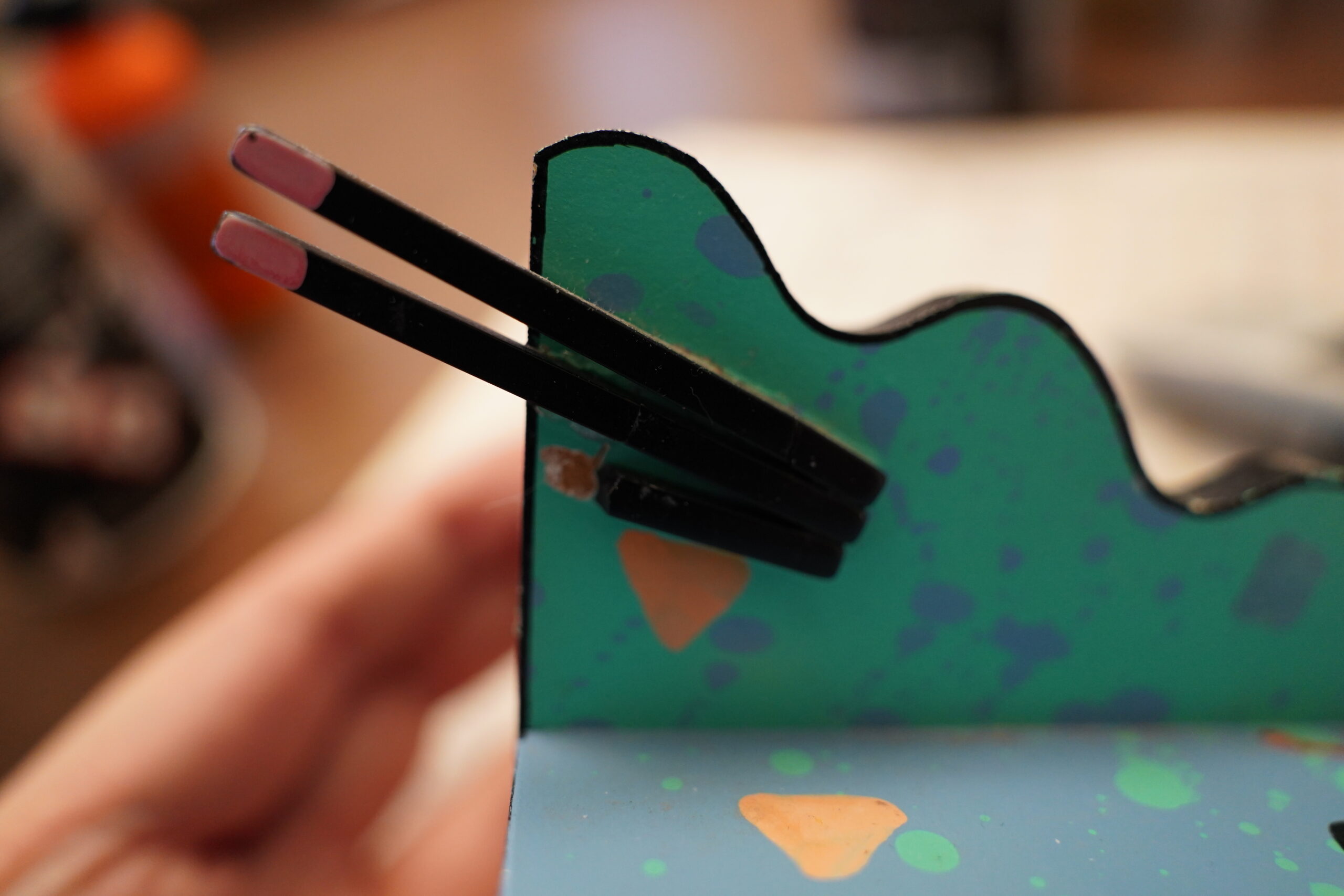

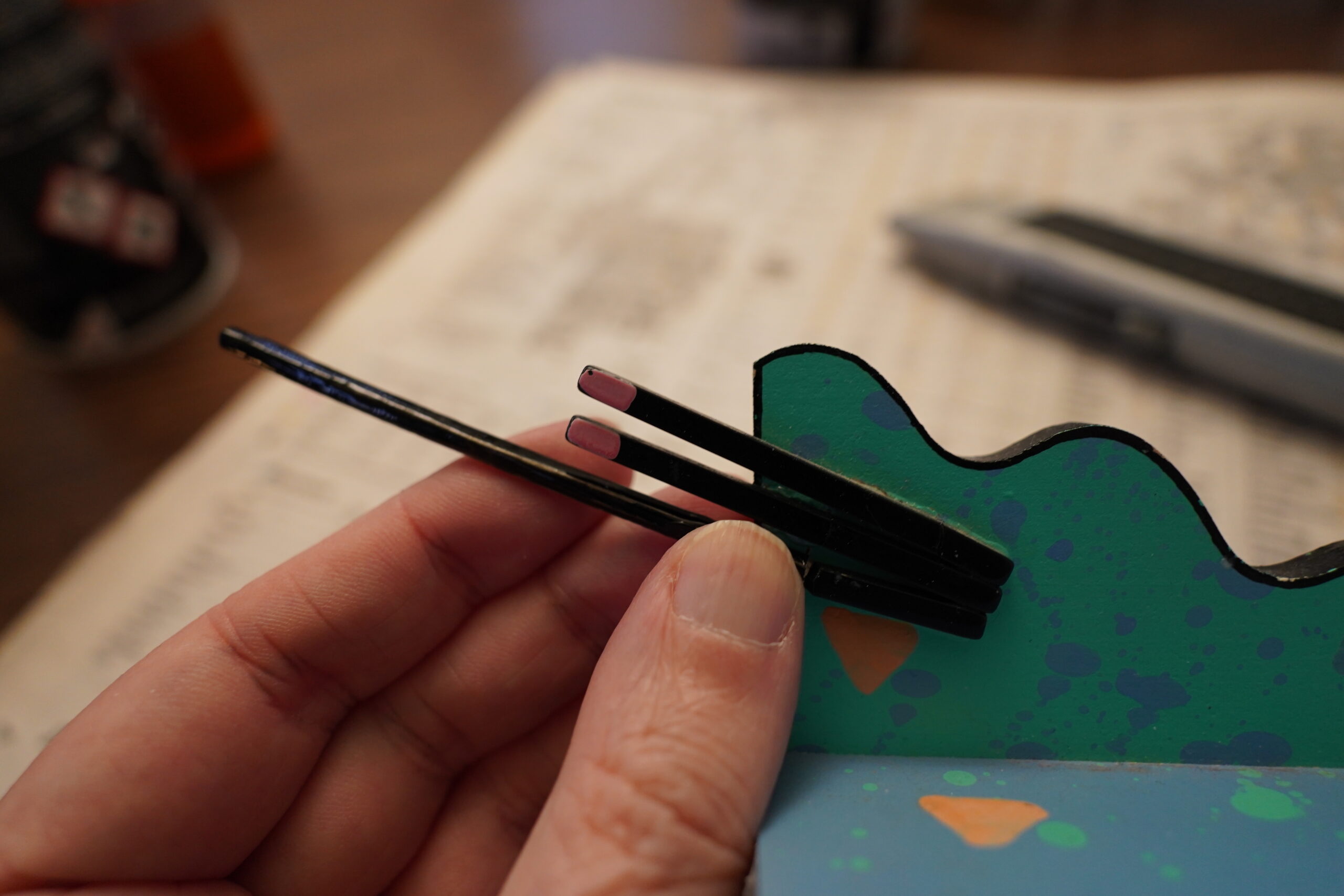

BUT THEN YOU PULL IT AND IT GETS 3D!!!!

The French opera people are the best.

The title here is true enough — I continue to get very elaborate mailings from the opera. But… c’mon.

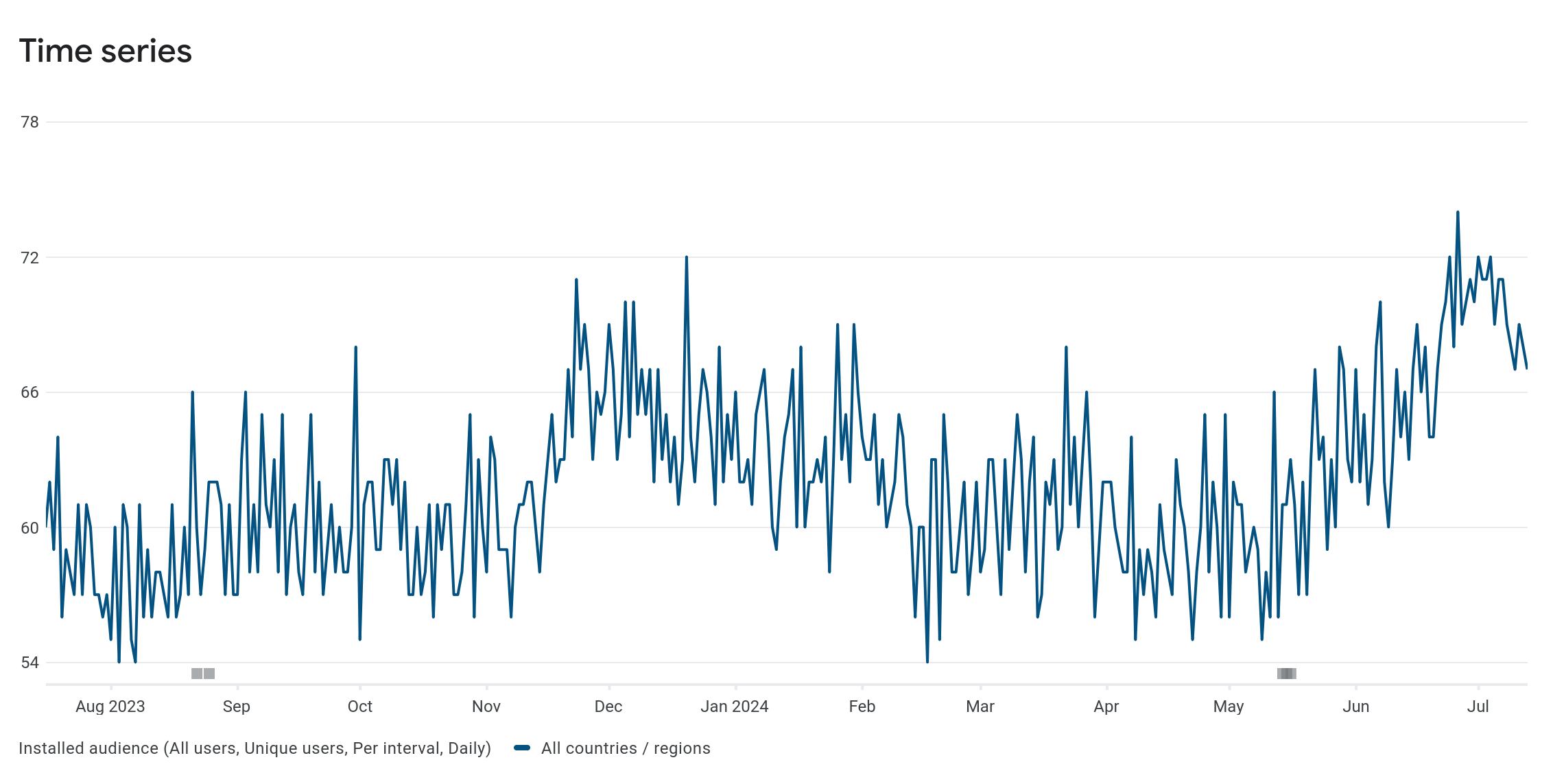

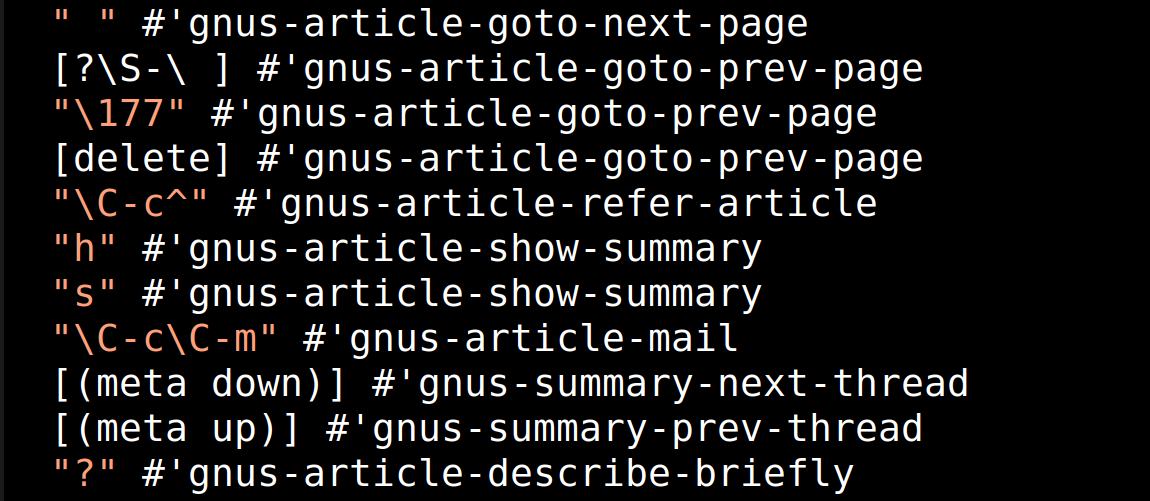

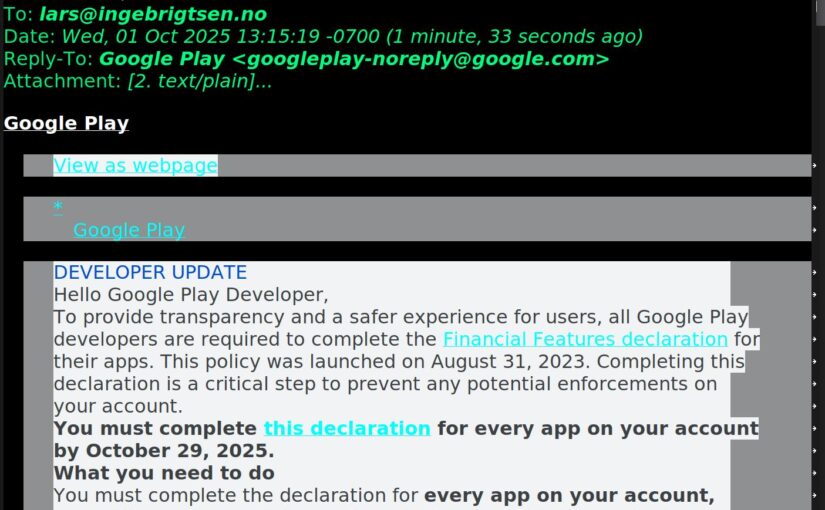

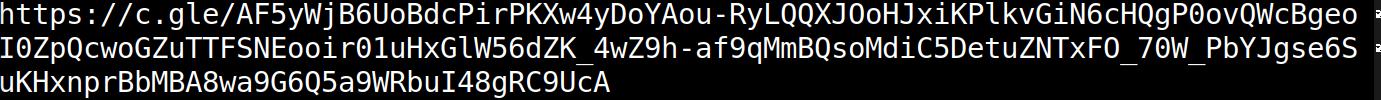

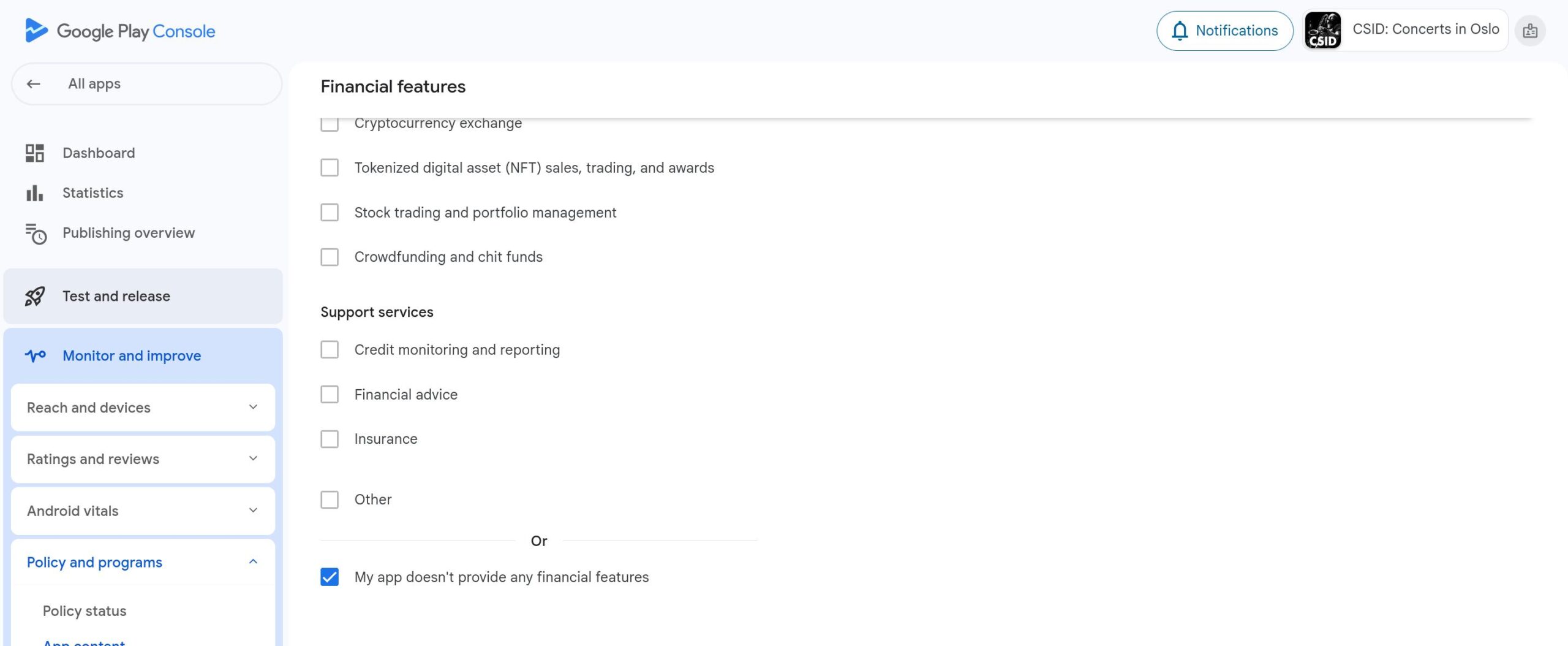

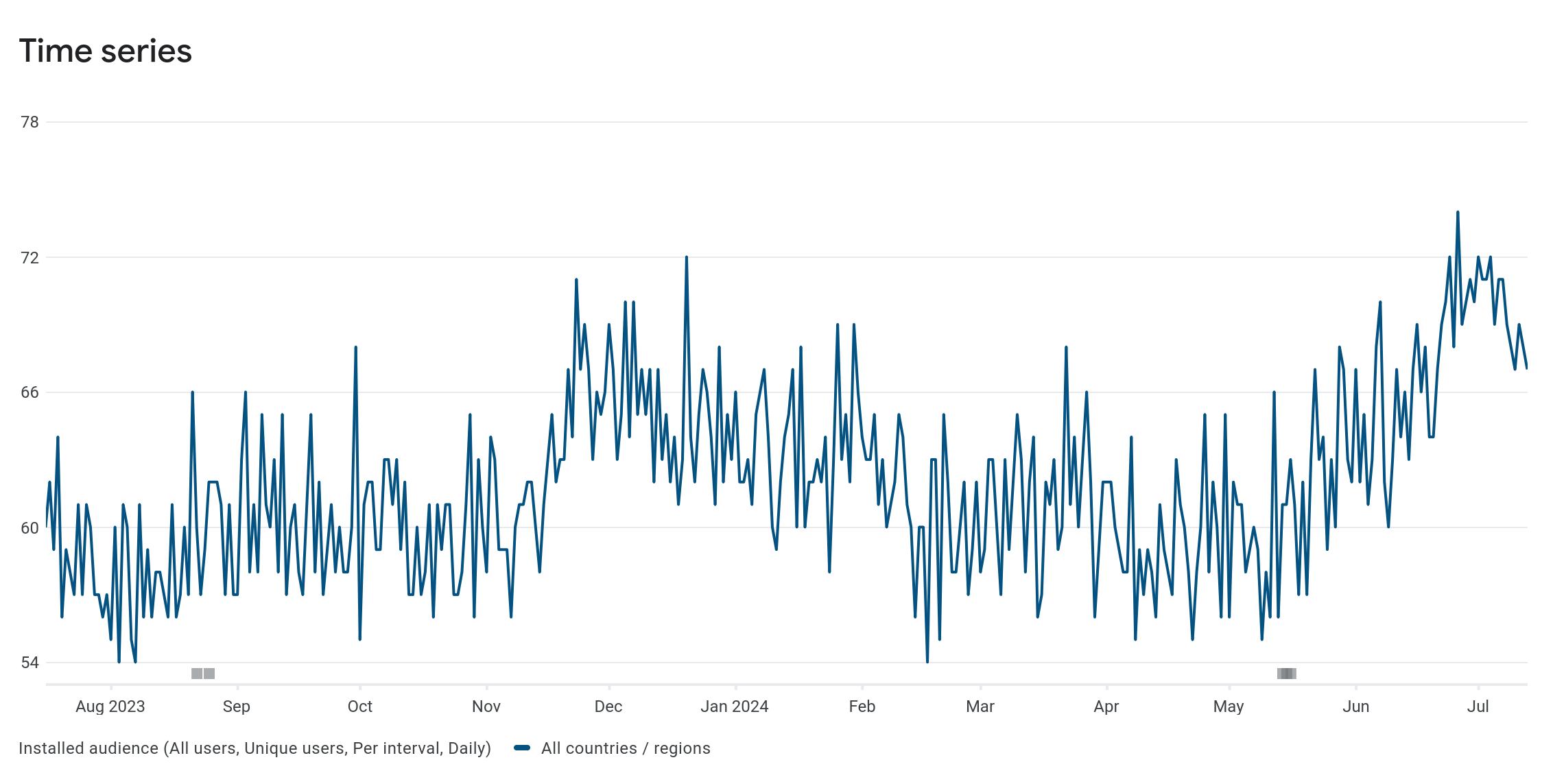

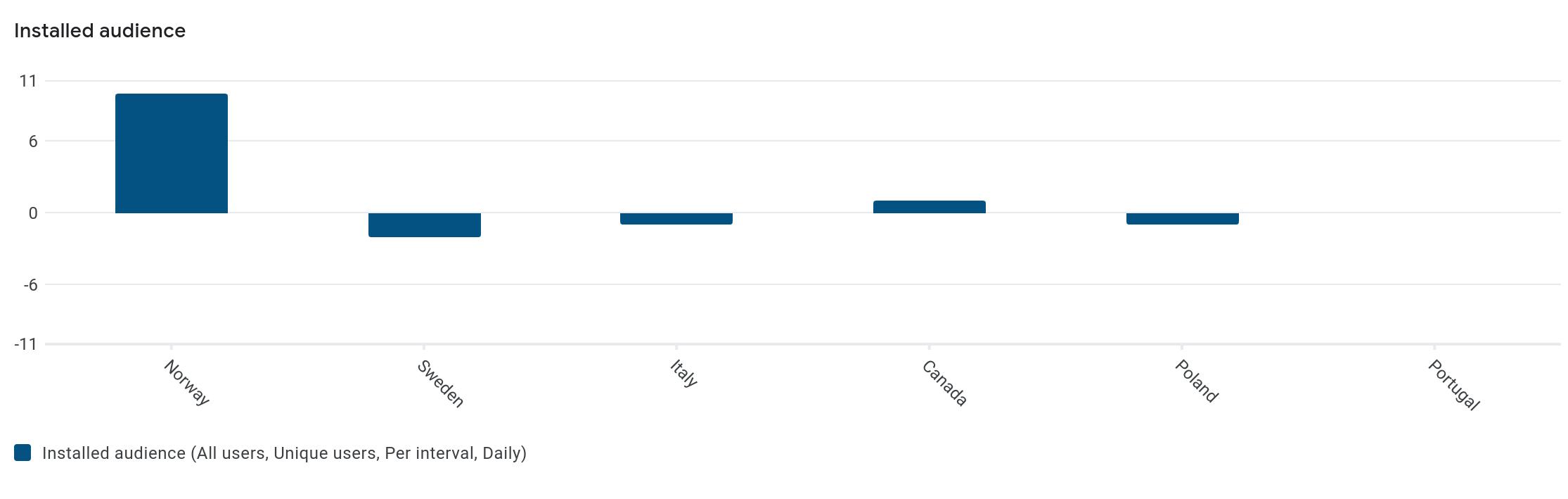

2024-07-15: More eplot

I’m, of course, still looking at charts and being judgemental.

This is from Google Console, and the axes are pretty reasonable. I like the “Aug 2023” at the start, and then just “Sep”, “Oct” until it gets to 2024. I think I’ll steal that idea…
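Here’s a minimal sketch of that labelling idea in Emacs Lisp: only show the year on the first label and when the year changes. The function name `my-month-labels` and the input format (a list of (YEAR MONTH) pairs) are made up for illustration, and showing the year again at the January rollover is my reading of the chart, not something taken from Google’s code.

```elisp
(defun my-month-labels (months)
  "Return axis labels for MONTHS, a list of (YEAR MONTH) pairs.
The year is only included on the first label and whenever it
changes from the previous entry."
  (let ((names ["Jan" "Feb" "Mar" "Apr" "May" "Jun"
                "Jul" "Aug" "Sep" "Oct" "Nov" "Dec"])
        (previous-year nil)
        (result nil))
    (dolist (entry months)
      (let ((year (car entry))
            (name (aref names (1- (cadr entry)))))
        ;; Only spell out the year when it differs from the last one.
        (push (if (eql year previous-year)
                  name
                (format "%s %d" name year))
              result)
        (setq previous-year year)))
    (nreverse result)))

(my-month-labels '((2023 8) (2023 9) (2023 10) (2024 1) (2024 2)))
;; => ("Aug 2023" "Sep" "Oct" "Jan 2024" "Feb")
```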

The Y axis isn’t horrible, but it’s pretty meh. 4 as a “pretty number”? It’s not that pretty.

This is from the same page, and is awful. Why is the Y axis going 0/6/11? 11?!? Where did that come from? It turns out that the highest bar there is actually ten.

And then they extend the Y axis the same length into the negative zone? That makes no sense whatsoever.
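For comparison, here’s a rough sketch of the usual way to pick “pretty” tick values: only allow steps of 1, 2 or 5 times a power of ten, and start the axis at zero when nothing is negative. The names `my-nice-step` and `my-axis-ticks` are hypothetical, and the code assumes non-negative integer data; it isn’t what Google (or eplot) actually does.

```elisp
(defun my-nice-step (max-value max-intervals)
  "Return a tick step of the form 1, 2 or 5 times a power of ten.
The step is the smallest one that covers MAX-VALUE (a non-negative
integer) in at most MAX-INTERVALS intervals."
  (let ((intervals (max 1 max-intervals))
        (scale 1)
        (step nil))
    (while (null step)
      (dolist (mantissa '(1 2 5))
        (let ((candidate (* mantissa scale)))
          ;; (ceiling A B) is the number of intervals needed to reach A.
          (when (and (null step)
                     (<= (ceiling max-value candidate) intervals))
            (setq step candidate))))
      (setq scale (* scale 10)))
    step))

(defun my-axis-ticks (max-value max-intervals)
  "Return a list of tick values from 0 up to the first tick >= MAX-VALUE."
  (let* ((step (my-nice-step max-value max-intervals))
         (top (* step (max 1 (ceiling max-value step)))))
    (number-sequence 0 top step)))

(my-axis-ticks 10 2)          ; => (0 5 10)
(my-axis-ticks 3000000000 3)  ; => (0 1000000000 2000000000 3000000000)
```

With a highest bar of ten, this gives 0/5/10 instead of 0/6/11, and it never extends into the negative zone.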

(Yes, I realise whoever was programming this page was probably looking at the stats for Fortnite, where the Y axis goes to three billion and not, er, 11, and probably made that look all nice and proper, but it’s these corner cases that take effort…)

This is from when I implemented some charting stuff for Emacs, and I was looking at other chart libraries to see how bad they all are. I mean, for inspiration. But this post didn’t really go anywhere…

2024-07-13: Orbital isn’t poppin’

This is from London, and it’s Orbital’s pop-up shop. But it really looks like I’m creepily snapping pics of these women instead of the shop, so I didn’t post it.

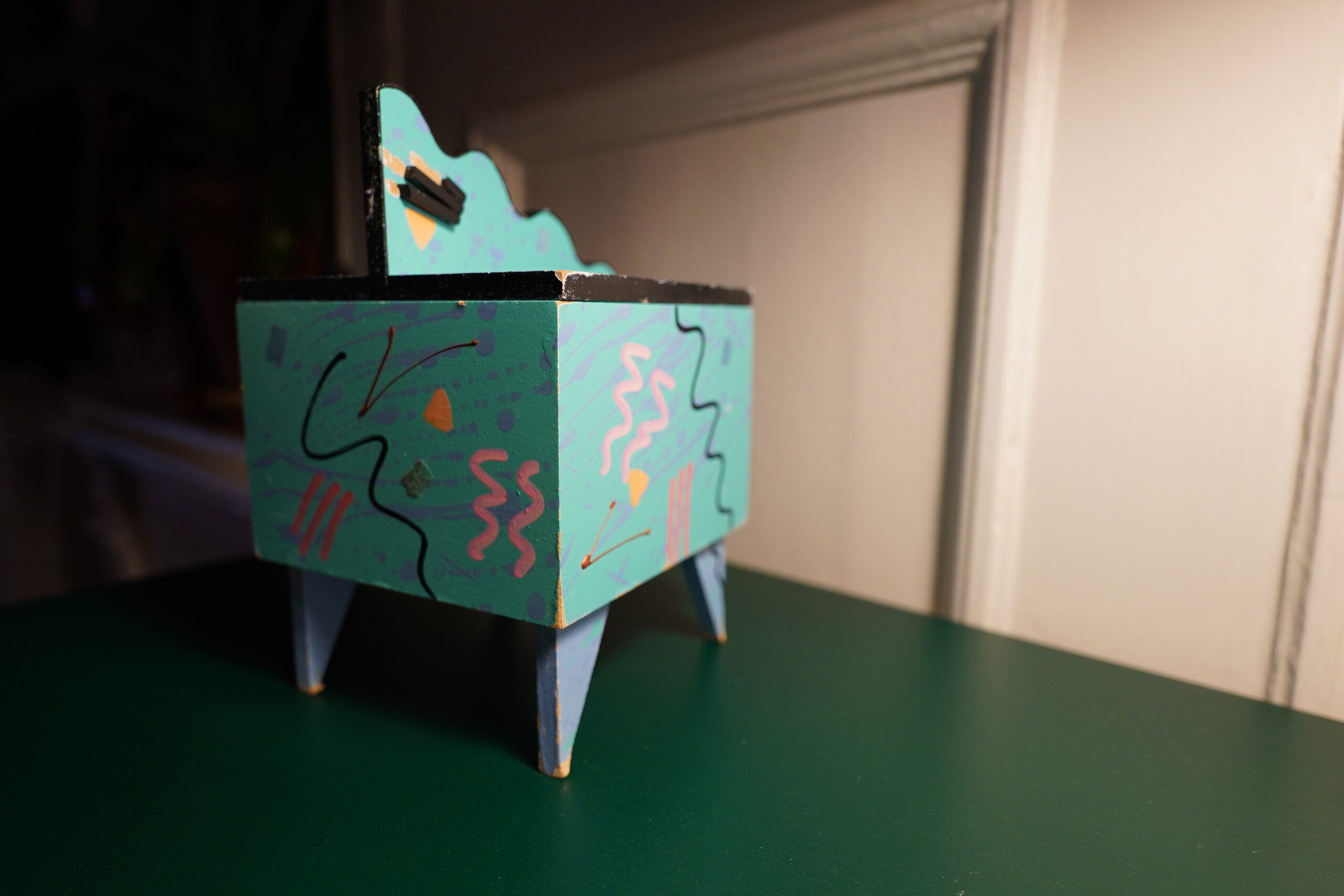

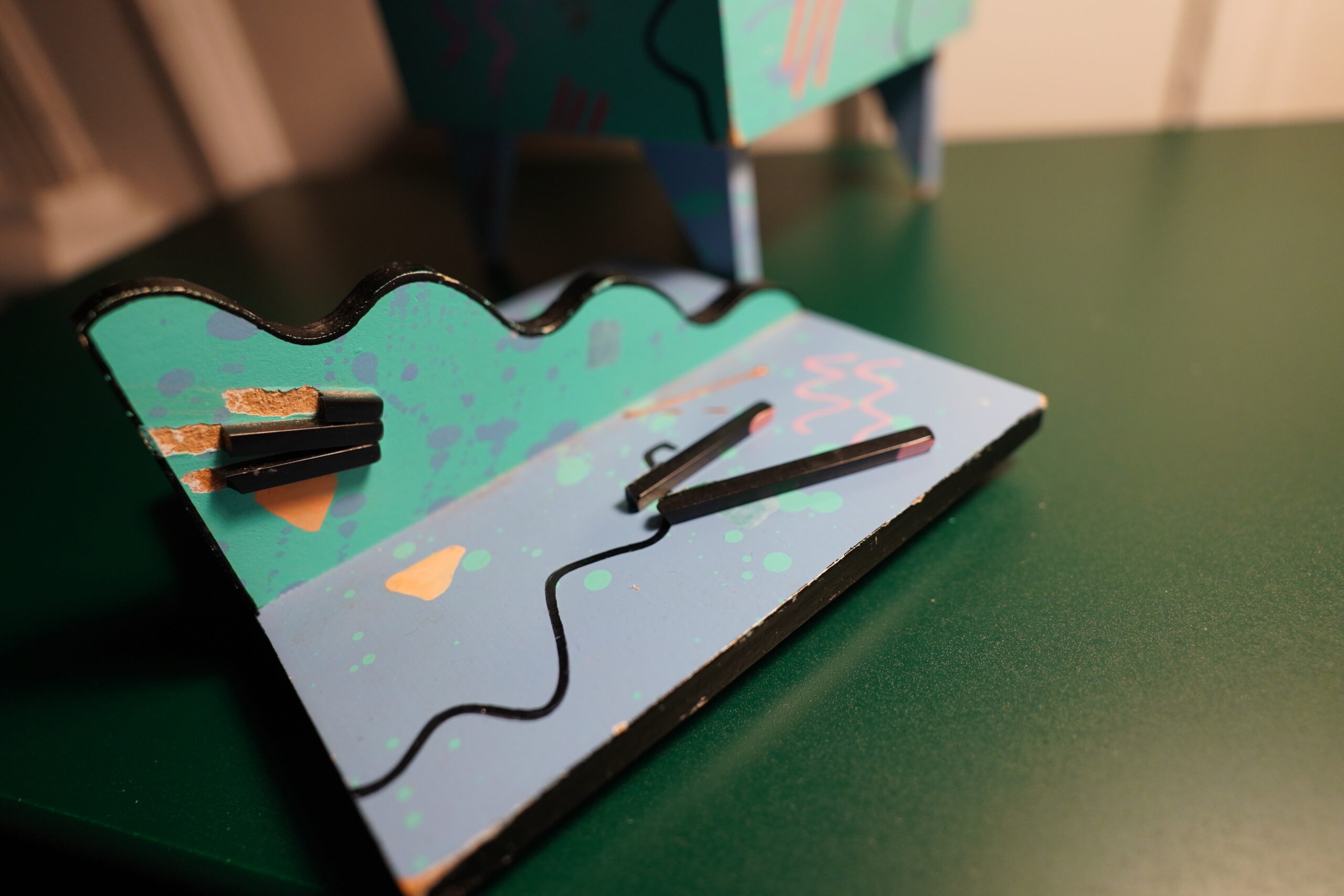

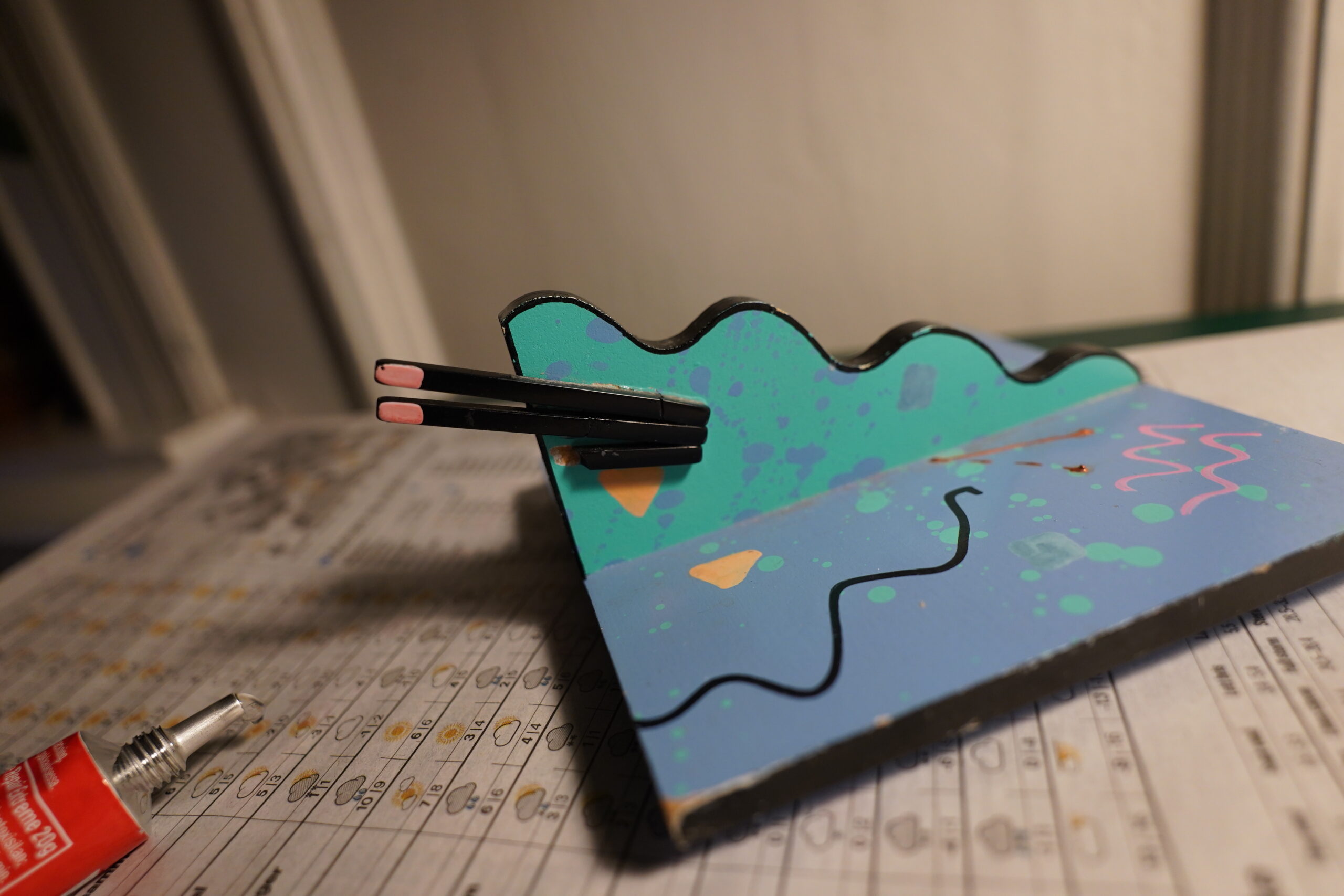

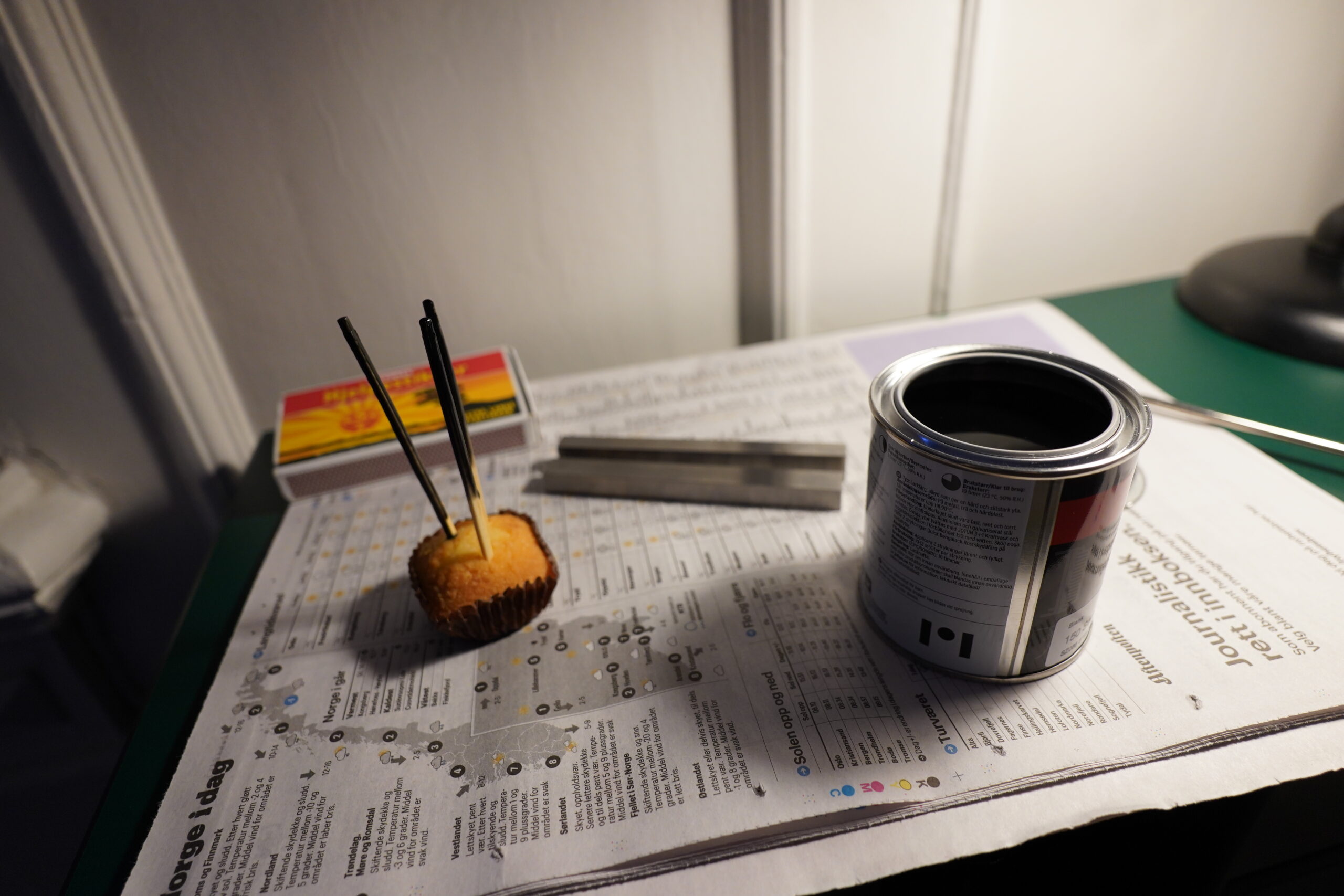

2024-05-17: Wood Stain

I was washing the balcony furniture the other day and somebody said “why don’t you use some wood stain while you’re at it?” Which I’ve never done before, so of course I had to try.

And… man, this foldy table has a lot of surfaces…

I’ve never done wood stain before, and it’s a lot more fun than painting, because you can be really sloppy when applying? And if you put it down on a newspaper wet, it doesn’t stick to the newspaper? Which is nice.

But man, the solvents… I’m glad I did it out on the balcony. And I’m glad I had a huge box of disposable rubber gloves to use when shifting the furniture around.

*zzz*

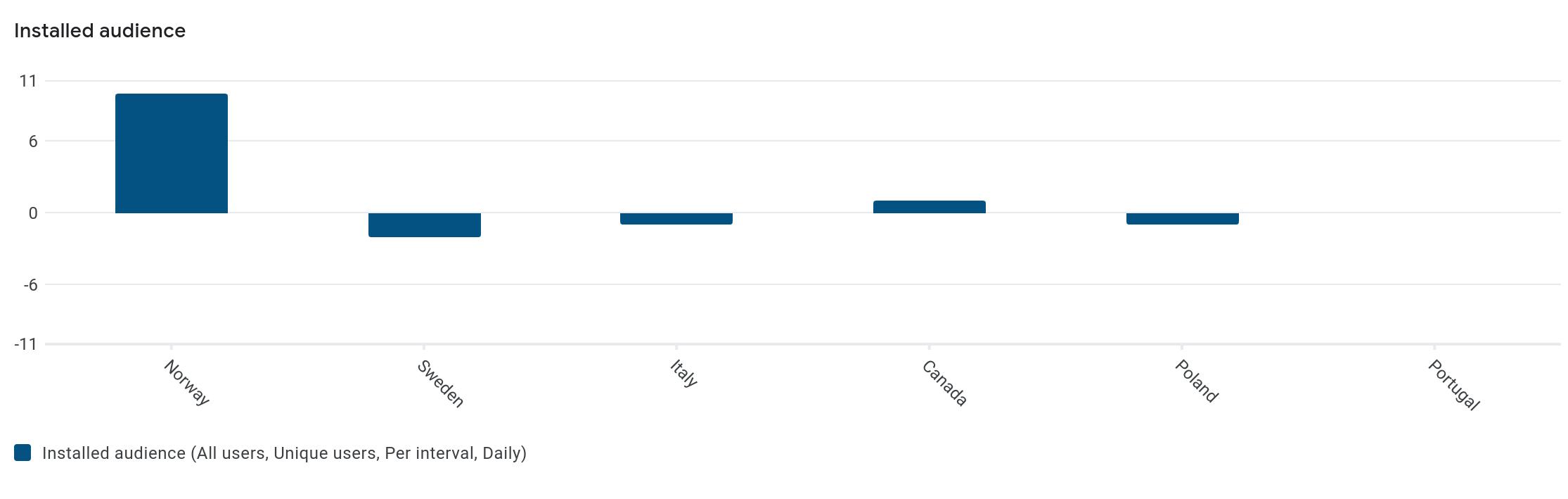

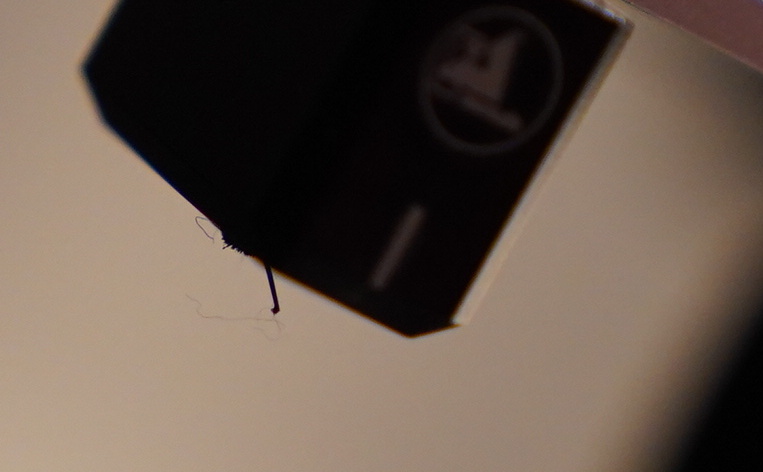

2022-01-08: Needle

Err… Hm… this is four years old… what was this about, then? Oh, right, the pointy bit on the record player needle had dropped off, somehow. It was very confusing until I realised that that’s what had happened.

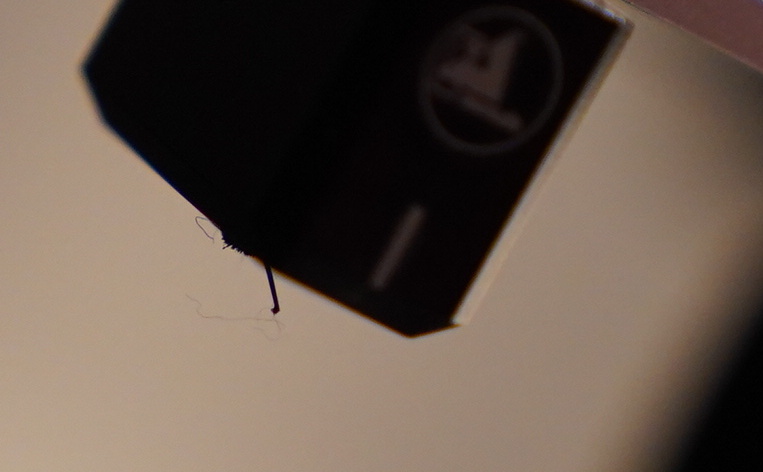

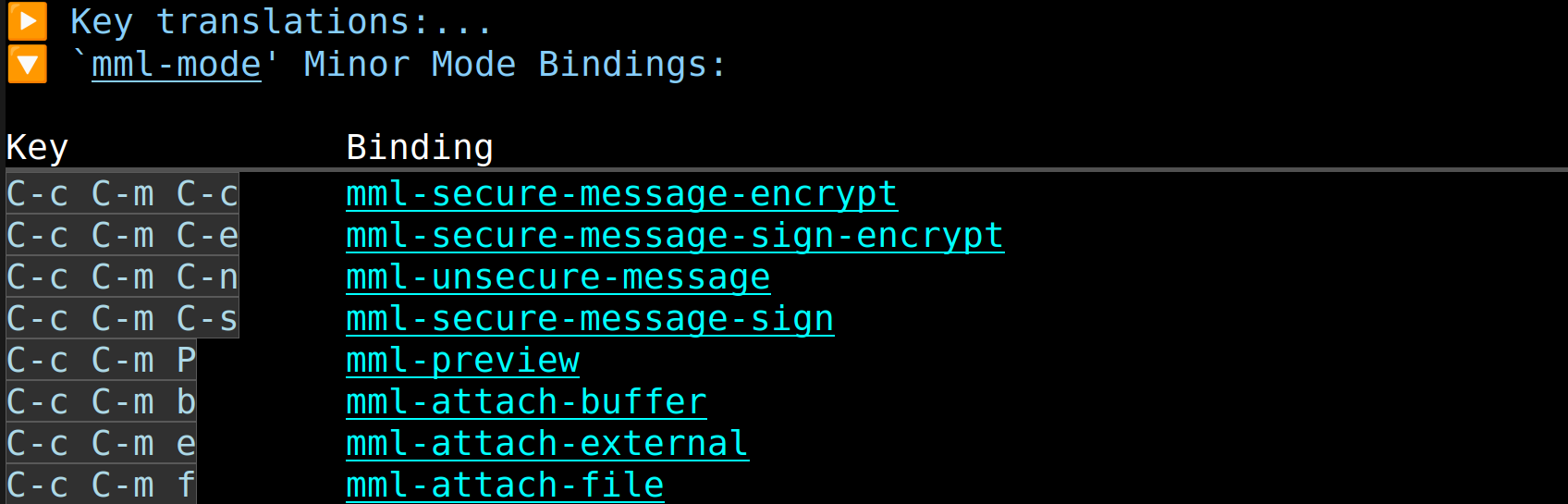

2021-11-13: Keybinding in Emacs Revisited

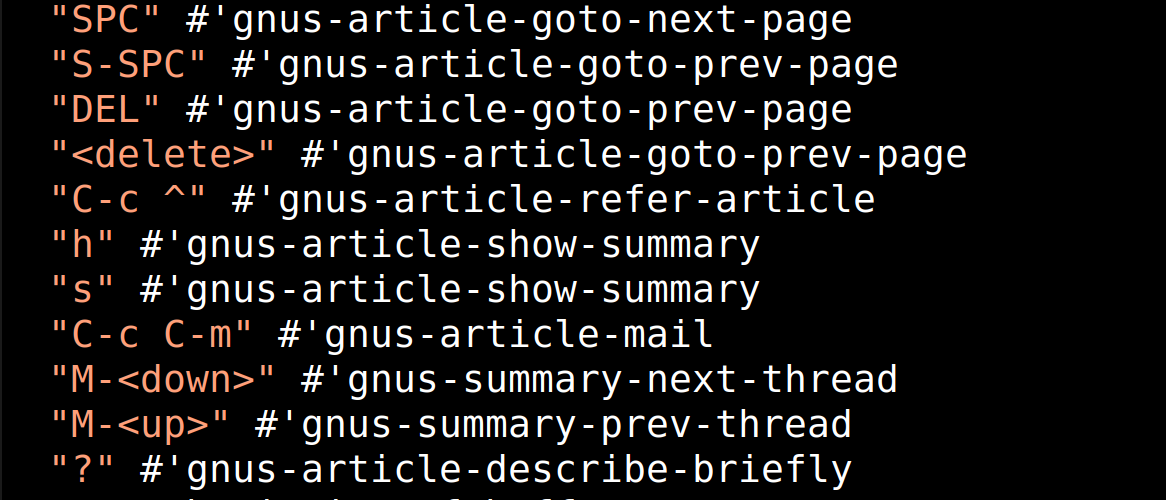

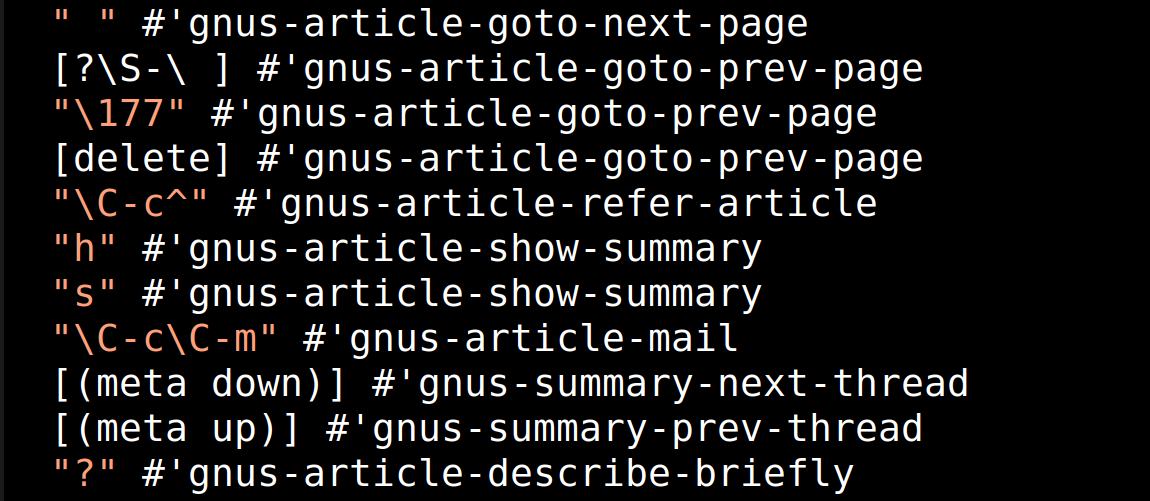

GNU Emacs is old — the 50th anniversary is coming up in a couple of years. One place that’s very noticeable is in the syntax used to describe key bindings:

It started off very simply with a string syntax that, more or less, covered the input you could get on a terminal. So “a” means that key (but is really the number 97), and then you have the control characters like “\C-c” (control and “c”, which is a part of ASCII and is really the number 3), and finally you have “\M-c”, which isn’t really ASCII at all, but is the number 99 (“c”) with a bit set to denote that the “meta” modifier is set, and is kinda-sorta the number 227.

Which means that “\177” is the DEL key. *sigh*
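If you want to check those numbers yourself, something like this can be evaluated in `*scratch*`. It’s just a small sanity check of the encodings described above, not anything from the Emacs sources.

```elisp
(list ?a                  ; 97: a plain character is its character code
      ?\C-c               ; 3: the ASCII control character
      ?\177               ; 127: octal 177, the DEL character
      (logior ?c #x80)    ; 227: "c" (99) with the 2**7 bit set
      (aref "\M-c" 0))    ; 227: what the string syntax stores for meta
;; => (97 3 127 227 227)
```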

So that’s the one syntax. It didn’t fit everything, so there was a second syntax of the form [?\S-\ ], which means “shift space”, and is really a vector with one or more numbers inside.

Then window systems happened, and you got events like the down arrow key, so Emacs grew the syntax [down] to designate those, and you couldn’t use strings for those events at all. Meanwhile, the XEmacs people thought it was silly to have two different syntaxes for this, so they introduced One New Syntax to Conquer Them All, [(meta c)], so now there were four syntaxes.

The Emacs people thought this was all a bit silly, so the ‘kbd’ function was introduced as a helper function, so then you had people saying

(local-set-key (kbd "C-c") 'foo) all over the place, and you had, in effect, a fifth syntax.

Which brings us to today, where you commonly find code in Emacs of the form above: a mixture of all five styles isn’t uncommon in keymaps that have evolved over the years, because everybody uses different syntaxes here.
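To make the five styles concrete, here’s a made-up keymap that uses one of each. The map name `my-demo-map` and the choice of keys are purely illustrative, and every key is bound to `ignore` just so there’s something to bind to; `define-key` accepts all of these forms.

```elisp
(defvar my-demo-map (make-sparse-keymap)
  "Hypothetical keymap mixing all five key syntaxes.")

;; 1: a string of (control) characters.
(define-key my-demo-map "\C-c\C-a" #'ignore)
;; 2: a vector of characters, here shift-space.
(define-key my-demo-map [?\S-\ ] #'ignore)
;; 3: a vector with a symbol for a function key.
(define-key my-demo-map [down] #'ignore)
;; 4: the XEmacs-style list of modifiers and key.
(define-key my-demo-map [(meta c)] #'ignore)
;; 5: the human-readable description run through `kbd'.
(define-key my-demo-map (kbd "C-c C-d") #'ignore)
```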

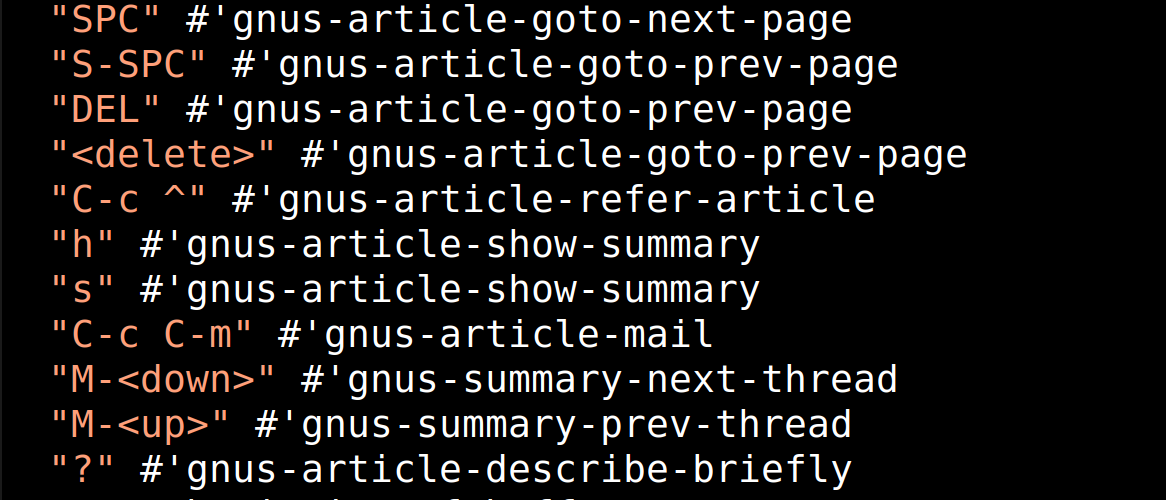

I think you can see what’s coming here — A Sixth Syntax To Conquer Them All For Real This Time.

But no. Instead we’re taking a different approach, and sticking with the fifth syntax, but making that the only syntax. It’s used when displaying keymap information, and you can now use the described syntax directly to define keys.

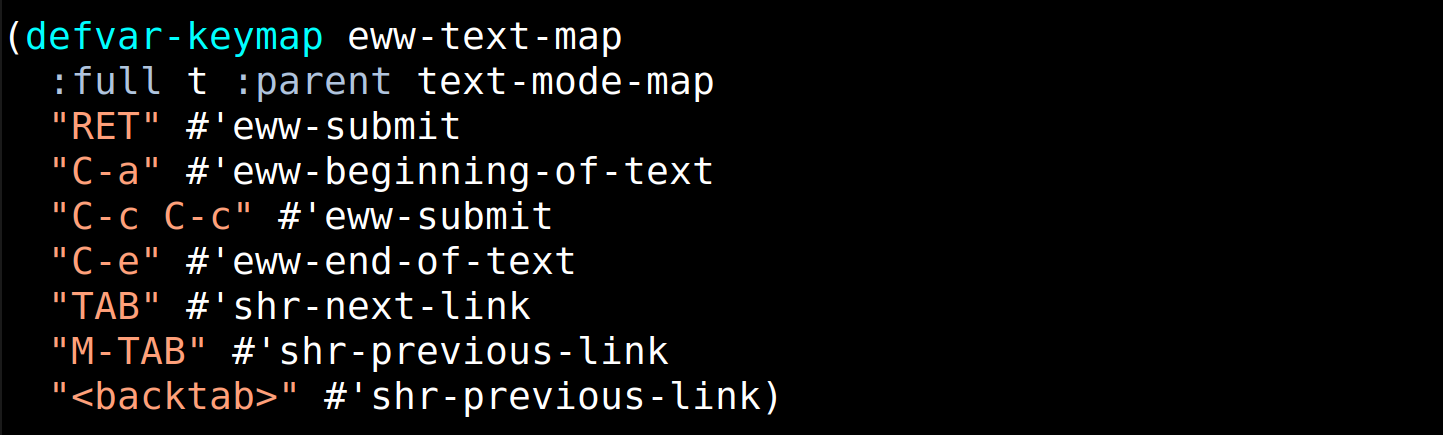

Here’s how it looks with that syntax:

To do this, we’re introducing new functions that only accept the “new” syntax, and will signal an error if you try to feed them anything else. All the old functions still exist, and while they will be phased out in the Emacs code base, we realistically can’t ever remove them, because there’s so much code out there that uses them. Perhaps in 50 years or so, though, because we hope that the new functions have such attractive ergonomics that people will be flocking to them in droves.
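As a small sketch of what that looks like in practice, assuming Emacs 29 or later: `keymap-set` and `key-valid-p` are the actual new functions, while the map name `my-new-demo-map` and the variable `my-rejection` are made up for the example.

```elisp
(defvar my-new-demo-map (make-sparse-keymap)
  "Hypothetical keymap defined with the Emacs 29 functions.")

;; The new functions take the same syntax that Emacs displays.
(keymap-set my-new-demo-map "C-c C-a" #'ignore)
(keymap-set my-new-demo-map "<down>" #'ignore)

(key-valid-p "C-c C-a")    ; => t
(key-valid-p "\C-c\C-a")   ; => nil, the old string syntax isn't accepted

;; Feeding the old syntax to `keymap-set' signals an error
;; instead of silently defining something unexpected.
(defvar my-rejection
  (condition-case err
      (keymap-set my-new-demo-map "\C-c\C-a" #'ignore)
    (error (format "Rejected: %s" (error-message-string err)))))
```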

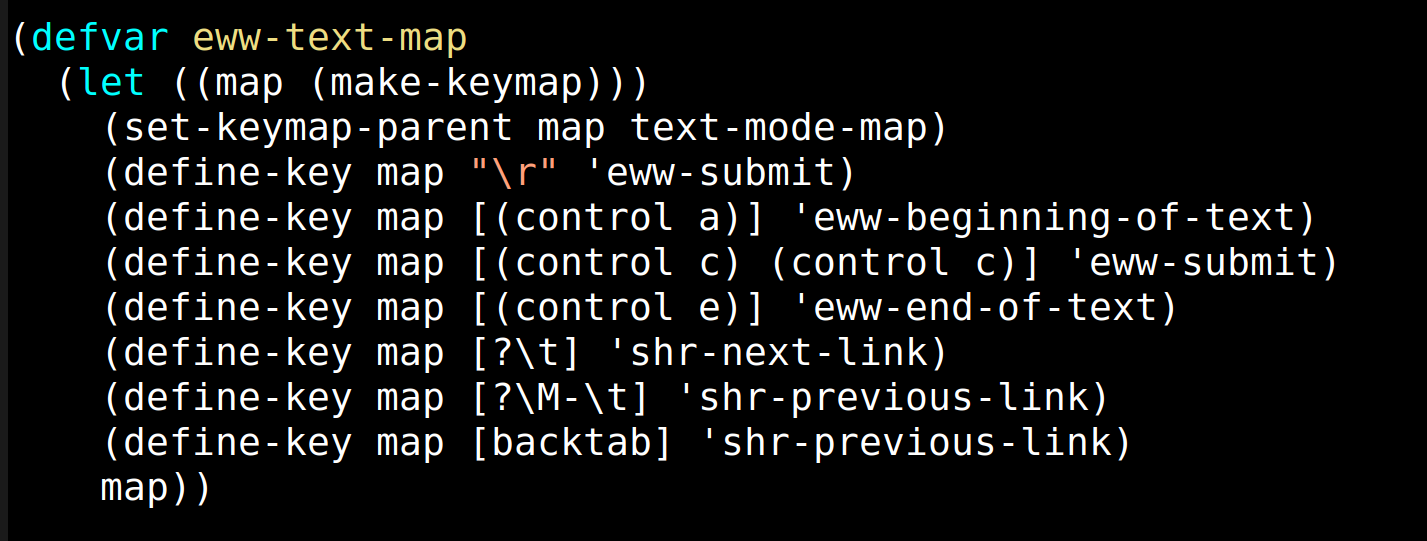

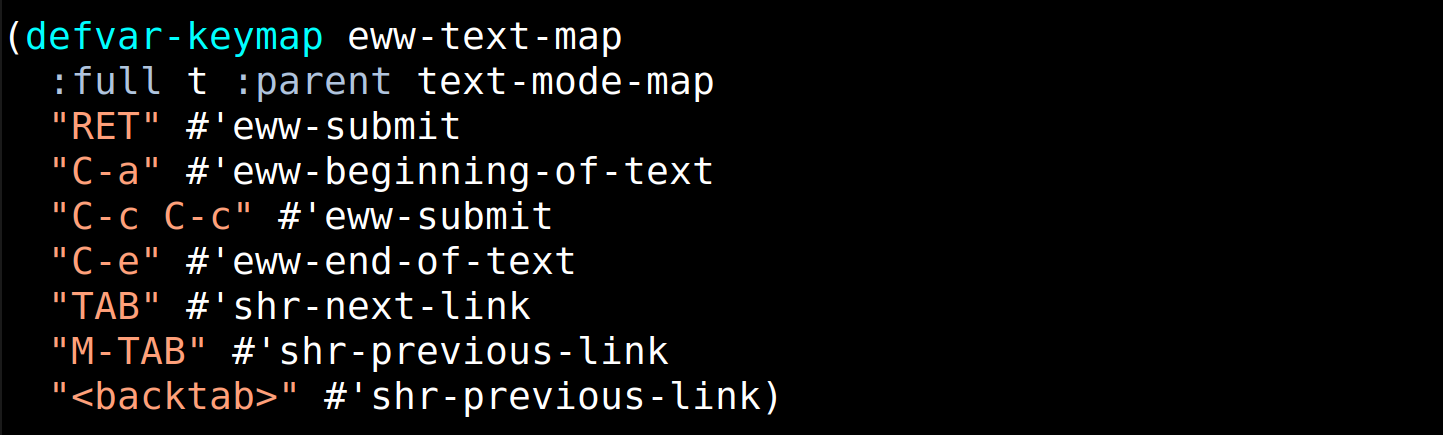

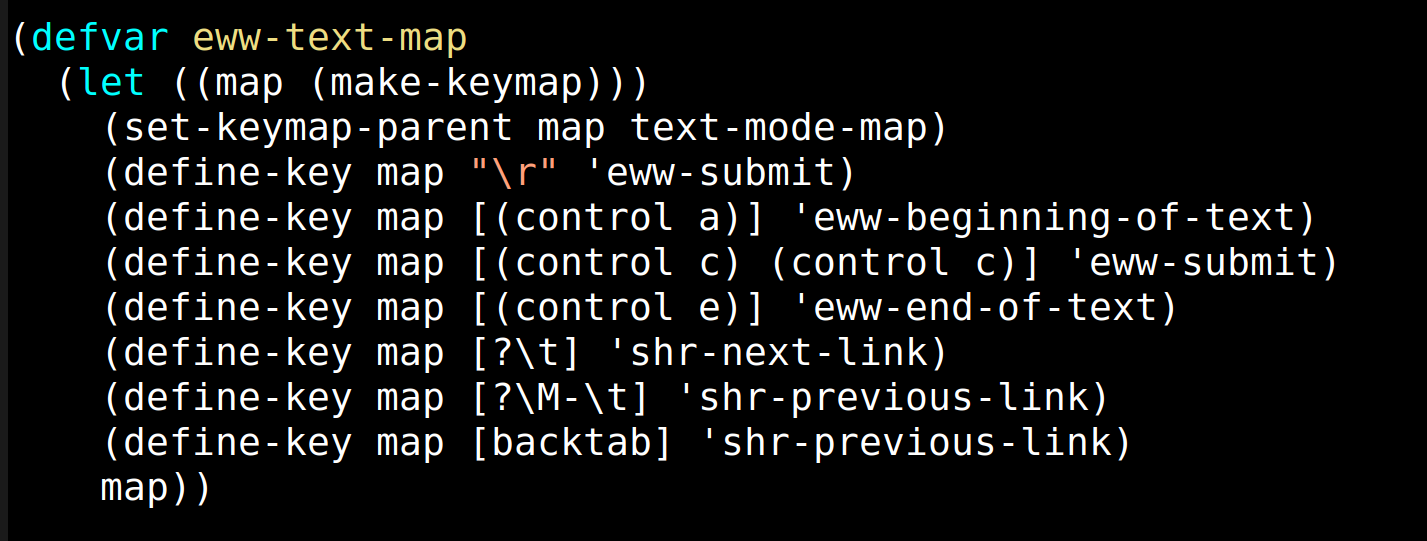

To take a complete example, here’s the definition of a little keymap in eww in Emacs 28 (and older):

Here’s how that looks in Emacs 29:

It’s a whole lot less typing! (And reading, when you’re reading the code.) In addition, it’ll actually tell you if you’re trying to define something that’s syntactically invalid — ‘define-key’ is infamously permissive; it accepts anything, but if it’s wrong, it just doesn’t work.
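To give a feel for the difference, here’s a made-up pair of keymaps (not the actual eww definitions) written in both styles. The map names and the bindings to `ignore` are placeholders; `defvar-keymap` is the Emacs 29 macro.

```elisp
;; The Emacs 28 (and older) way: build the map by hand.
(defvar my-old-style-map
  (let ((map (make-sparse-keymap)))
    (define-key map "\r" #'ignore)
    (define-key map [delete] #'ignore)
    (define-key map "\C-c\C-c" #'ignore)
    map)
  "Hypothetical keymap in the old style.")

;; The Emacs 29 way: key descriptions and definitions, nothing else.
(defvar-keymap my-new-style-map
  :doc "Hypothetical keymap in the new style."
  "RET"      #'ignore
  "<delete>" #'ignore
  "C-c C-c"  #'ignore)
```

Both forms end up with the same bindings; the second one just skips the `let`, the `make-sparse-keymap` and the repeated `define-key` calls.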

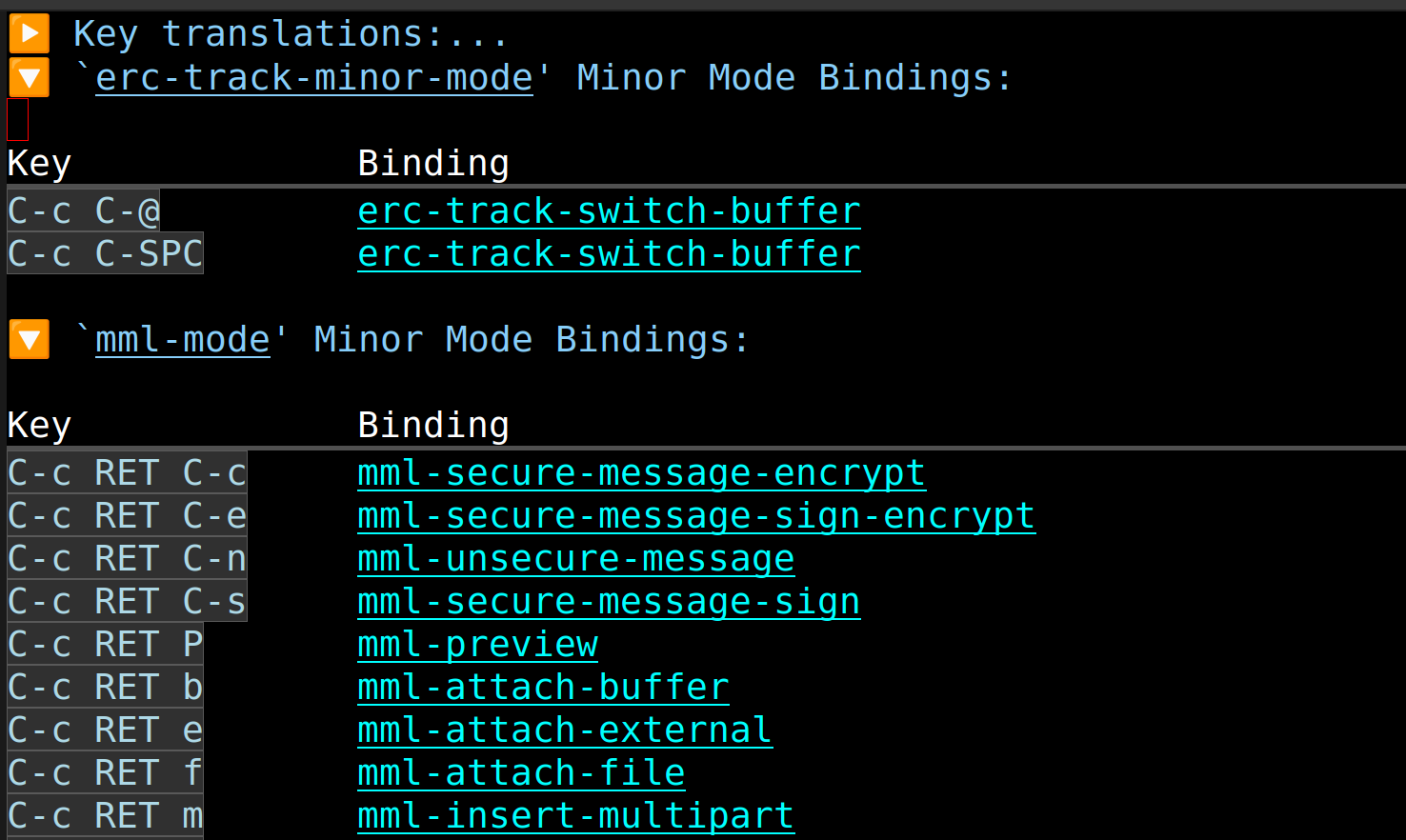

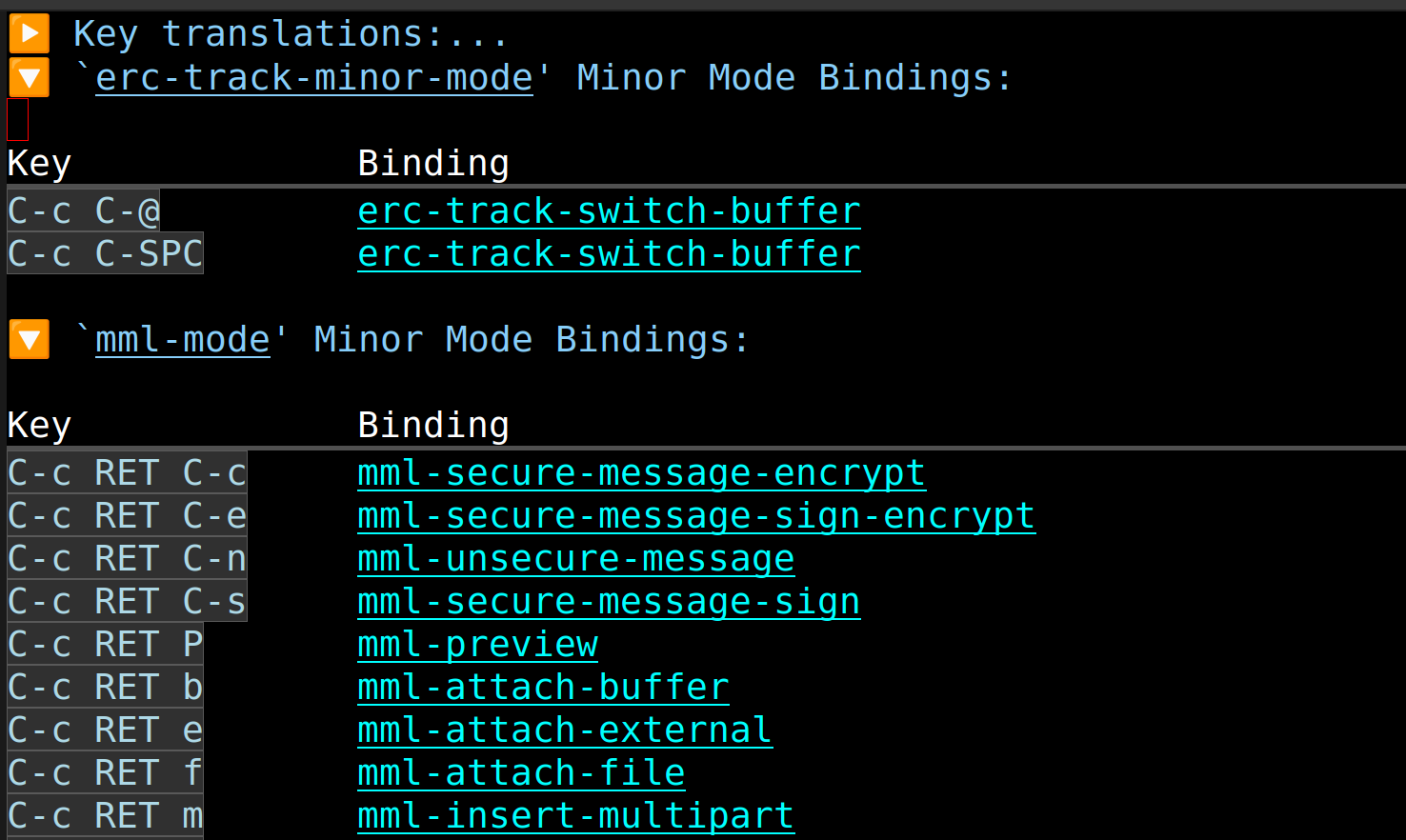

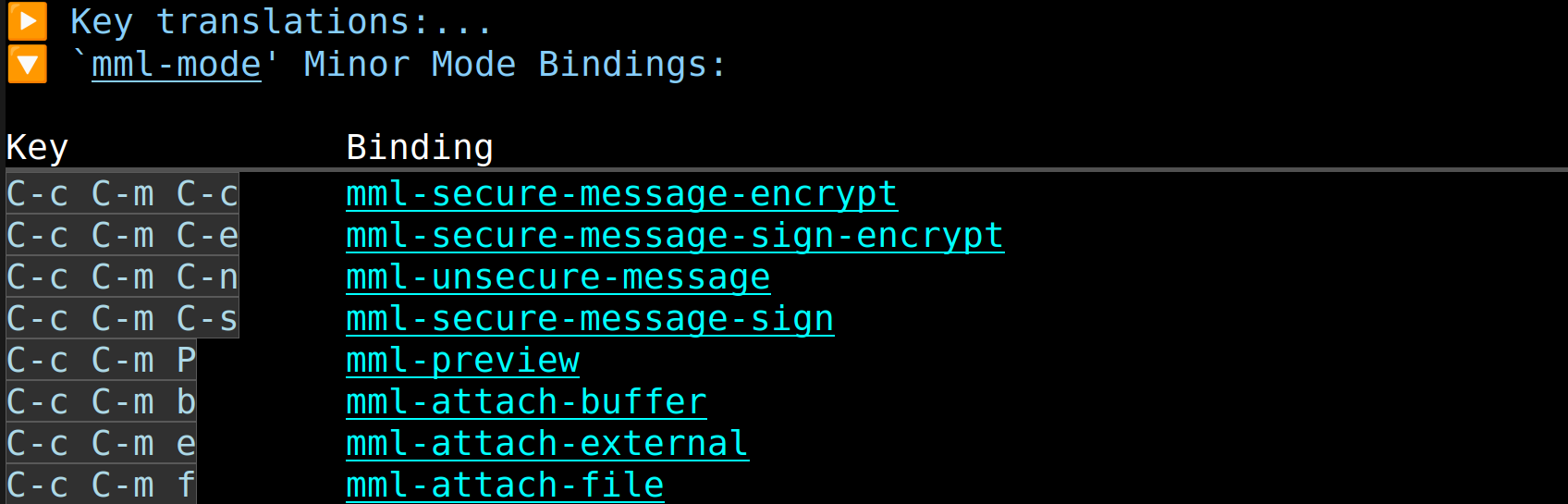

And remember what I said up there about key descriptions? It’s now round-trip proof. C-m (control m) is internally represented the same as RET, so if you defined a key with a mnemonic of “m”, ‘describe-bindings’ would spit out RET at you:

The intention here was “m for mml”, not RET. (And C-c C-m C-c is a lot easier to type than C-c RET C-c.)
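The underlying ambiguity is easy to see from `*scratch*`. This is only a demonstration of why the old description came out as RET, using the standard `key-description` and `kbd` functions:

```elisp
;; Control-m and carriage return are the same character, number 13.
(= ?\C-m ?\r)                            ; => t

;; So when Emacs describes the key, it can only say RET.
(key-description "\C-m")                 ; => "RET"
(key-description (kbd "C-c C-m C-c"))    ; => "C-c RET C-c"
```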

Voilá! Prestó! And other French words!

This “fixed” output is only available using the new key definition functions, so perhaps that’s another incentive for people to switch.

Hm. I have no idea why I didn’t post that back in 2021. You’re getting some quality material here among the stuff I wisely never posted!

2021-02-12: Waves

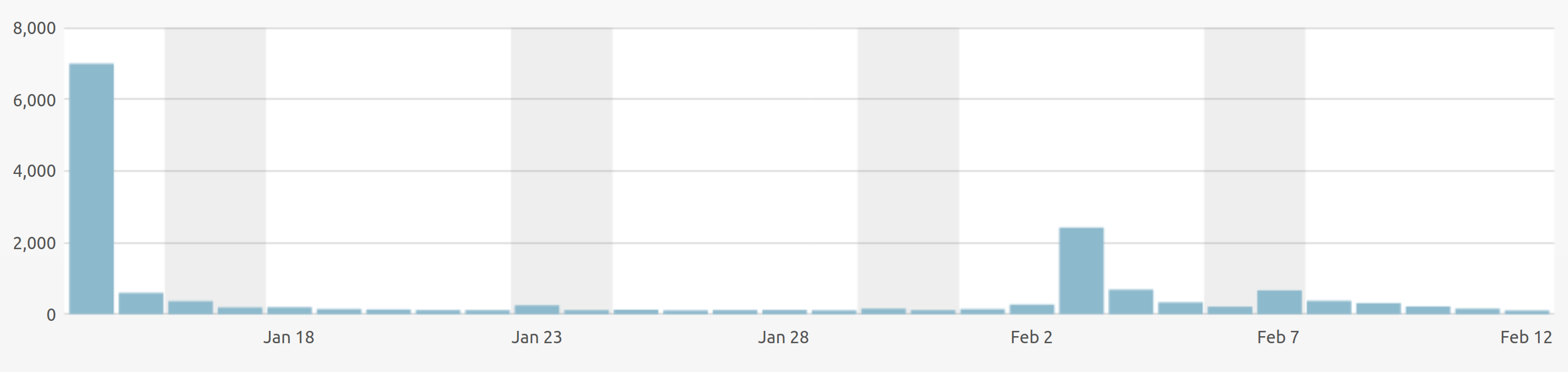

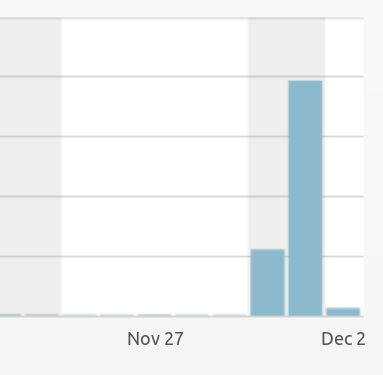

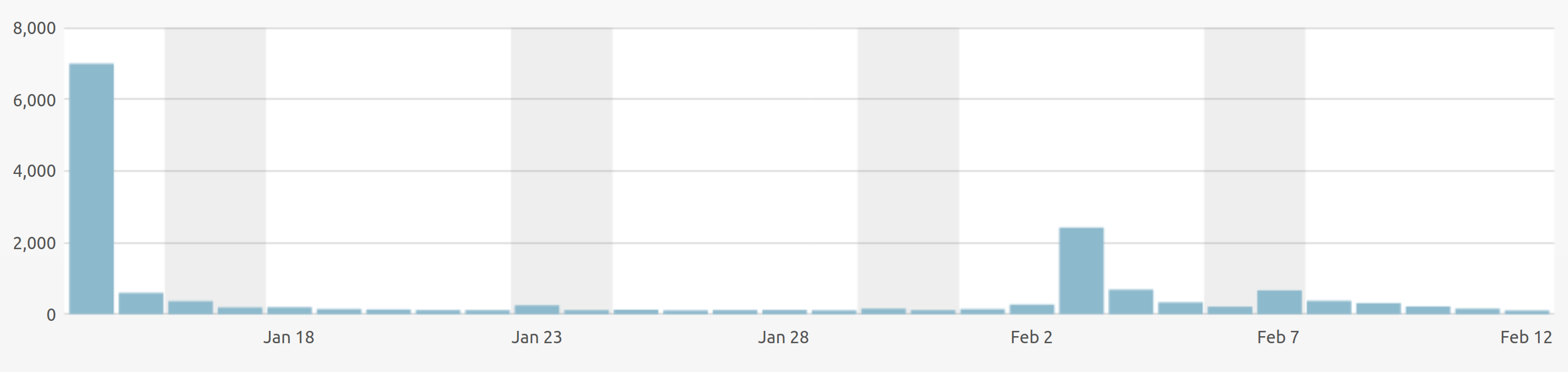

Uhm… this is the WordPress stats chart? I don’t know what this was going to be about.

2021-01-31: The Most Beautiful Hacker News Thread

If you’ve read Hacker News for a while, there are certain thread shapes that appear again and again, and I’ve just seen the most beautiful example of a really common one.

1) Somebody makes an observation referring to a really trite pop cultural thing:

2) Somebody makes a really hoary old joke about that pop cultural thing (that you’d assume everybody has seen a million times before):

3) Somebody doesn’t understand that it’s a joke, and explains to the person in 2) what 1) meant:

4) Someone explains to 3) that the factual content of what 2) said was right, though:

5) Someone incorrects 4):

6) Fact check to the rescue:

7) Finally, we get somebody who misunderstood the really, really trite pop cultural thing totally:

The only thing that stops this from being the most perfect thread ever is that some of the X)s are the same people.

Otherwise: Highest Grade Ever.

Eh… I think I decided that this was just too petty and unfunny.

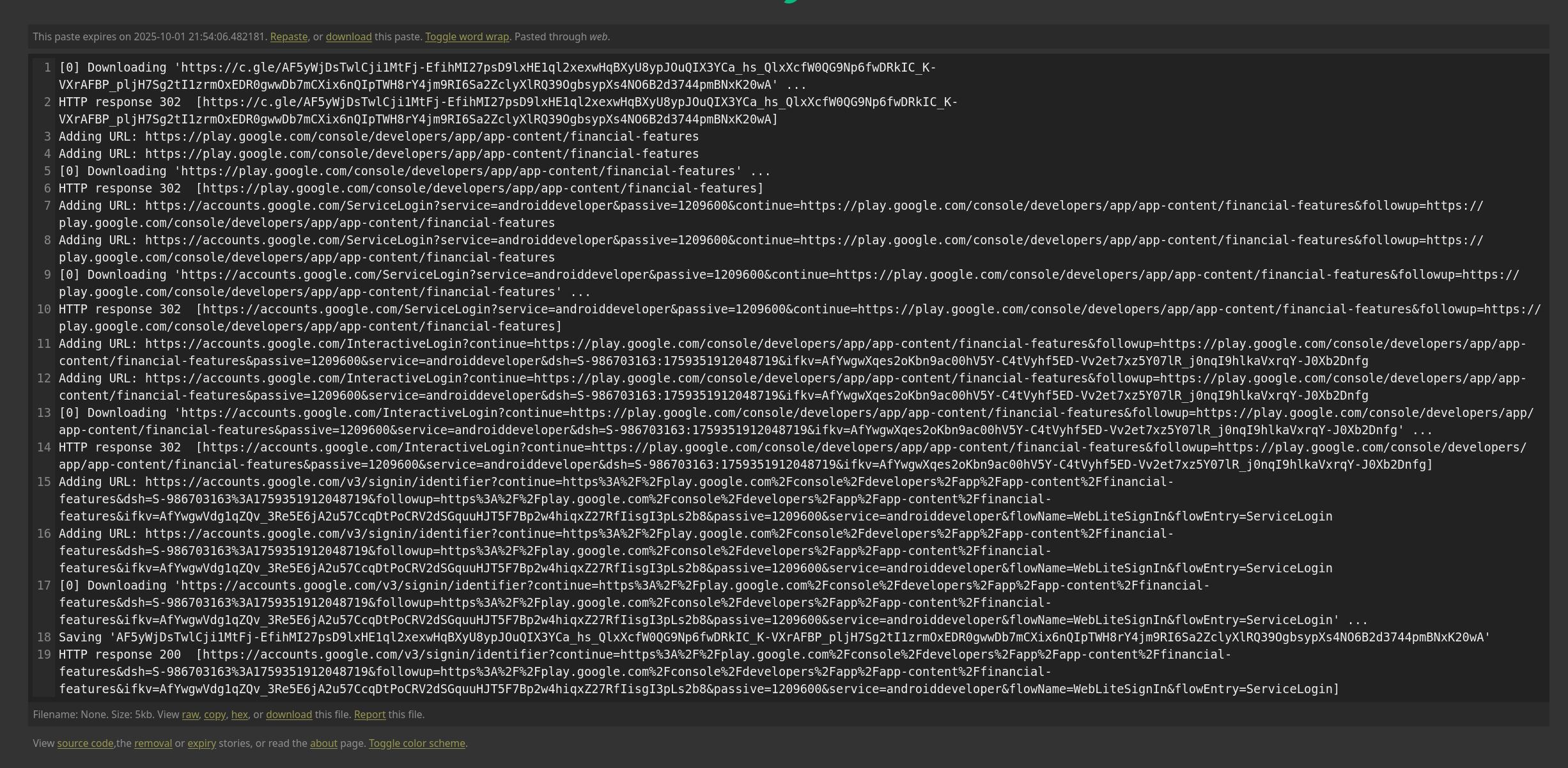

2020-02-18: Testing

Foo bar.

This is a foo.

Whoa! I must have been doing some experimentation with CSS 3D animations. I don’t know why I’d do that on the blog instead of a web page somewhere.

2020-02-02: Around the Interwebs

Perhaps I was considering doing a link blogging blog…

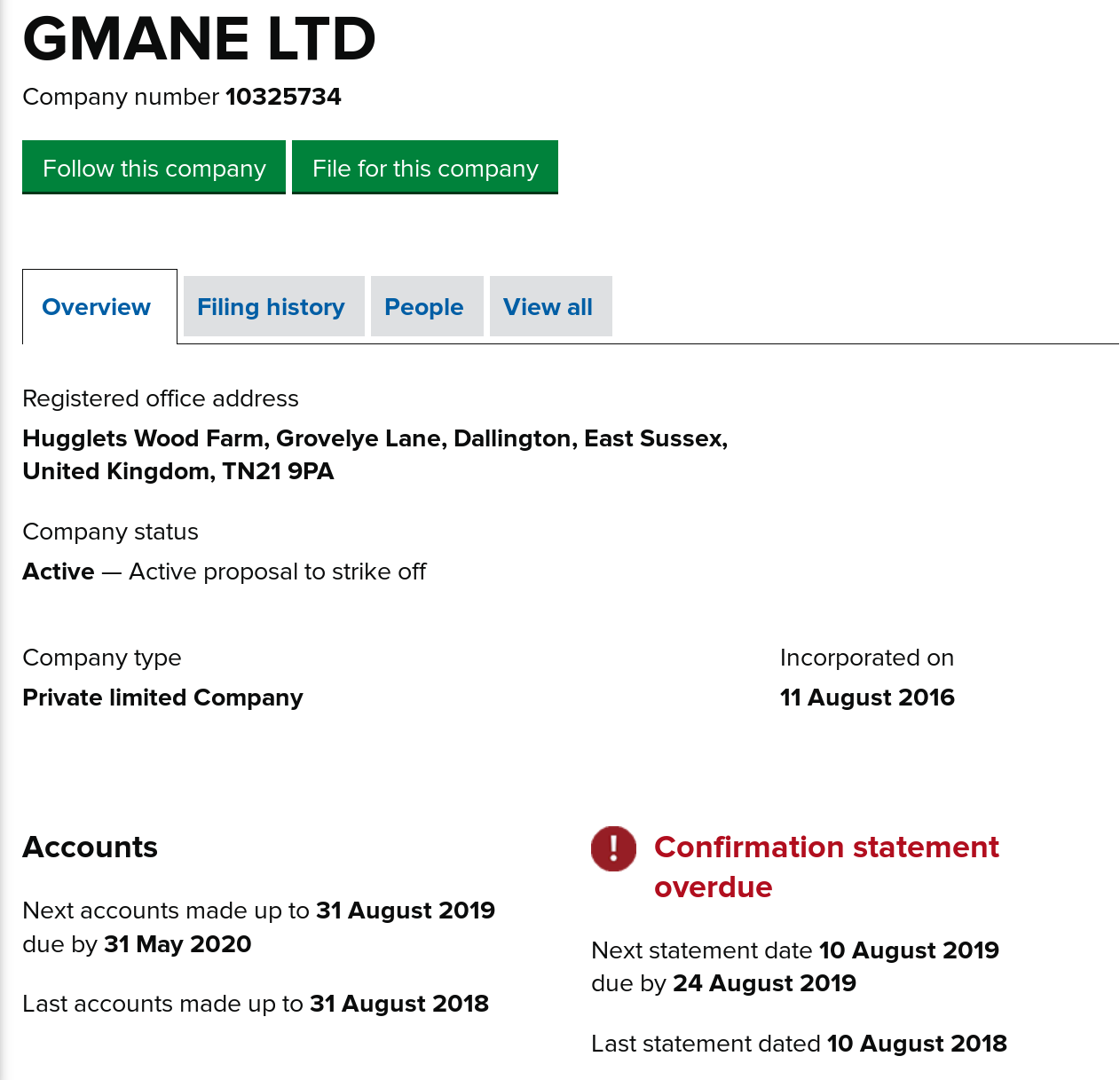

Er… this has to have had something to do with Gmane shenanigans.

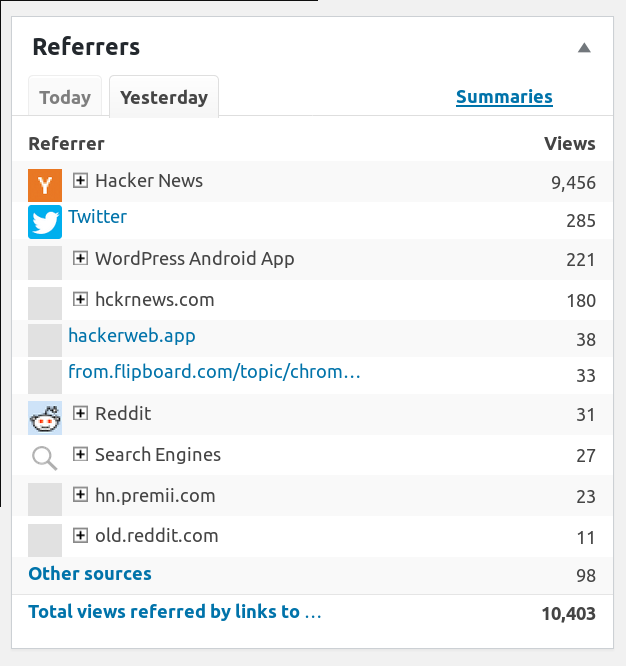

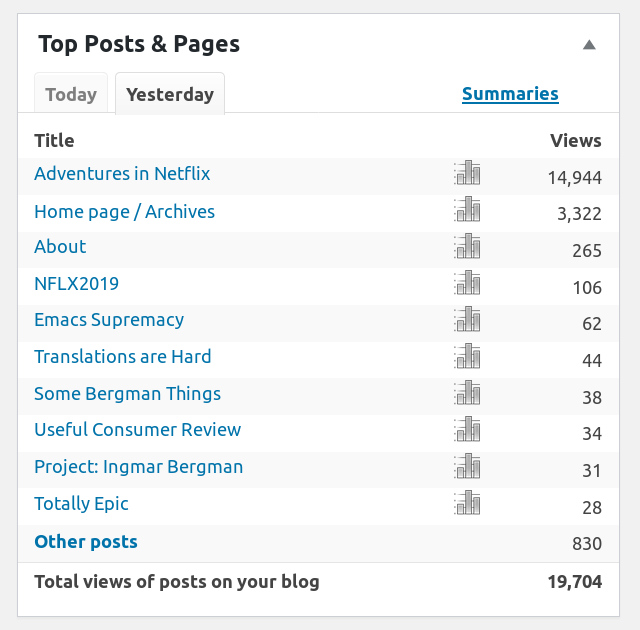

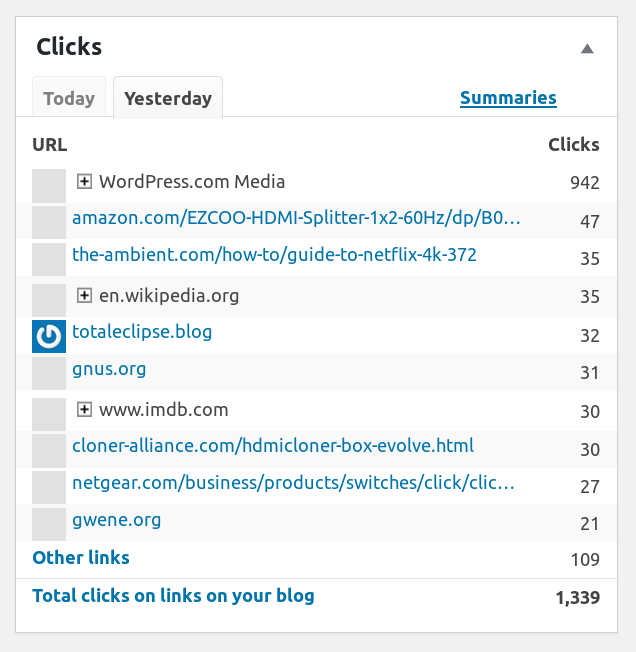

Something must have gone viral. But what’s up with the picture of that HDMI splitter?!

2016-10-30: Obey

This box was sitting in the stairwell at work for about two months.

The text says: “VERY IMPORTANT. DON’T TOUCH”.

Heh heh.

2016-09-12: Finally AirPods

It seems to be obligatory to write think pieces about the iPhone 7 finally getting rid of the 3.5mm audio jack.

Here’s my reaction: Good! I’m glad! Finally somebody is going to mass produce the components that will enable somebody else to make the earphones I want.

There have been several attempts at making completely wire free headphones and ear buds. What all these have in common, and will probably have in common with the forthcoming AirPods, is that they don’t work.

Because audio over Bluetooth is an inherently non-workable idea.

But wait! You have several Bluetooth headphones? You never have any problem with them? Except that they sometimes won’t pair with your device? And you have to switch them off and then switch them on again? And when they’ve paired and you place your phone in your left pocket wallet you get frequent drop-outs? So you put it in your right shirt pocket? And then you only get a few barely noticeable dropouts like almost never? I mean, at most a few per hour? So they work perfectly, and you see no need to ever have a different technology?

Yes. Those.

They. Don’t. Work.

Relying on having a wireless technology that streams audio to your ears continuously will never work. It’s an inherently stupid thing to do.*

Instead, the generation after the next of wireless phones/buds will have a cache and a built-in mp3 player. From the user’s point of view, it’ll work just the same as now. You press play on your phone audio player and the music starts playing, and you put the phone back in your pocket.

What happens in the background is that your headphone downloads the rest of the mp3 over whatever protocol is being used (a new low power wifi? something?), and then it downloads the next few mp3s too, just in case. Then it puts its wireless chip into very low power mode, and just plays the files it has in the cache.

The phone becomes a remote control and a file server for the semi-autonomous headphone, and if you lose the wireless connection now and then (which will happen a lot, since that’s what wireless connections do), you won’t be able to tell. (It will, of course, also have to be able to mix audio live, since you want it to say “boop-i-doop” when you get a call.)
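Just to make the idea concrete, here’s a toy model of that cache in Emacs Lisp. Everything in it is hypothetical (the `my-headphone` struct, the lookahead of two tracks, tracks being plain strings); it only illustrates “prefetch while the link is up, play from the cache when it isn’t”, and says nothing about any real protocol.

```elisp
(require 'cl-lib)
(require 'seq)

(cl-defstruct my-headphone
  (cache nil)       ; tracks already downloaded to the headphone
  (lookahead 2))    ; how many upcoming tracks to prefetch

(defun my-headphone-sync (headphone playlist position link-up)
  "If LINK-UP, cache the track at POSITION in PLAYLIST and the next few.
When the link is down, do nothing and keep whatever is cached."
  (when link-up
    (dolist (track (seq-take (nthcdr position playlist)
                             (1+ (my-headphone-lookahead headphone))))
      (cl-pushnew track (my-headphone-cache headphone) :test #'equal)))
  headphone)

(defun my-headphone-can-play-p (headphone track)
  "Return t if TRACK can be played without talking to the phone."
  (and (member track (my-headphone-cache headphone)) t))

;; Press play on track 0 while the link is up, then lose the link.
(let ((phones (make-my-headphone))
      (playlist '("one.mp3" "two.mp3" "three.mp3" "four.mp3")))
  (my-headphone-sync phones playlist 0 t)
  (my-headphone-sync phones playlist 1 nil)
  (list (my-headphone-can-play-p phones "two.mp3")
        (my-headphone-can-play-p phones "four.mp3")))
;; => (t nil)
```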

This is the window of opportunity for Samsung, Sony and Oppo: They will be able to get this tech up and working quickly. All the tech is already available; it’s just a small matter of programming. Samsung is almost there with these (apparently horrible) earbuds already, which do most of these things, but not very seamlessly, of course. Since it’s Samsung.

But then! With all this in place, it’s just a hop and a skip away from getting good totally autonomous headphones and buds up and running! There will be hundreds of Chinese companies making cheap, functional, good sounding mp3 playing headphones!

Because I don’t care about any of the phone integration stuff: I just want to be able to buy wire free headphones that I can just pick up, switch on, and listen to music on.

This is what I’ve been doing for the past 15 years, anyway, but whenever somebody puts mp3 playing headphones into production, they stay in production for two weeks and then they decide that nobody wants that stuff, anyway, and I have to buy them from ebay when I discover that they exist.

Death to Bluetooth! Long live wire free headphones!

—

*) on the other hand, people have been living with wires to their headphones for years and years and have accepted that as a thing they can live with (no matter how absurd that seems), so perhaps they’ll just accept living with Bluetooth headphones, too.

That’s the oldest unpublished draft I have, almost a decade old, and I was wrong: Getting Apple on board with Bluetooth didn’t really fix anything: Bluetooth is almost as janky as ever, and I still think they should implement a more “offline” version of Bluetooth.

*phew* That’s a lot of posts that could have been lost to humanity! Aren’t you lucky that I finally posted them!