It’s Thursday, so it must be time for some baking and an old book.

I decided on ginger nuts, and I wanted a recipe that would give me slightly soft cookies. So I went for one with syrup. Does that make sense? I don’t know? Do I look like I know what I’m doing?

There’s an extraordinary amount of butter in this… 250g butter vs. 400g flour. Does that even make sense?

Melting the butter in the syrup… Look how delicious that looks! LOOK!

Eww.

Fold fold.

Yeah, that’s an appealing colour. I took one quarter of the dough and added liquorice powder, because I wanted to experiment. But no matter how much powder I mixed in, the dough tasted like… dough… So I may be giving myself a heart attack.

Most important of all, I got to use one of the attachments to my kitchen machine that I’ve had for a while but never found a use for: A spice grinder thingie. It works well, but it’s fiddly: The finest powder seems to migrate to underneath the rubber sealing ring, no matter how hard I fasten it…

Roll roll…

Bake…

Er… flattening…

Growing!

Totally flat!

Well, that was a bust. Not only did they flatten out way too much, but I burned them. I tasted a couple and they were… not very good. To the trash can.

I should perhaps add more flour? I don’t know? But the dough is already super-hard, so more flour doesn’t seem likely to help, so…

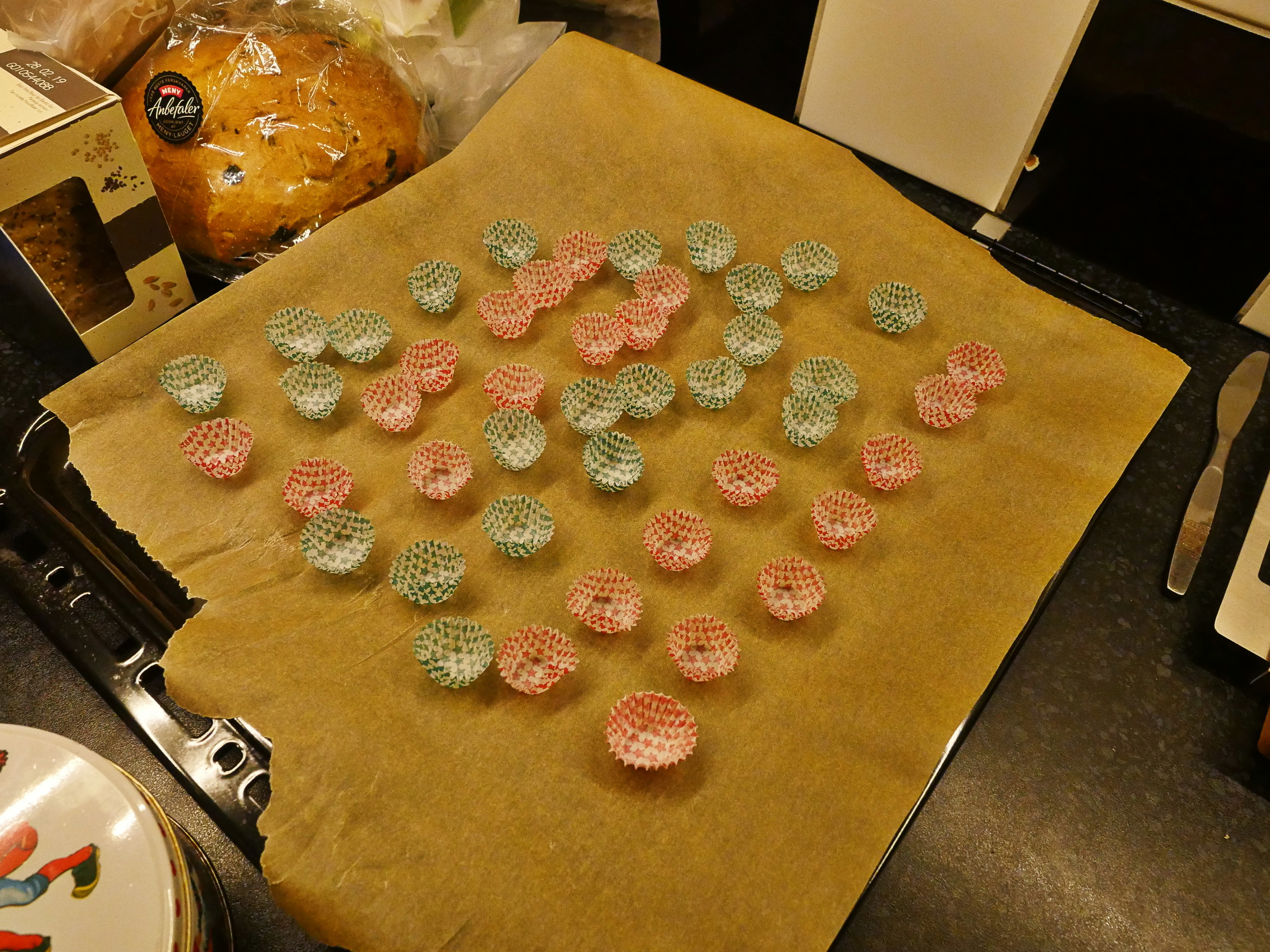

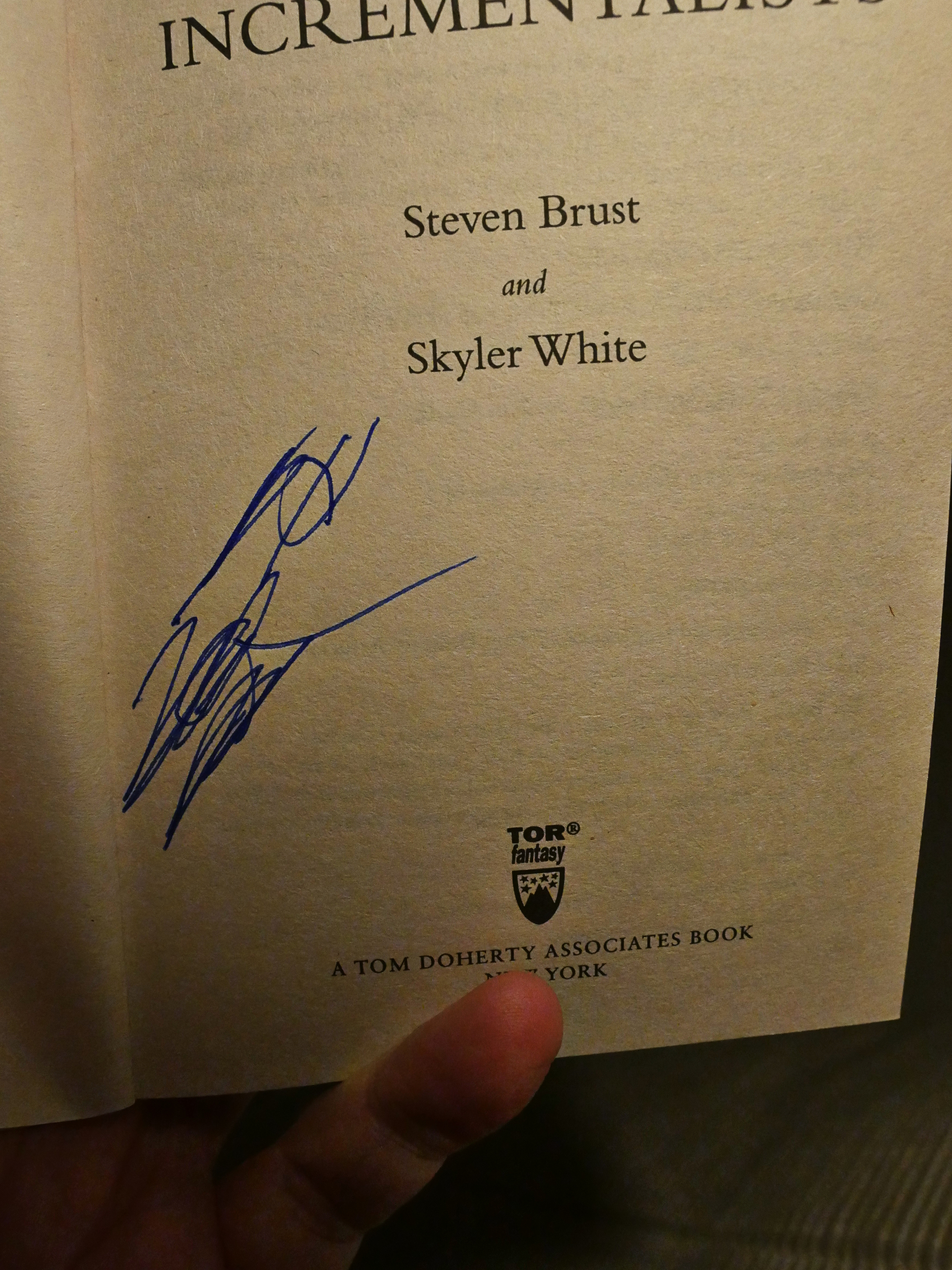

Paper cups!

Now then!

Uhm…

Mushrooms!

And… I undercooked them.

Next try!

Ok then!

And… they turn out to just not be very good. If I bake them X amount of time, they taste like dough, and if I bake them X+3 seconds, they get hard and greasy at the same time.

And the liquorice batch weren’t much better. I’d rate them… almost edible?

That’s an awful recipe. Or I did something really really wrong.

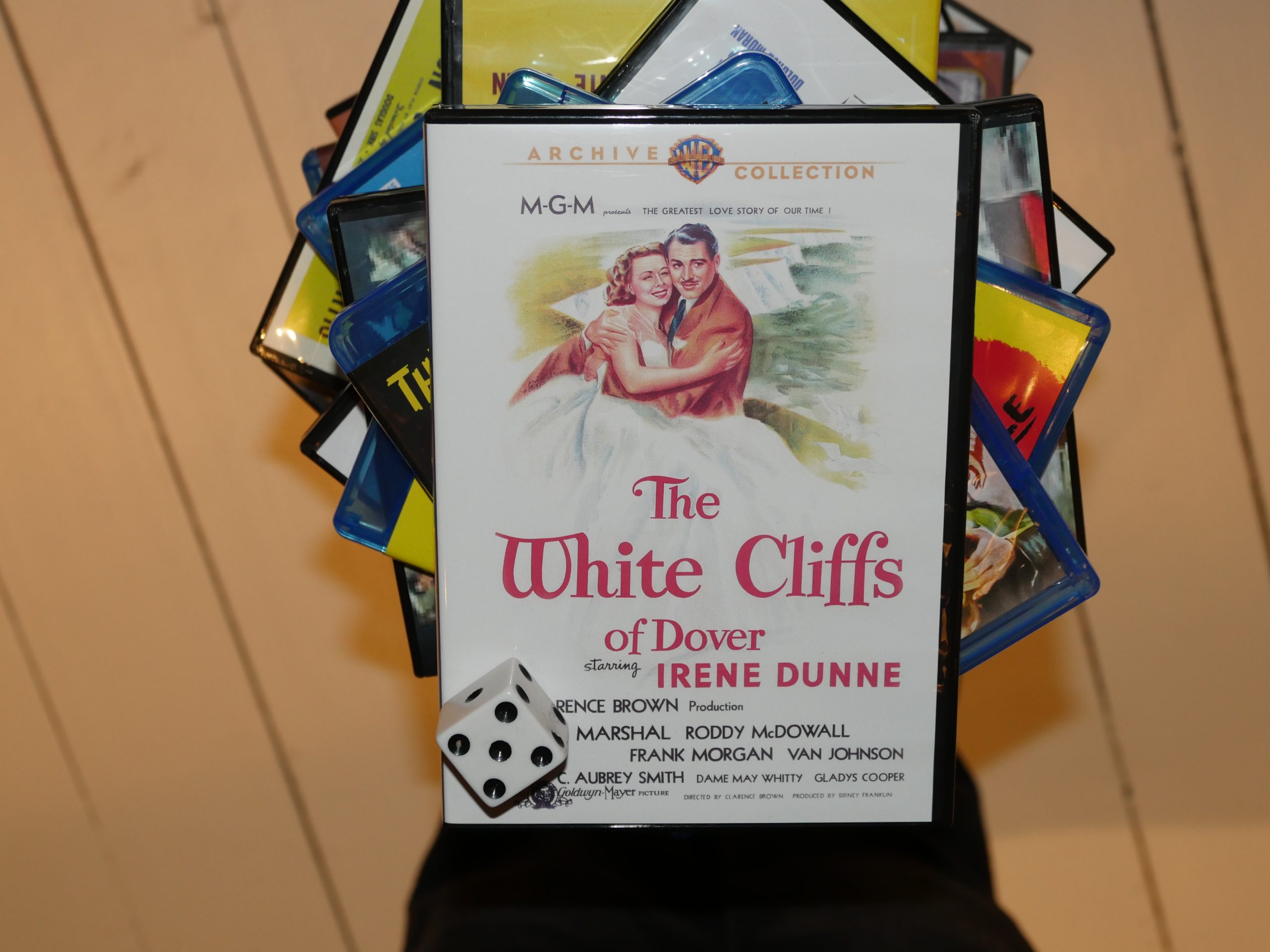

I hope the book’s better!

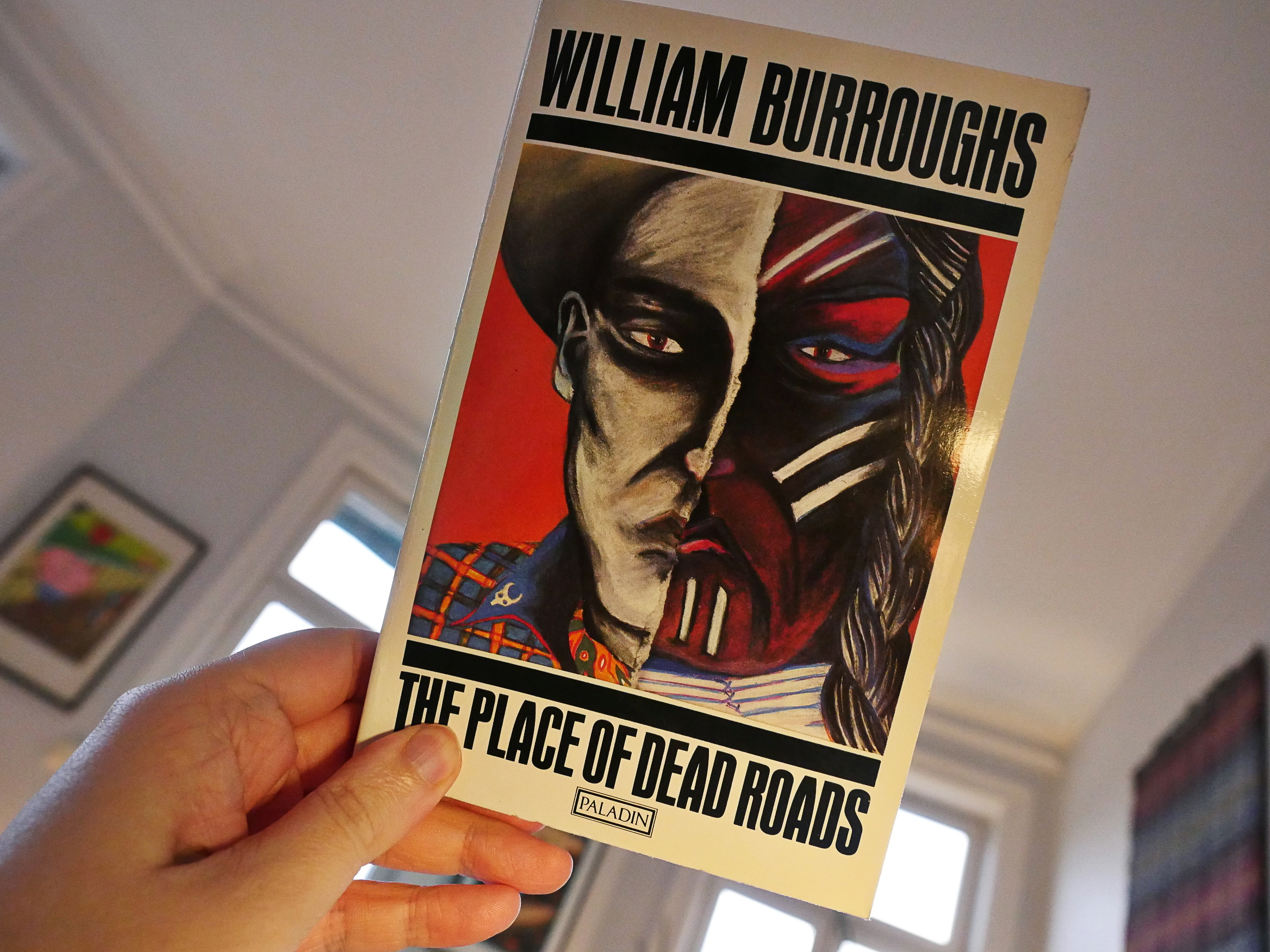

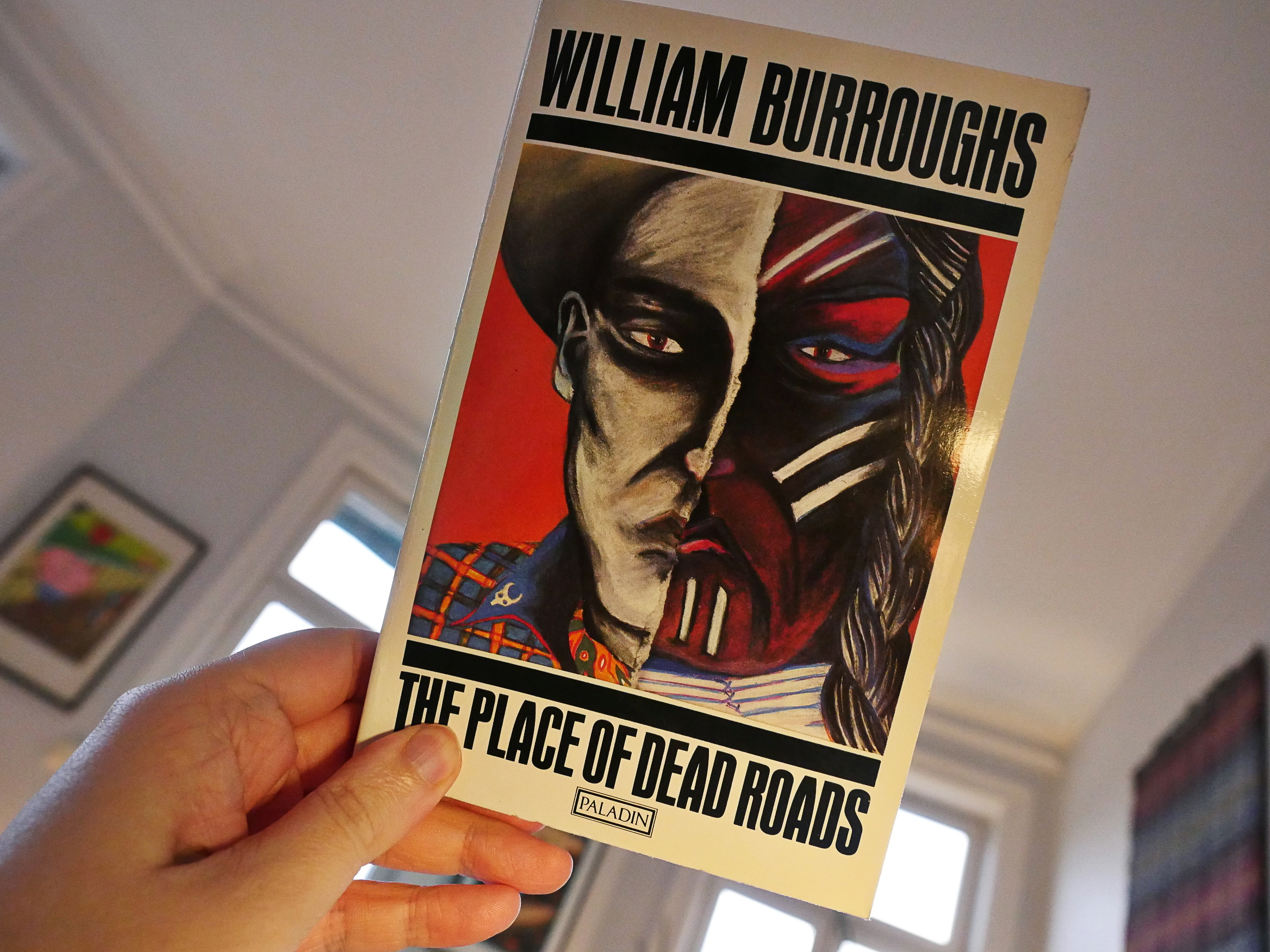

It’s The Place of Dead Roads by William Burroughs.

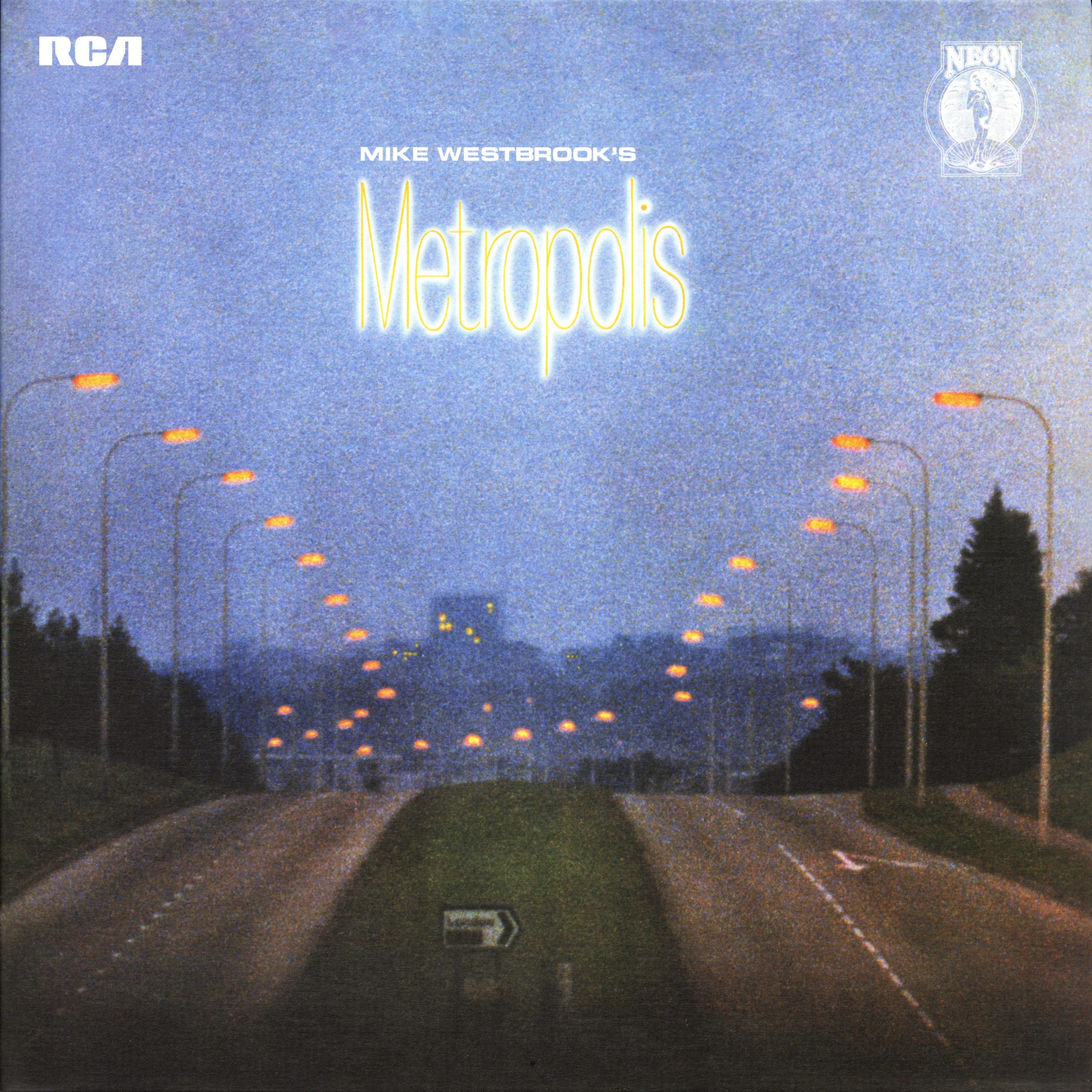

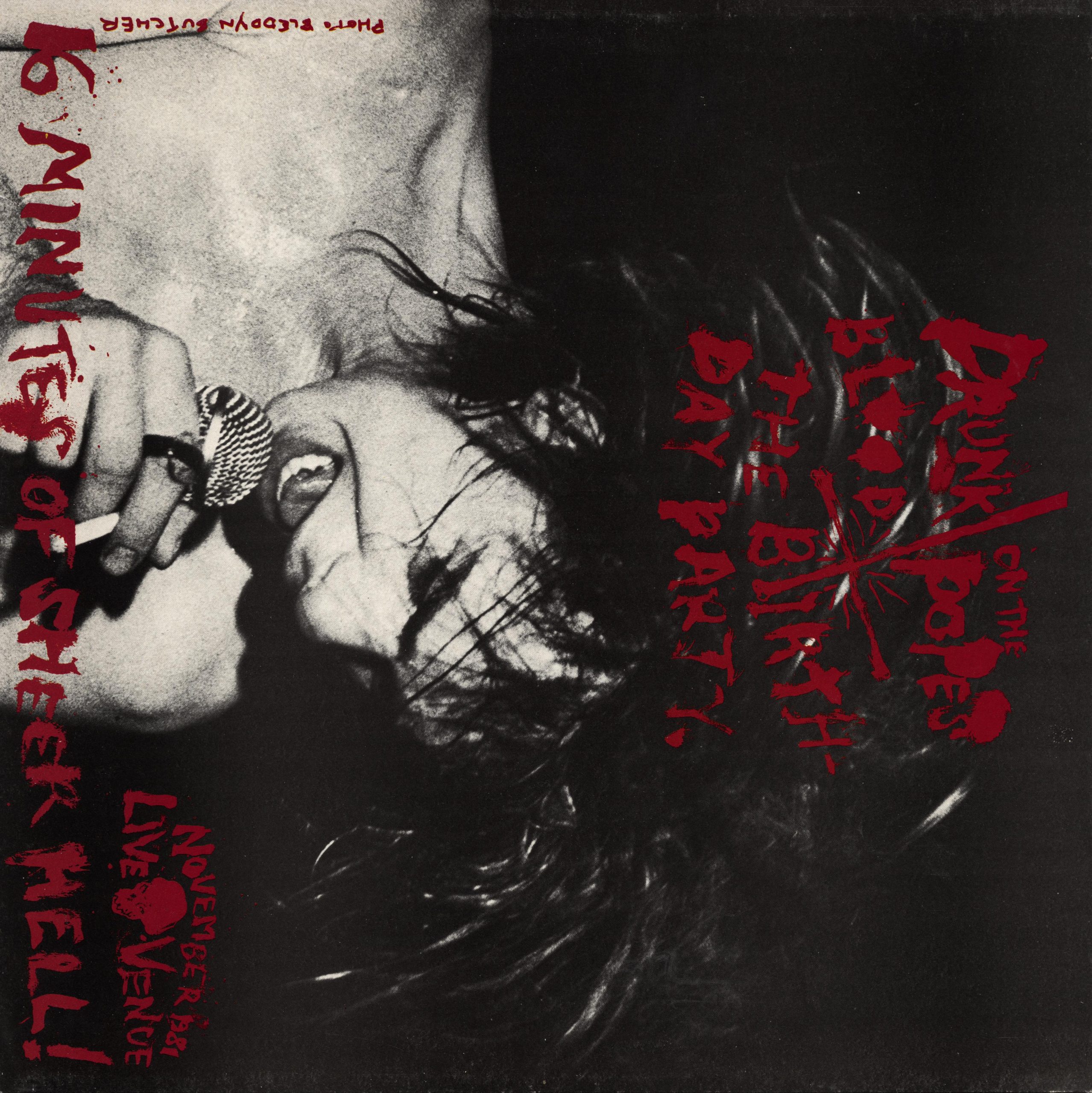

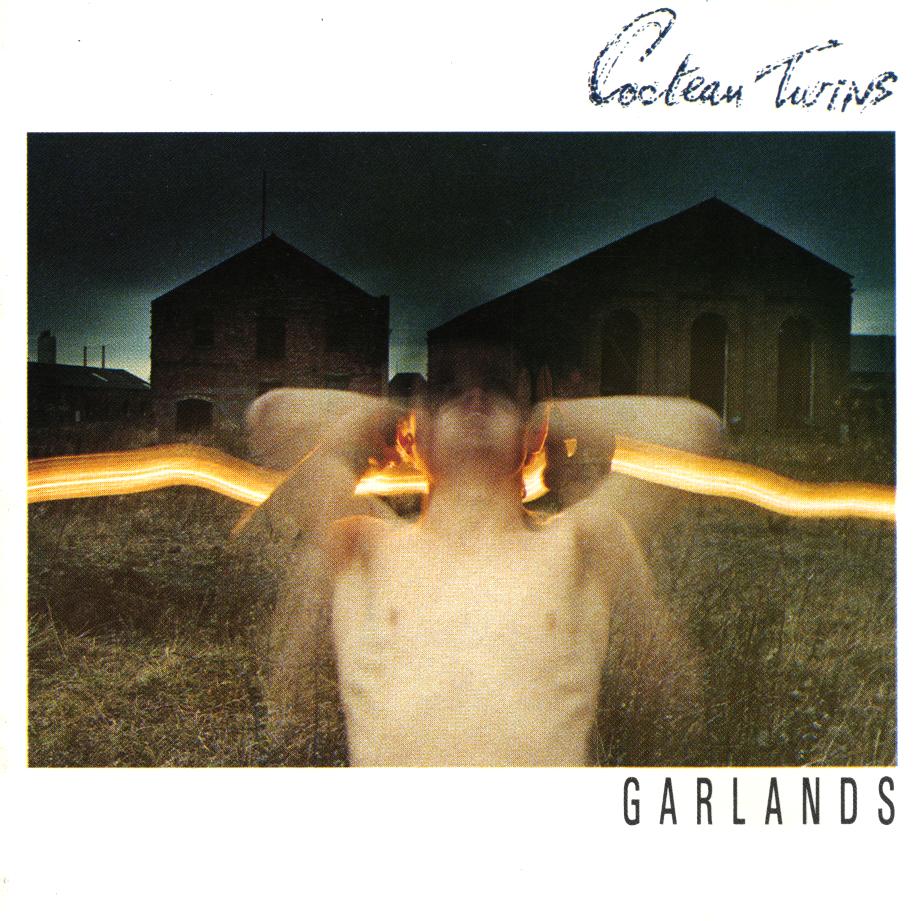

I remember when I bought this: It was one of my first trips to London, in 1993. I went there with a friend to see a week-long series of concerts called The Thirteen Year Itch. It was a showcase for the British record label 4AD, and I was all agoggle.

I was in my early 20s and made my way to what felt like all the record shops and bookstores in London, and had a suitcase filled with goodies when I left. I remember… Sister Ray on Berwick Street? And several other record shops around that area. Sister Ray was mind-boggling. I remember buying a bunch of Angela Carter books at… Blue Moon Books?

And then I bought a bunch of inter-leck-tuals books at Foyles. Just the size of that place intimidated me. I remember getting… I think Thomas Pynchon’s Gravity’s Rainbow there at the same time? And a couple of Burroughs books because I had read Naked Lunch.

But I never read this book, because… I was kinda over Burroughs already, and only bought it because it was something to buy. It’s not that I didn’t intend to read it, but you know.

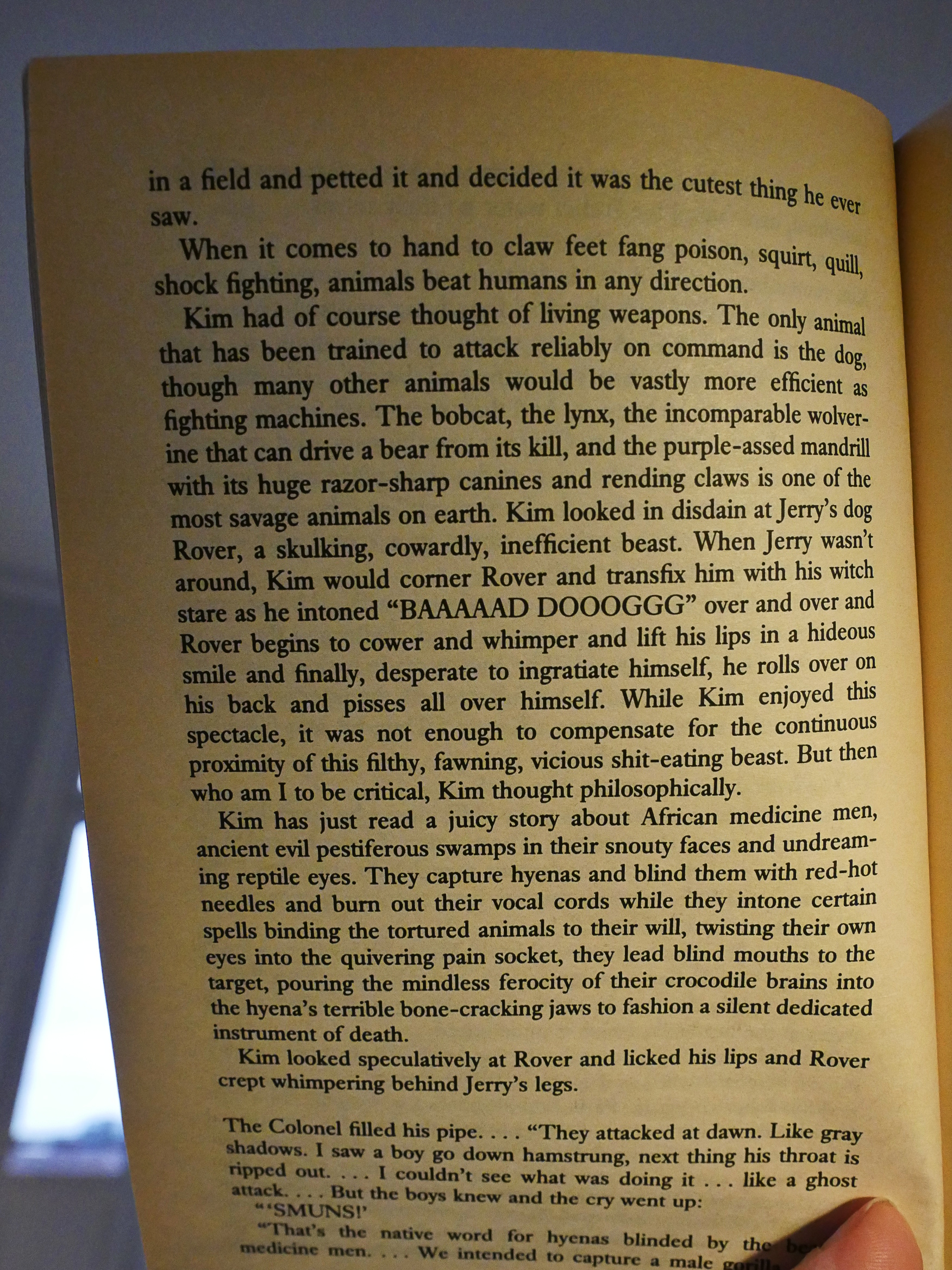

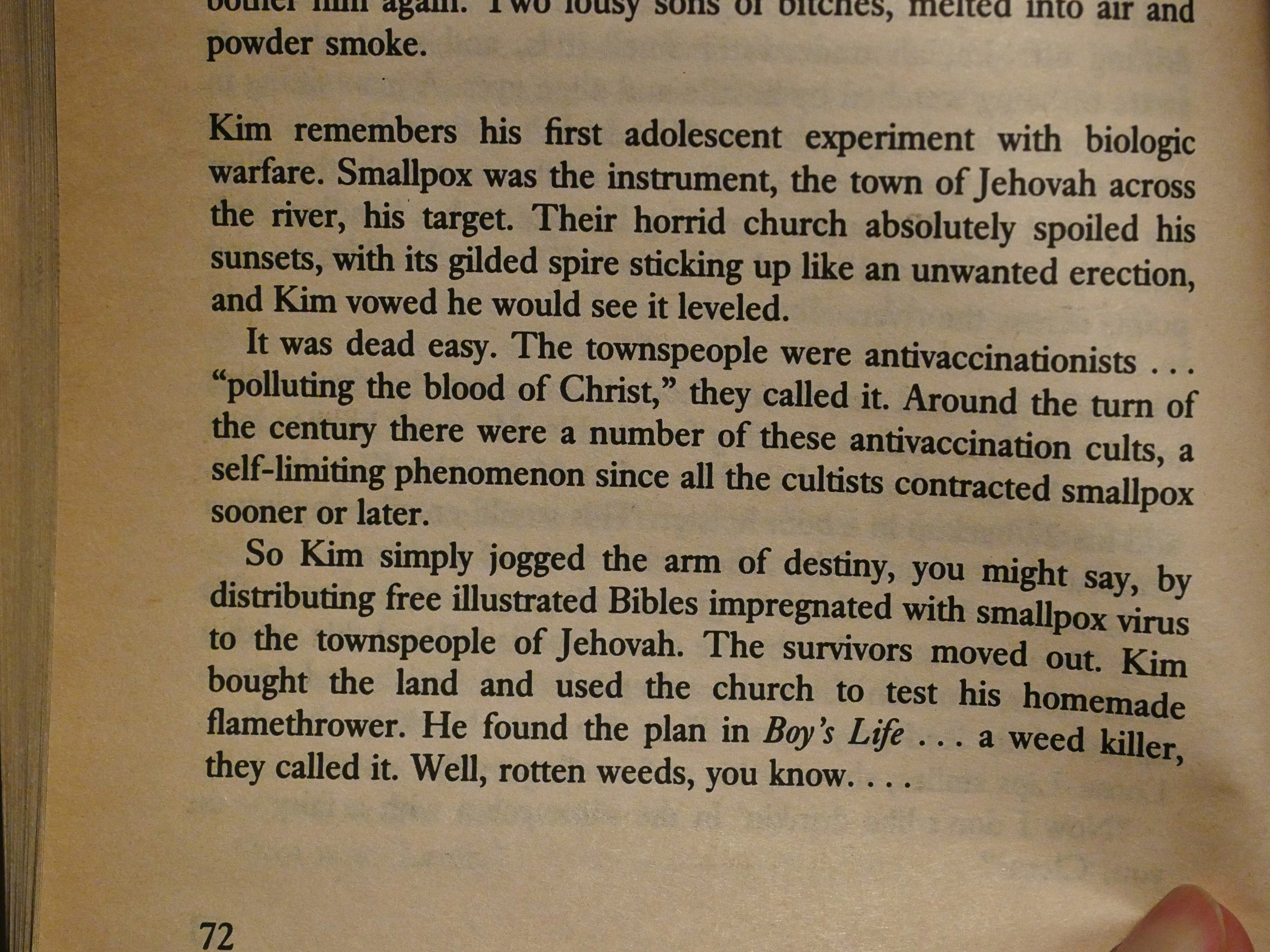

It’s not quite what I expected slash dreaded. This is a book from 1983, and is much more subdued than Burroughs’ well-known 50s/60s work. It’s a fairly straightforward narrative novel about a gay Western gunslinger, and has all of Burroughs’ tics. It’s an entertaining read.

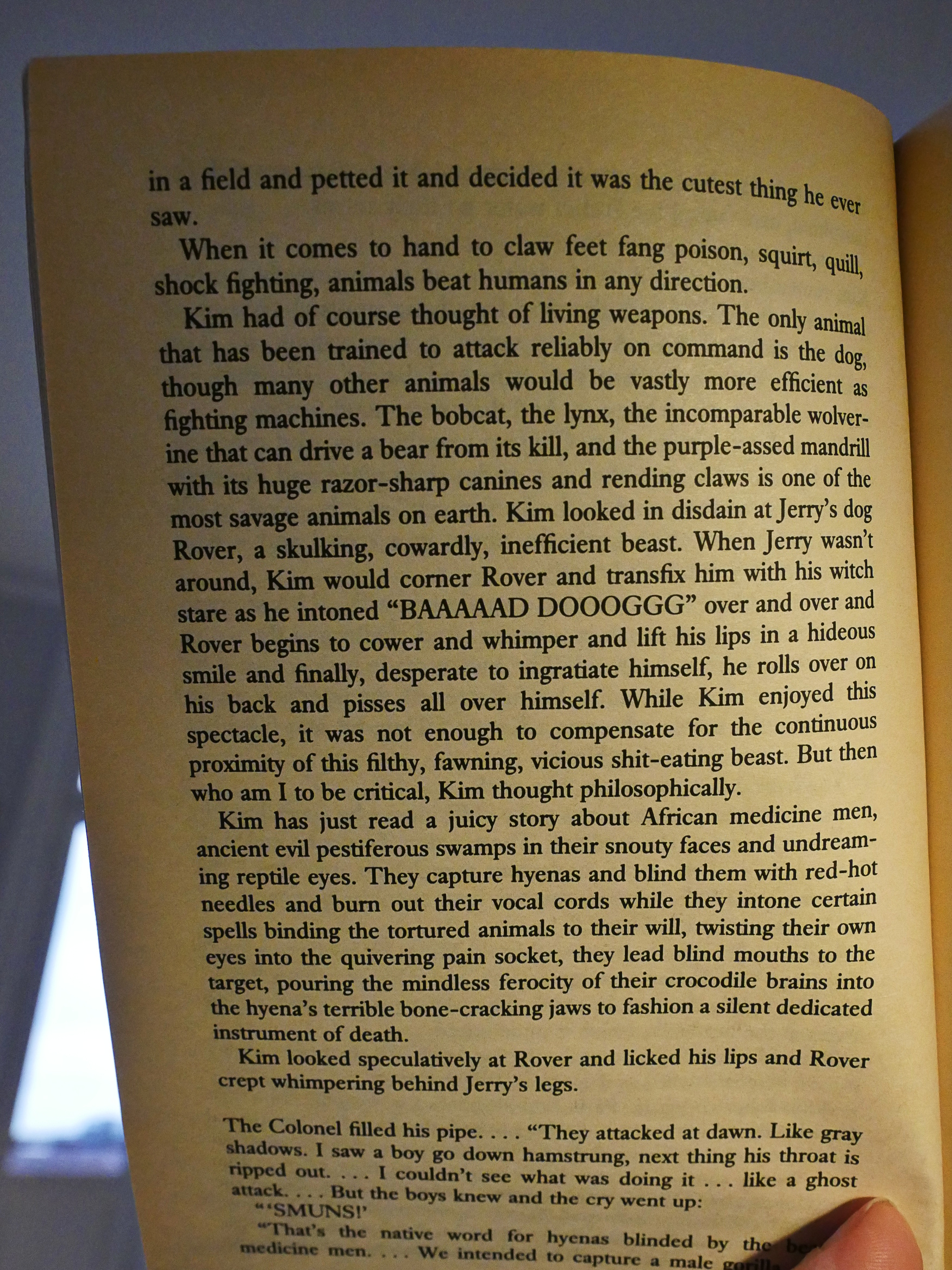

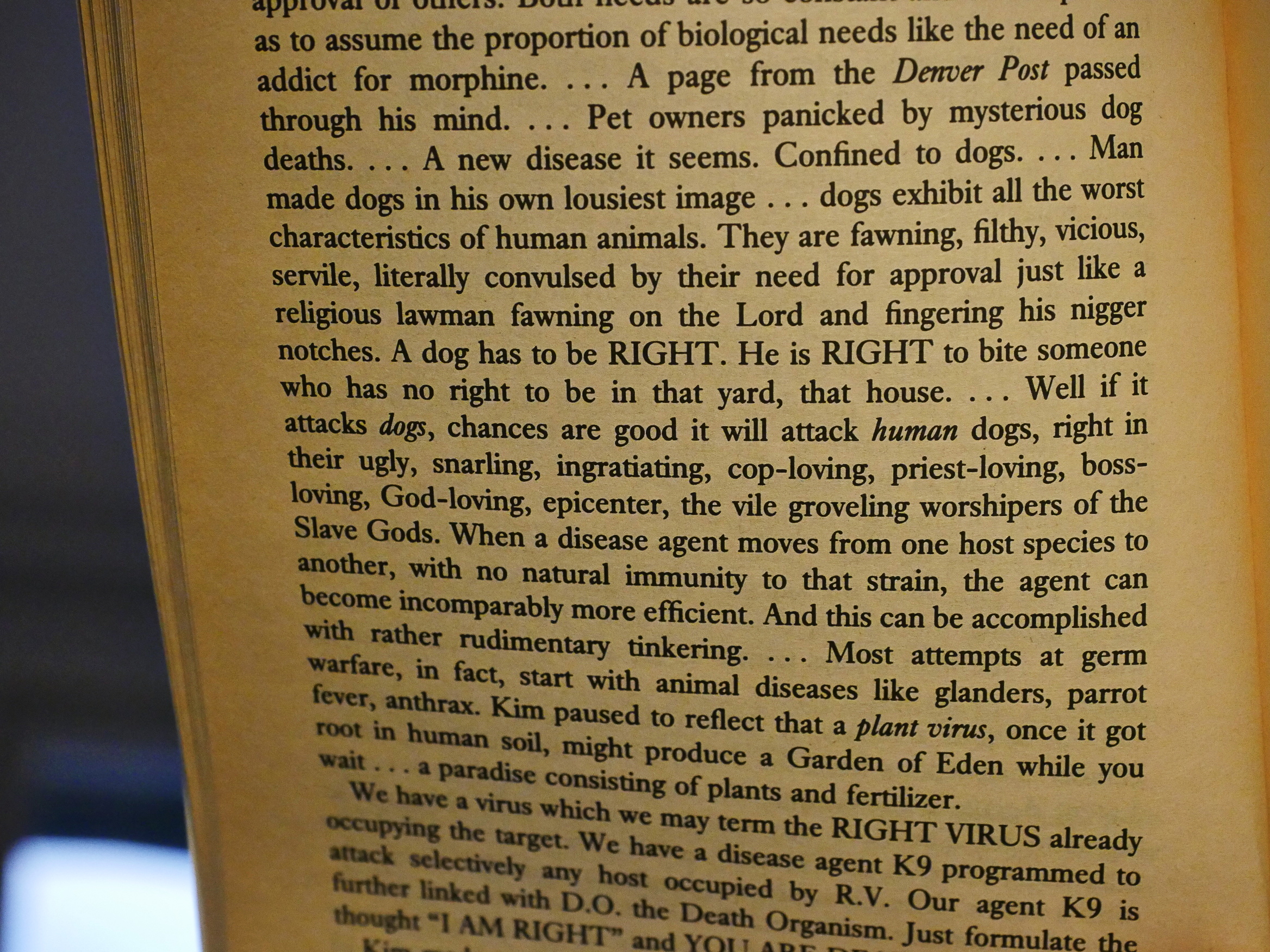

You gotta love these anti-dog rants. “… ingratiating, cop-loving …” There are also long, loving descriptions of all kinds of guns.

The narrative drops into dreams and fantasy without much preamble, so you gotta pay attention. It takes a while to get into the rhythms of any writer when starting a book, and that Burroughs takes a bit more time than most isn’t that surprising.

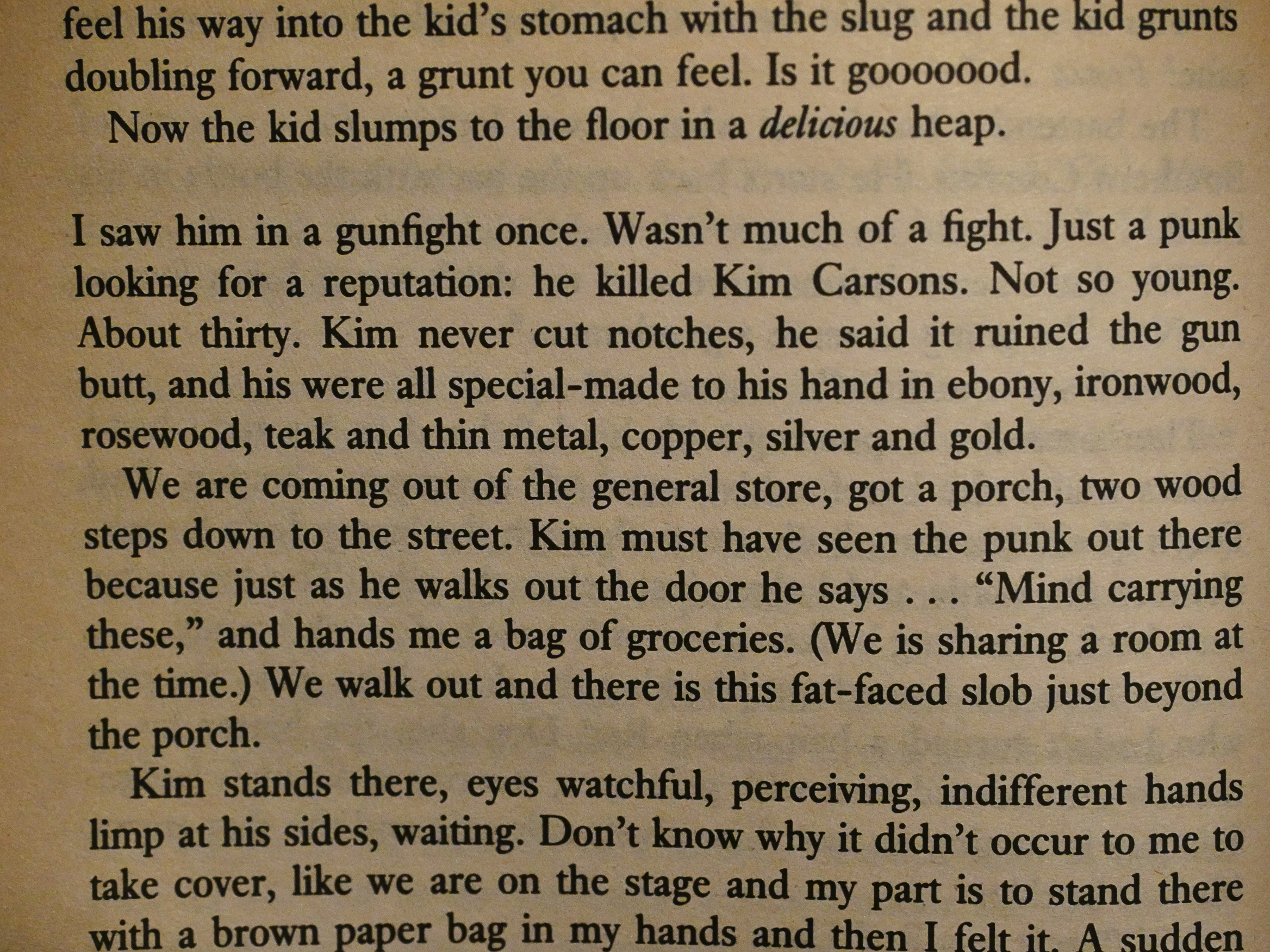

It’s written in third person most of the time, but Burroughs drops into “I” at particularly exciting points, and things get perhaps a bit more verbal? I thought that we were going to see Kim Carsons killed. Instead the bit after the colon is just a description of what reputation that punk was looking for.

But once I got into it, there’s so much fun stuff in here. Burroughs is funny and he writes exciting bits when he wants to. But, of course, he’s more into confounding the reader than telling gunslinger stories, which is fine.

Burroughs plays a bit with dialect, which is fun, but he also uses odd spellings in non-dialogue text. Or is “opponenet” just a typo? If so, there’s an awful lot of these, so perhaps it’s just bad proff-readding?

It’s all of Burroughs’ obsessions (guns and drugs and sex) quite condensed, but it’s a sometimes-exhilarating read. The bits about taking out mobsters and fashioning a new world were a lot of fun.

But then there’s the third part:

It’s a time slip thing, and we go forward to the present (i.e. 1984) through a series of not very developed scenarios, and then to Venus, and then we slip back again…

… and this part of the book was a bit of a slog for me.

But I was overall surprised at how enjoyable a read this was. Nine thumbs up. Makes up for those horrible cookies.

And I’ve got one more Burroughs to do in this blog series.

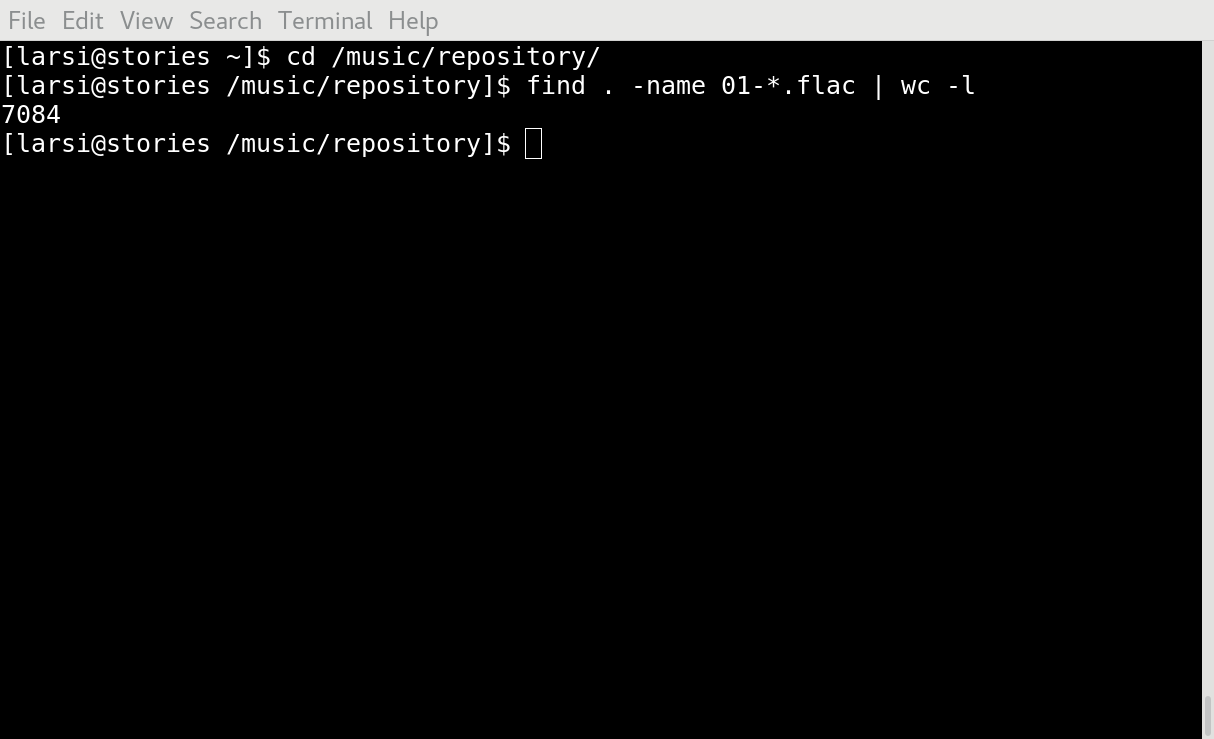

CAD201

BAD203

AD204

CAD206

BAD213