As I was falling asleep the other day, I wondered whether adding a simple delay would fix the problems with WordPress visitor statistics. (To recap, the problem is that “modern” scrapers run a full browser instance, and they don’t identify themselves as bots in the User-Agent.)

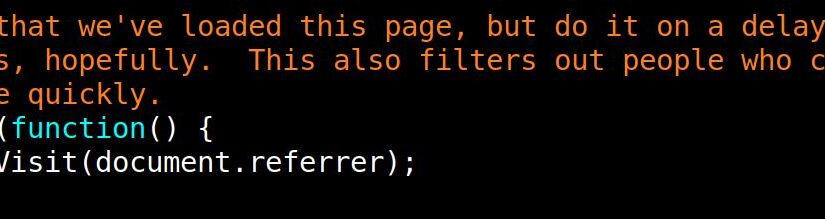

So instead of calling `registerVisit` immediately from JavaScript, I delayed it by five seconds. (This has the added side effect of not counting people who just drop by and close the page right away, which is also a plus, I think?)
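For anyone who wants to try the same thing, here's a minimal sketch of the idea. It assumes `registerVisit` is an existing global function that reports the page view to the statistics backend (the actual plugin code isn't shown here). `scheduleVisit` and `VISIT_DELAY_MS` are hypothetical names made up for illustration. The trick relies on the fact that a pending timer is simply dropped when the tab is closed, so quick bounces never get reported.

```javascript
// Sketch only: registerVisit is assumed to be the existing function that
// reports a page view; scheduleVisit and VISIT_DELAY_MS are hypothetical names.
const VISIT_DELAY_MS = 5000; // five seconds before a view counts

// Defer the visit report by delayMs. If the page is closed before the timer
// fires, the callback never runs, so the visit is never recorded.
// setTimer is injectable so the logic can be exercised without a browser.
// Returns the timer handle, which could be passed to clearTimeout if needed.
function scheduleVisit(report, delayMs = VISIT_DELAY_MS, setTimer = setTimeout) {
  return setTimer(() => report(), delayMs);
}

// On the page itself: only schedule if the (assumed) global function exists.
if (typeof registerVisit === "function") {
  scheduleVisit(registerVisit);
}
```

Changing the delay is then a one-constant edit.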

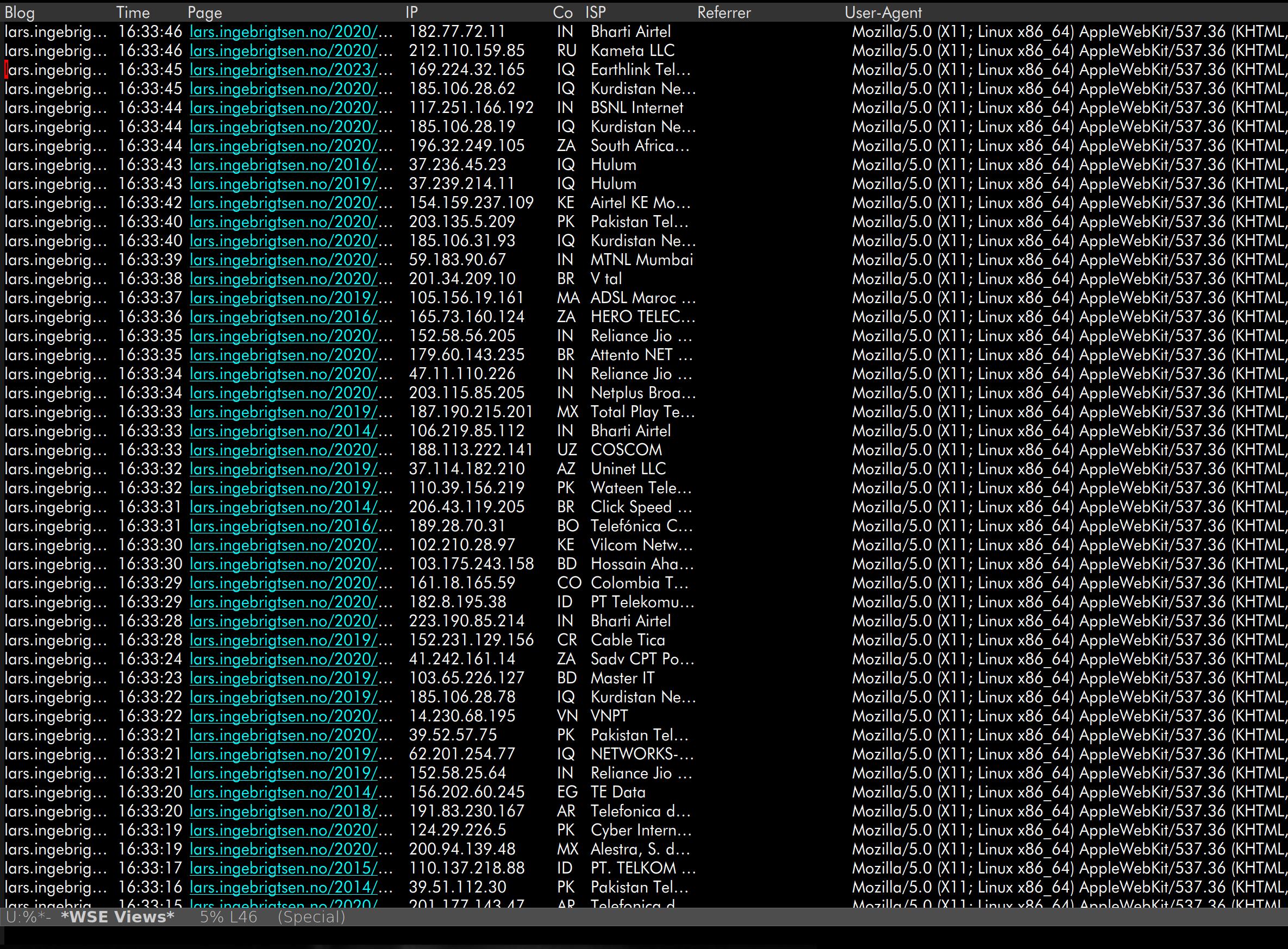

So I made the change and waited a bit and:

*sigh* Nope, that doesn’t seem to help much at all. The people running these things just have way too many resources: they fetch a page, then let the JavaScript run for several seconds before grabbing the results.

(An additional amusing thing about the specific bot in this random example is that it’s really into using VPNs in really, really obscure countries…)

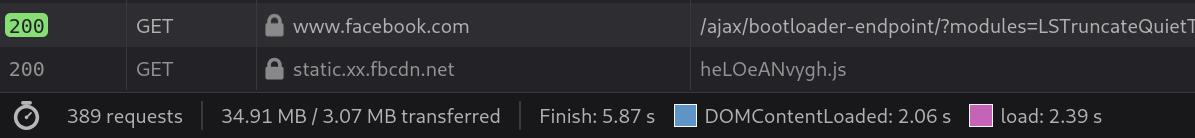

Which may be a testament to how awful many web pages are these days, really — you go to a page, and it takes ages for all the data to load, and then finally something renders and you can read it. For instance, here’s Facebook:

It takes about six seconds until it’s “reasonably” loaded… Pitiful.

Well, if Facebook takes six seconds, perhaps AI scrapers have settled on something along those lines?

What if I increase the delay to ten seconds? I mean, ten seconds is longer than it takes to read an article like this, really, but you win some, you lose some.

*types a bit and then waits for another day*

Uhm… I think this basically fixed the issue? I can no longer detect any of the typical, obvious scraper runs, and virtually no traffic from China or Singapore is being logged anymore.

I think I found a solution! Kinda! I guess I should let this run longer before drawing any conclusions, but that’s for cowards! Ten seconds is the magic number!