The other day, I was happily ripping a new bunch of DVDs and Blu-rays that had arrived (so that I can actually watch them). Afterwards, I pointed Emacs at the new directories to add metadata semi-automatically… only to find that the computer just said “no”.

Well, the code works by scraping imdb.com, and the HTML there changes once in a while, so breakage is no big worry. But when I looked at what I actually got back, I was pretty puzzled:

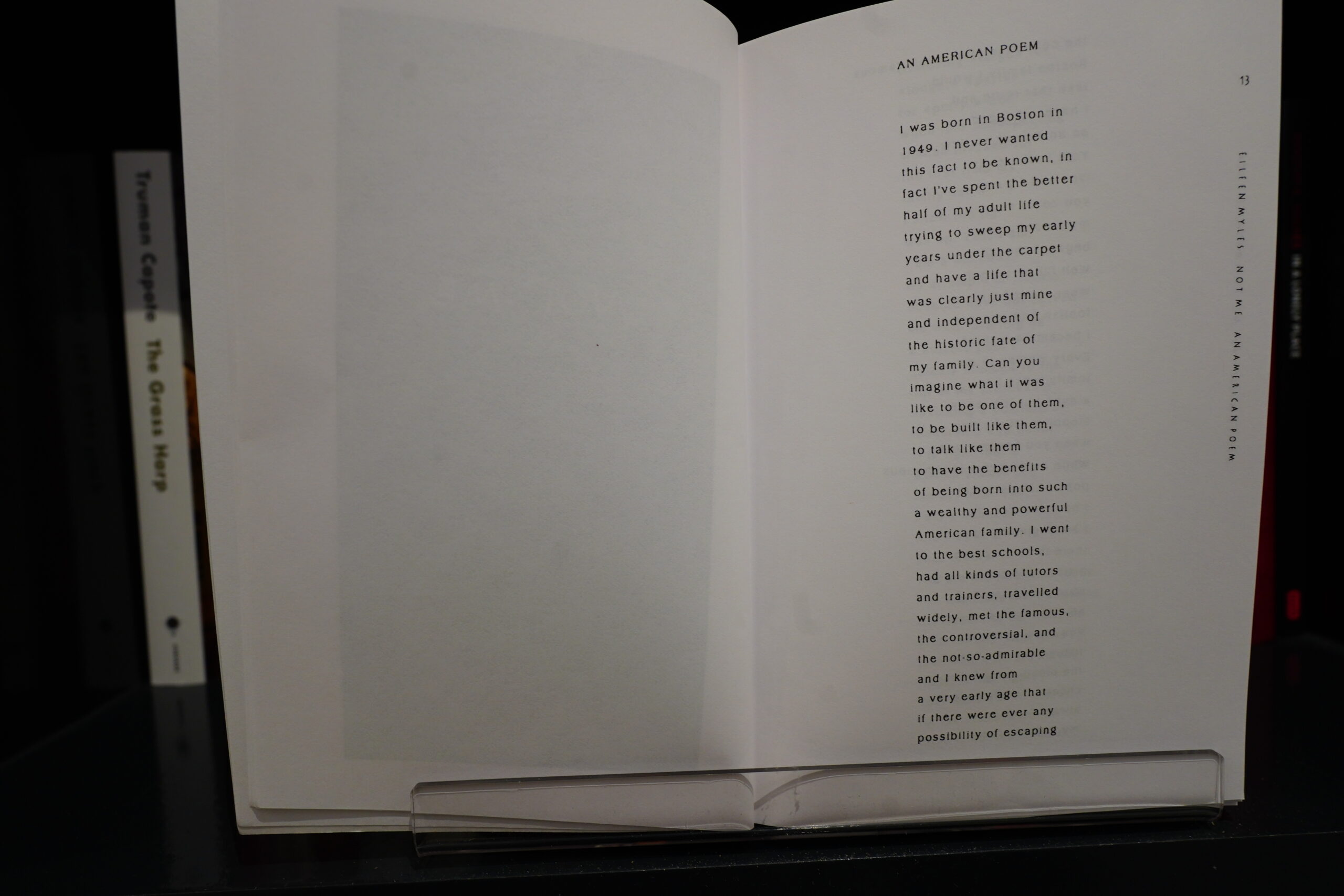

```
HTTP/2 202
server: CloudFront
date: Mon, 24 Nov 2025 23:58:24 GMT
content-length: 2388
x-amzn-waf-action: challenge
access-control-expose-headers: x-amzn-waf-action
x-cache: Error from cloudfront
via: 1.1 079d0a29fa76c3721f14a4132ec9e372.cloudfront.net (CloudFront)
x-amz-cf-pop: ARN52-P1
alt-svc: h3=":443"; ma=86400
x-amz-cf-id: Q6mGF4pMTpmxiyQJUsZN5m8RuTD9dsU0xpzpENImJVvn7jRzdLkVqA==
```

And that’s it. Just an HTTP 202 and some headers. But that x-amzn-waf-action… WAF probably doesn’t mean “wife acceptance factor” in this context, right? So I googled, and it is indeed Amazon’s web application firewall: their way of fending off horrible people like me who just want to automate fetching the name of a movie’s director without opening a browser.

Sorry, I misspelled “AI scrapers”.
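The tell-tale signs in the response above are the 202 status and the `x-amzn-waf-action: challenge` header. A scraper can check for exactly that before handing anything to its HTML parser, so that it fails loudly instead of puzzling over an empty result. Below is a minimal sketch assuming Python’s standard `urllib`; the names `is_waf_challenge` and `fetch` are hypothetical, and matching on that exact status/header pair is an assumption based only on the dump above.

```python
import urllib.error
import urllib.request
from collections.abc import Mapping


def is_waf_challenge(status: int, headers: Mapping[str, str]) -> bool:
    """Return True if a response looks like an AWS WAF JavaScript challenge."""
    # HTTP header names are case-insensitive, so normalise names and values.
    lowered = {name.lower(): value.strip().lower() for name, value in headers.items()}
    # Assumption: the challenge is signalled exactly as in the dump above,
    # a 202 status plus "x-amzn-waf-action: challenge".
    return status == 202 and lowered.get("x-amzn-waf-action") == "challenge"


def fetch(url: str, timeout: float = 10.0) -> tuple[int, dict[str, str], bytes]:
    """Fetch url with plain urllib; no JavaScript is executed."""
    request = urllib.request.Request(url, headers={"Accept": "text/html"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            # A 202 is a 2xx status, so it arrives here, not as an HTTPError.
            return response.status, dict(response.headers.items()), response.read()
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses still carry headers and a body worth inspecting.
        return err.code, dict(err.headers.items()), err.read()
```

With the headers from the response above, `is_waf_challenge(202, {"x-amzn-waf-action": "challenge"})` returns `True`, while an ordinary `200` without that header returns `False`. Note that `fetch` on its own can only detect the challenge, not pass it, since `urllib` never runs the JavaScript.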

Fair enough. But how does this actually work, and how trivial is it to work around?

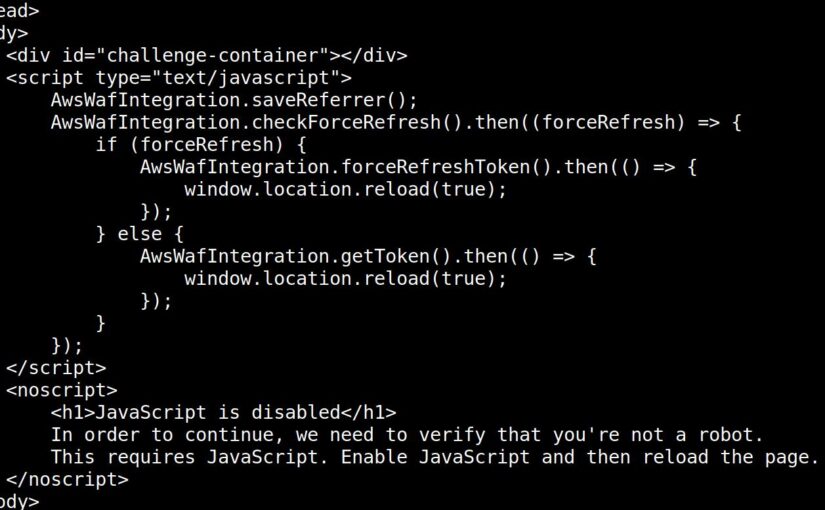

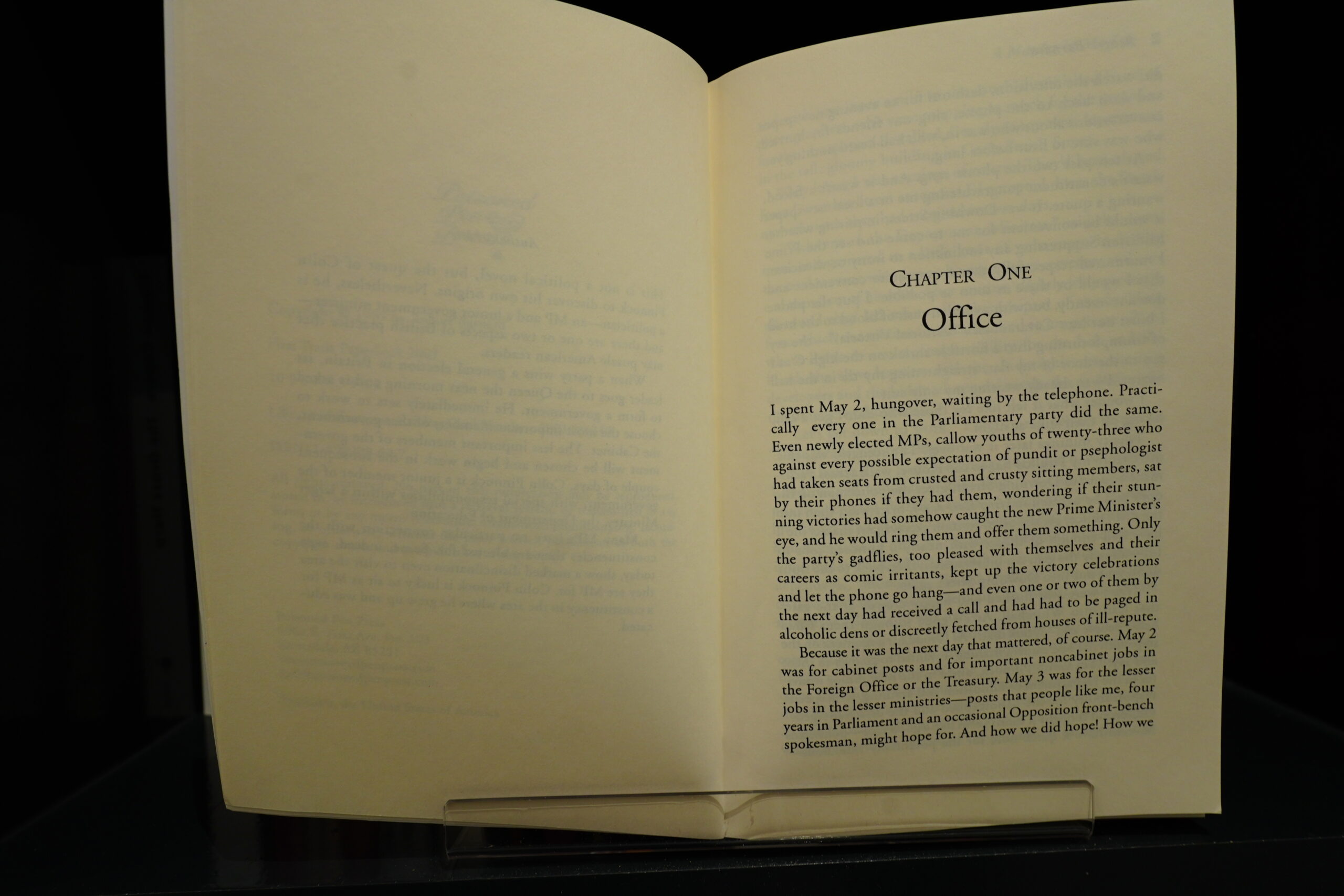

Well, it’s a JavaScript challenge. If you say this:

```sh
curl -D /tmp/h -H "Accept: text/html" 'https://www.imdb.com/find?q=If+Looks+Could+Kill&ref_=hm_nv_srb_sm'
```

You get the JavaScript challenge back (shown above). So basically, you now have to spin up a more or less complete browser just to fetch a web page from IMDb. But *type* *type* *type*, and lo and behold:

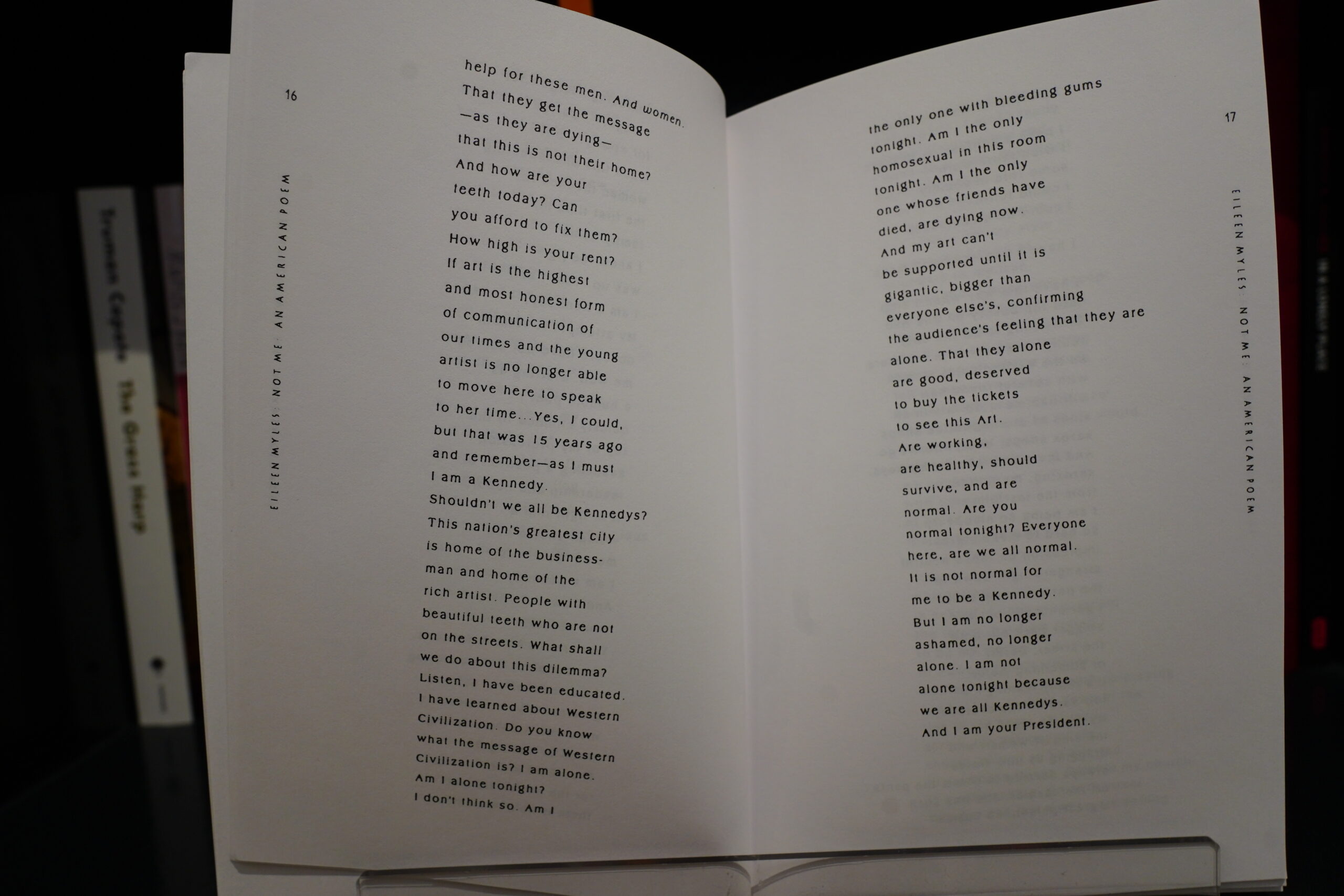

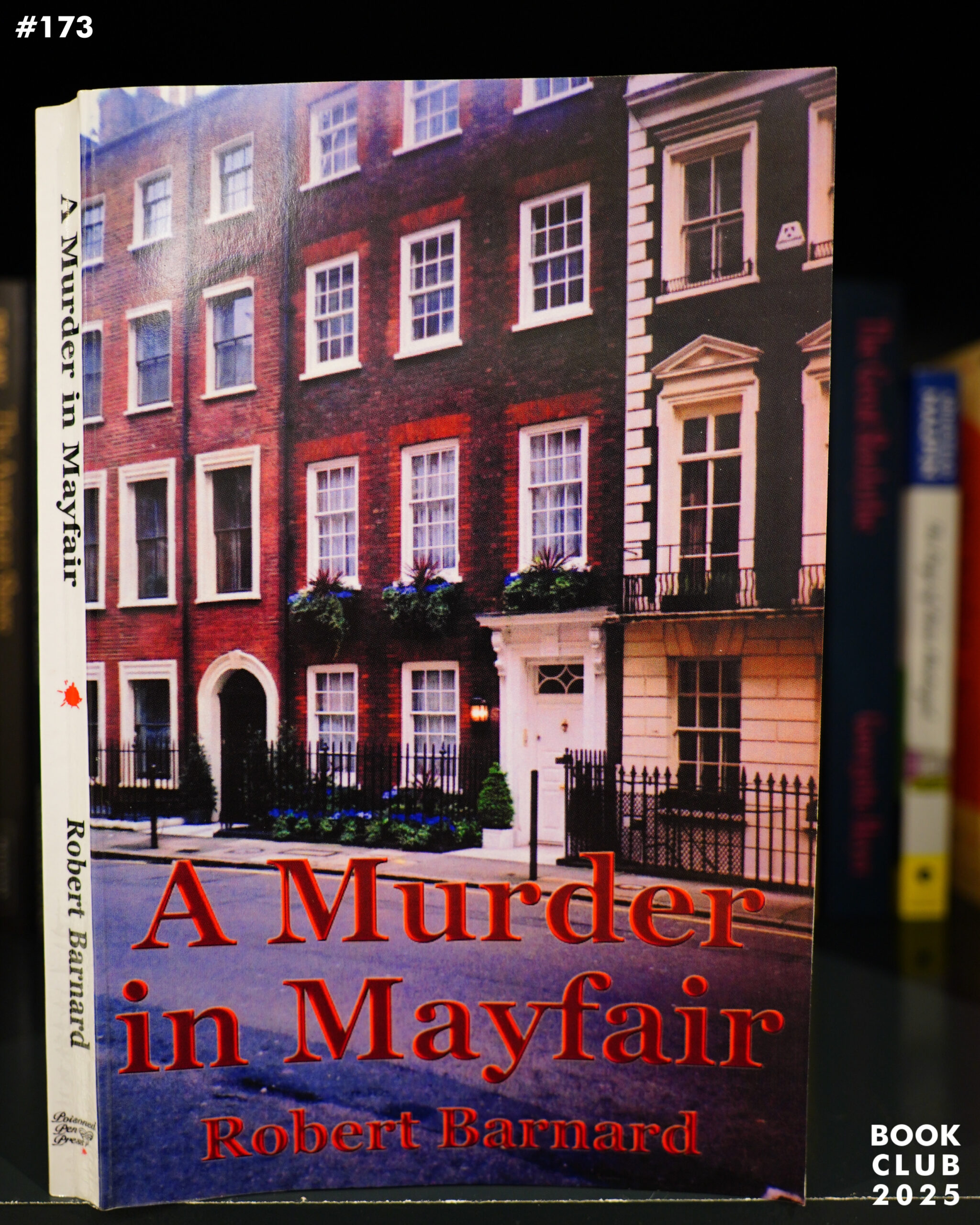

Now it works again: I can hit `a` on a newly ripped movie, Emacs prompts me for likely matches, I hit `RET`, and:

There. Release year, country, director and poster, all scraped. The only difference is that it now takes two seconds to fetch the data instead of half a second. That’s progress for you, I guess.

If you want to look at the resulting code, it’s on Microsoft GitHub. (It’s just a trivial Selenium script.)
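The script itself isn’t reproduced here. As a rough idea of what such a Selenium script can look like, here is a minimal sketch assuming Python, Selenium 4 and headless Firefox with geckodriver available; `imdb_find_url`, `fetch_rendered` and its `ready_css` parameter are hypothetical names, not taken from the original code. Rather than guessing what the challenge page contains, it waits for a caller-chosen CSS selector that should only exist on the real page.

```python
import urllib.parse


def imdb_find_url(title: str) -> str:
    """Build an IMDb search URL like the one in the curl command (minus ref_)."""
    return "https://www.imdb.com/find?" + urllib.parse.urlencode({"q": title})


def fetch_rendered(url: str, ready_css: str, timeout: float = 10.0) -> str:
    """Load url in headless Firefox, let the JavaScript challenge run,
    and return the final page HTML.

    ready_css is a hypothetical, caller-chosen CSS selector that should
    match only the real page, not the challenge interstitial.
    """
    # Imported lazily so the module can be loaded without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.FirefoxOptions()
    options.add_argument("-headless")
    driver = webdriver.Firefox(options=options)
    try:
        driver.get(url)
        # Wait (up to timeout seconds) until the real page has replaced the
        # challenge; raises selenium's TimeoutException otherwise.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, ready_css))
        )
        return driver.page_source
    finally:
        # Always shut the browser down, even if the wait timed out.
        driver.quit()
```

Usage would be along the lines of `html = fetch_rendered(imdb_find_url("If Looks Could Kill"), ready_css=SELECTOR)`, where `SELECTOR` is something picked by inspecting the real results page. Starting a fresh browser per lookup keeps the sketch simple; it is also a plausible source of the extra second or so per lookup, which reusing one driver across lookups would amortize.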

Like sands through the hourglass, so are the days of our lives.