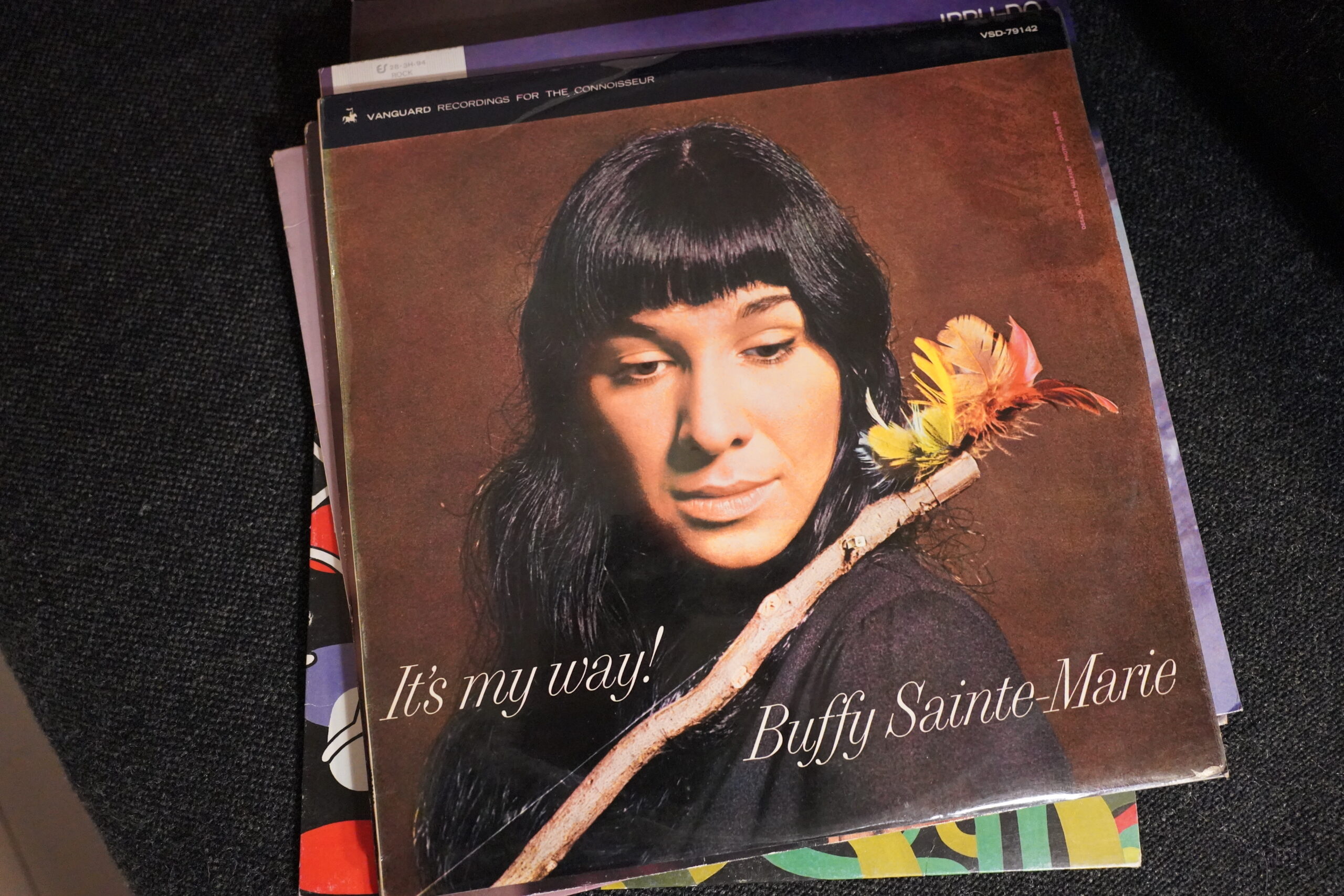

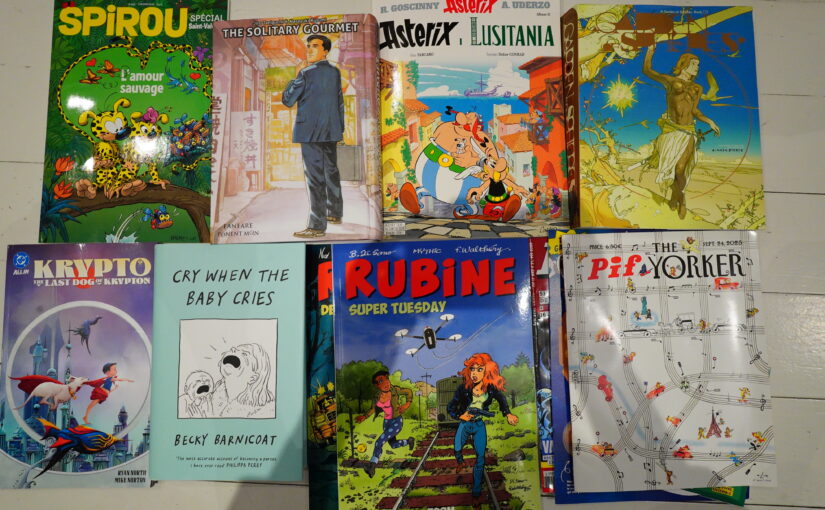

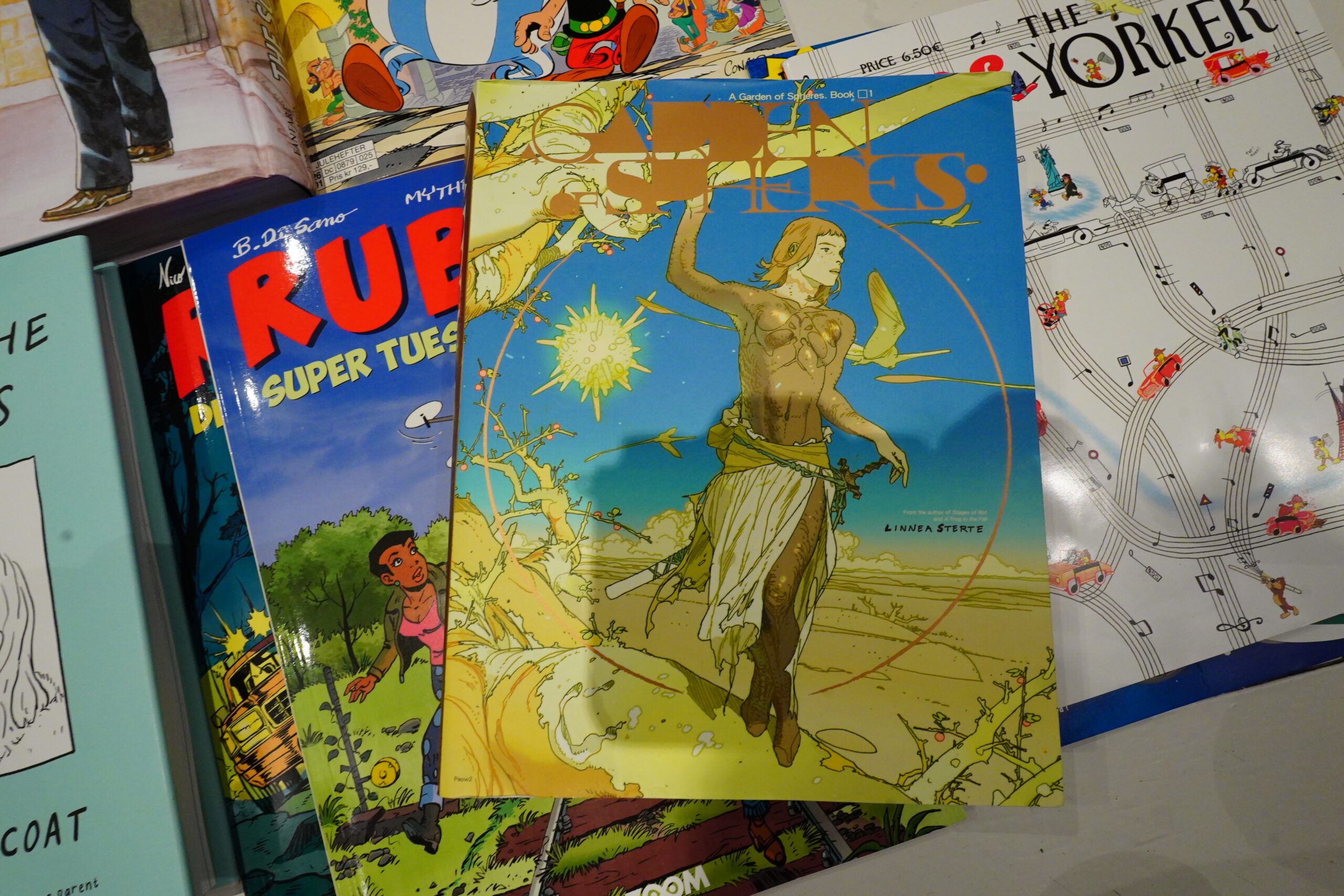

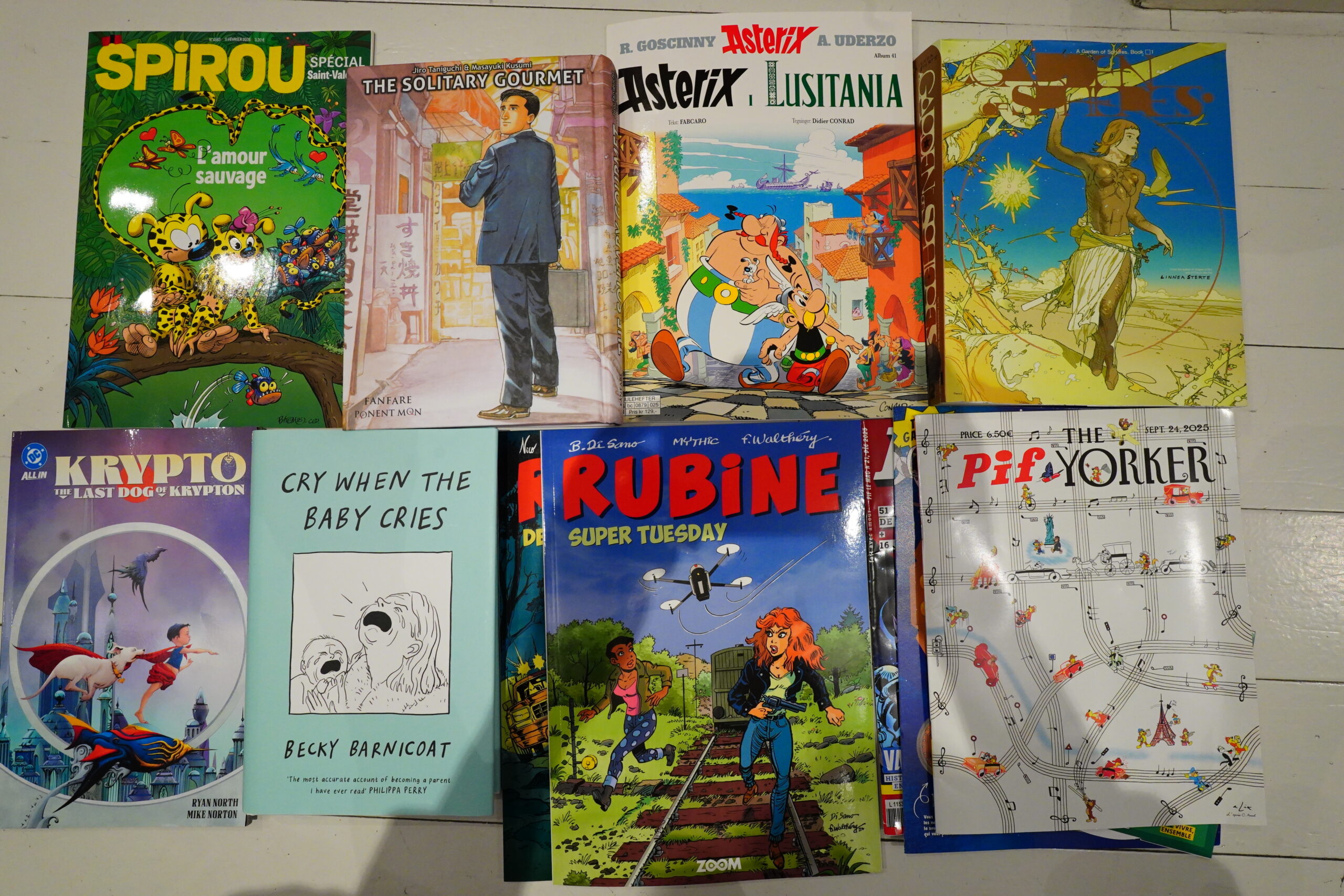

Hey! I read some comics the past week.

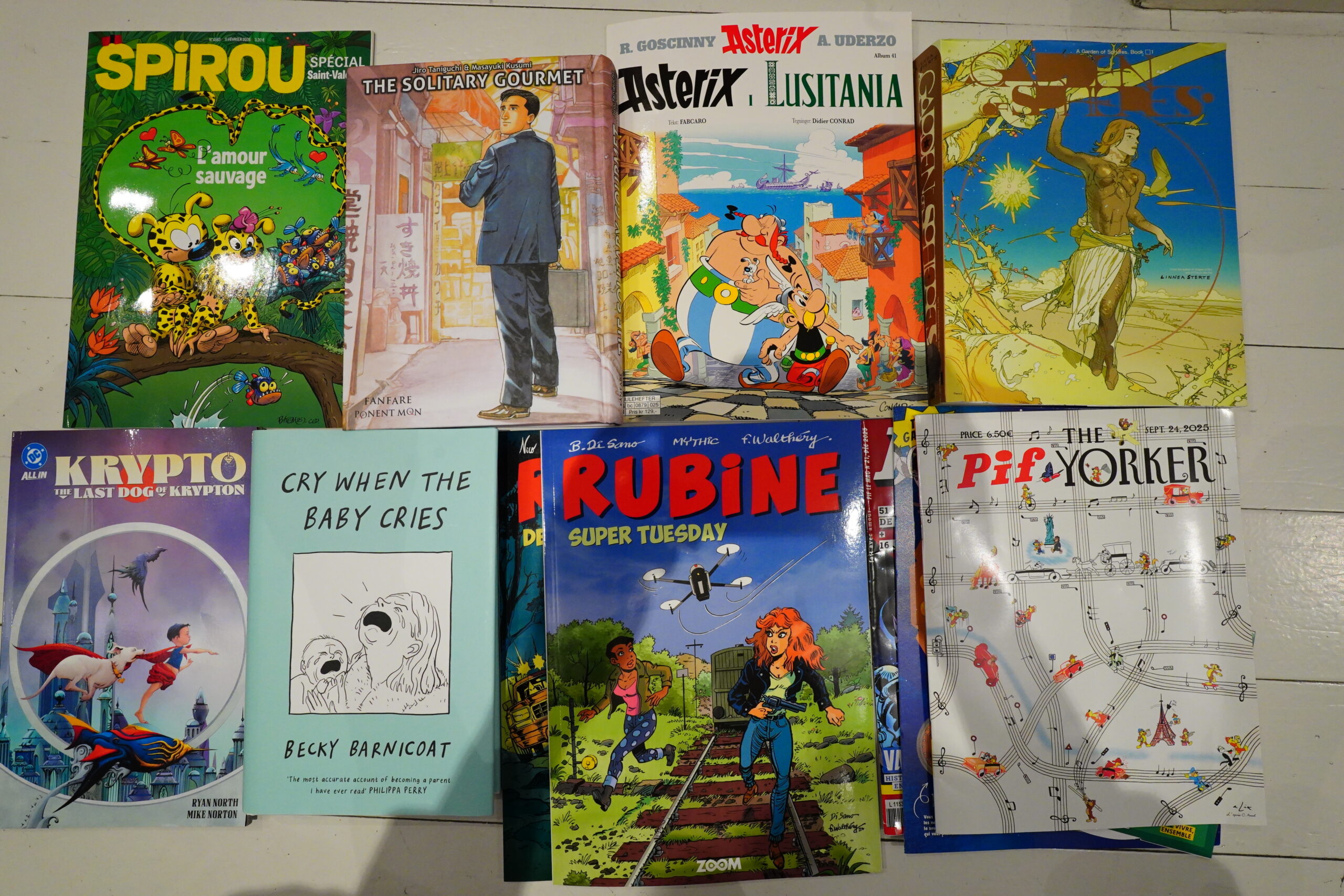

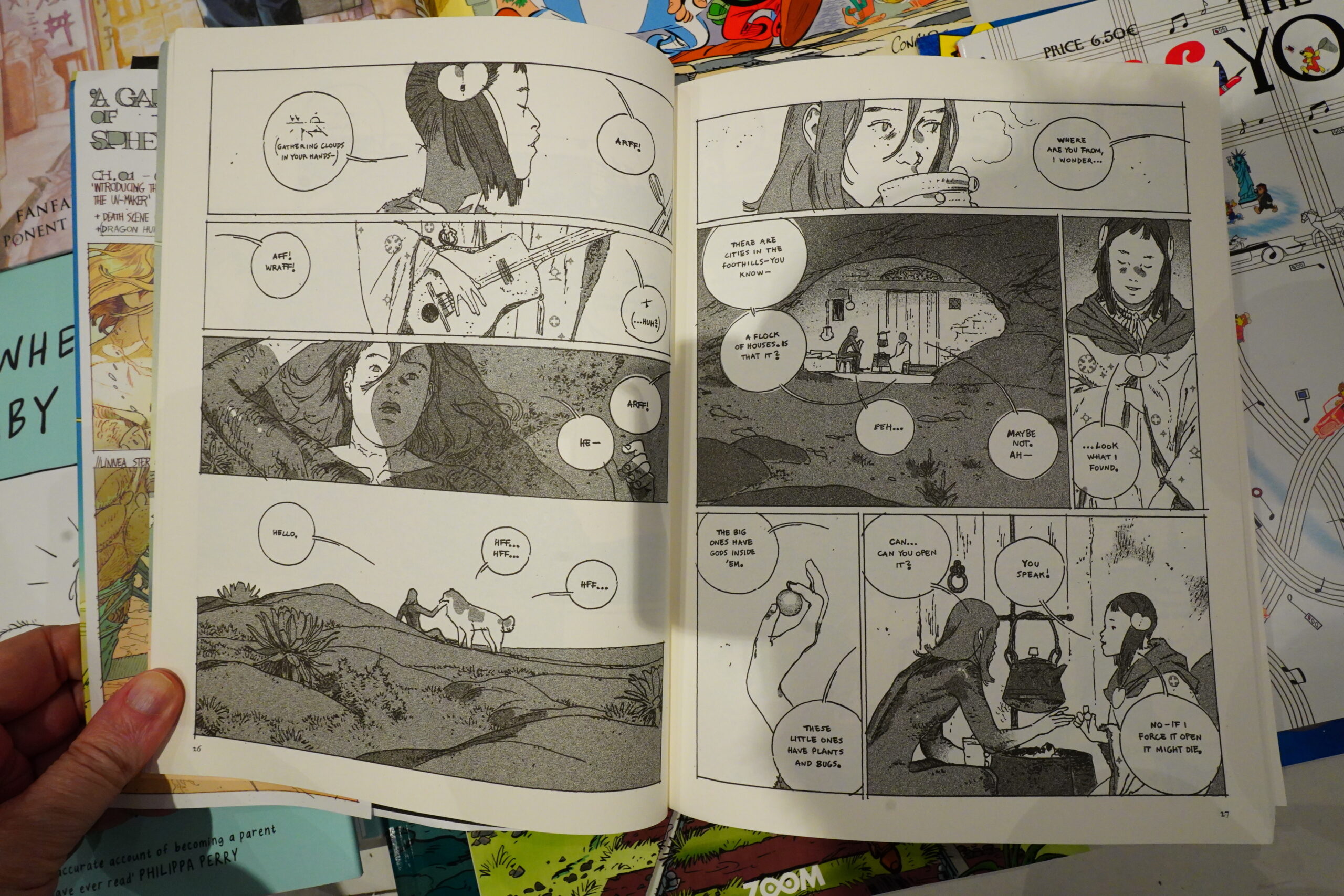

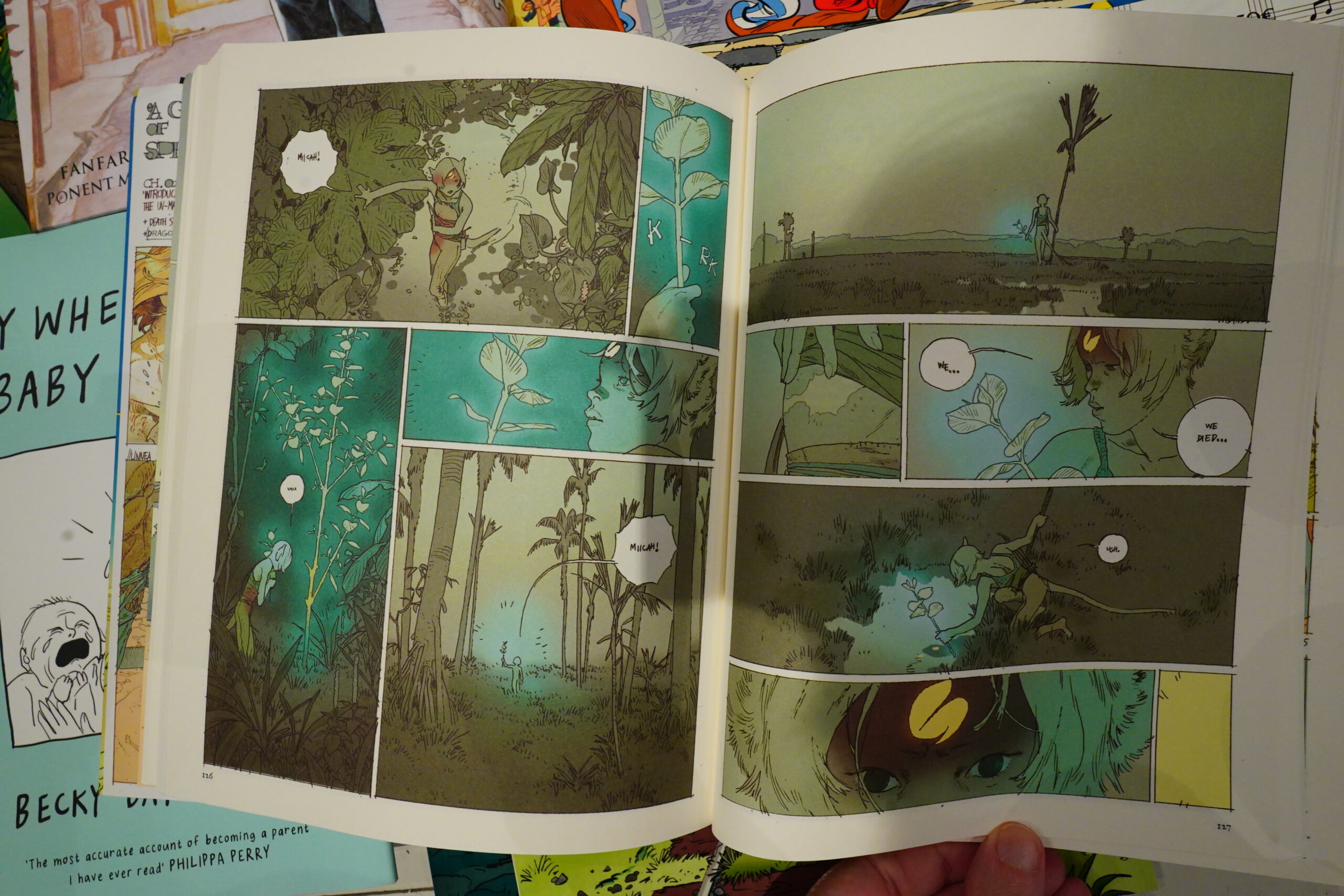

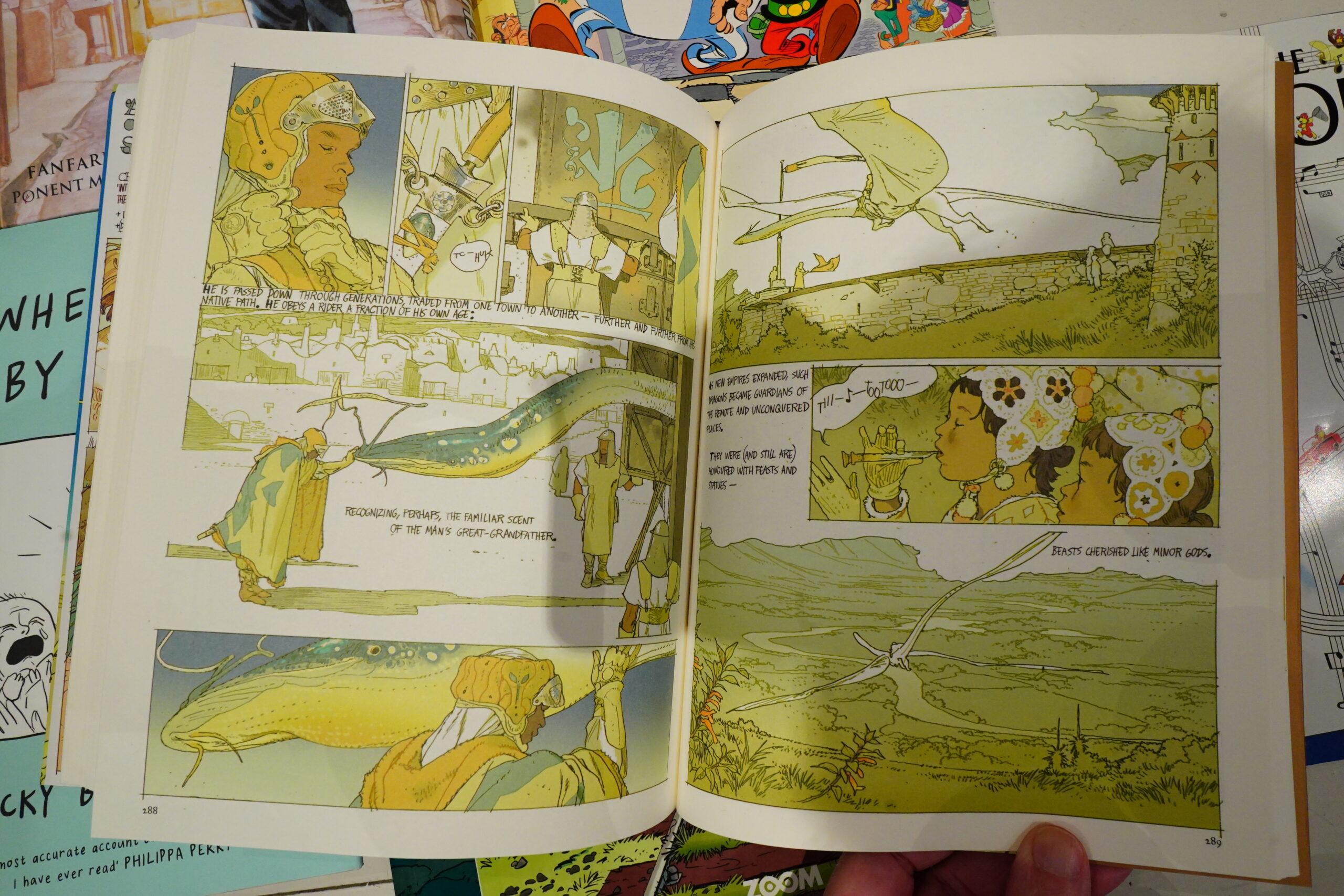

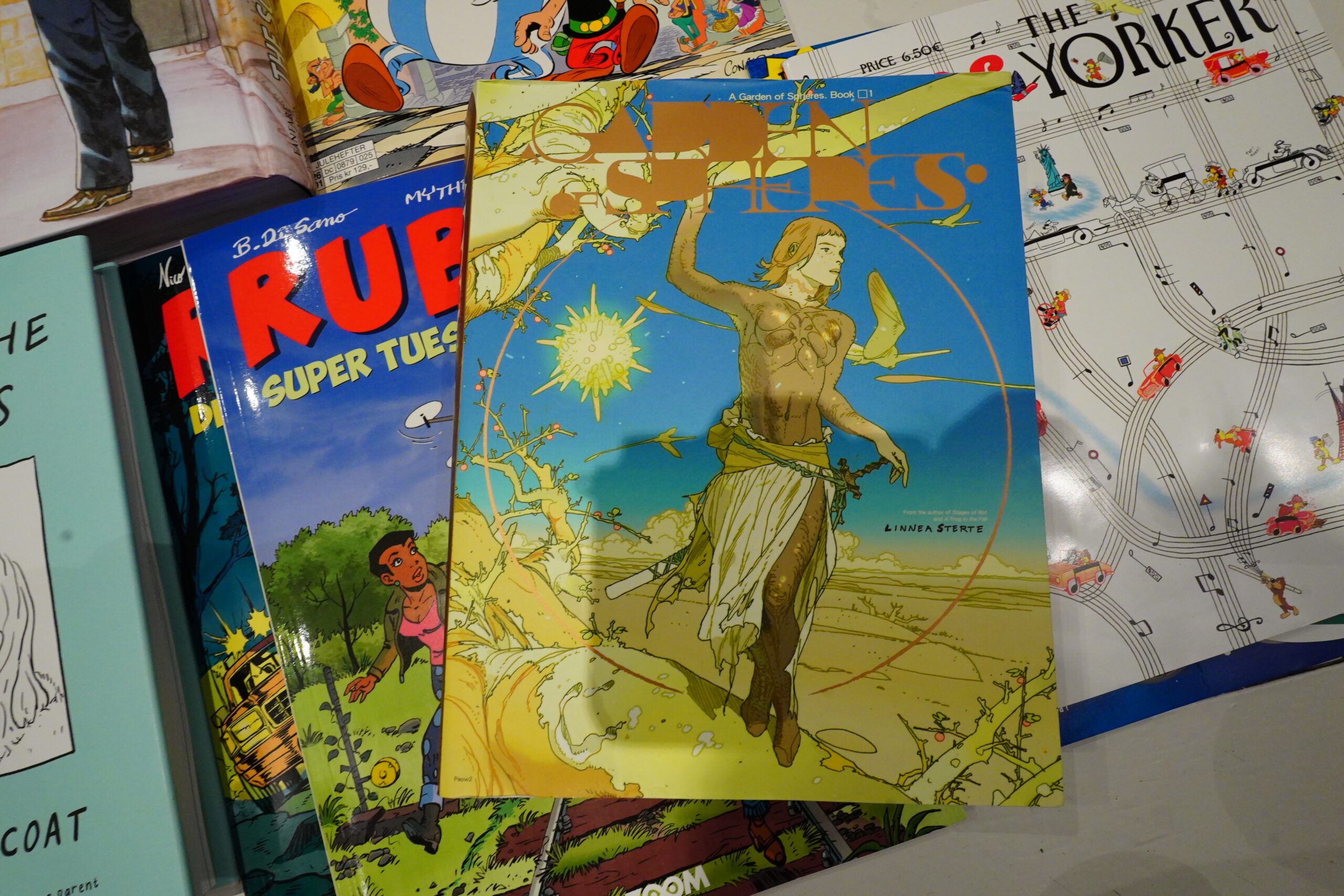

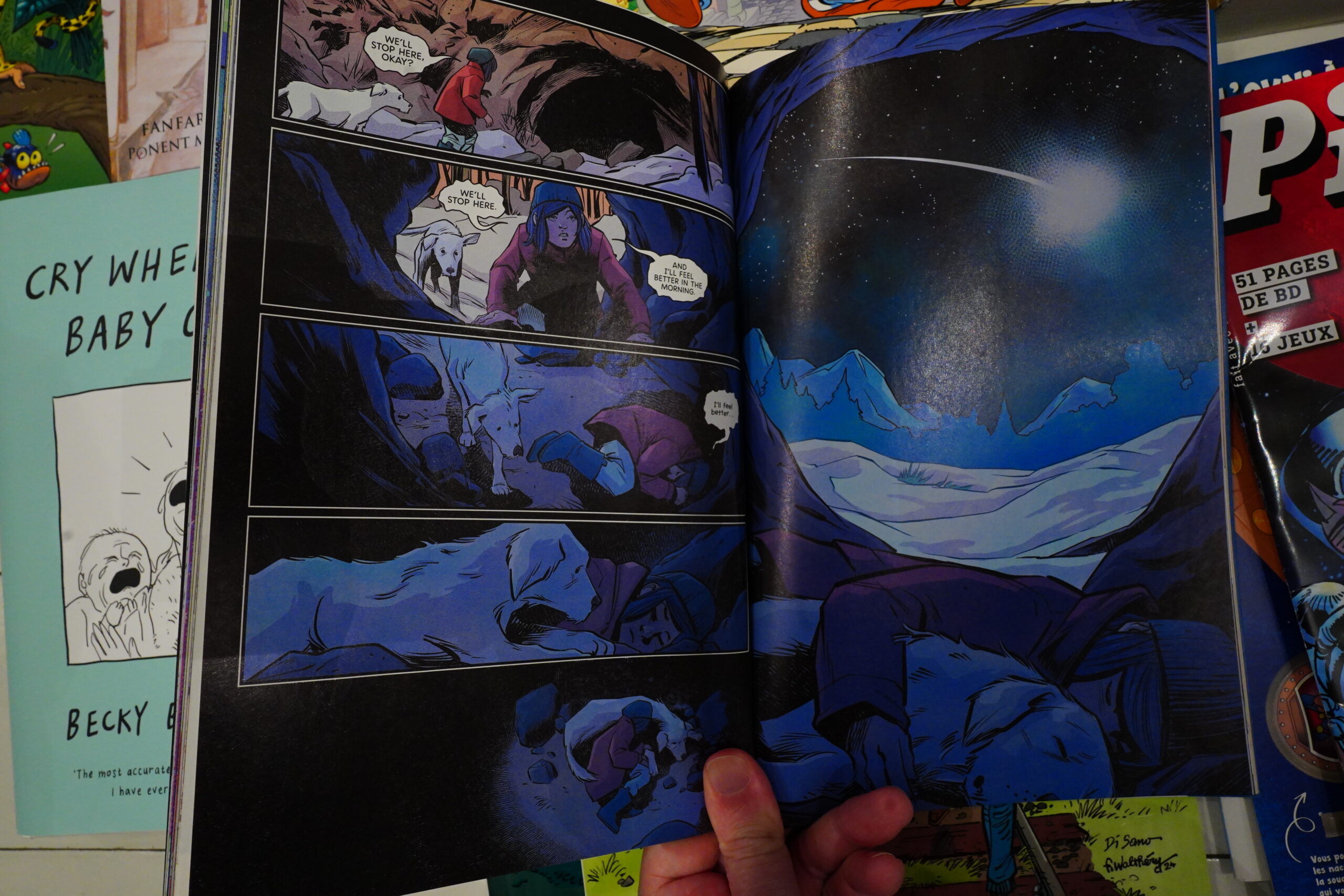

Garden of Spheres by Linnea Sterte is very good. Of course, like everybody else, I loved A Frog In Fall, so I was excited to read the next book from her.

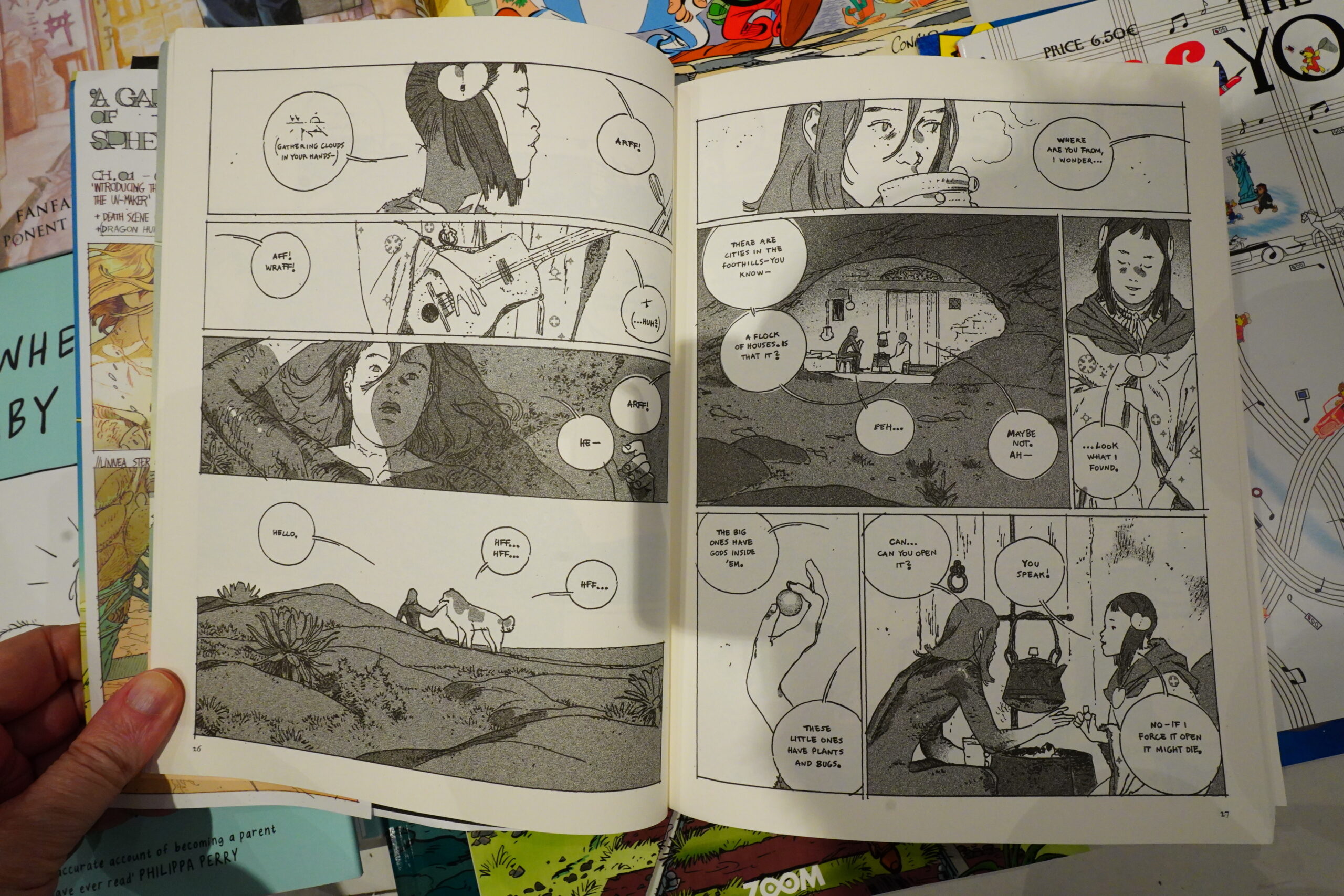

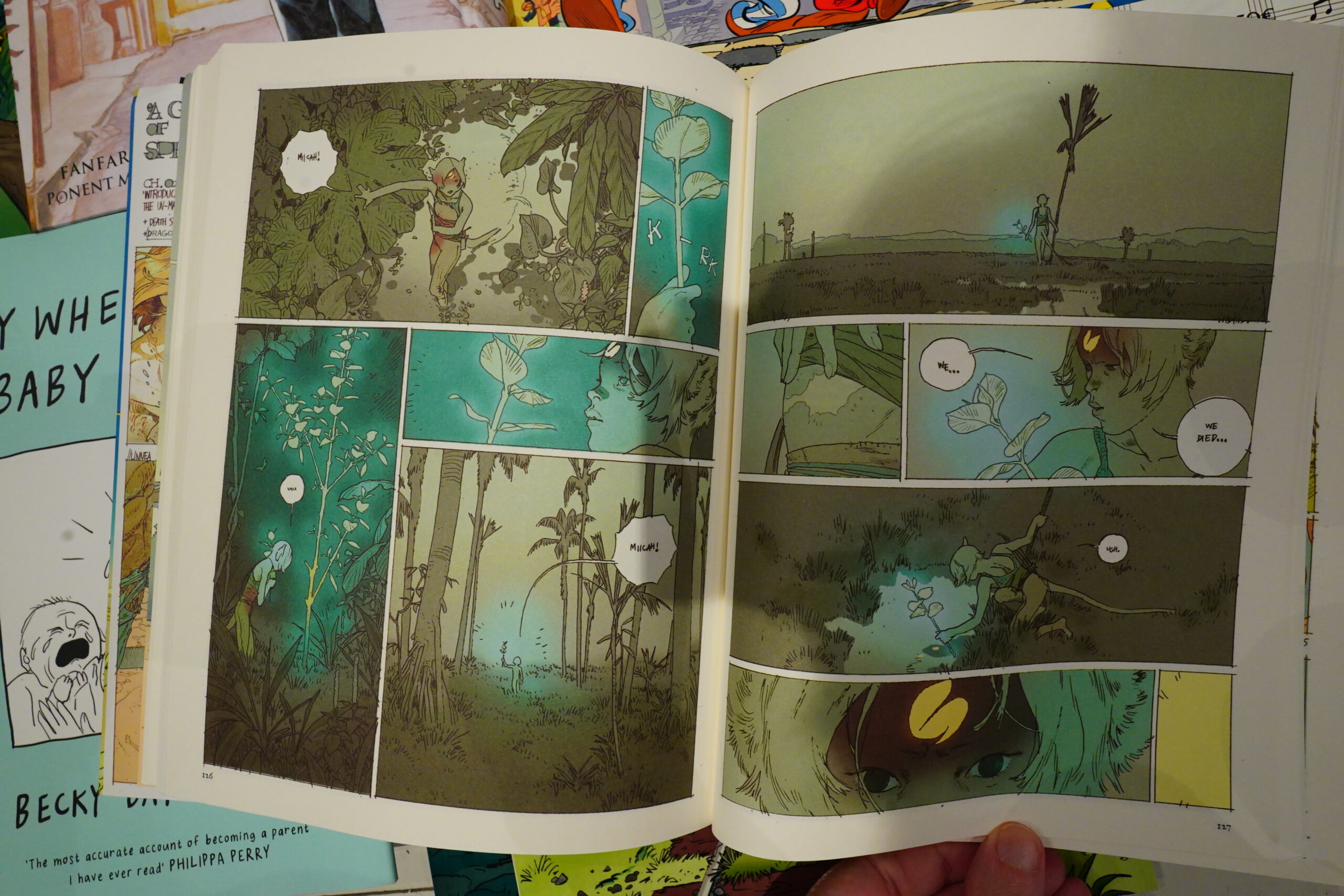

And it’s very different — it’s a sprawling science fiction saga dealing with some Gods that hatch from eggs on a planet, and then…

… er, things happen. It’s very un-structured — we follow a handful of people over several hundred years (I think), but it’s told in short pieces without much in the way of segues, so the reader has to be on their toes. It’s quite involving and very mysterious. And even though this book is a bit of a brick, it’s just the first part, so this is going to be some kind of epic.

But it’s been in the making since 2021? So I guess it might take a few years before we get the next part?

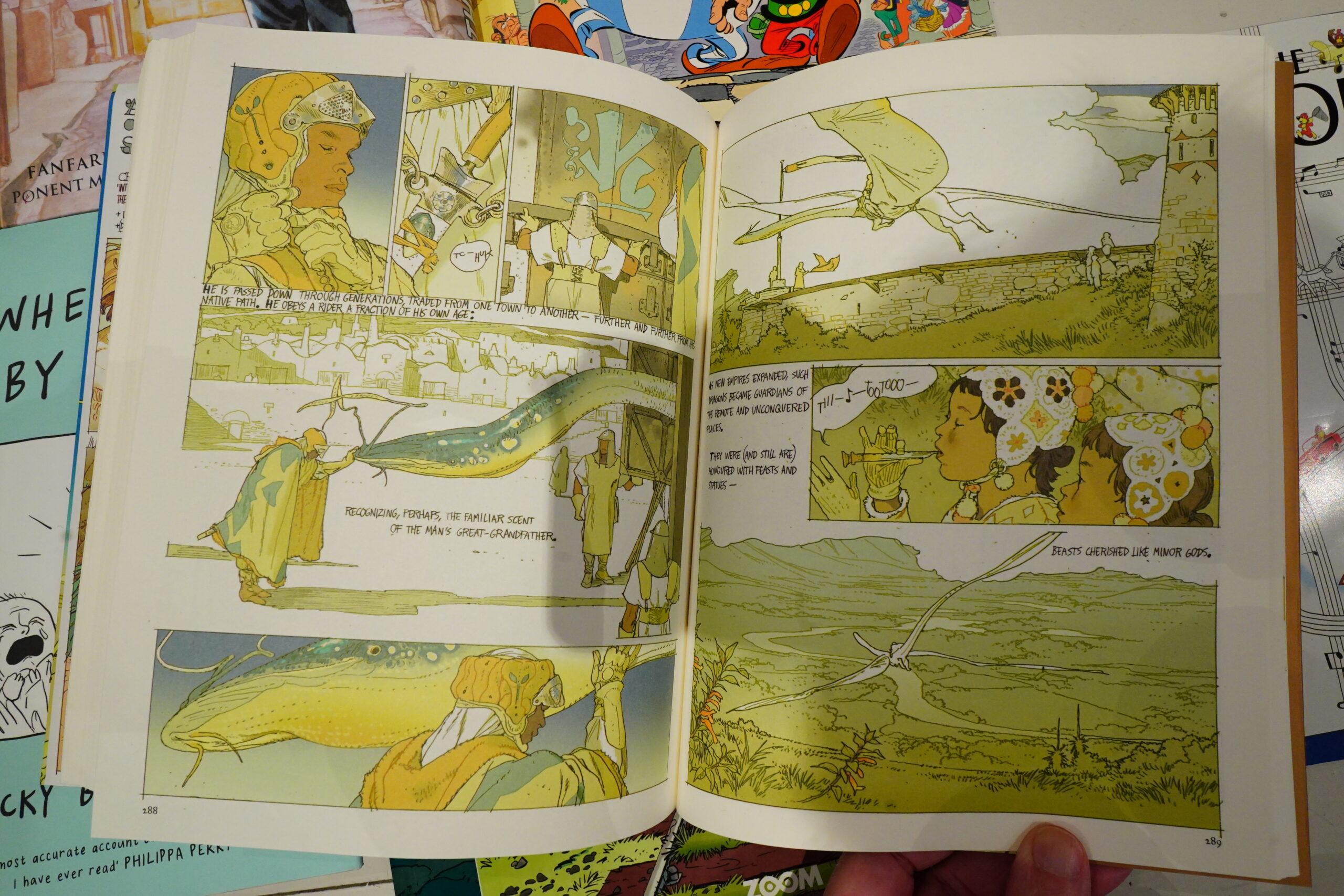

The artwork is just lovely. I guess Sterte is part of the neo-Moebius thing that’s been going on over the last decade and a half, along with the Decadence Comics guys… were Brandon Graham/Simon Roy the people who started it all, I wonder?

There’s also more than a touch of Hayao Miyazaki here, I guess, but it’s its own thing, and it’s so attractive to look at.

Looking forward to the next book.

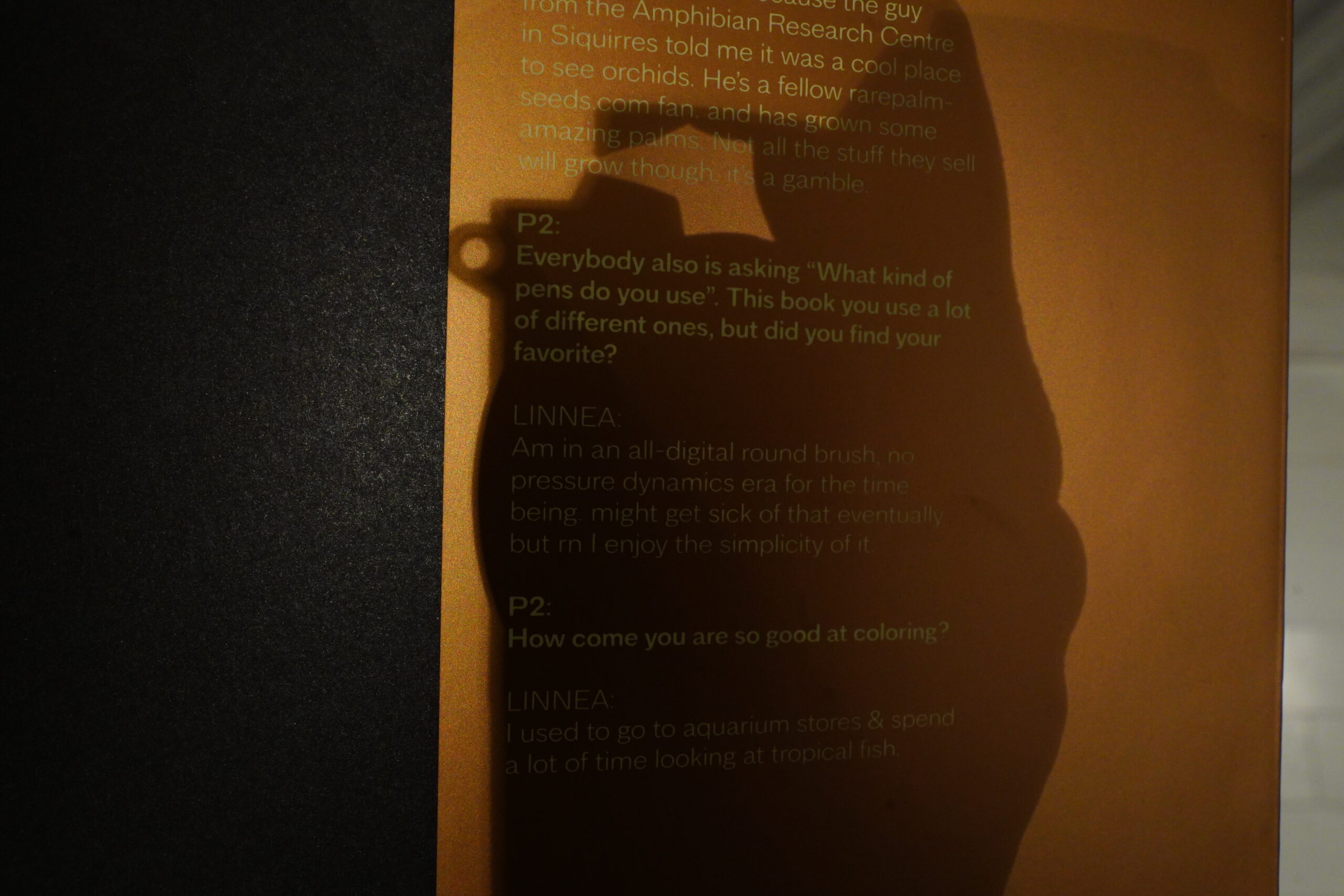

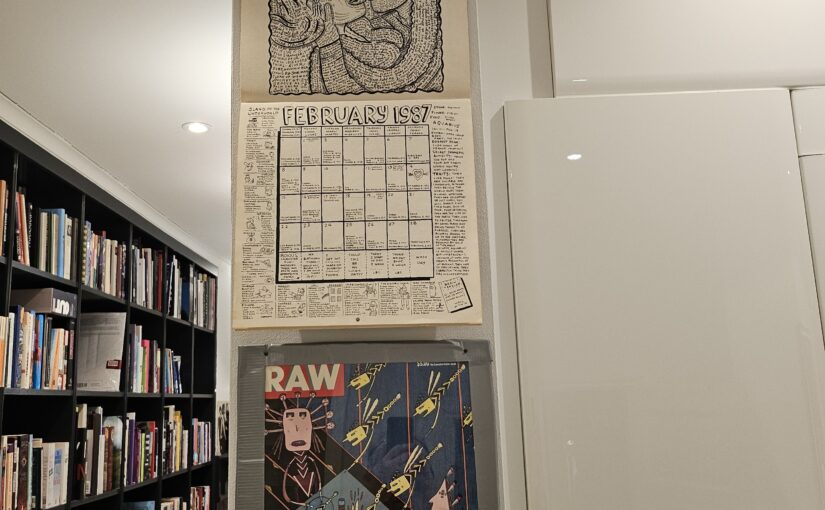

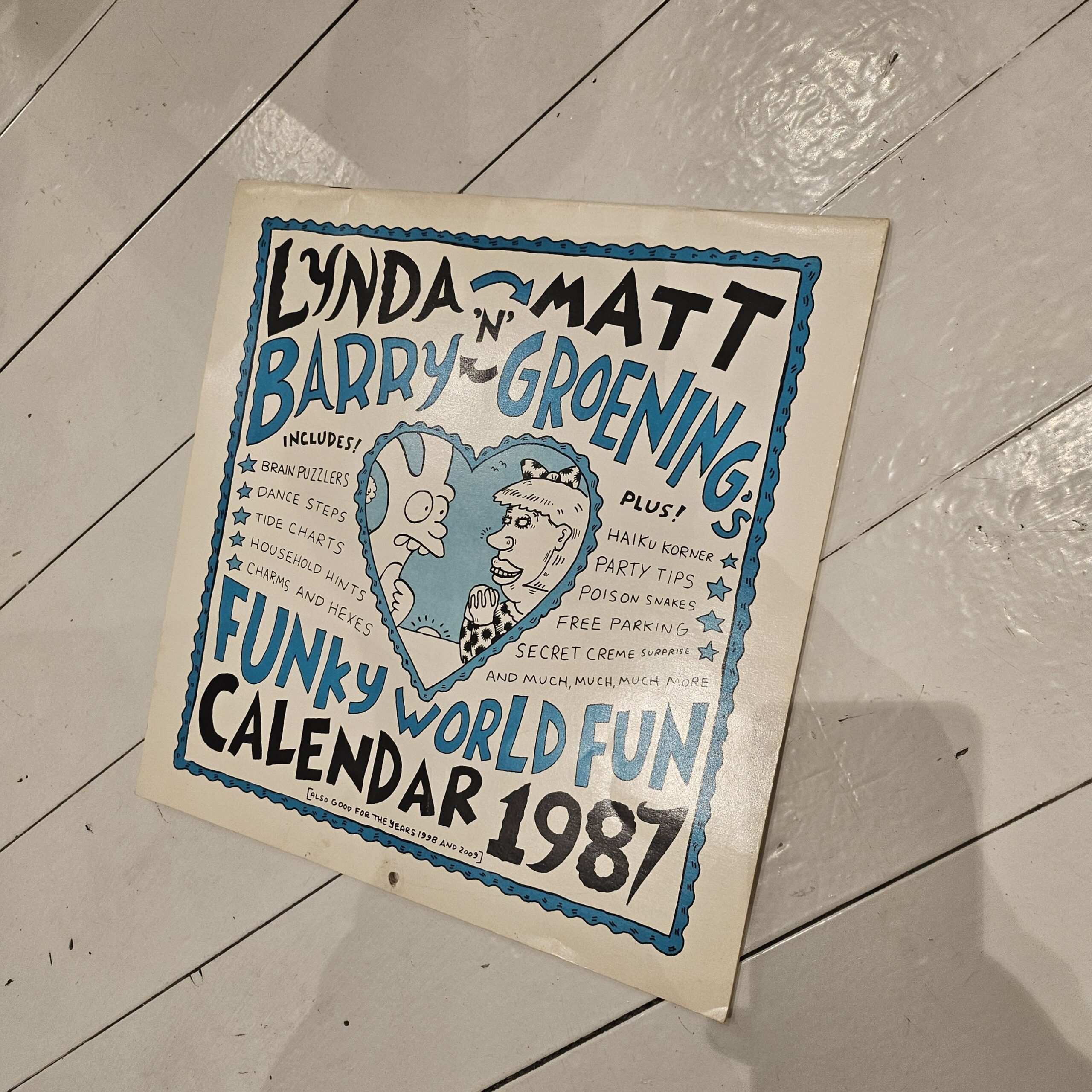

I’m not sure whether this is a joke or not. (The bit about the pencils.)

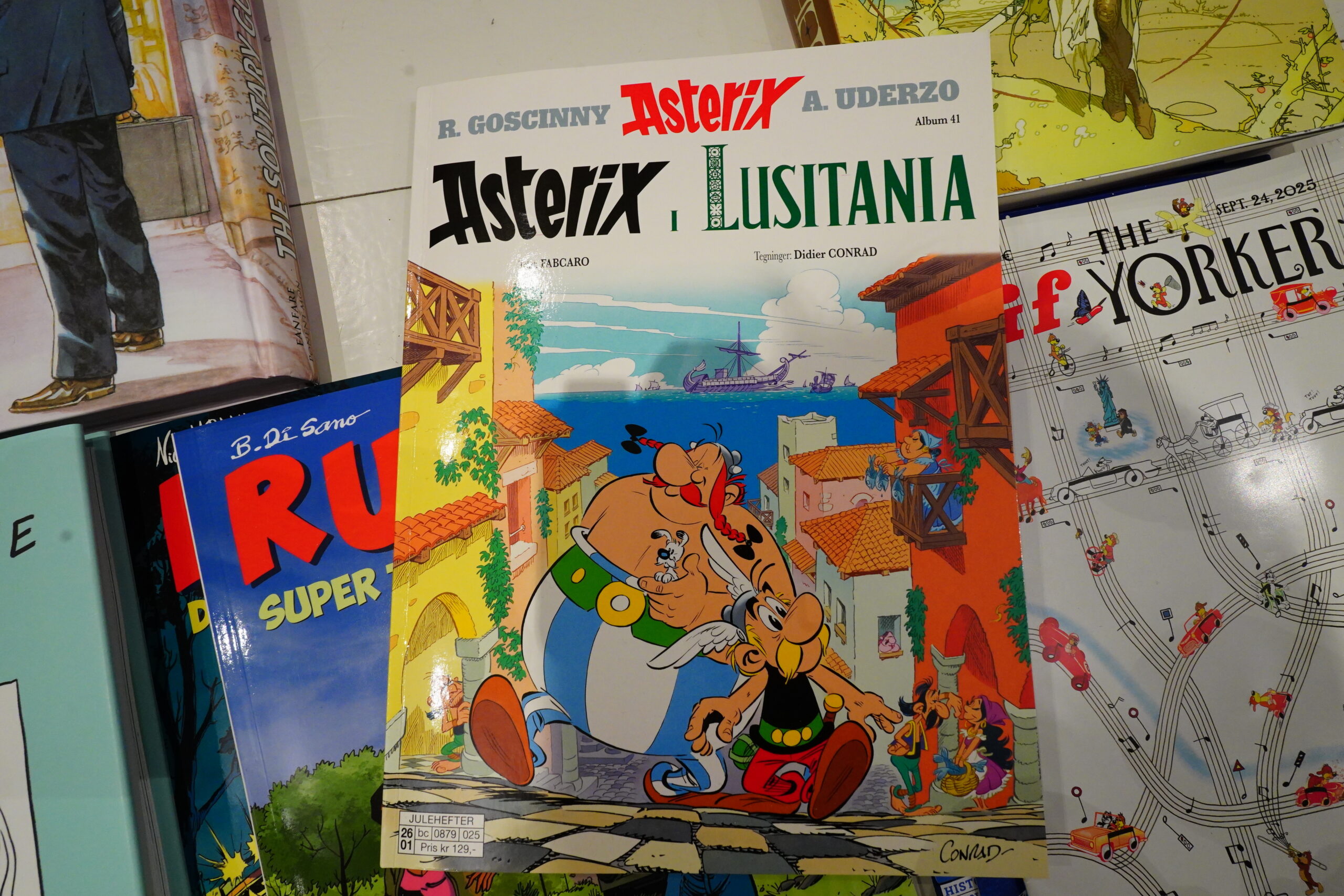

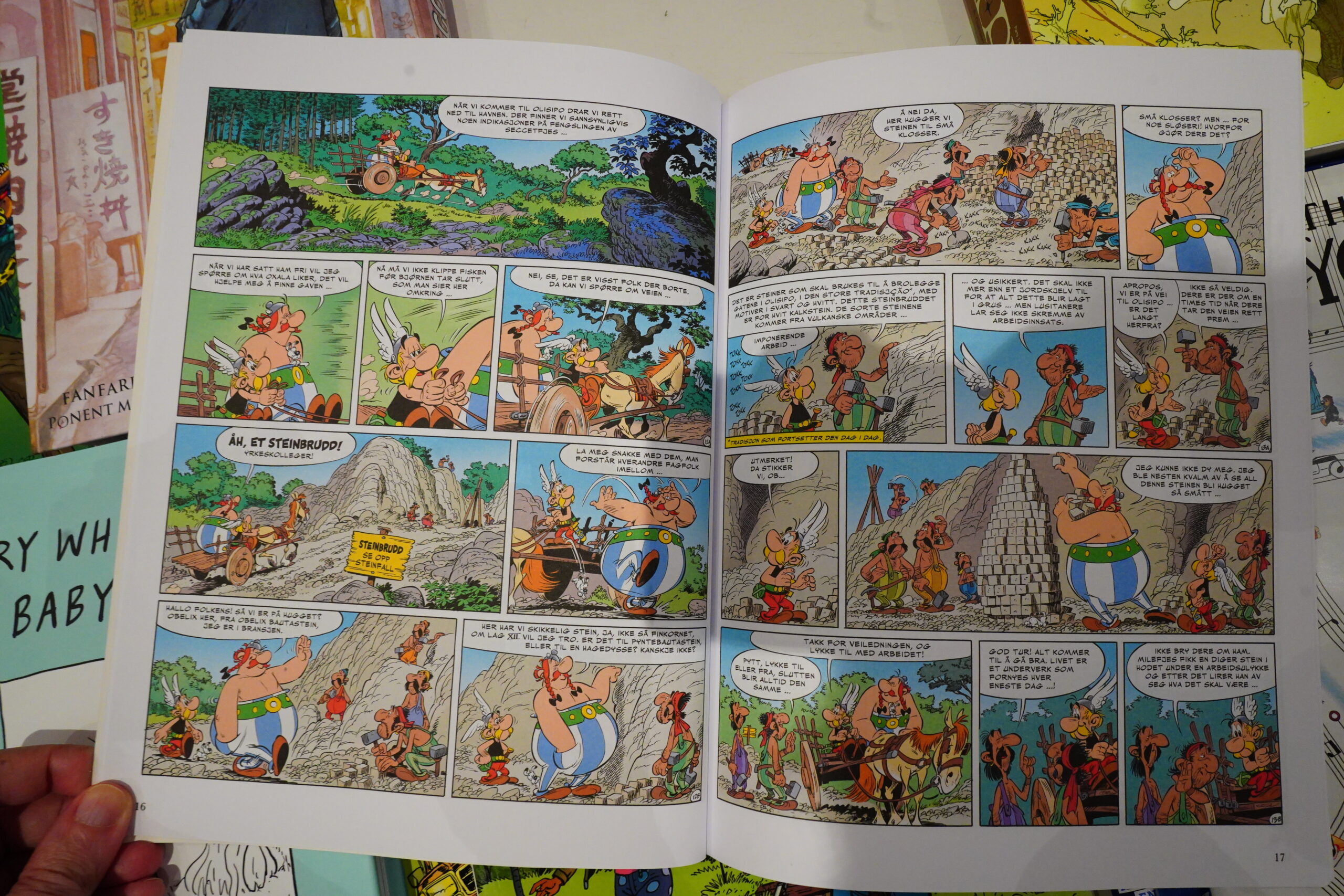

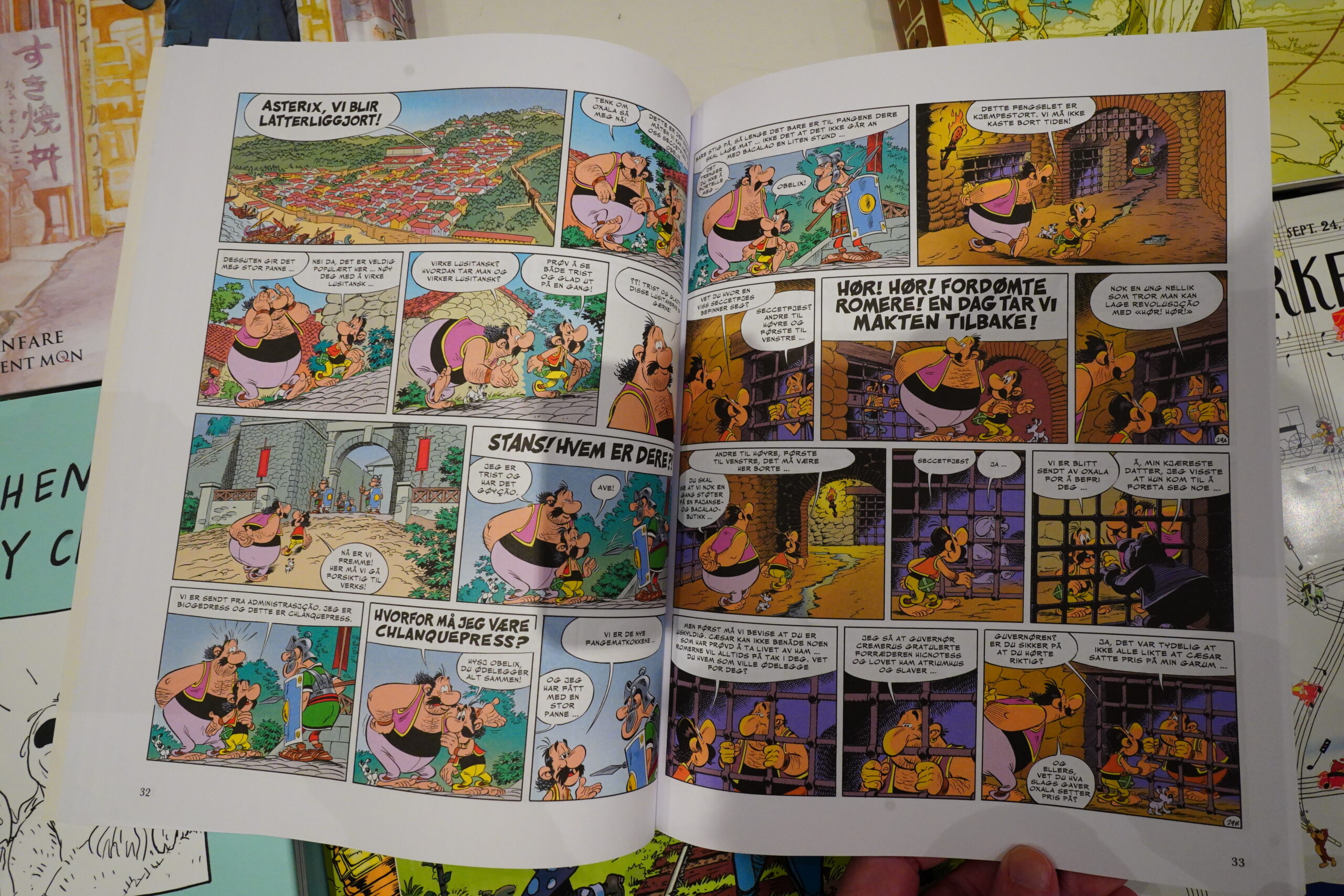

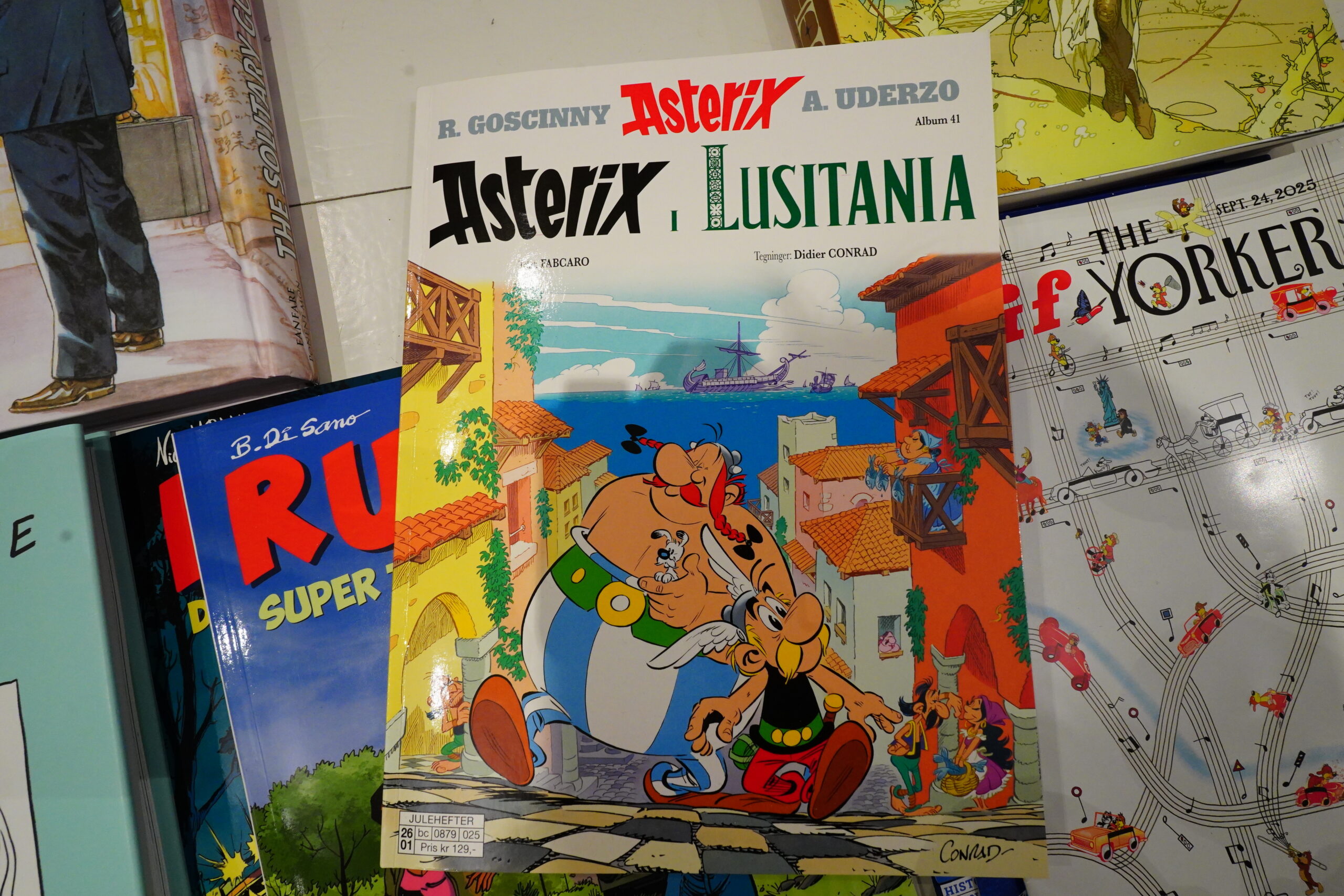

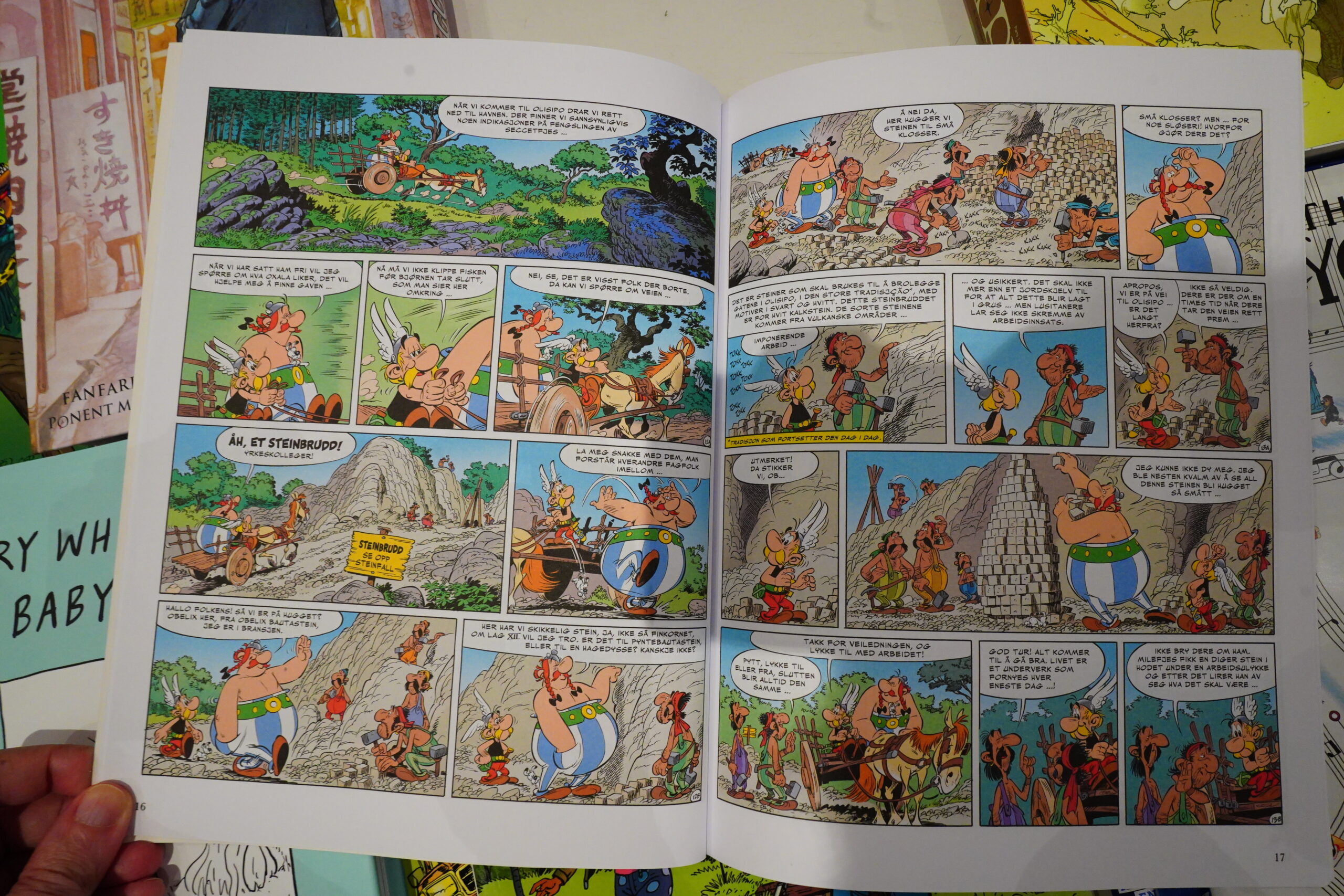

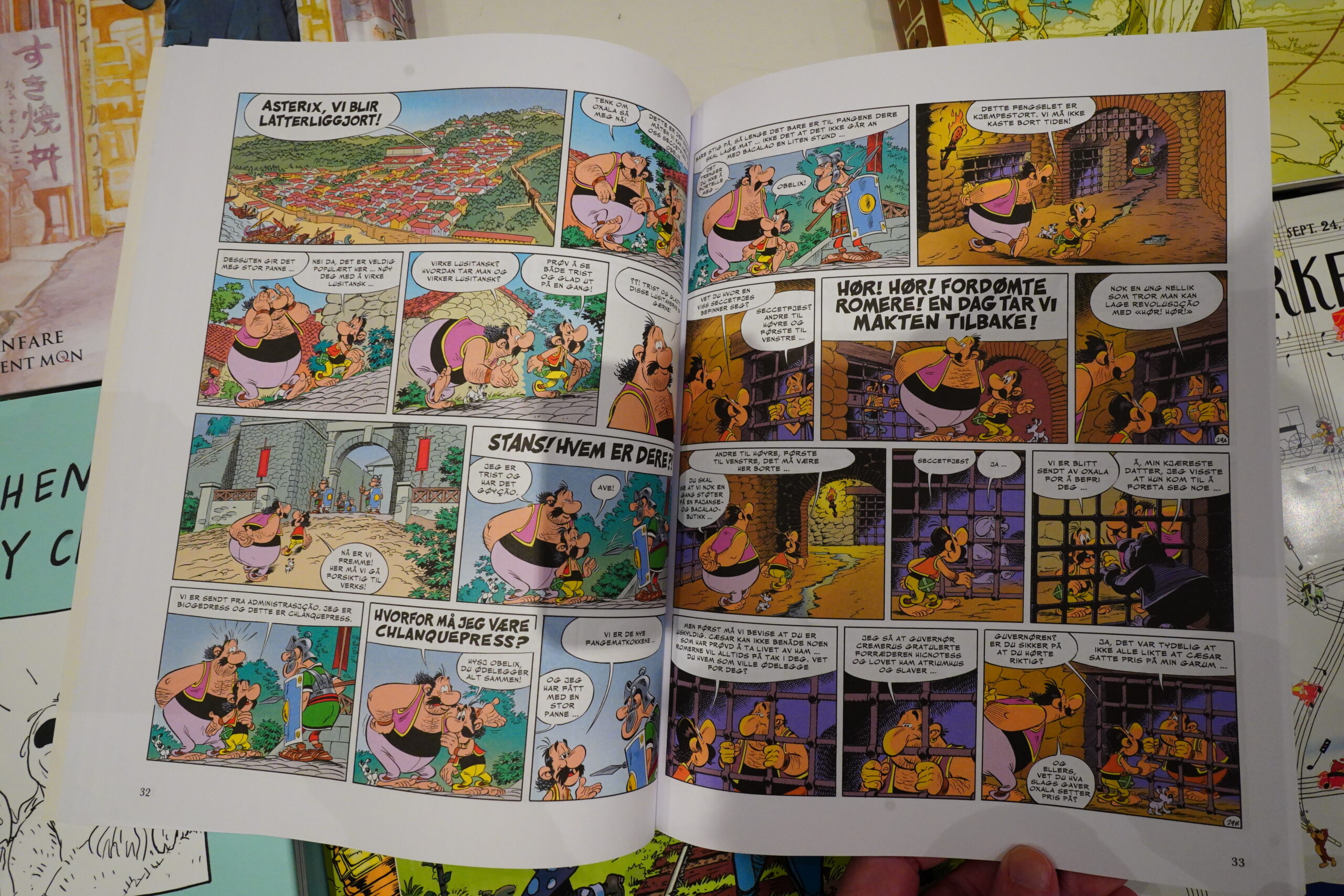

Of course the major comics event last year was Astérix en Lusitanie, which has sold millions of copies all over the world. And I think it’s also been getting good reviews? I didn’t get around to reading it until now, and…

… eh. It’s kinda meh. Fabcaro has them going to Portugal to solve a mystery, and we get all the classic elements, but it also feels a bit like reading the Wikipedia article about Portugal.

It may well have been better in French, though — this Norwegian translation is just awful. Asterix is all about the gags and word play, and the translator here isn’t up to the task at all. Frequently the characters are just spouting nonsense, and you can surmise that there had originally been a joke there, but it’s just… nothing. We were so lucky with the original Goscinny albums — they were translated by a very, very funny Norwegian (Else Michelet), but she died many years ago. So now we have this, which is just sad.

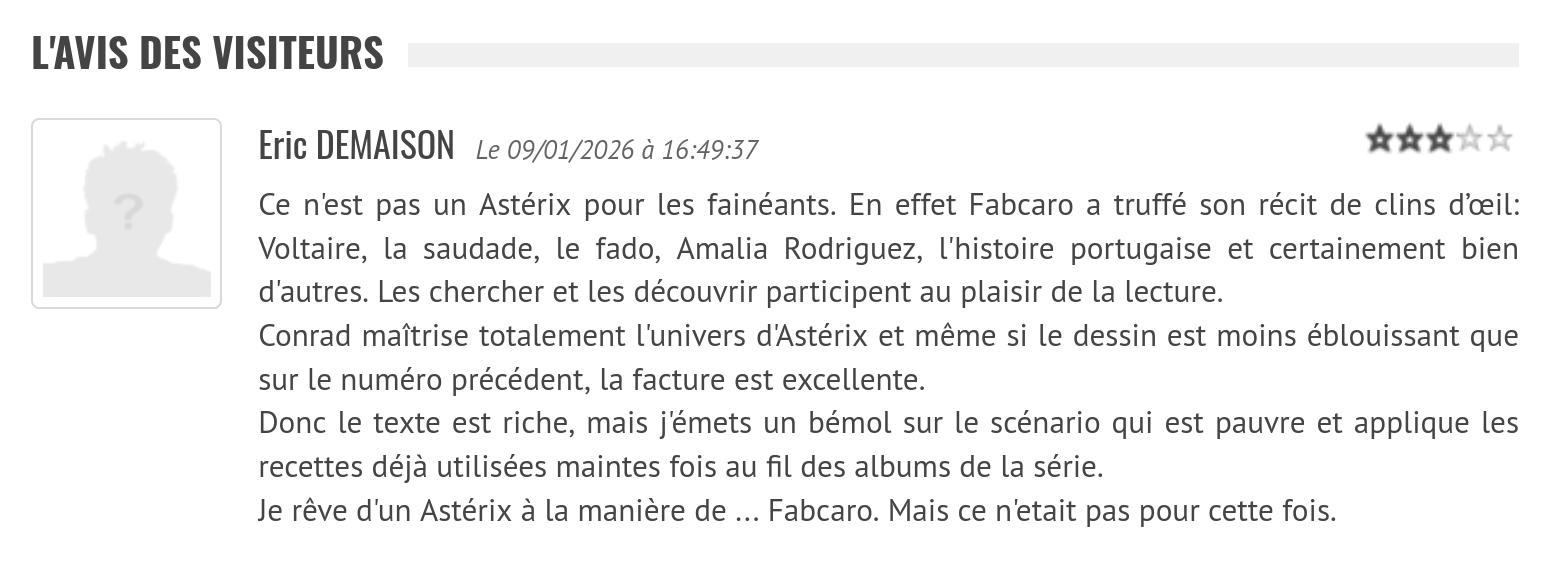

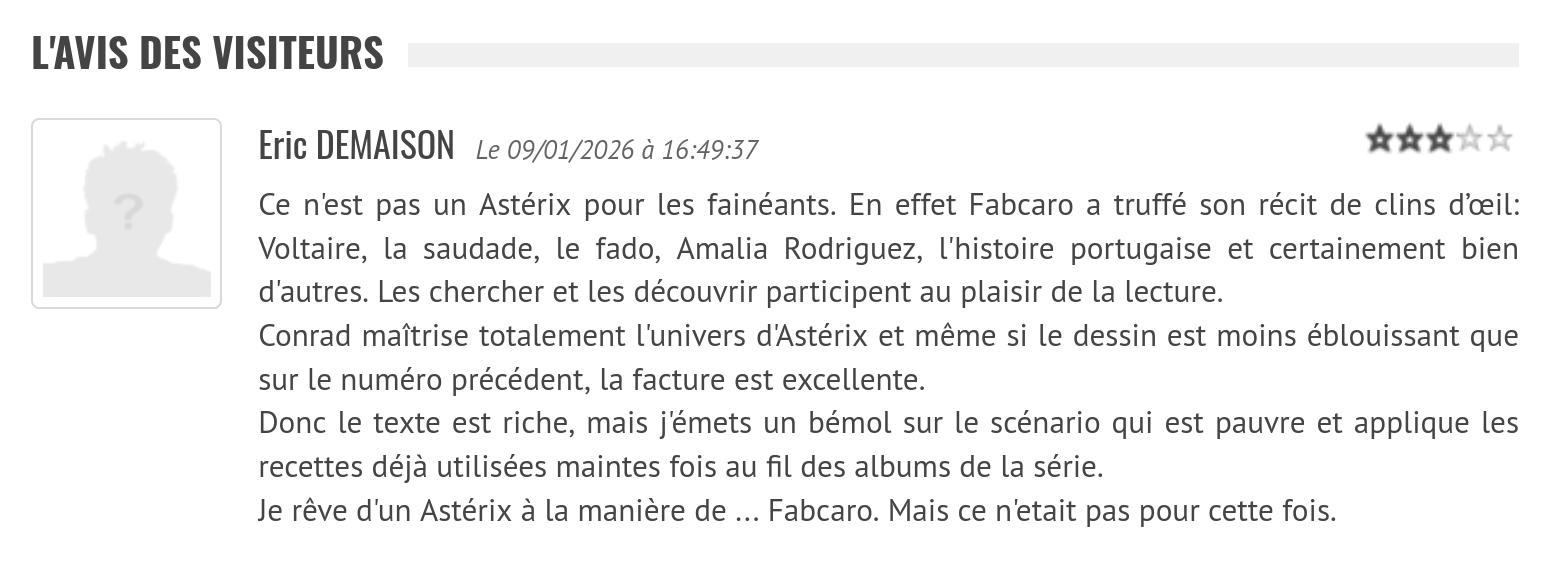

On the other hand, perhaps it sucks in French, too? Let’s see what the comics nerds say:

Well, he likes all the historical allusions, but thinks the plot is kinda lame. The others are much the same, so I guess my opinion wasn’t very original.

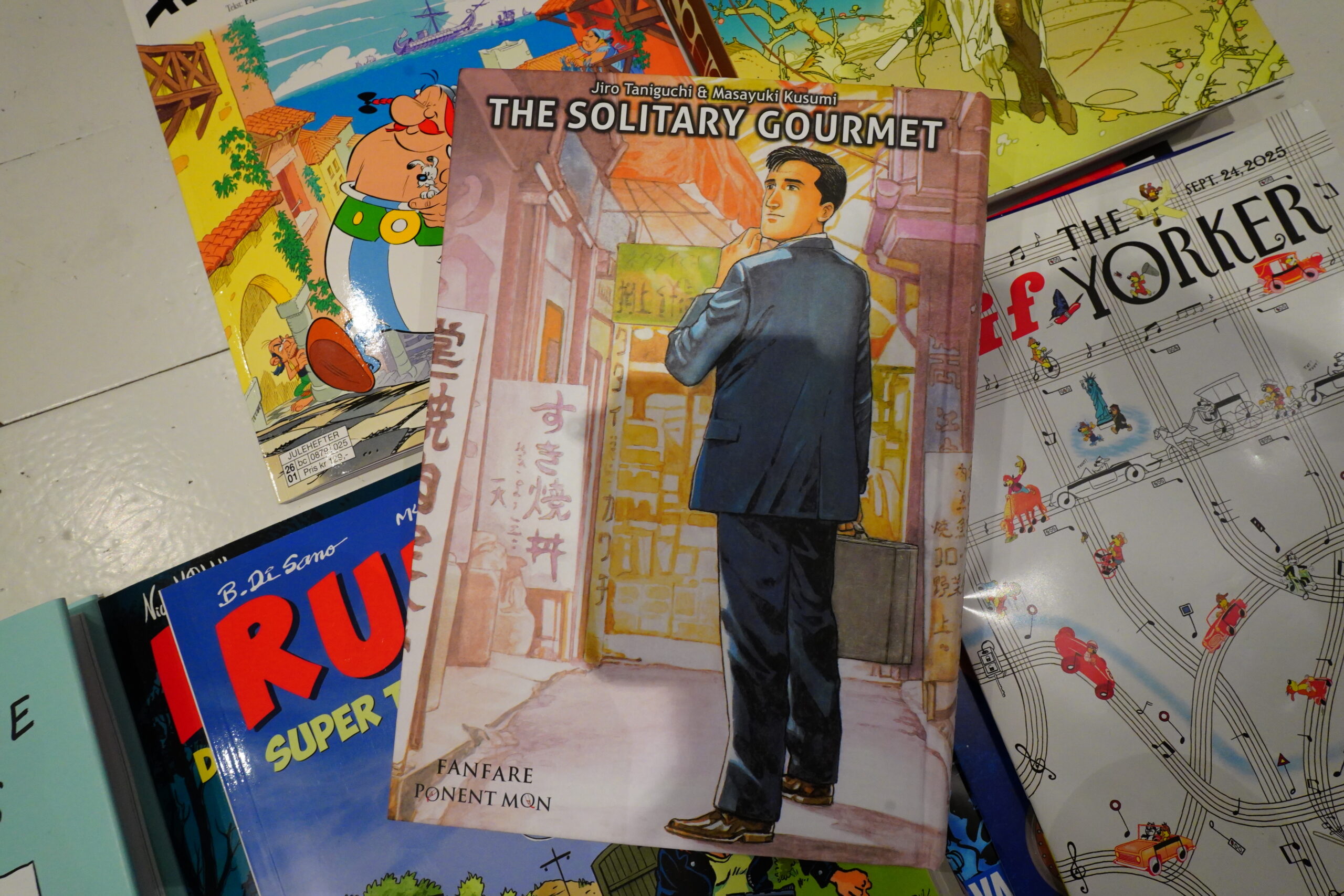

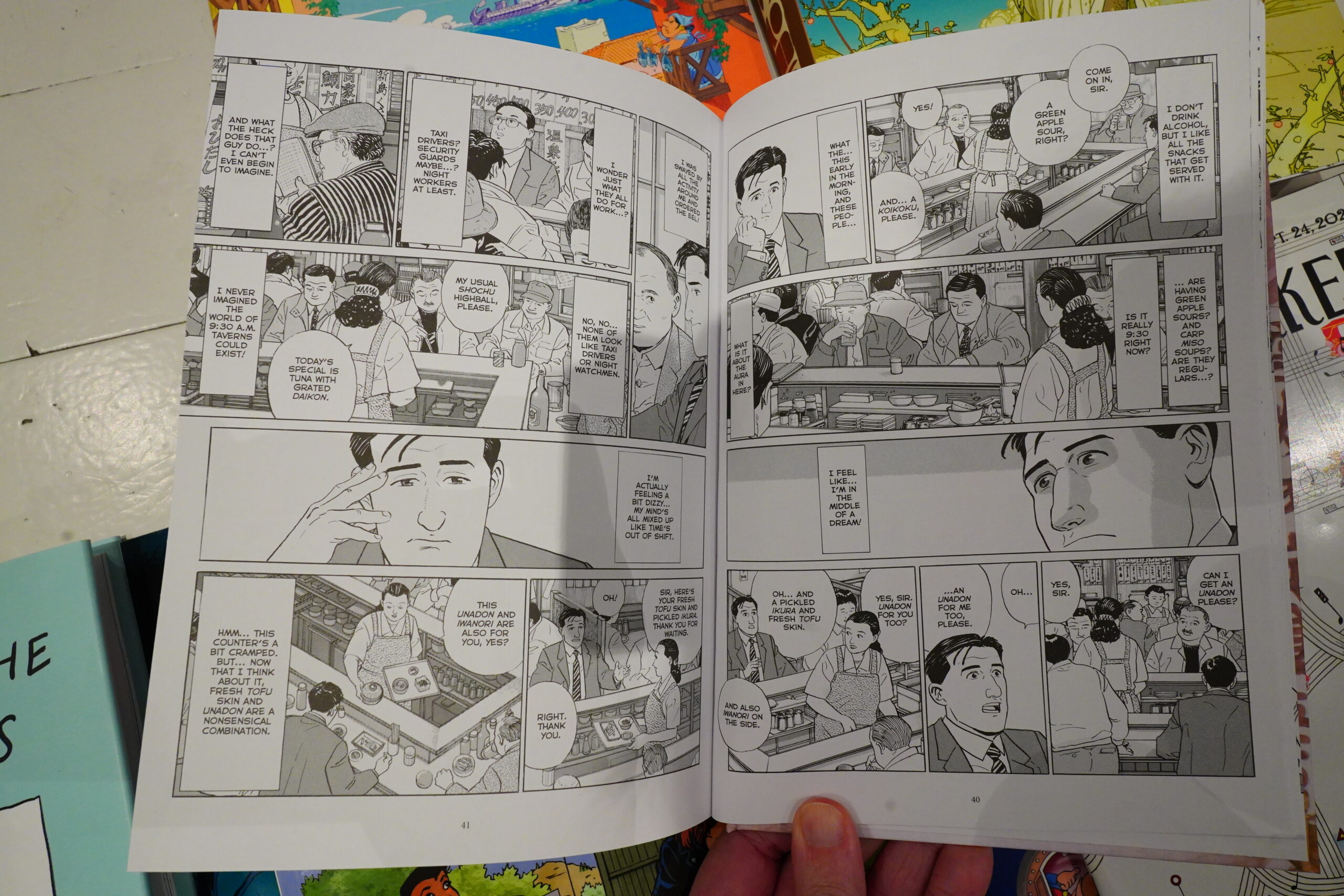

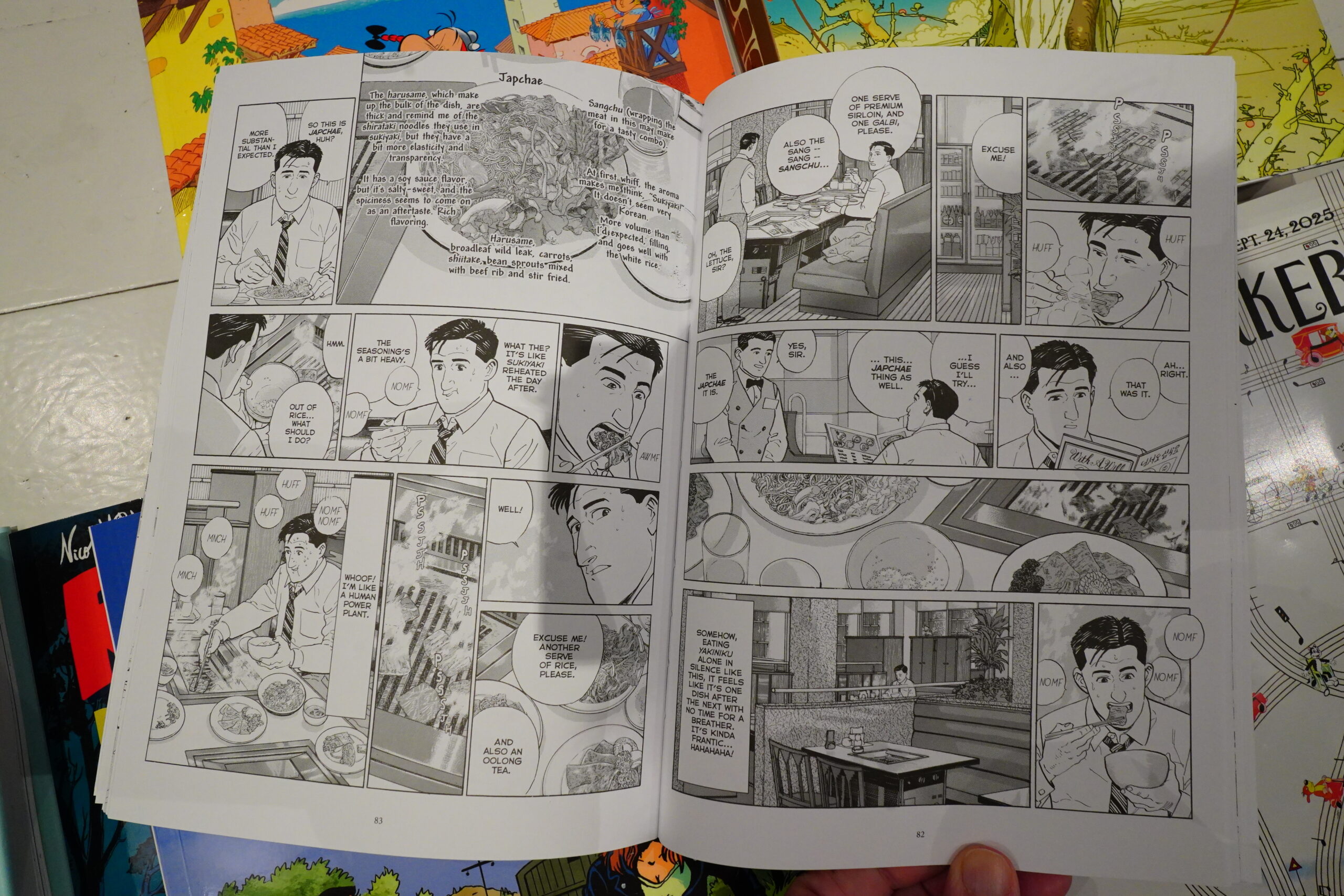

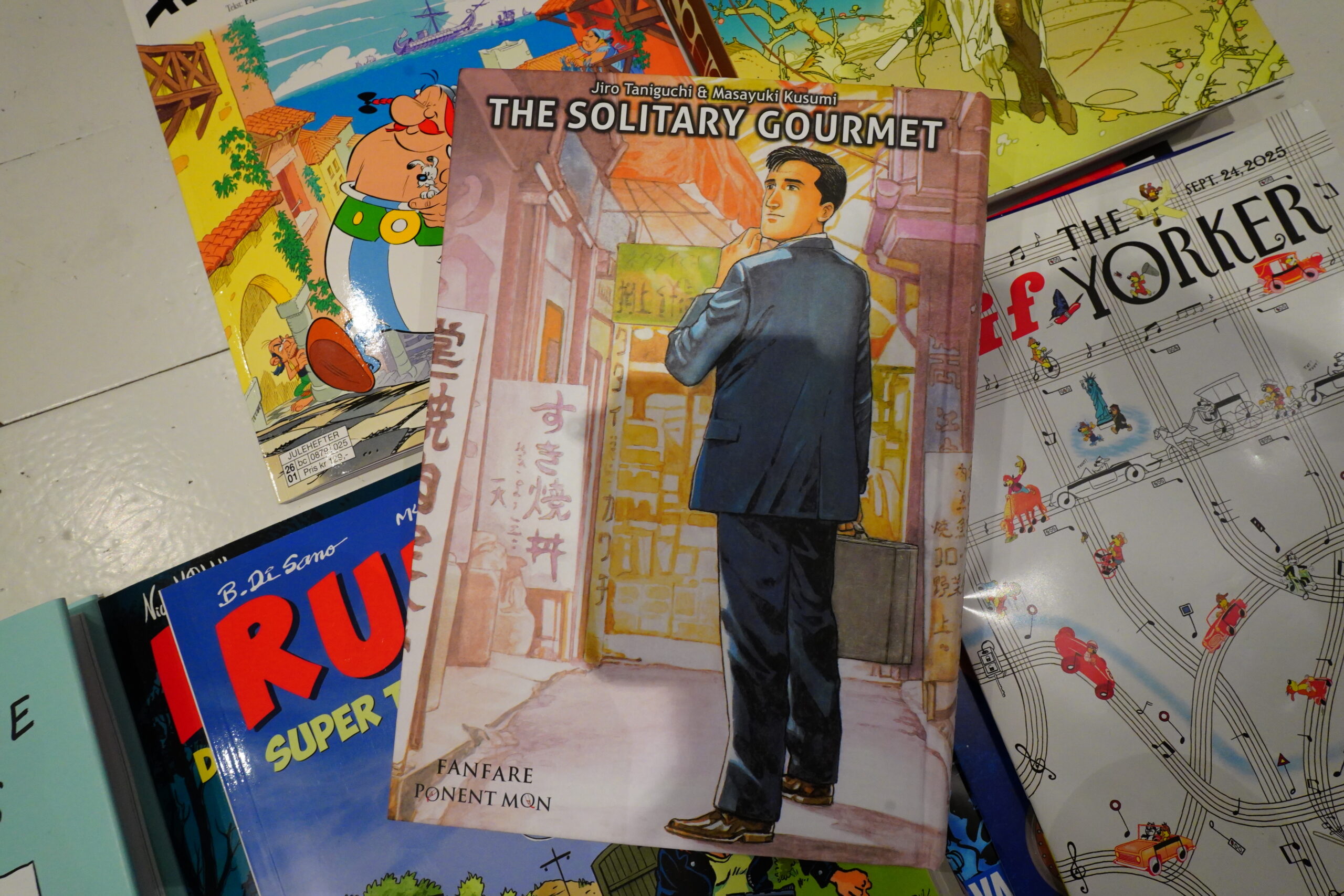

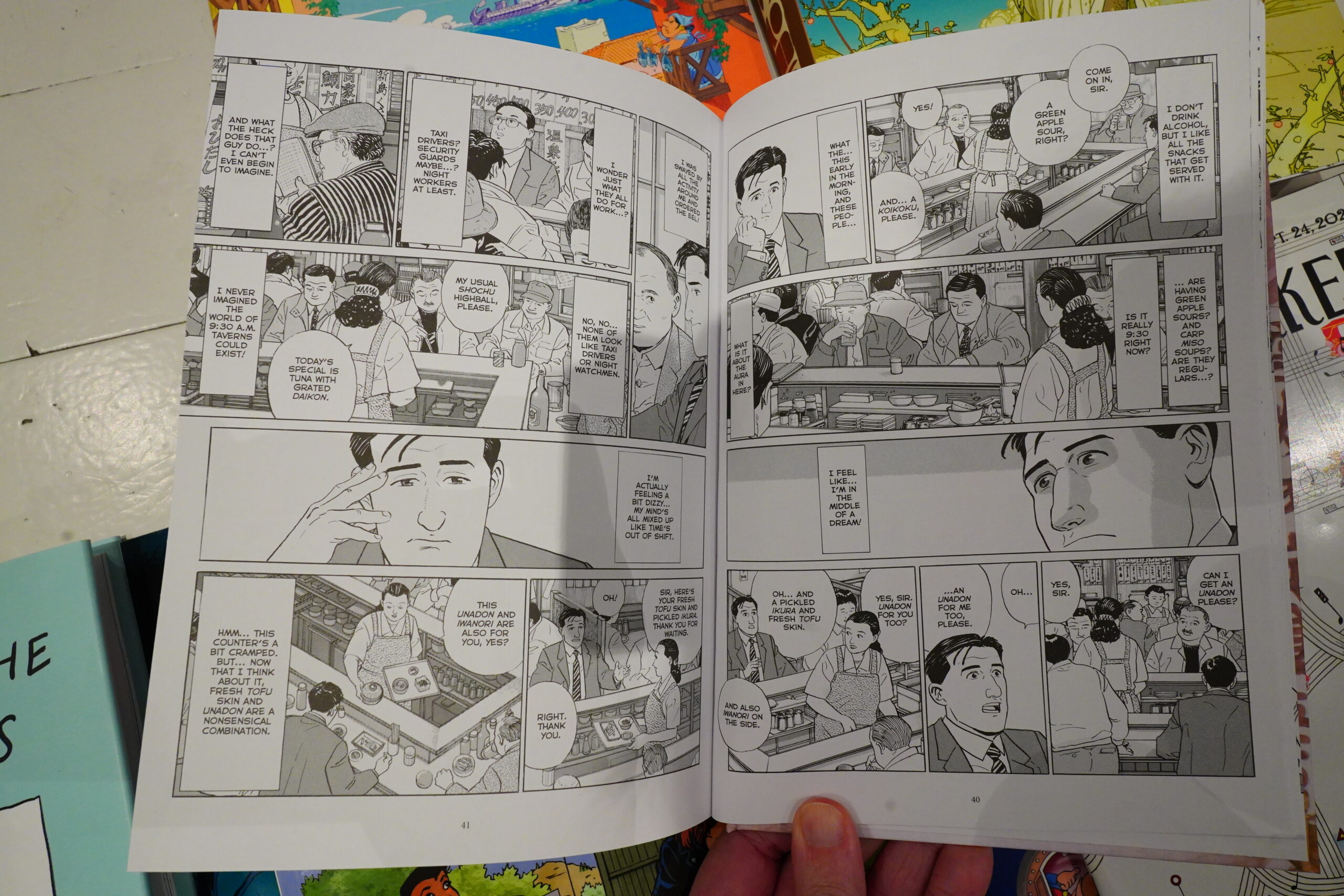

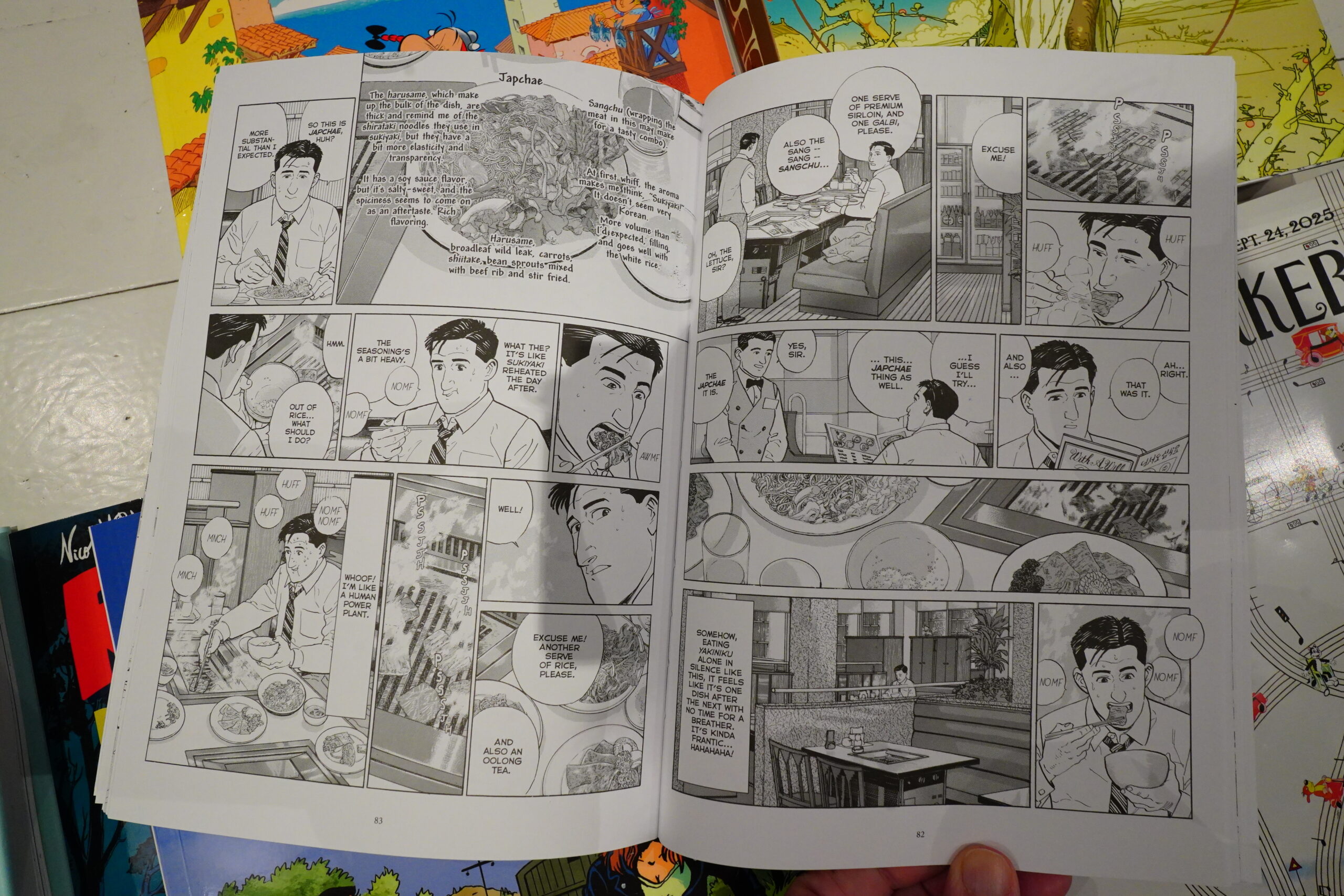

And neither is my opinion about The Solitary Gourmet — I really, really liked it, so I googled it and it seems like everybody else did, too. It’s been made into a TV series and a movie.


The book consists of 32 stories of about 10 pages each, and (almost) every one has the same plot: This guy goes into a restaurant, alone, and eats food. The end. And it’s absolutely riveting, and so romantic — he stumbles upon one wonderful restaurant/bar/cafe/cafeteria after another, and eats stuff that’s either nostalgic or unusual.

I would not recommend reading this in one sitting, because it is, you know, 32x the same story, but it is irresistible. Jiro Taniguchi’s artwork is stylishly stiff and has a very attractive line. Of course much of the appeal is the appeal of mysterious Japanese diners, but the atmosphere is just undeniable.

(You do eventually learn something about our mysterious dinner guest, but not a lot.)

This edition collects two books, and the second (published two decades after the first) isn’t quite as strong as the first. It’s still very good, but not sublime.

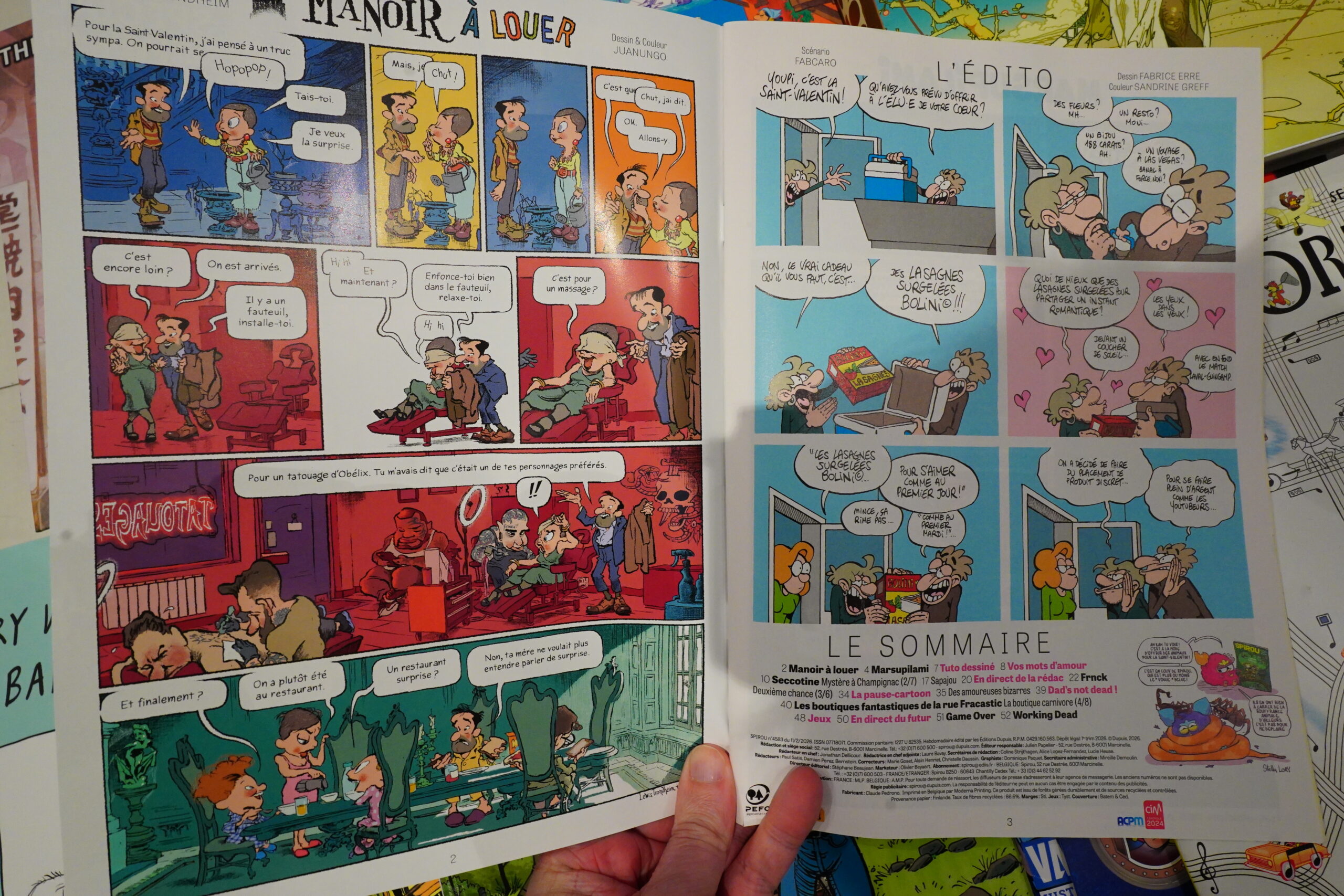

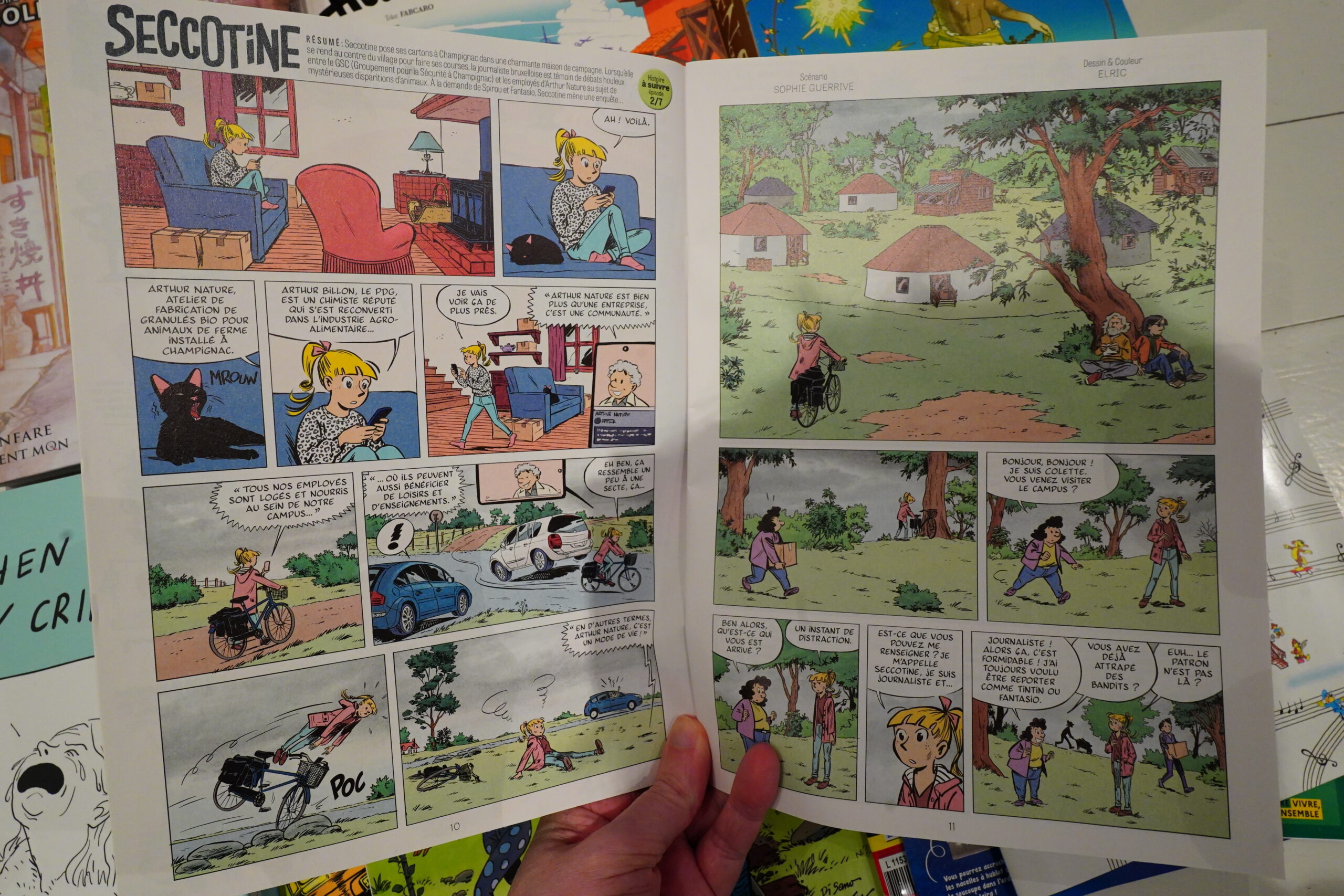

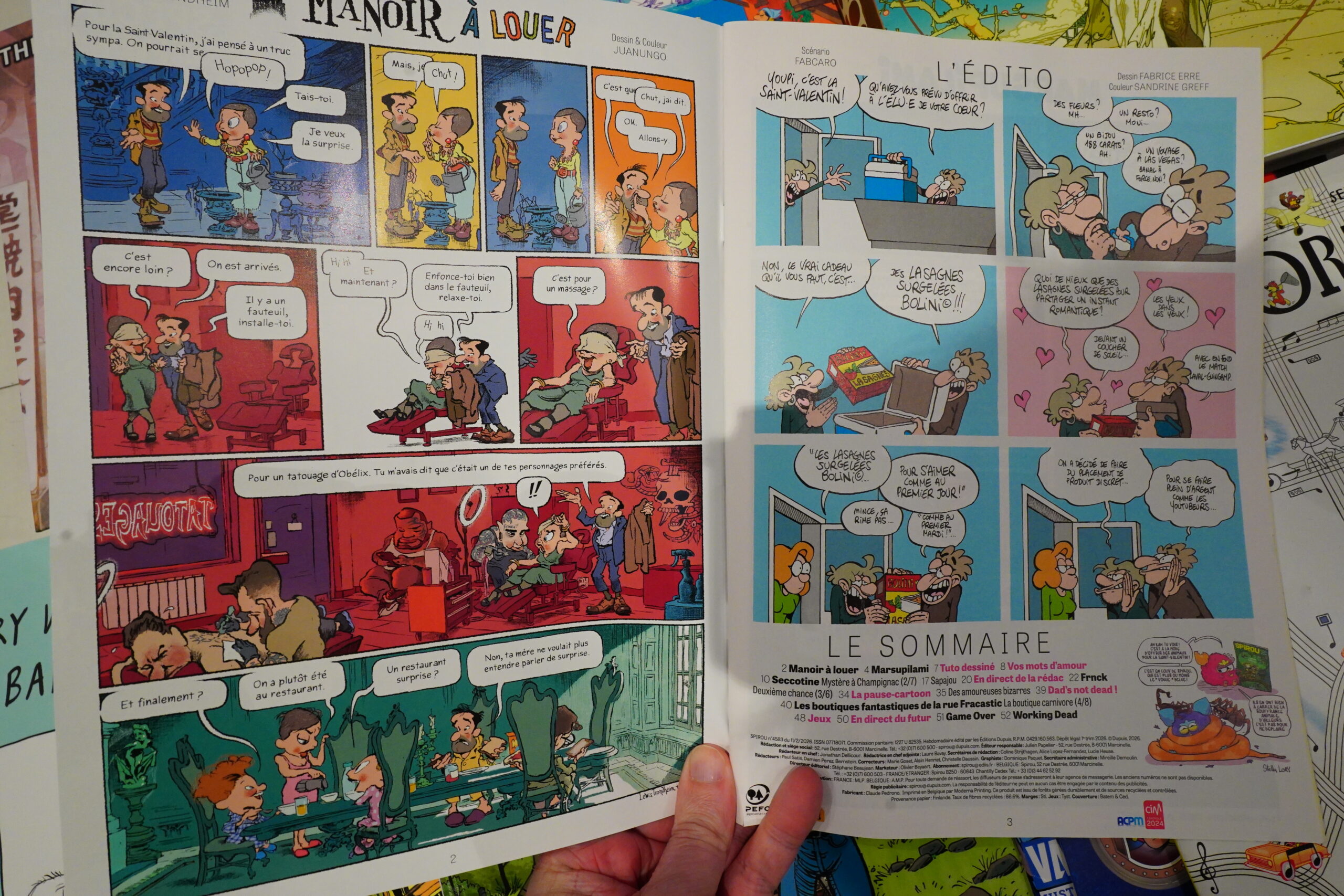

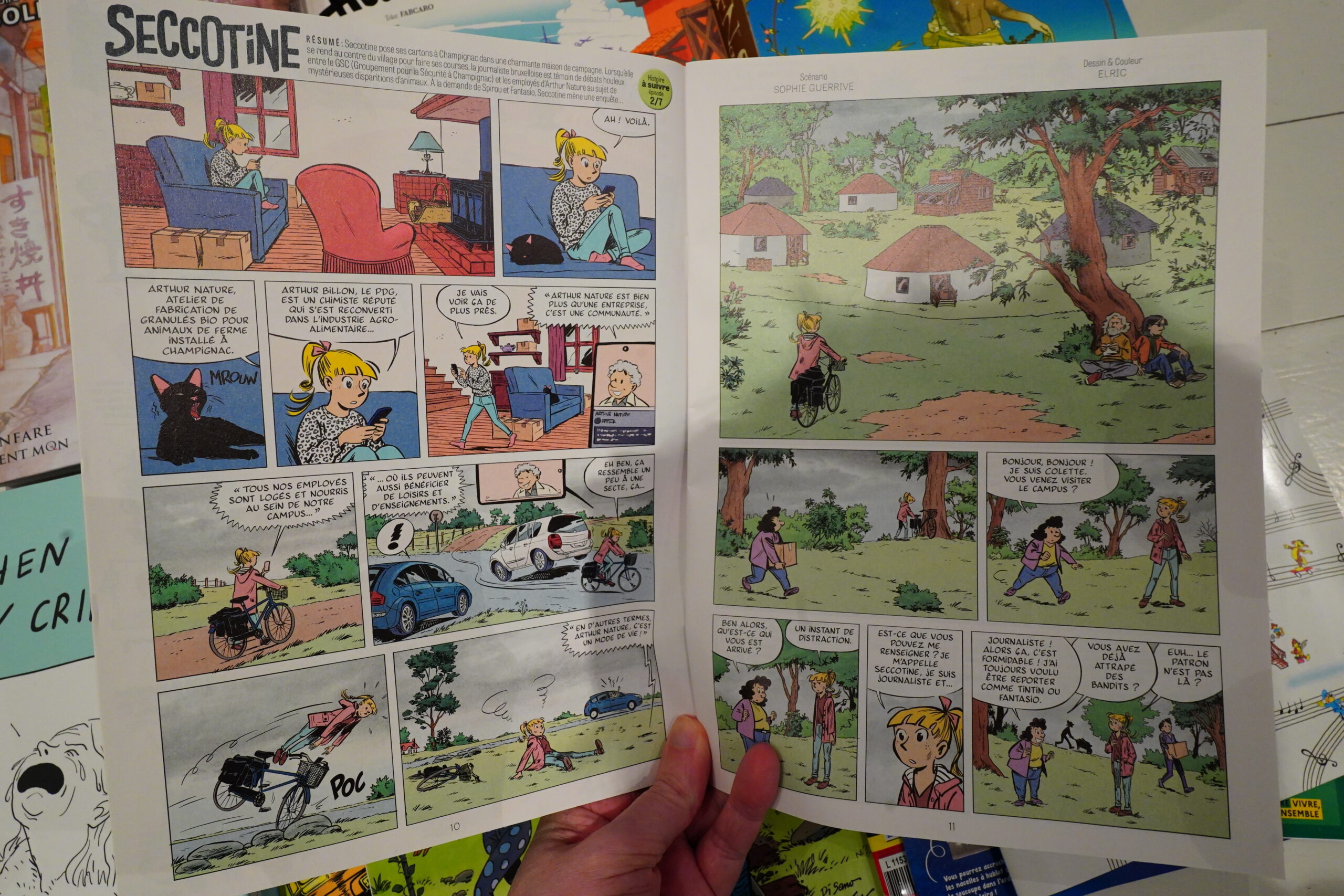

I ran out of Spirous to read, almost.

It’s a good issue.

I’m really into the Seccotine serial.

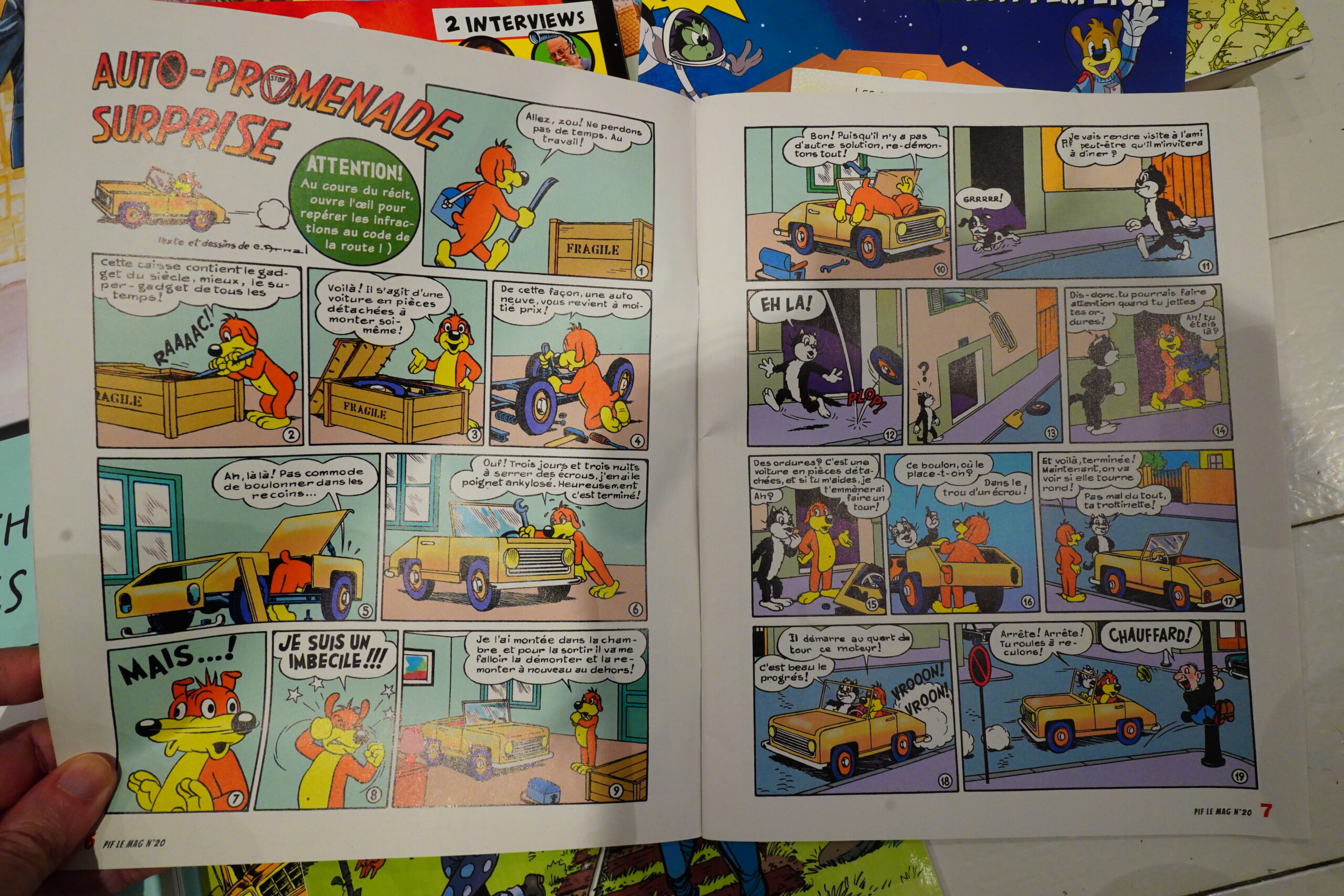

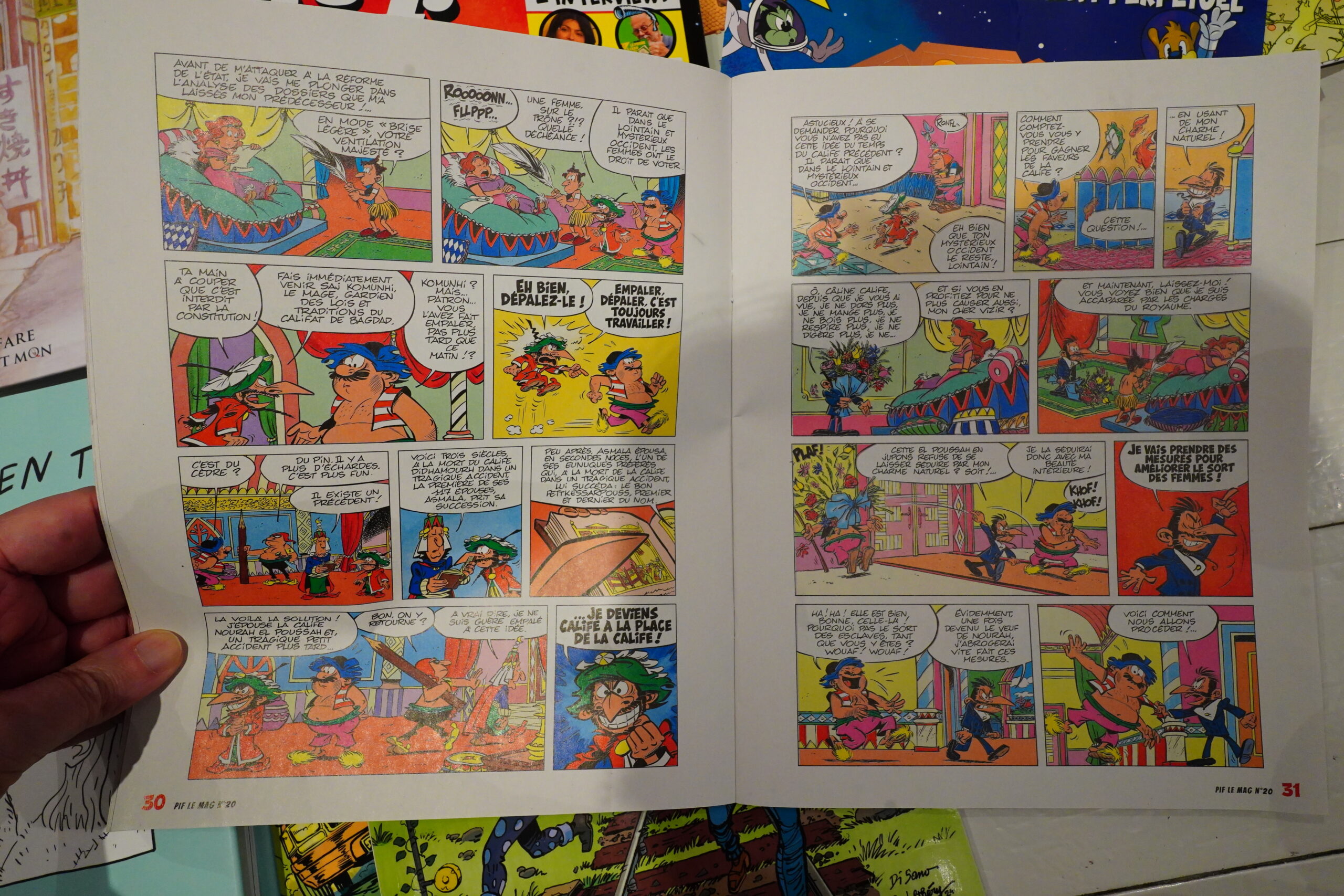

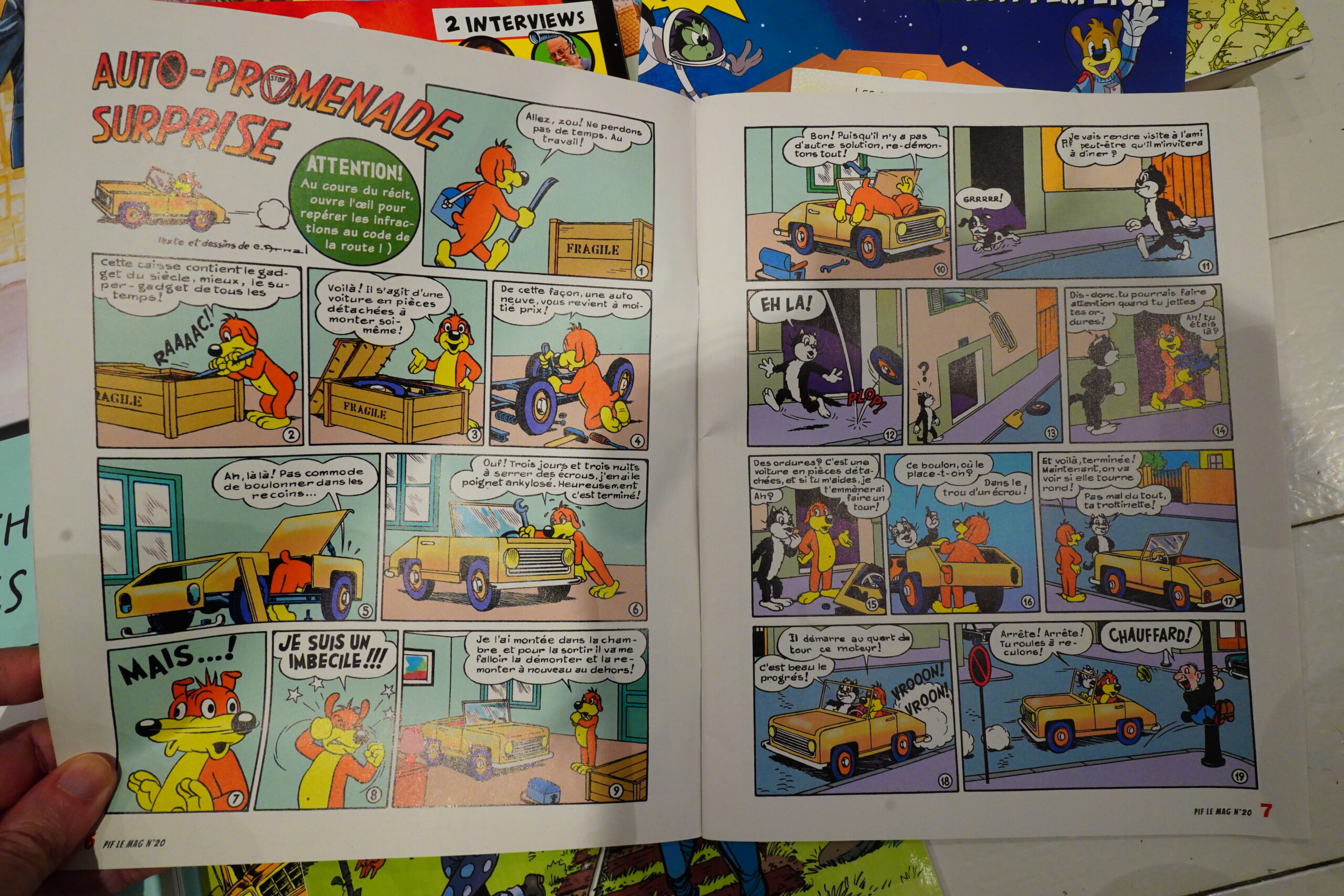

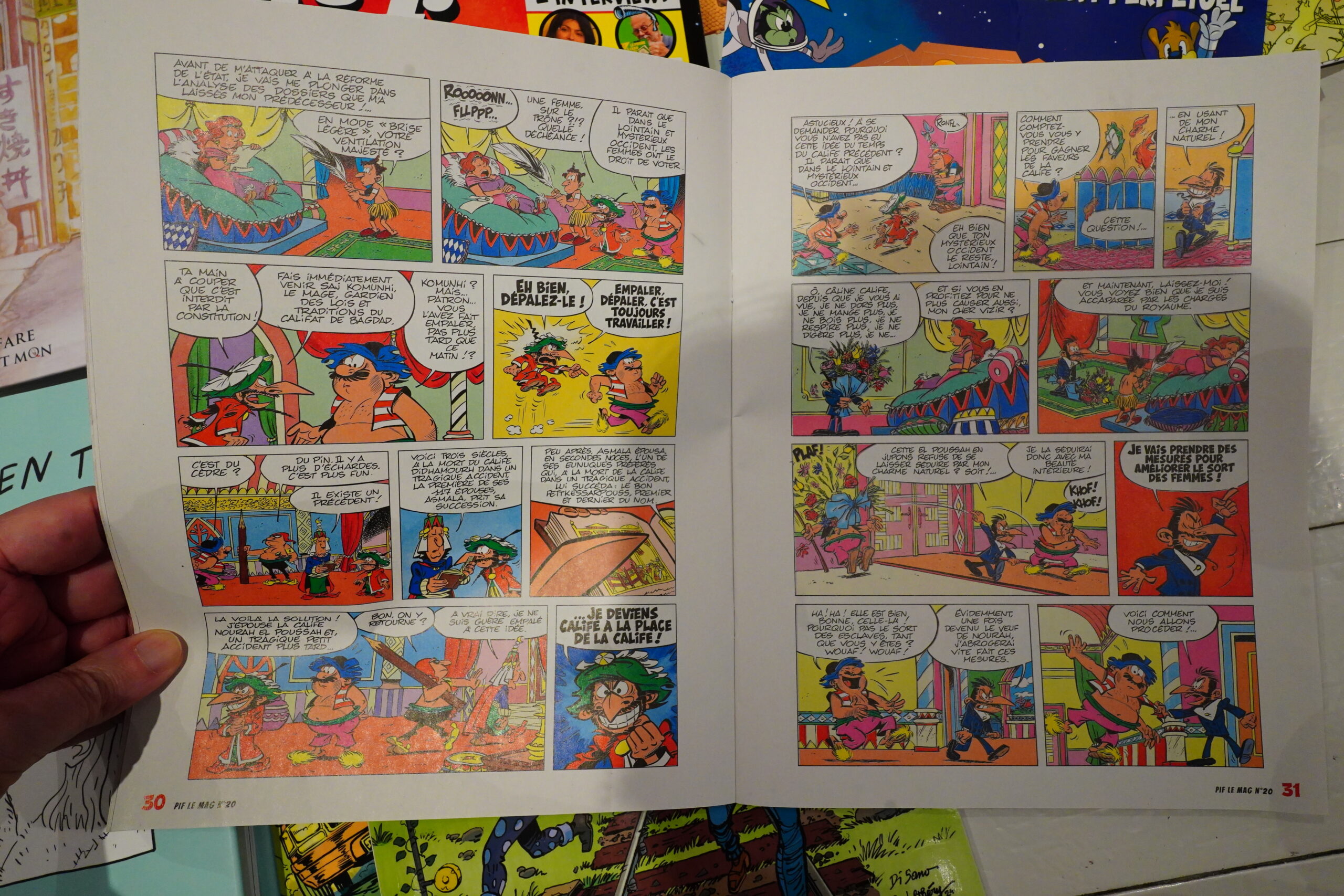

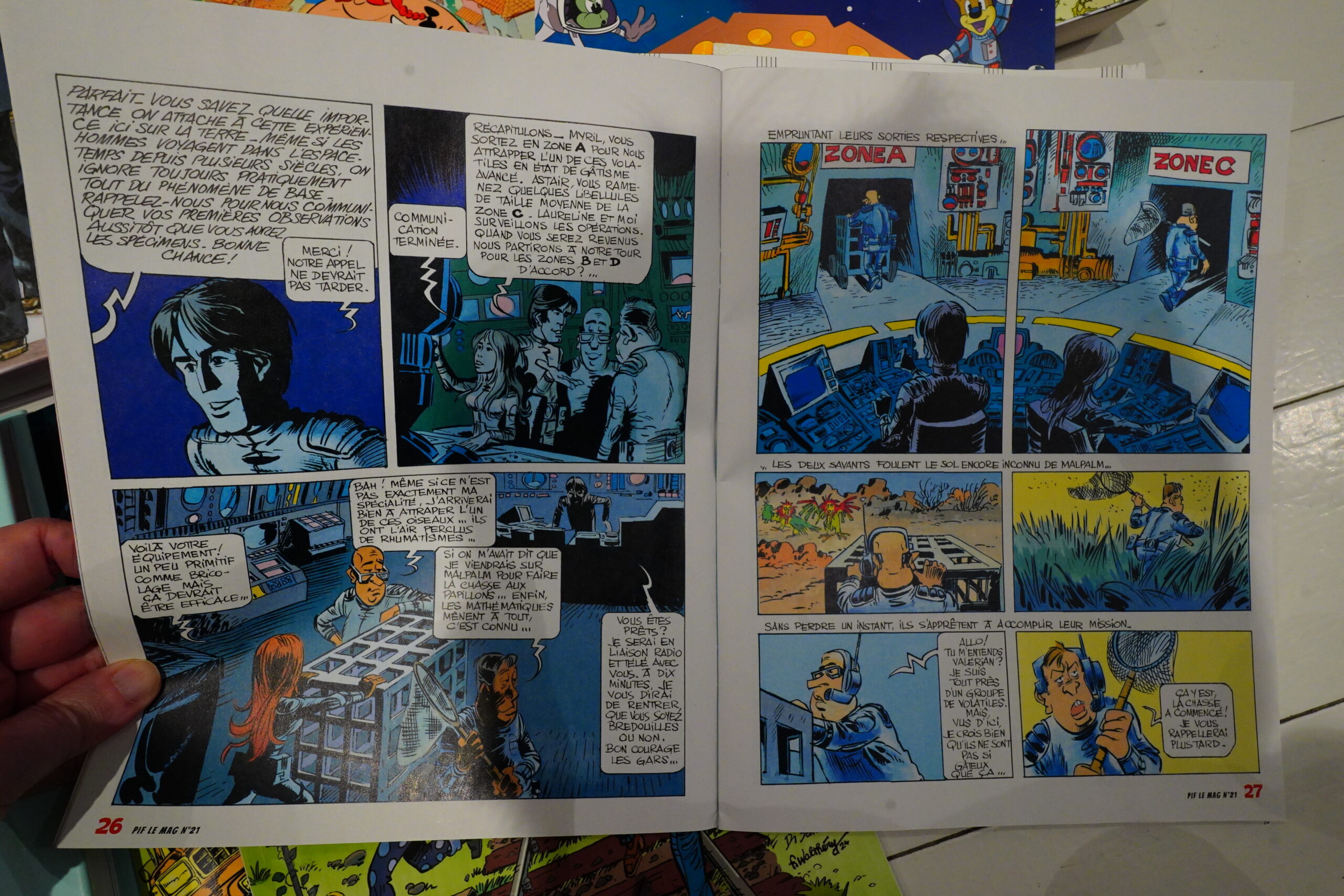

I’m not into Pif Gadget. It’s aimed at even younger children than Spirou, so you’d think it’d be perfect for me, learning French and all.

But no — most of the strips are just bad.

And the ones that aren’t rely heavily on word play, which I don’t really understand much of anyway, so it’s both too childish and too advanced for me at the same time.

They do reprint some old classics, though, which is nice, but I’m letting my subscription lapse.

Even if there’s fun things included, like this perpetual motion machine. (I haven’t actually checked the perpetualness, but I assume it works.)

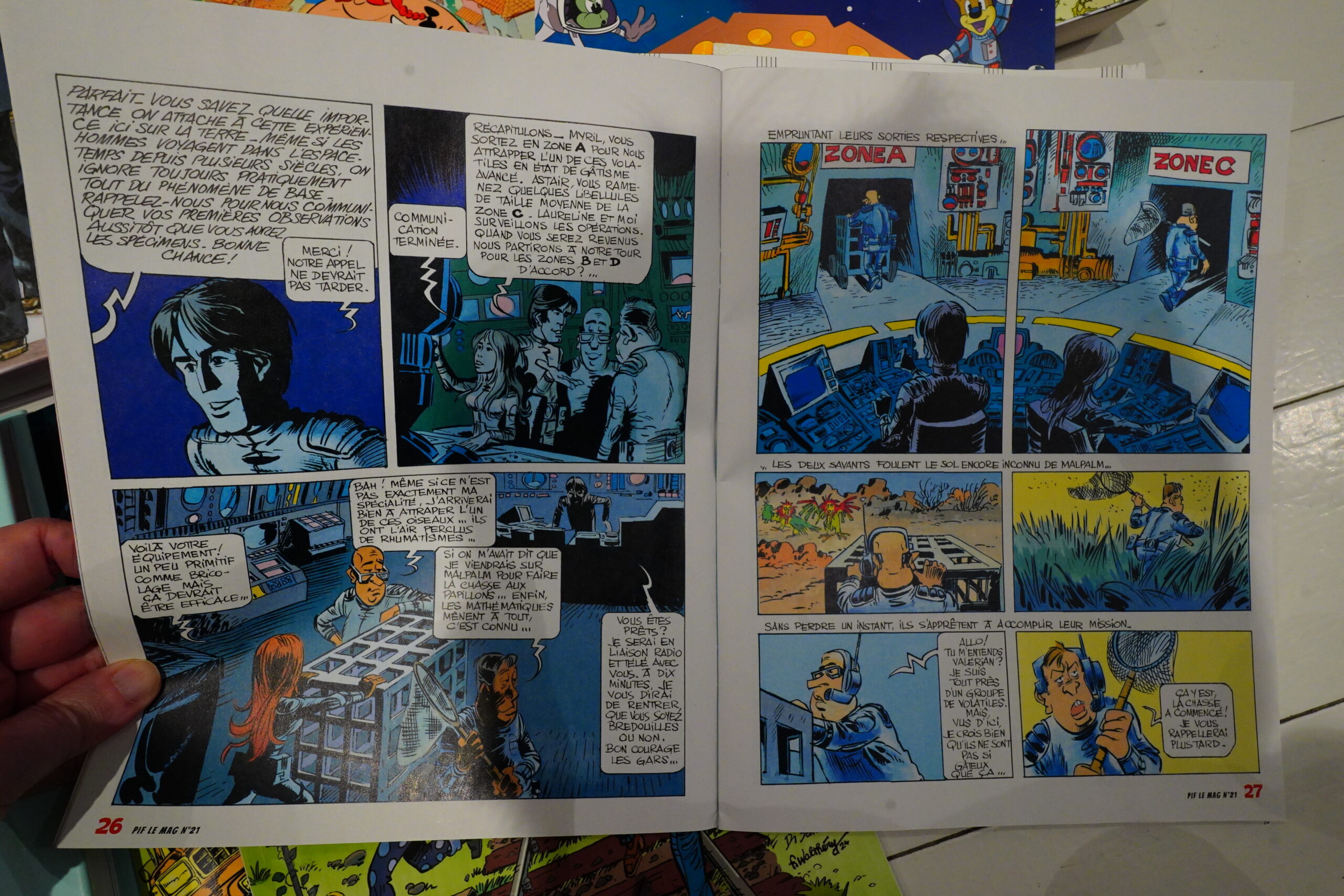

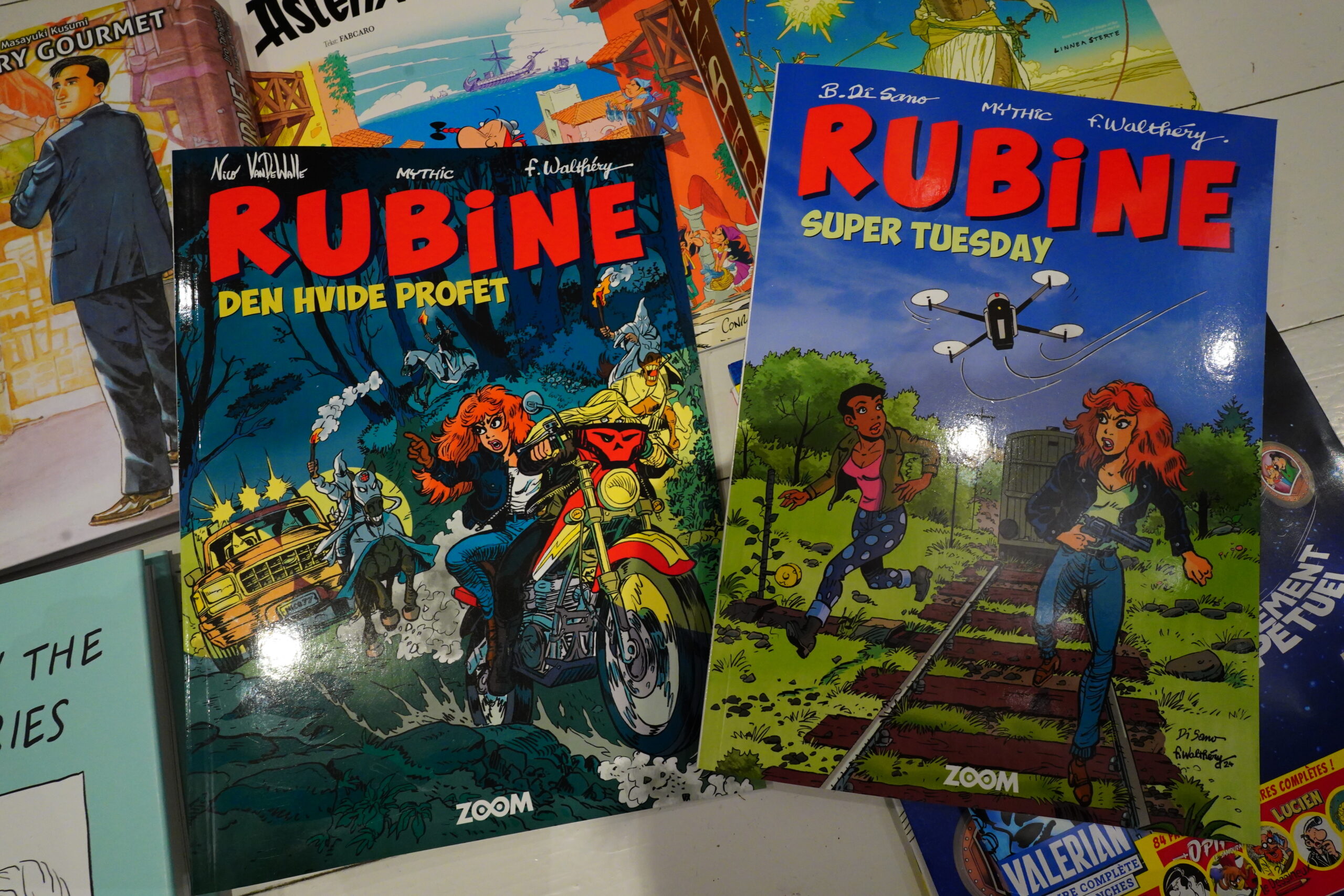

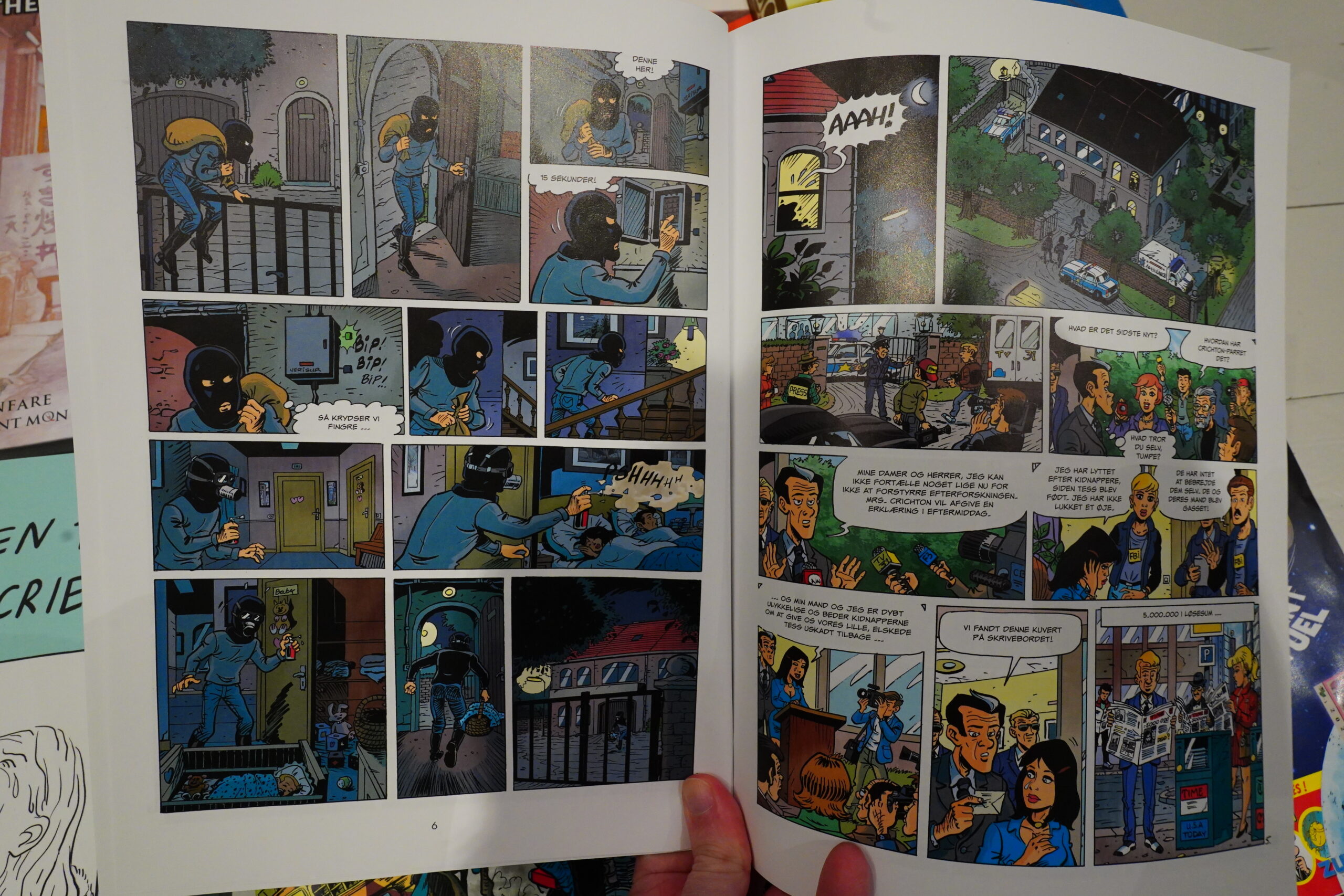

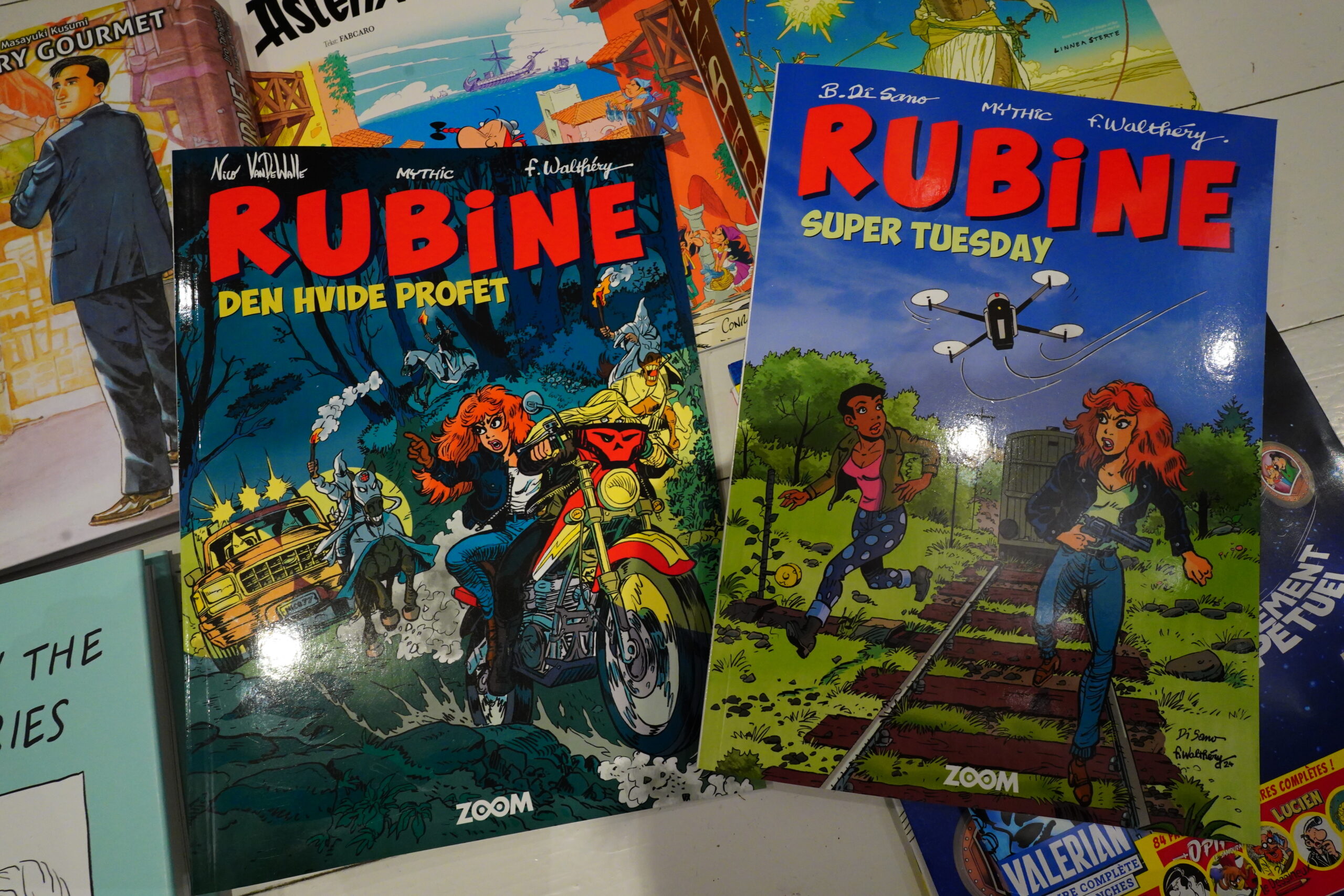

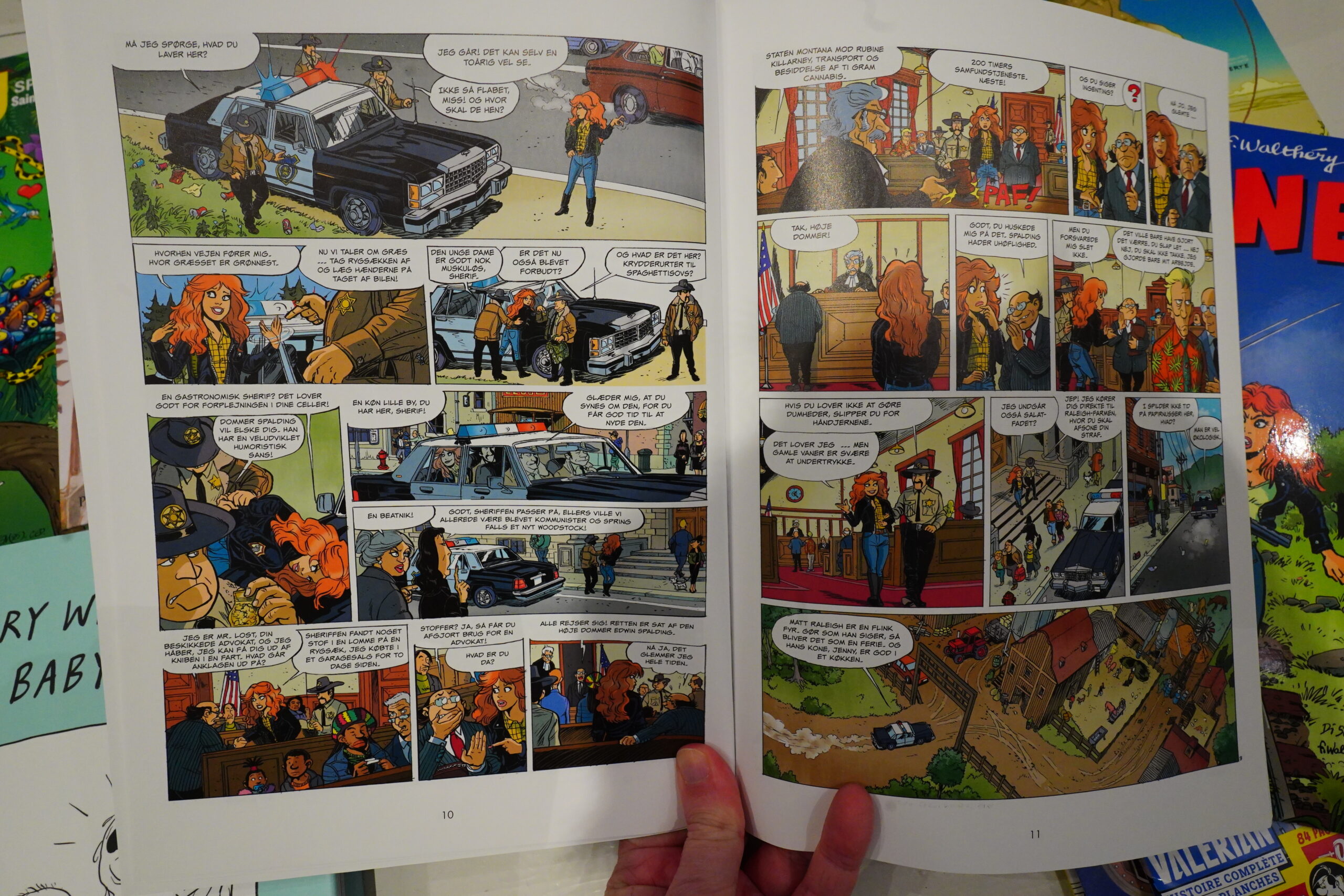

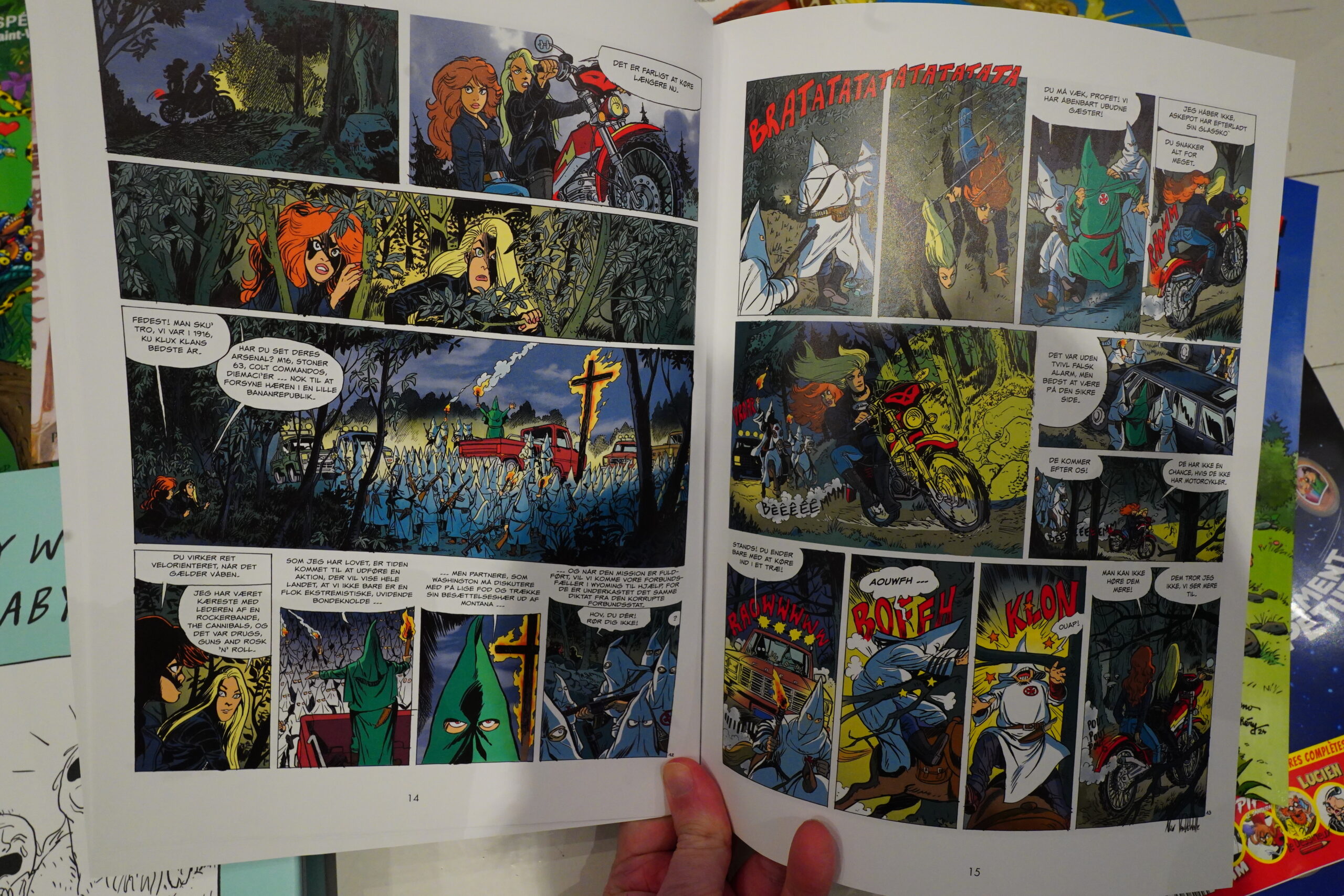

Rubine is one of François Walthéry’s series, but he’s old, so it’s now mostly drawn by others? Does he do inking? And it’s written by Mythic.

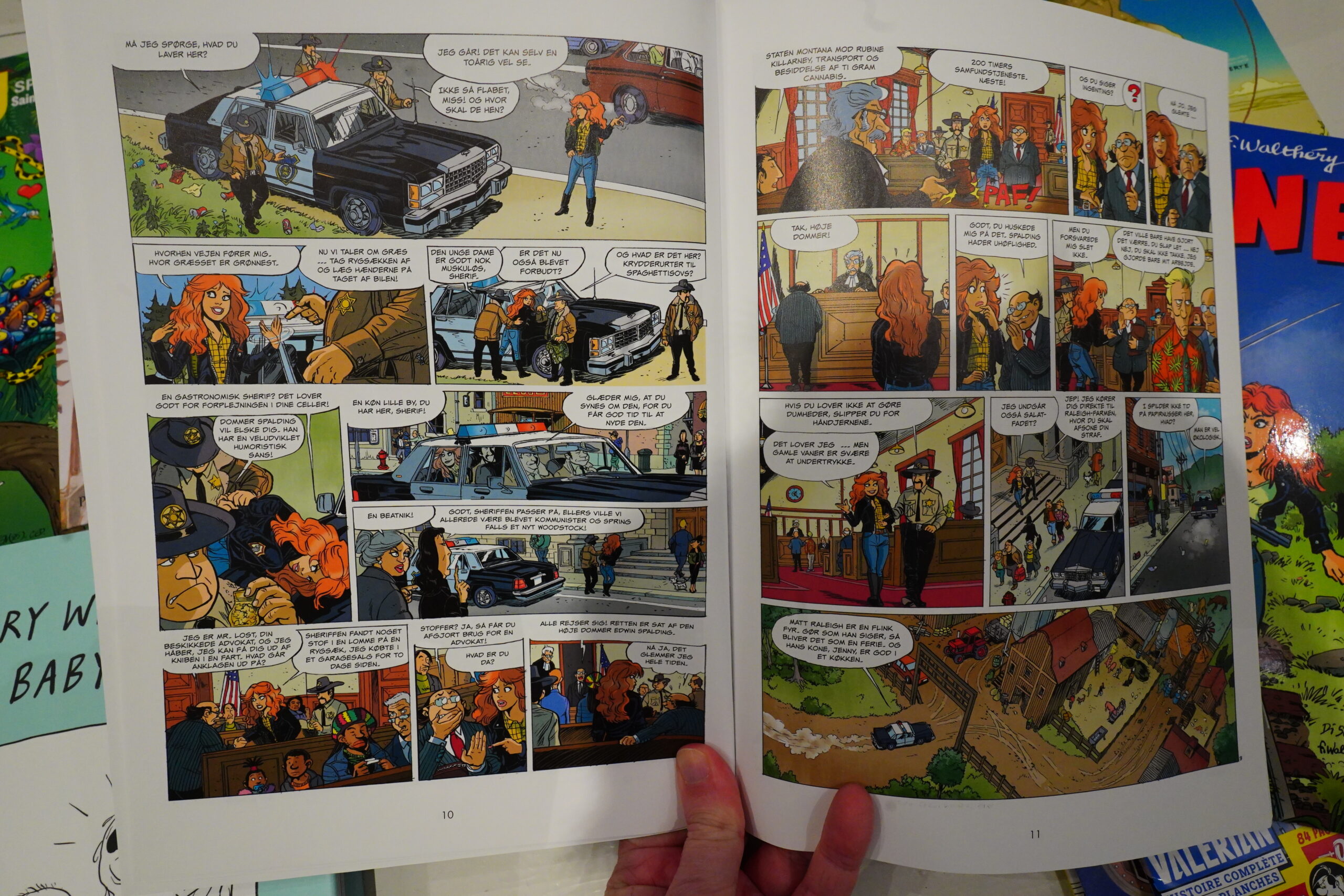

The first one seems very tailored to a European audience in 2026 — it’s all about how awful Americans are. So we have Rubine going undercover in the South and getting arrested by a totally corrupt police force (and judge)…

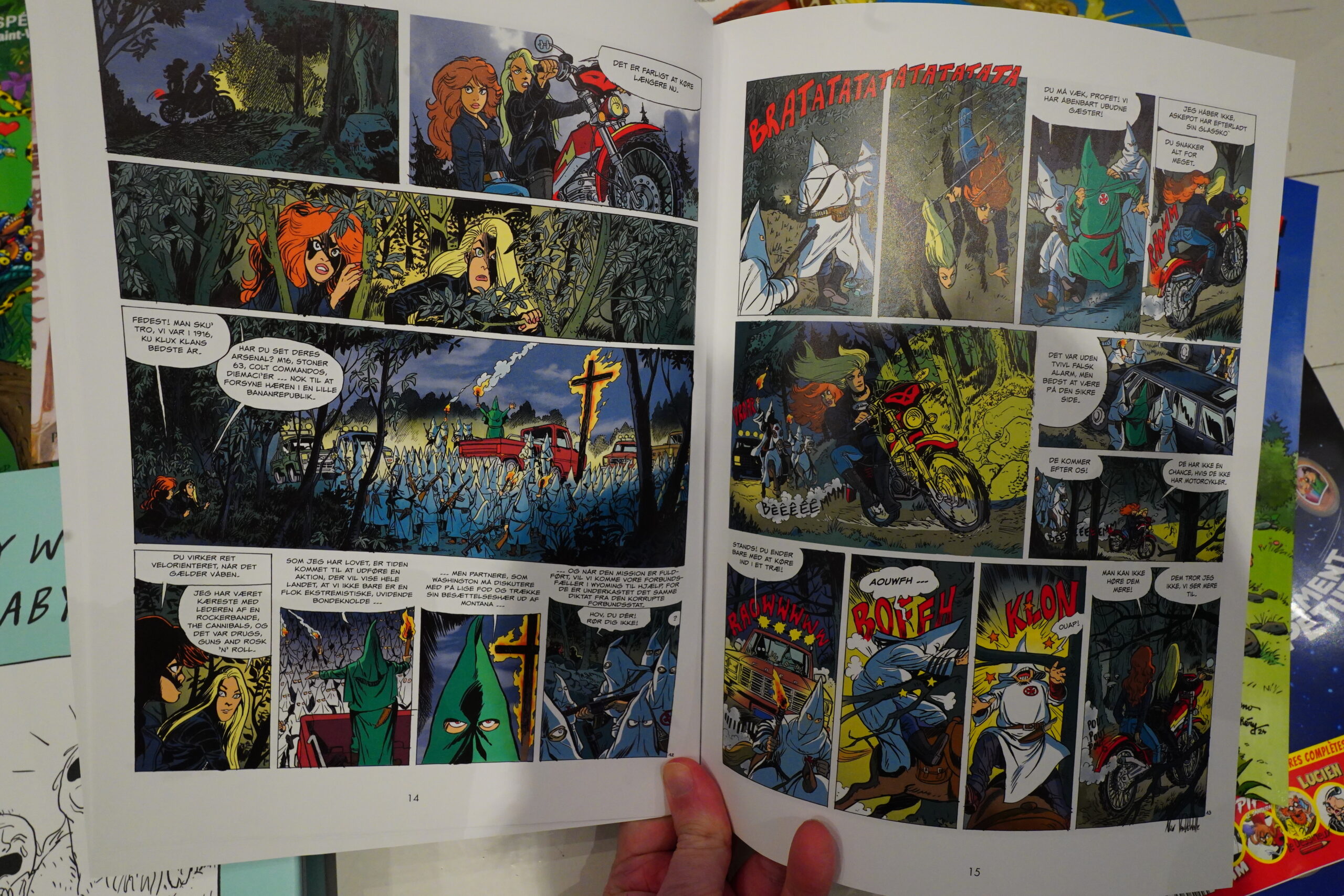

… who all turn out to be members of the KKK and stuff. So all that’s excellent, but the story makes absolutely no sense whatsoever. After I’d finished, I had to flip back and re-read the last ten pages to see whether they made more sense the second time through, but nope: Absolute shit show.

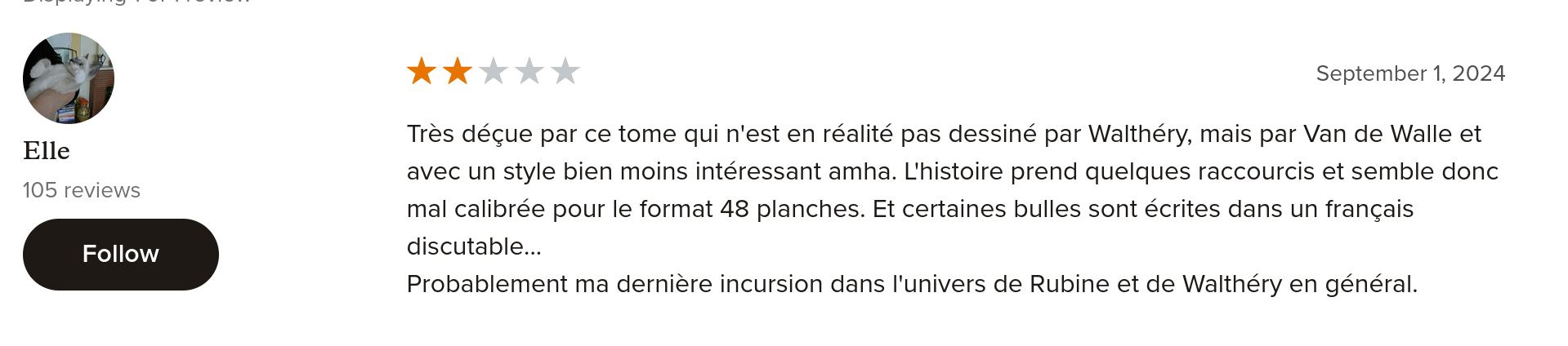

People don’t seem impressed.

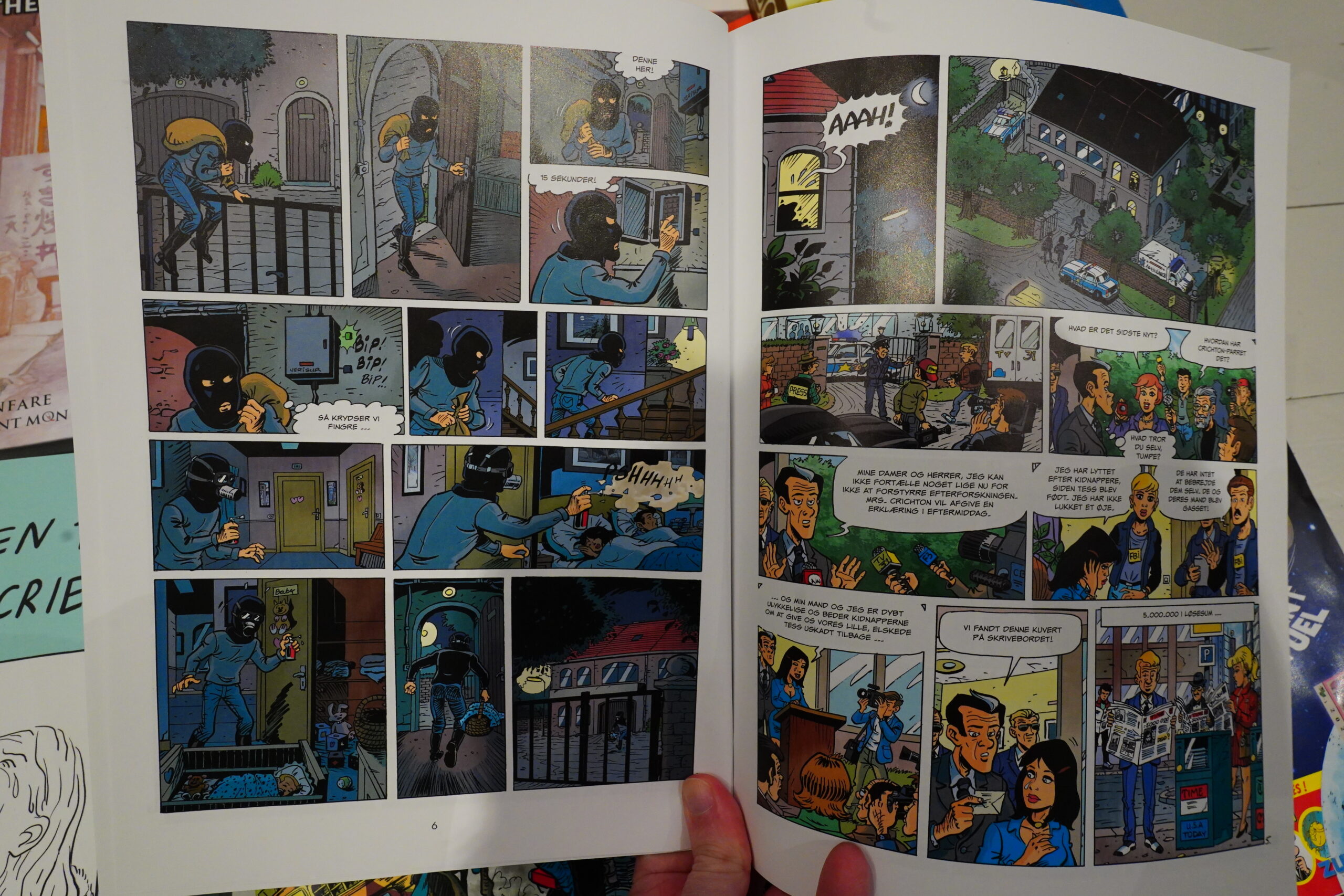

The second writer is much better — it’s a quite complicated plot, but I think it makes sense, sort of? At least a bit. And the artwork’s nice.

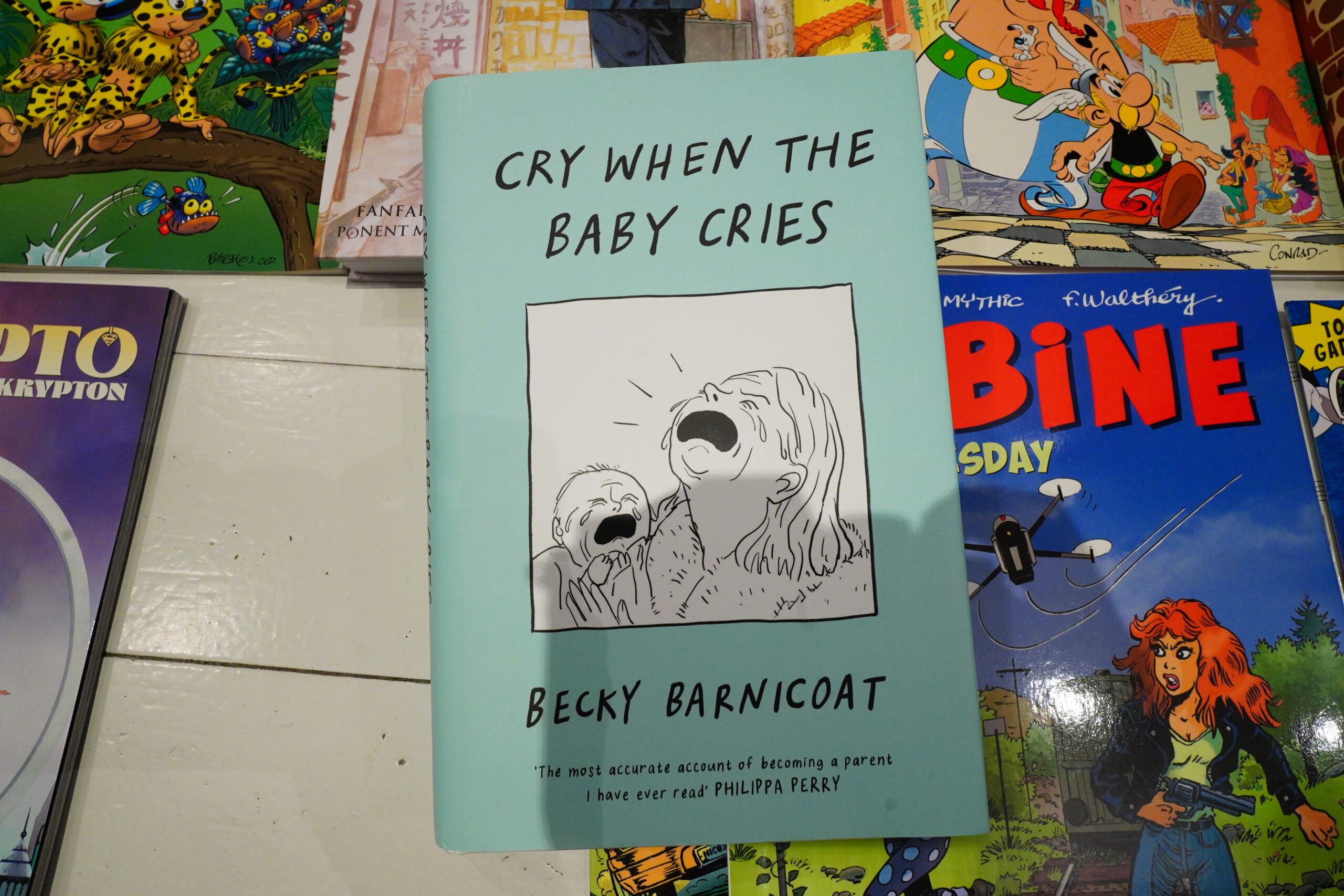

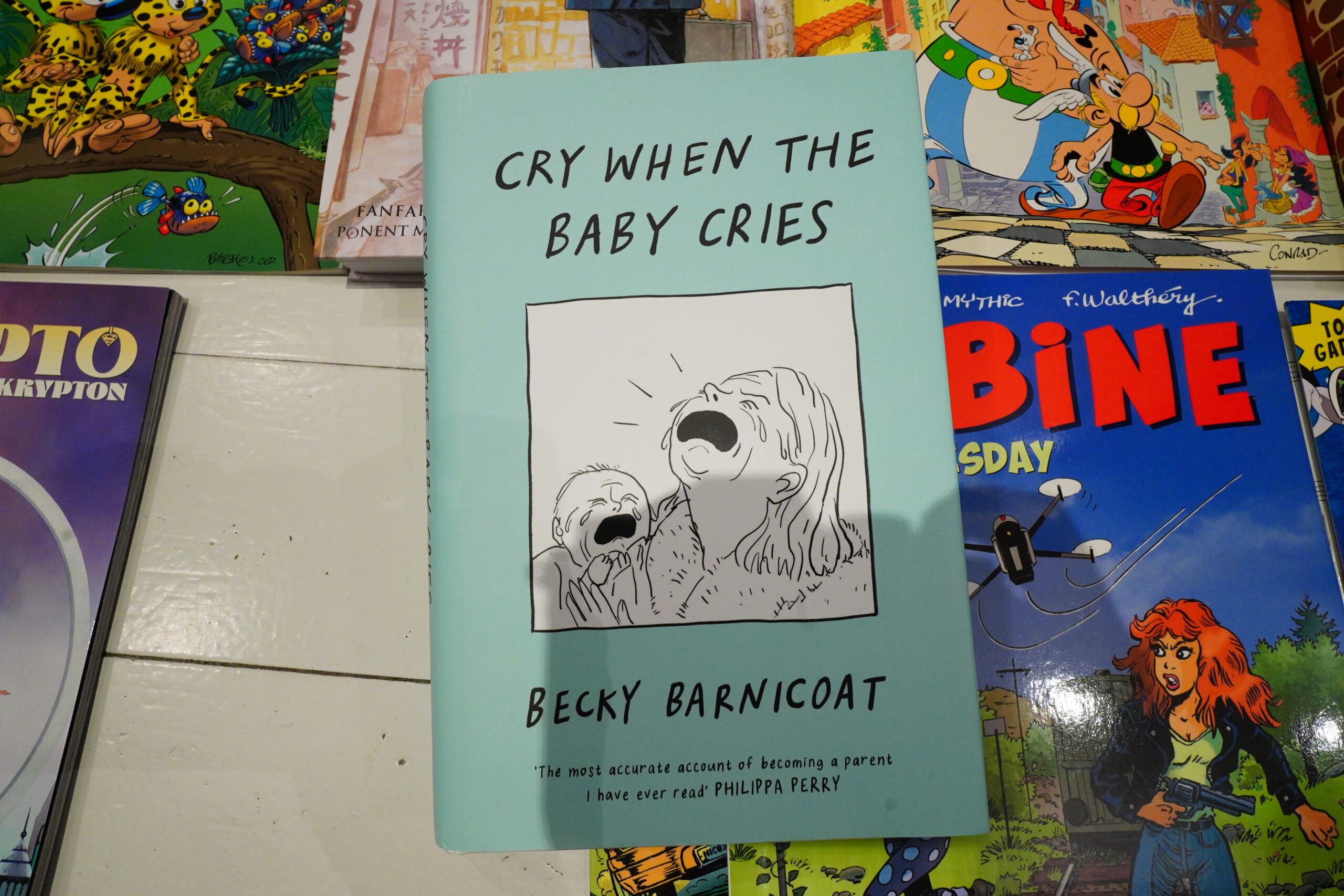

This book was a bit of a roller coaster… and let me bloviate a bit first about a pet peeve of mine: Introductions.

Why!?? Why are there introductions!? Why not just start your book instead of first spending time explaining your book to the audience? The introduction here is the worst part of the book, which is common, too.

And then the book starts, and… it’s a kind of prologue? At this point I was ready to just ditch the book, because I was getting annoyed. I understand the impulse to have something at the start to explain what you’re doing with the book — I mean, making a book is a kinda megalomaniacal thing to do, so you want to excuse yourself a bit so that people don’t think you’re getting too big for your britches… or something.

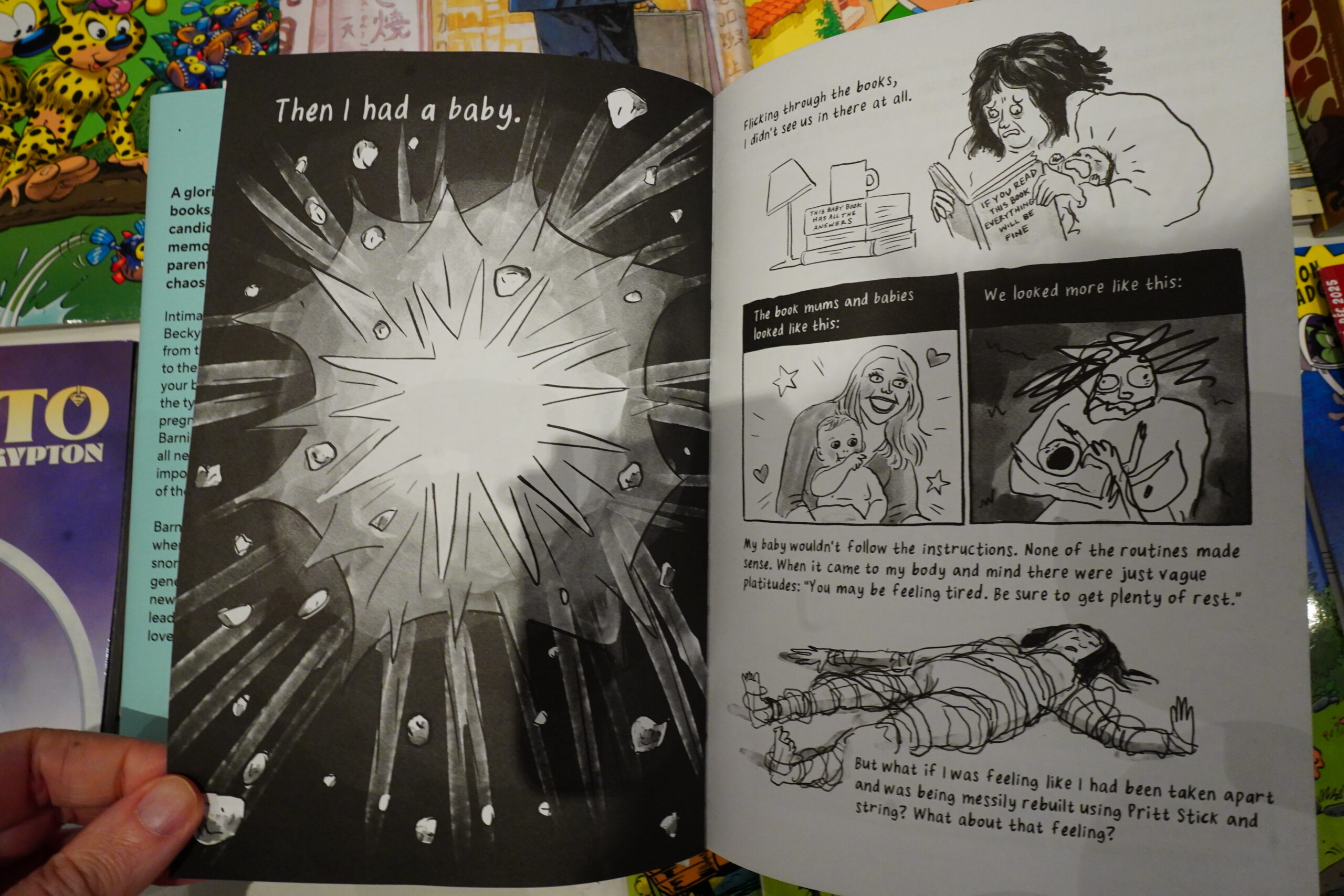

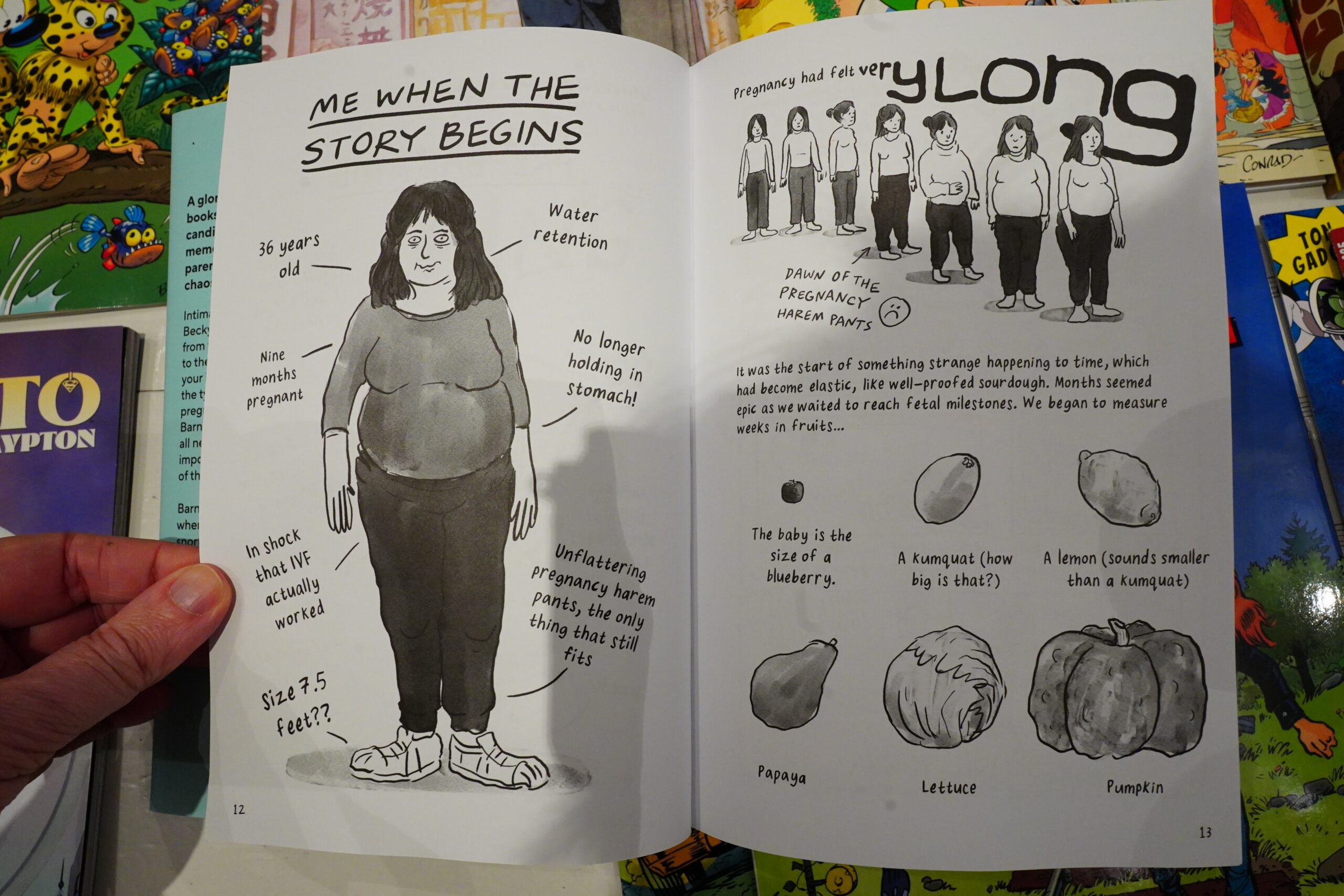

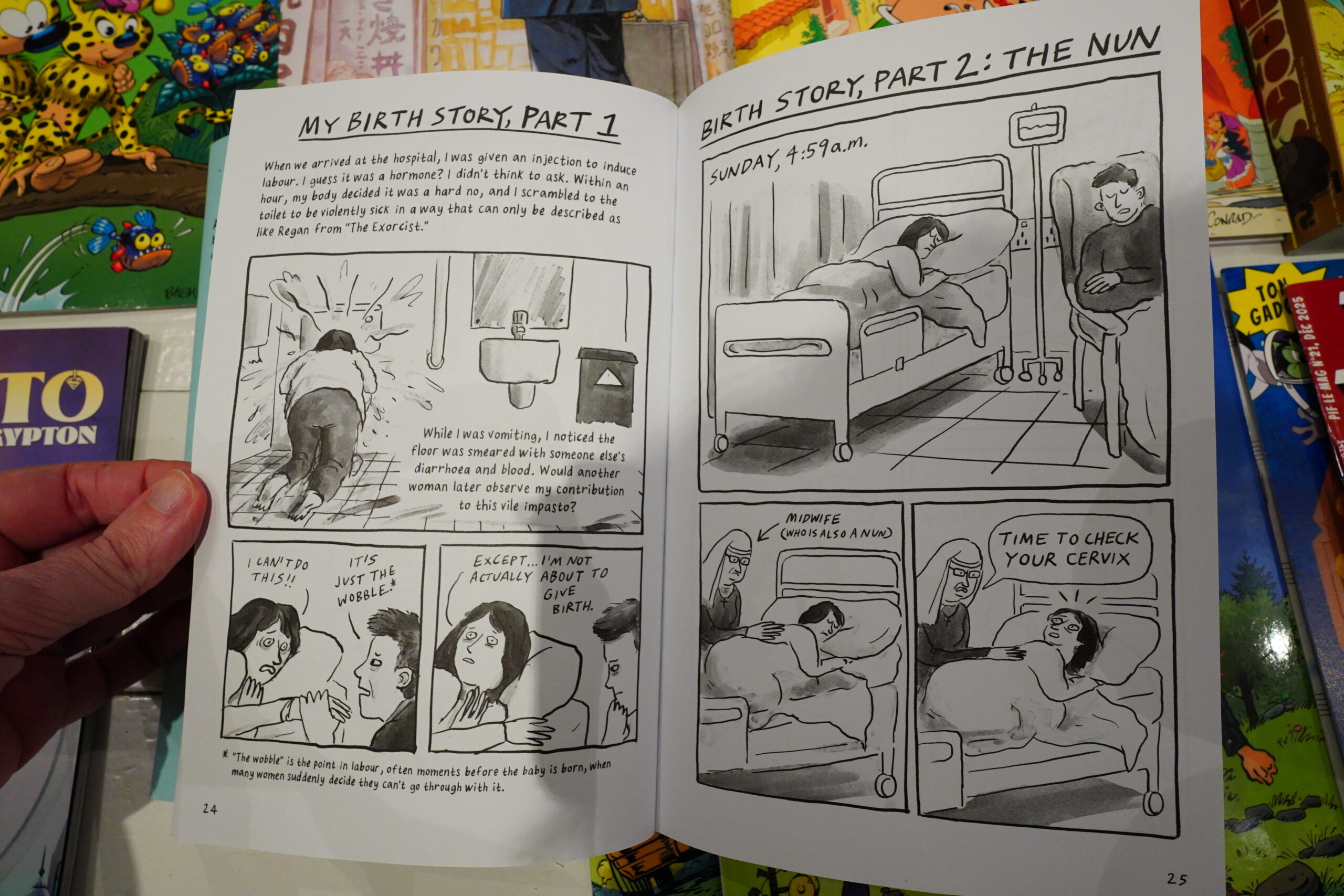

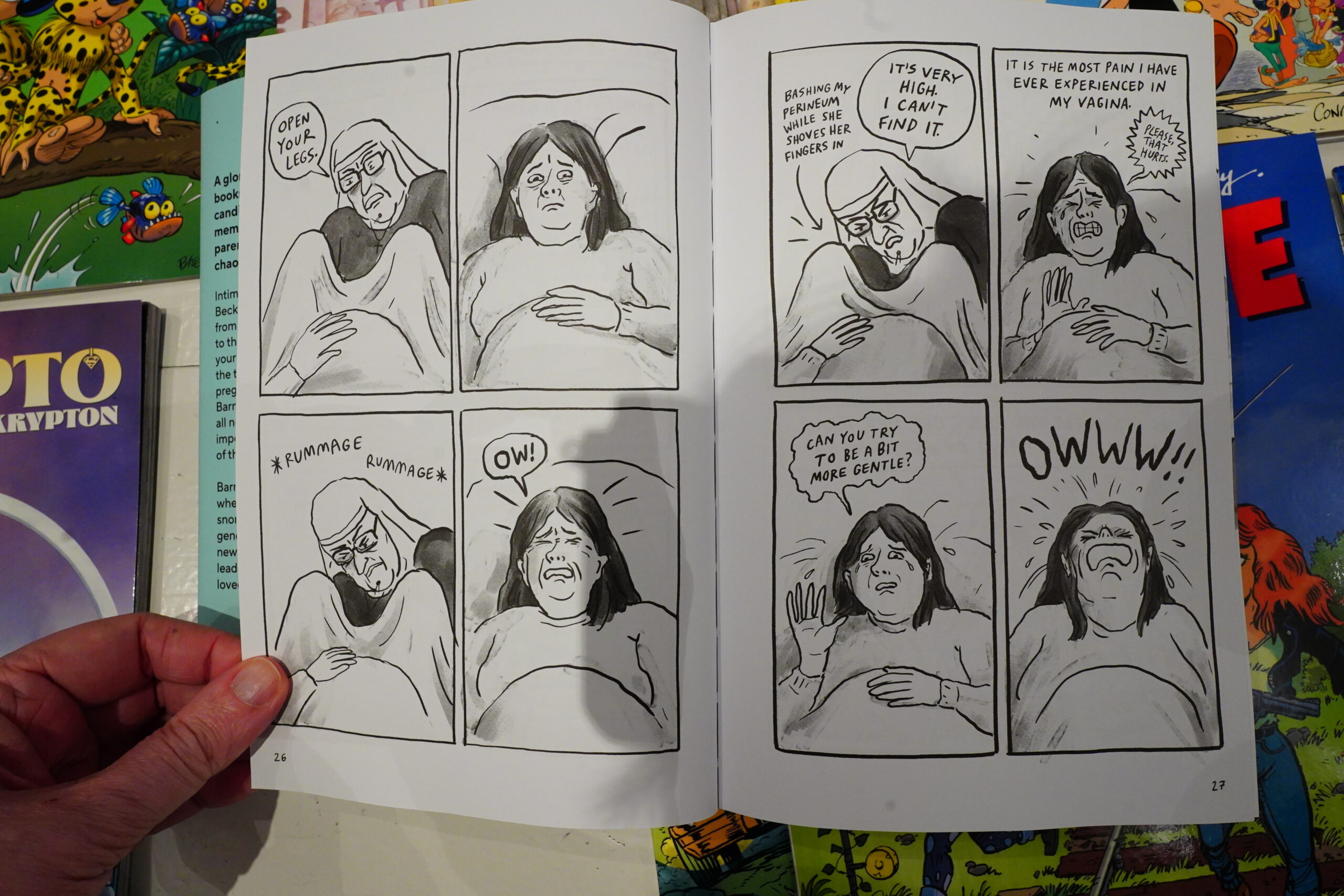

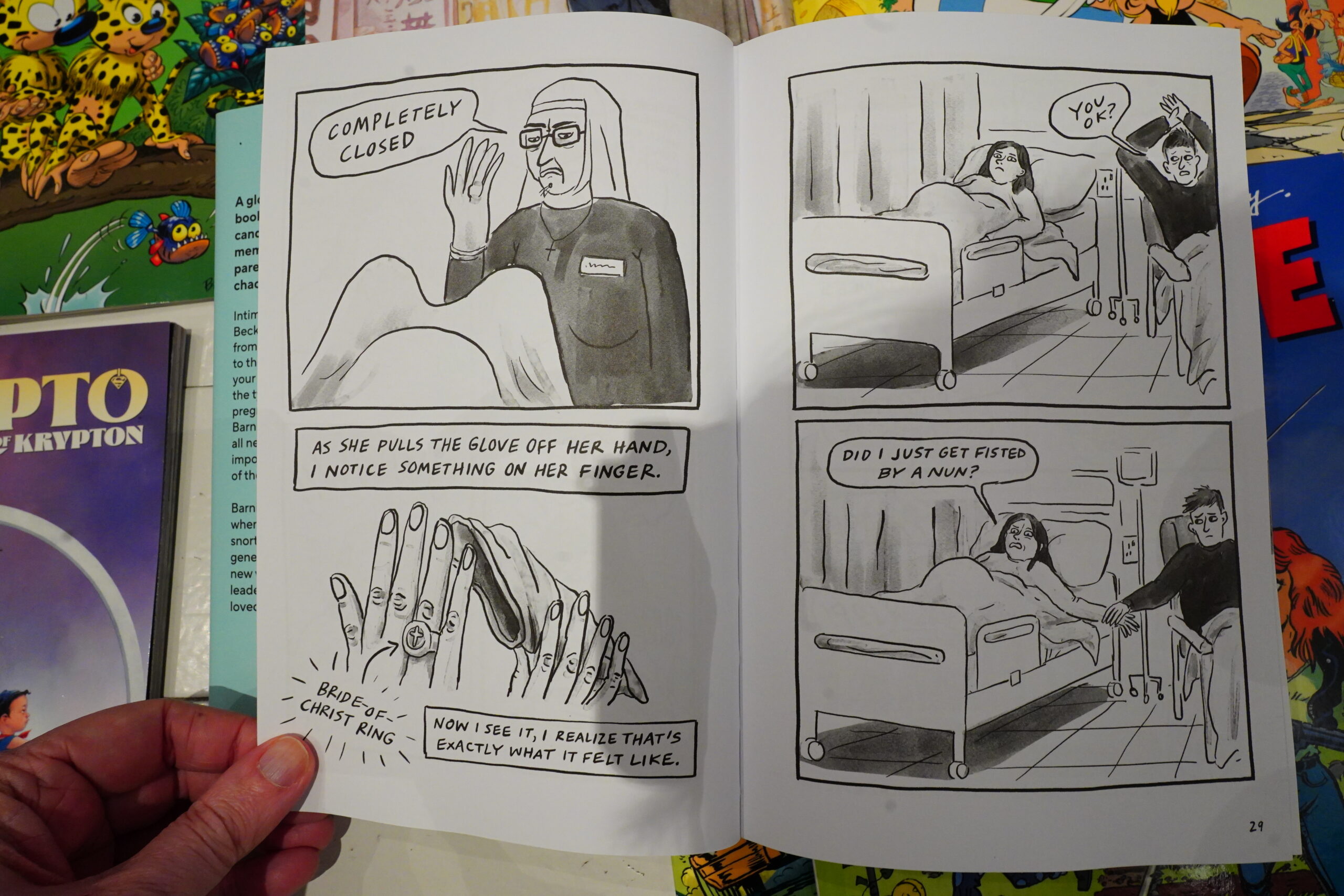

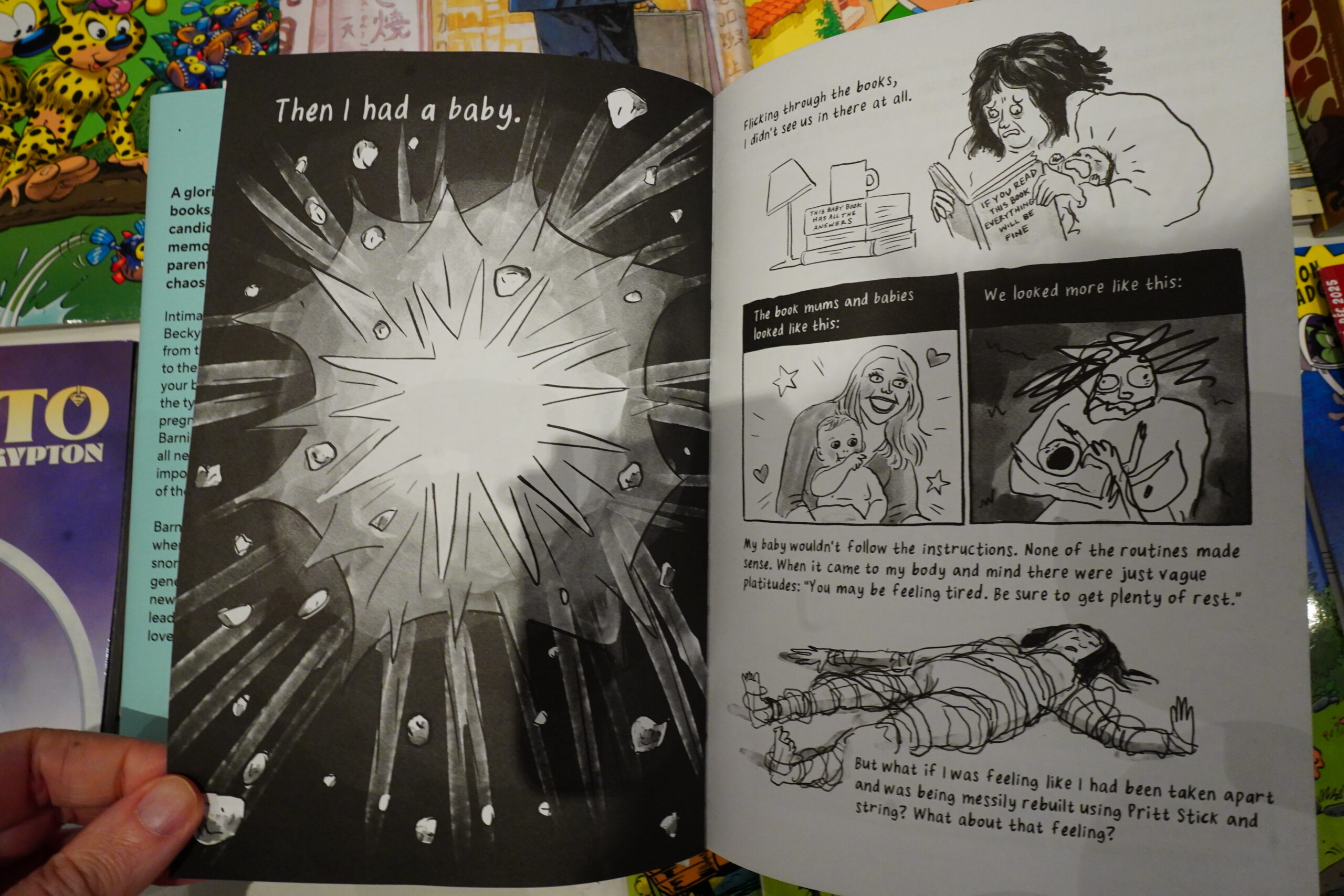

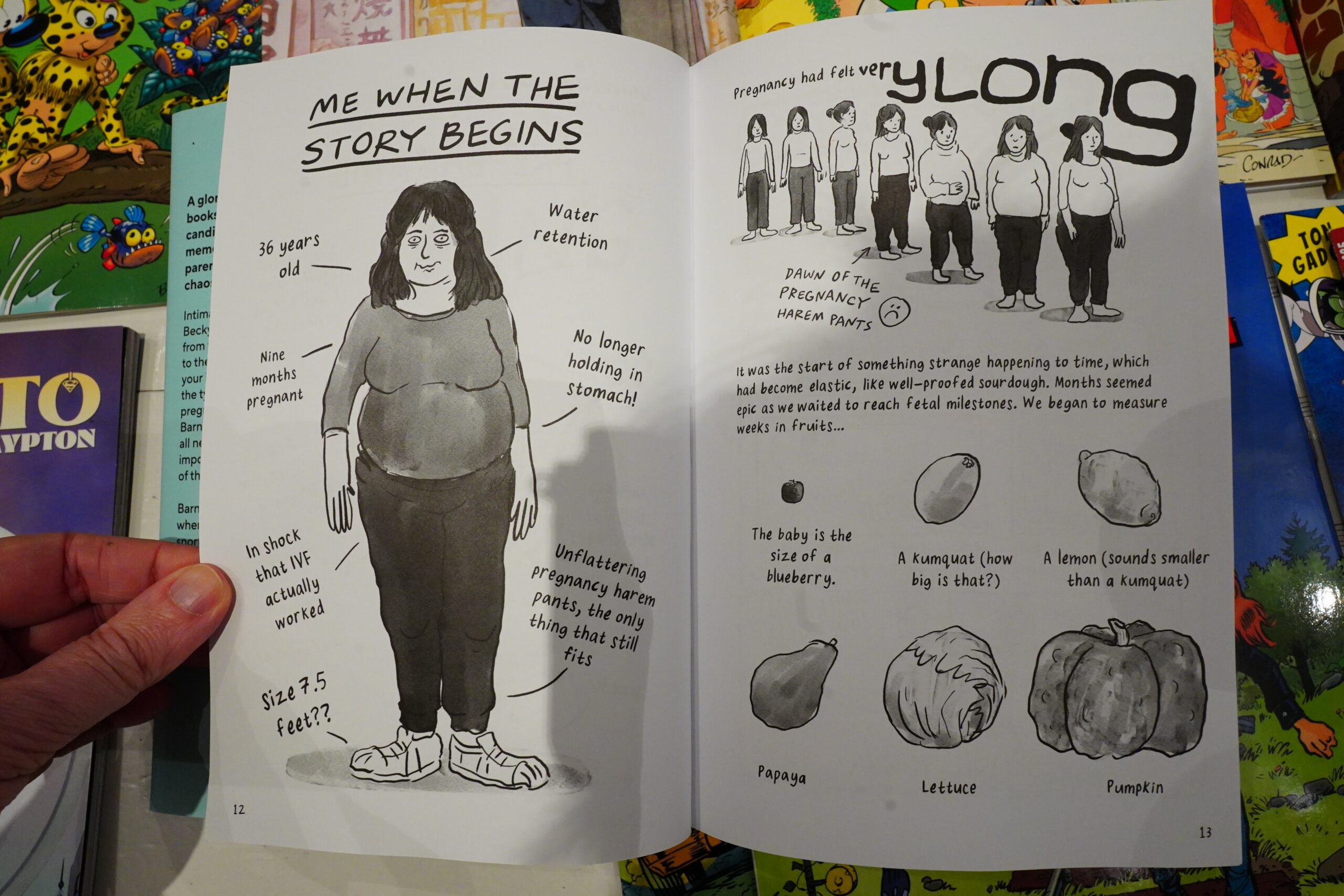

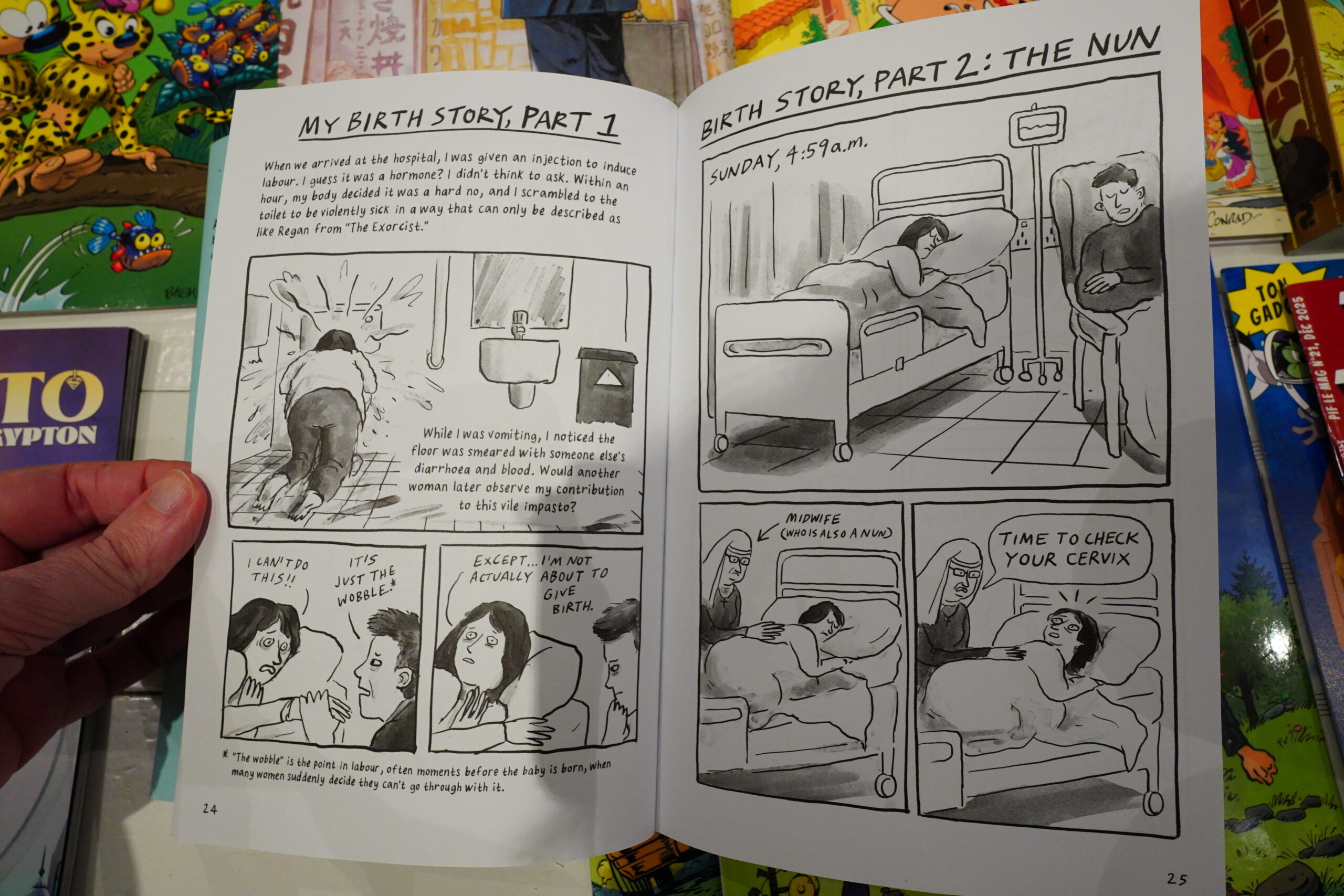

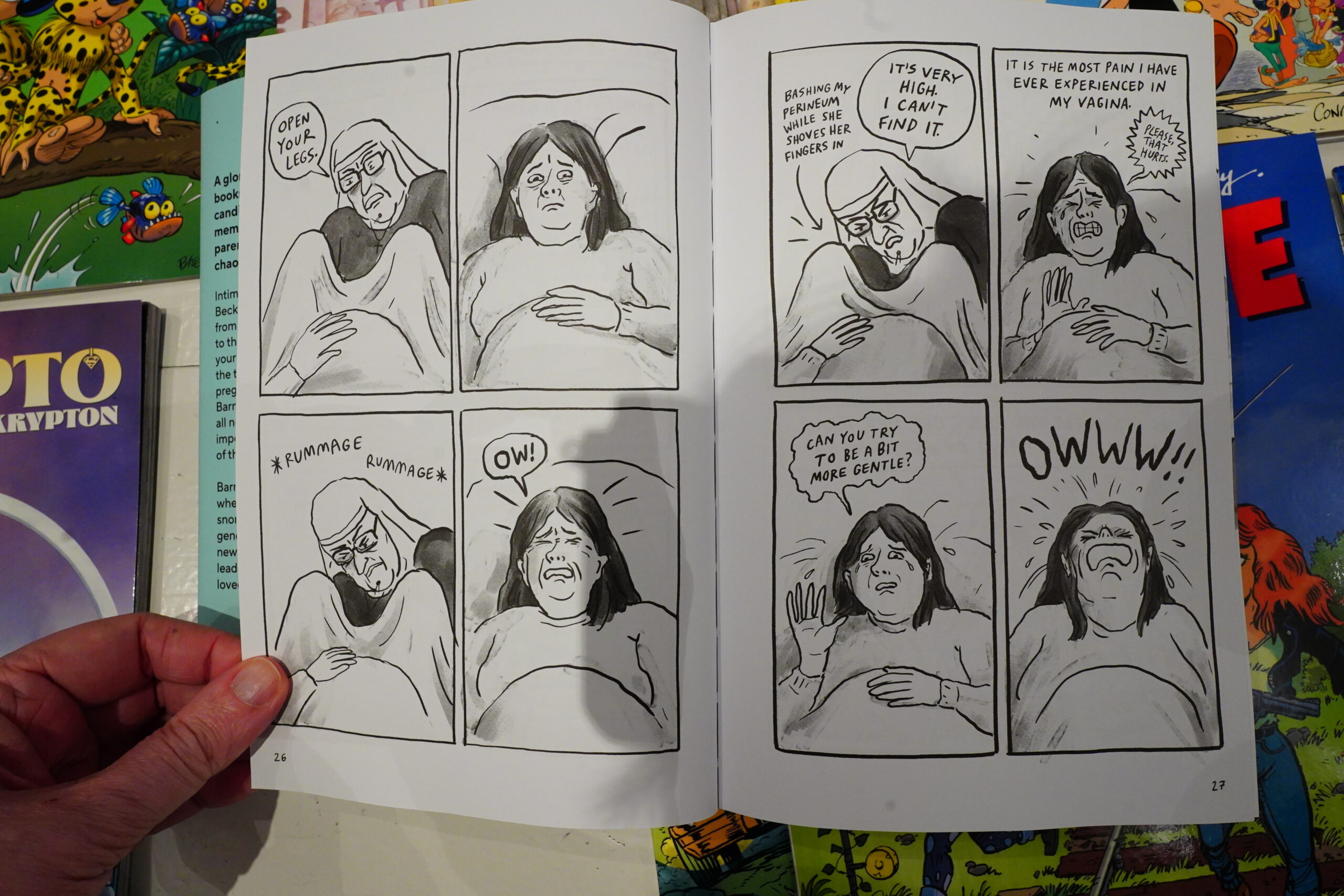

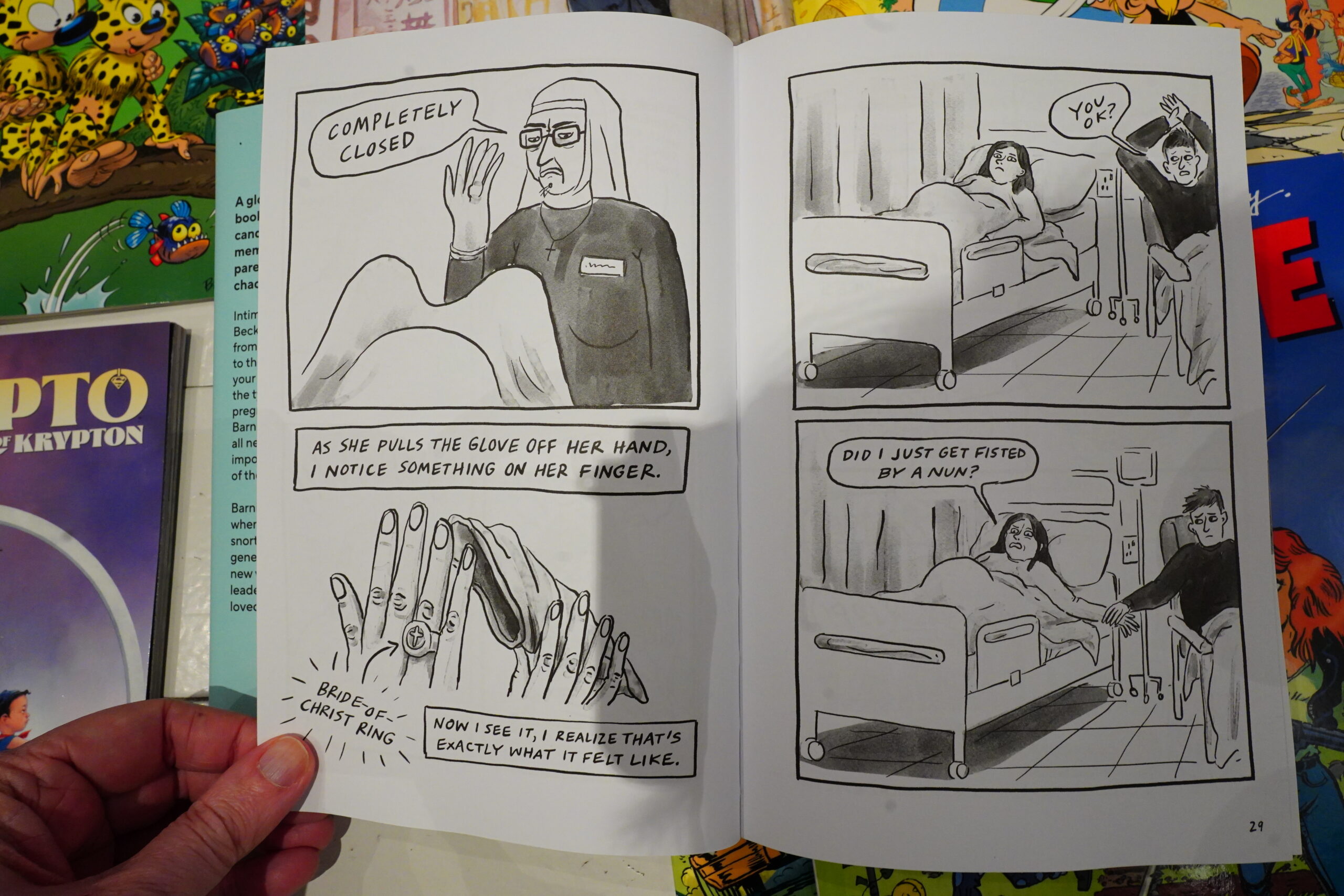

OK, here’s where I think this book should have started. Let’s read these pages together:

See!? That’s an absolutely perfect way to start this book — it’s horrifying and it’s funny, and that’s how the book continues.

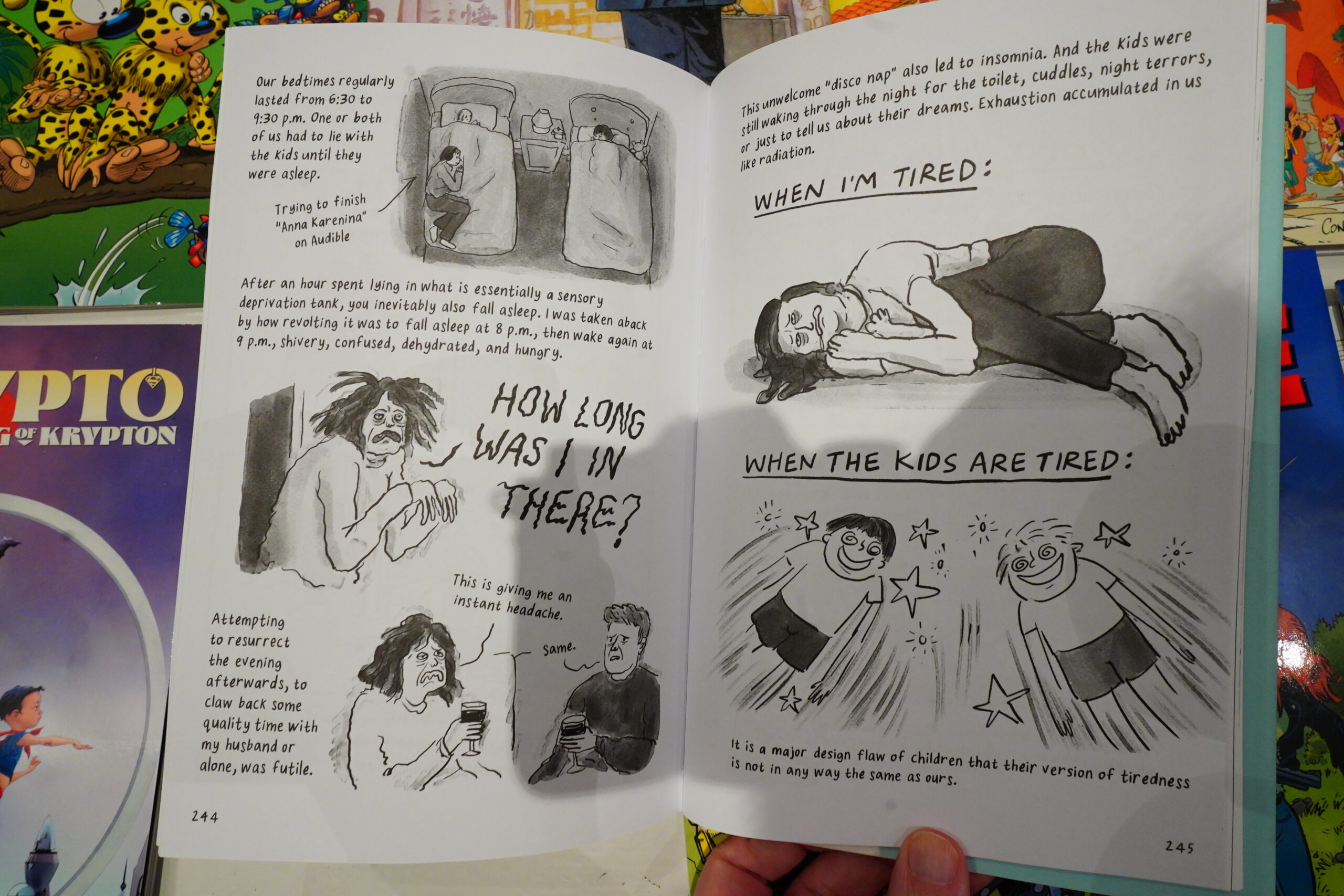

It’s a solid book — very interesting and also very entertaining.

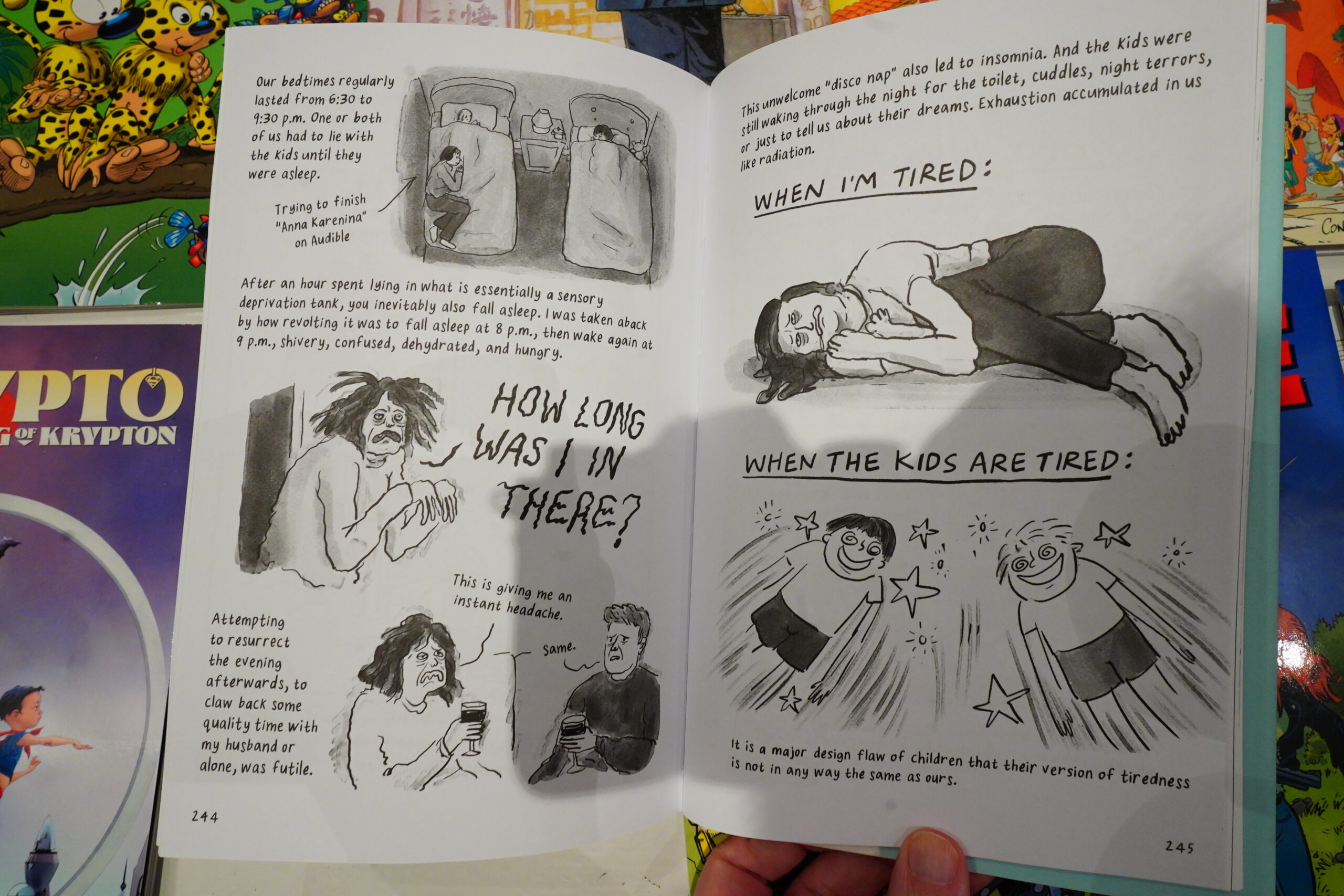

It’s also clear that the UK is a very foreign country — they start putting the kids to bed at six thirty!? WHAT?!

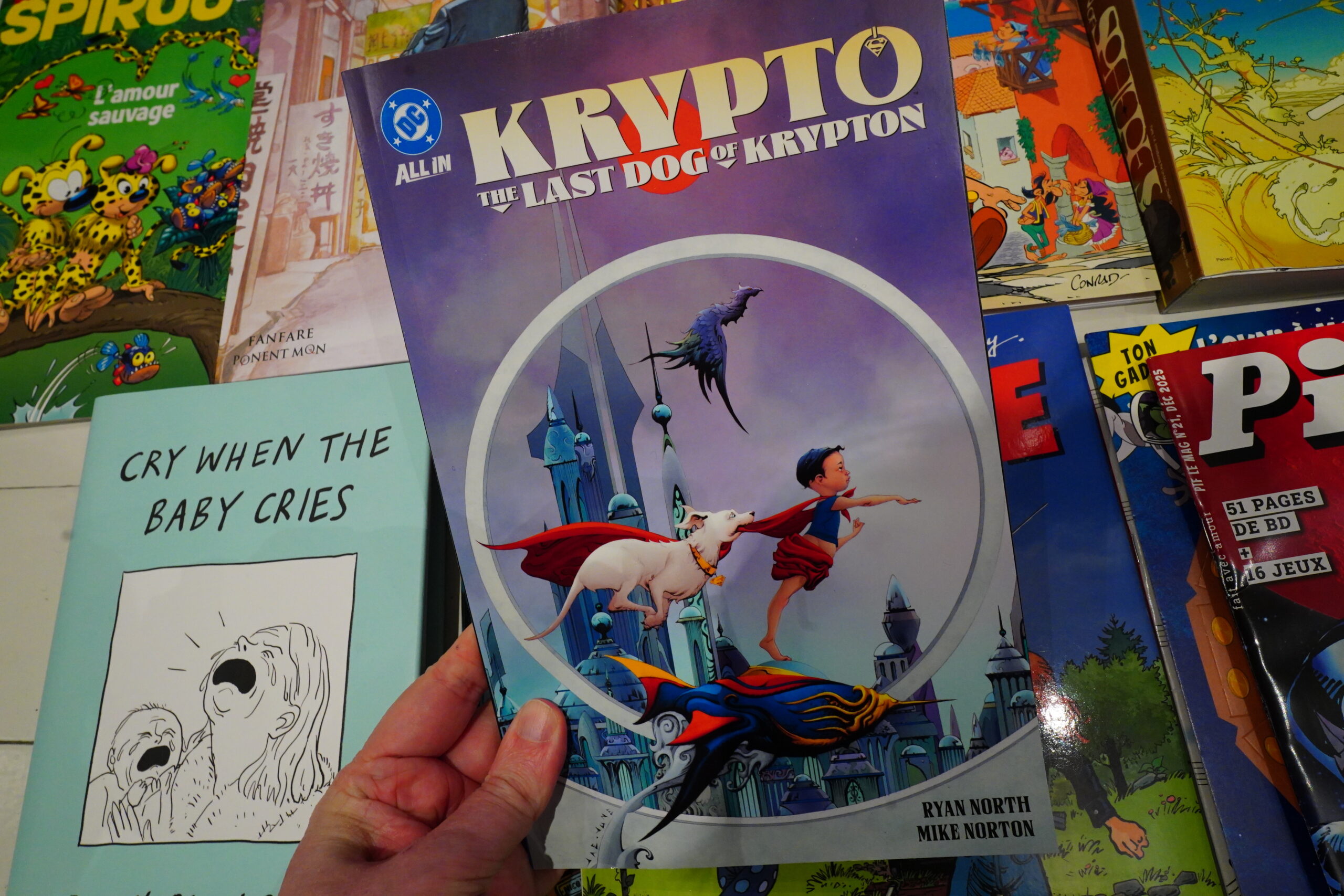

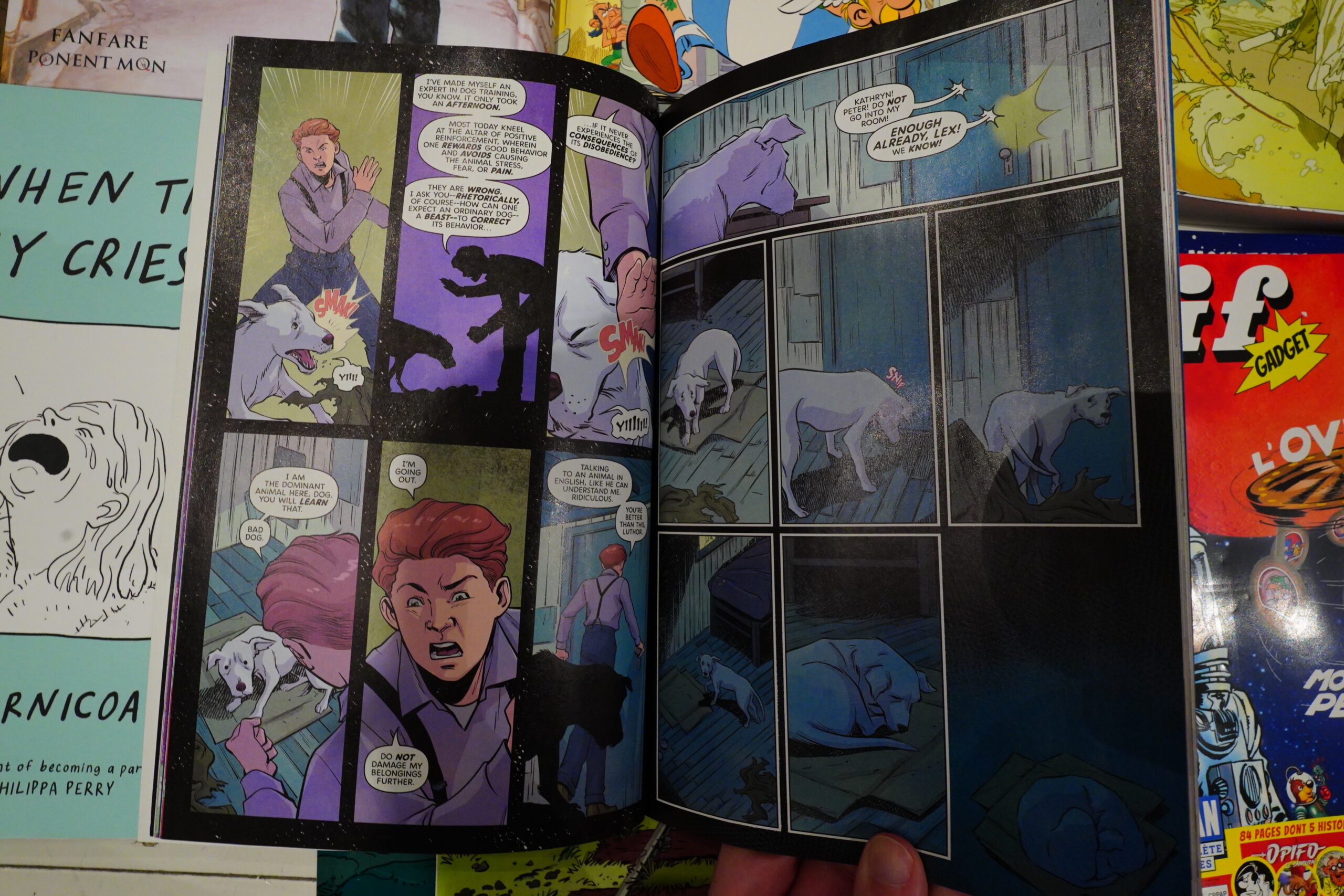

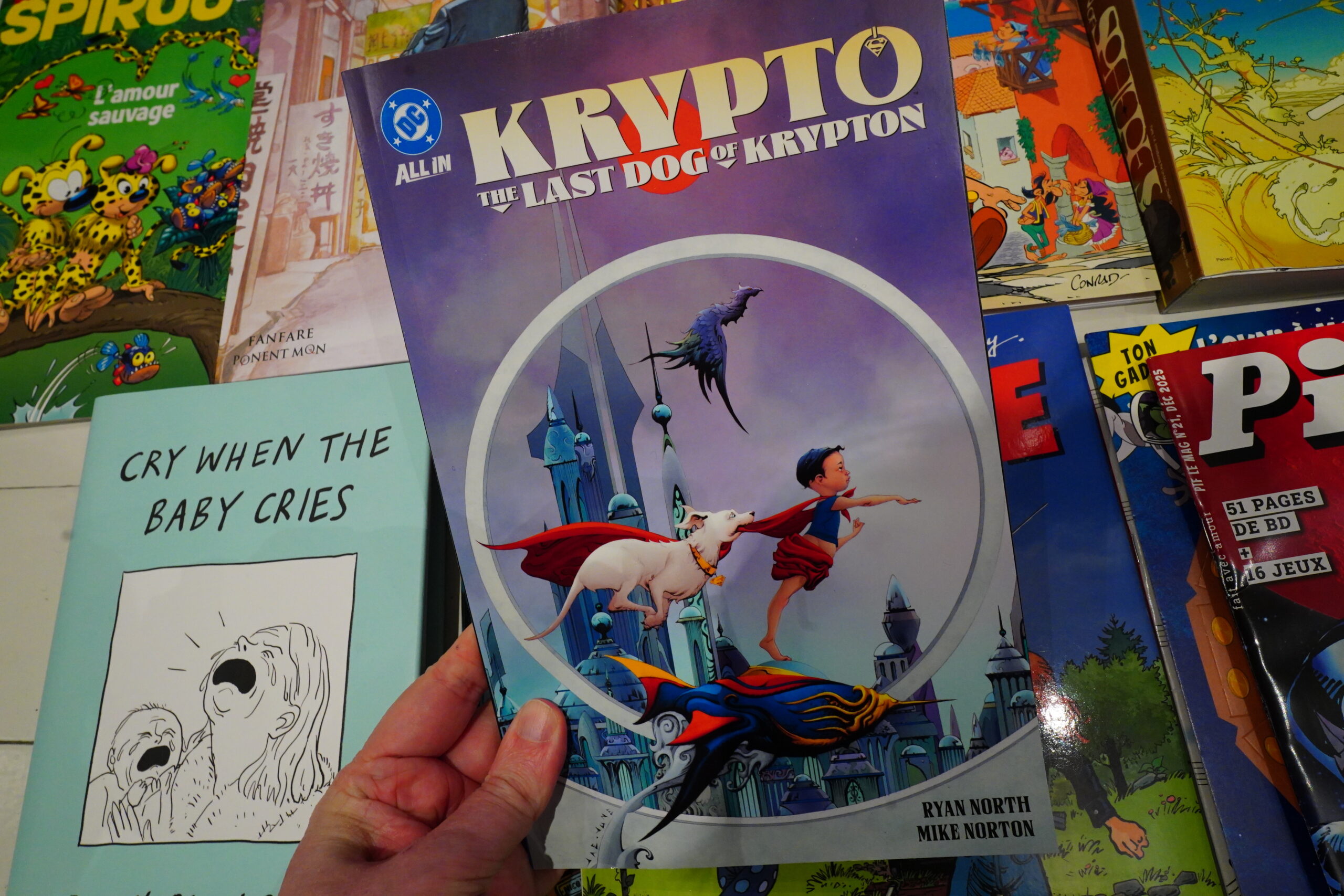

I missed the first couple issues of this when it was serialised, so I thought I might as well just pick up the collection.

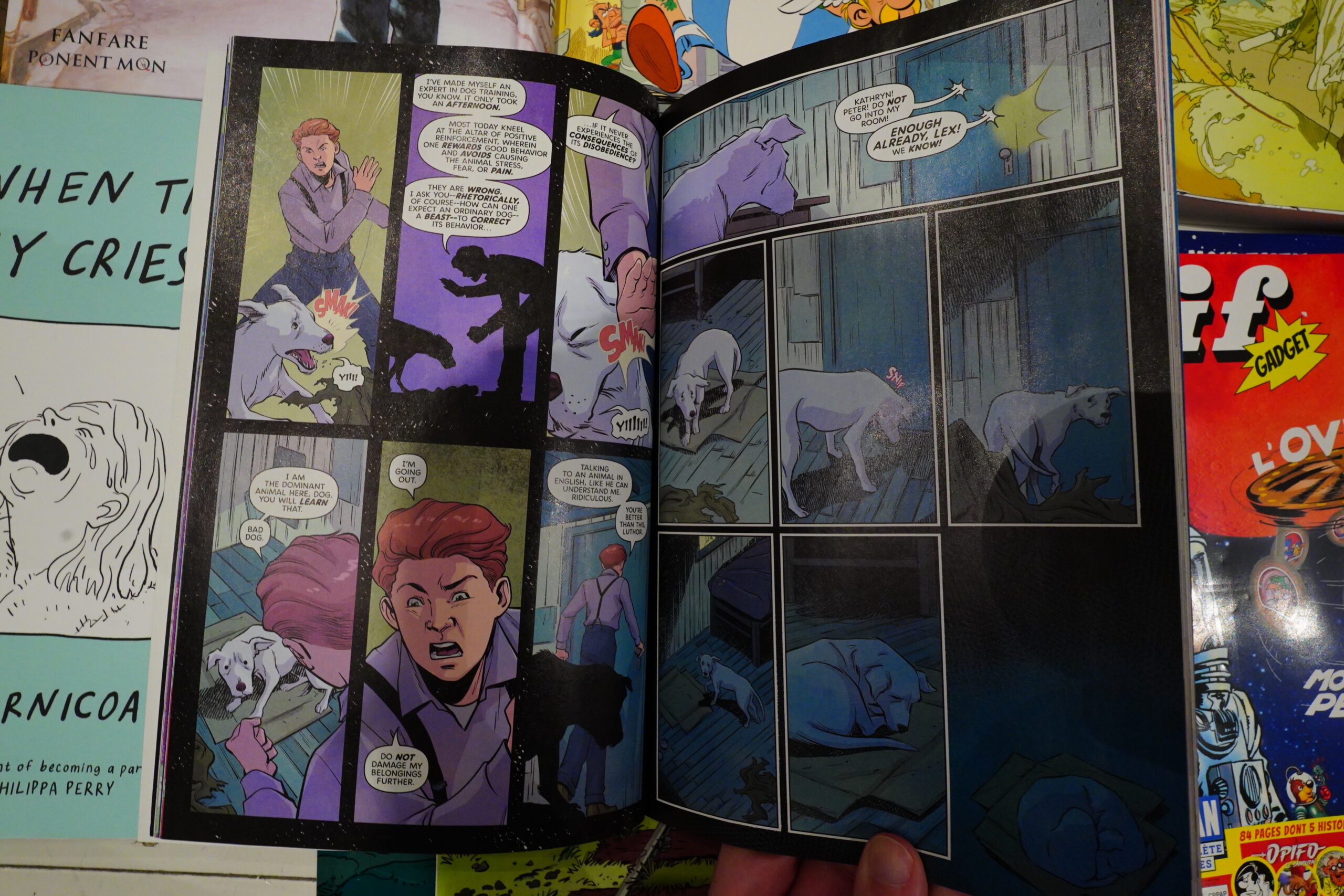

Which turned out to be a bad idea, because the first couple of issues were really weak. In the second issue, Krypto (after landing on Earth) gets adopted by Lex Luthor!? I mean, sure, gotta do all permutations of all characters, but it doesn’t make much sense. And Krypto’s a super dog, so I don’t understand why he’s taking those smacks from Lex. Or why he understands everything he says (the non-understanding bits are supposed to be in light grey, surely?).

The final three issues are OK, but I’ve already read those.
