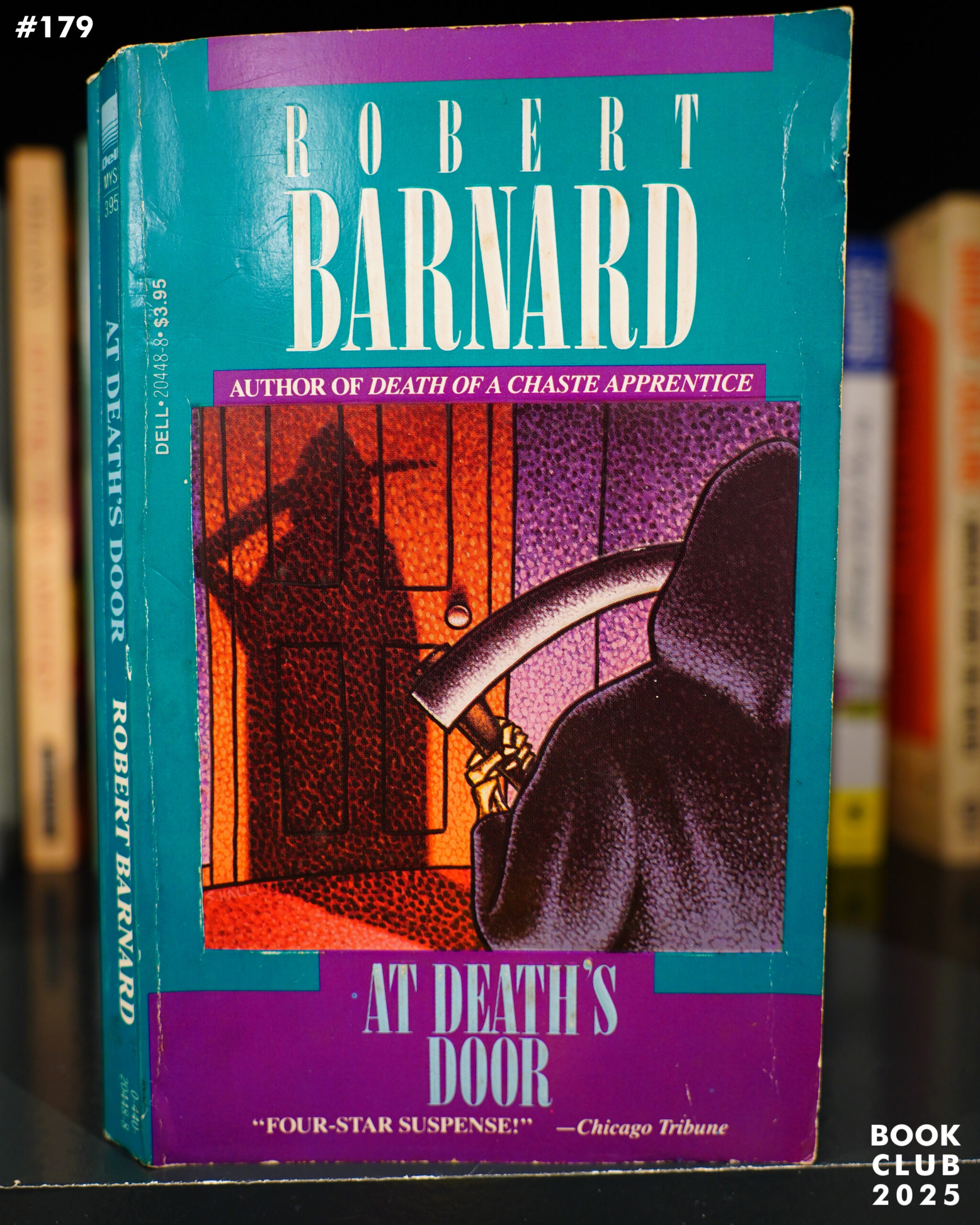

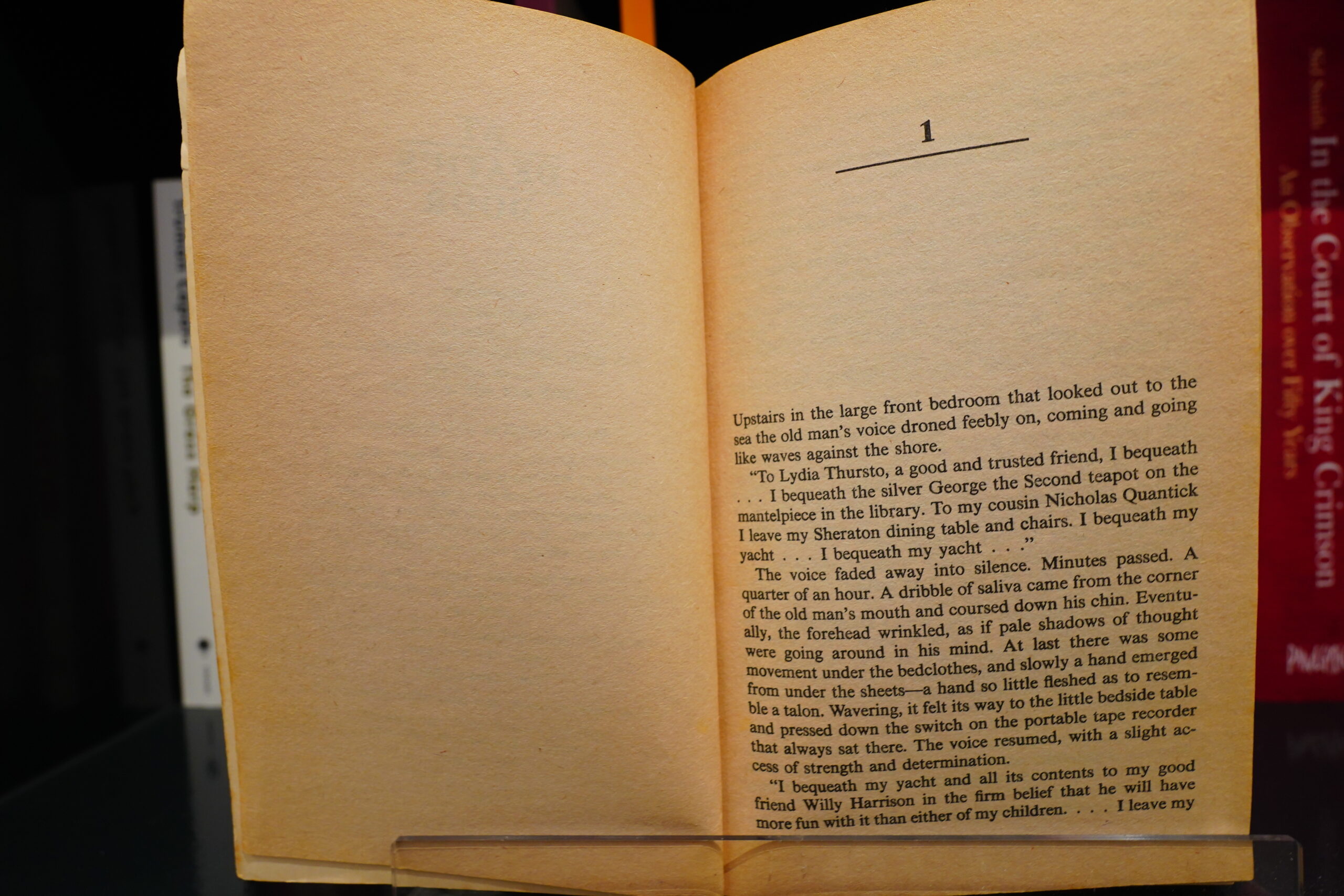

I’m reading yet another mystery, but this one is brand new.

And it’s fun — it’s really original, and it wasn’t at all clear where anything was even leading until halfway through. But then…

OK, spoiler time. Don’t read anything more if you’re going to read this book.

This book features a character with the last name “Hoff” who shares a lot of biographical details with Anne Holt. Most notably, there’s an event that happened in the 90s, when one of Holt’s books was “nulled”, which means that the committee that buys books for the libraries in Norway found that it had no literary qualities and didn’t buy it. This almost never happens with Norwegian authors, so it was quite a thing.

So while reading, it was irresistible to Google things to refresh my memory of all that drama:

“Did you mean: anne holt mullet”? Good question, but no. Via Google Translate:

“Anne Holt nullet” probably refers to a misunderstanding or misspelling of the phrase “anne holt null-ett”, which may refer to her being Minister of Justice for a period of one year, 1996–1997, according to store norske leksikon and VG. Another possibility is that it is a misspelling of “Anne Holt nuller”, which may be related to her having been awarded zero stars on the dice for a book for a year, but this is not directly confirmed in the search results

Wow, that’s some LLM result.

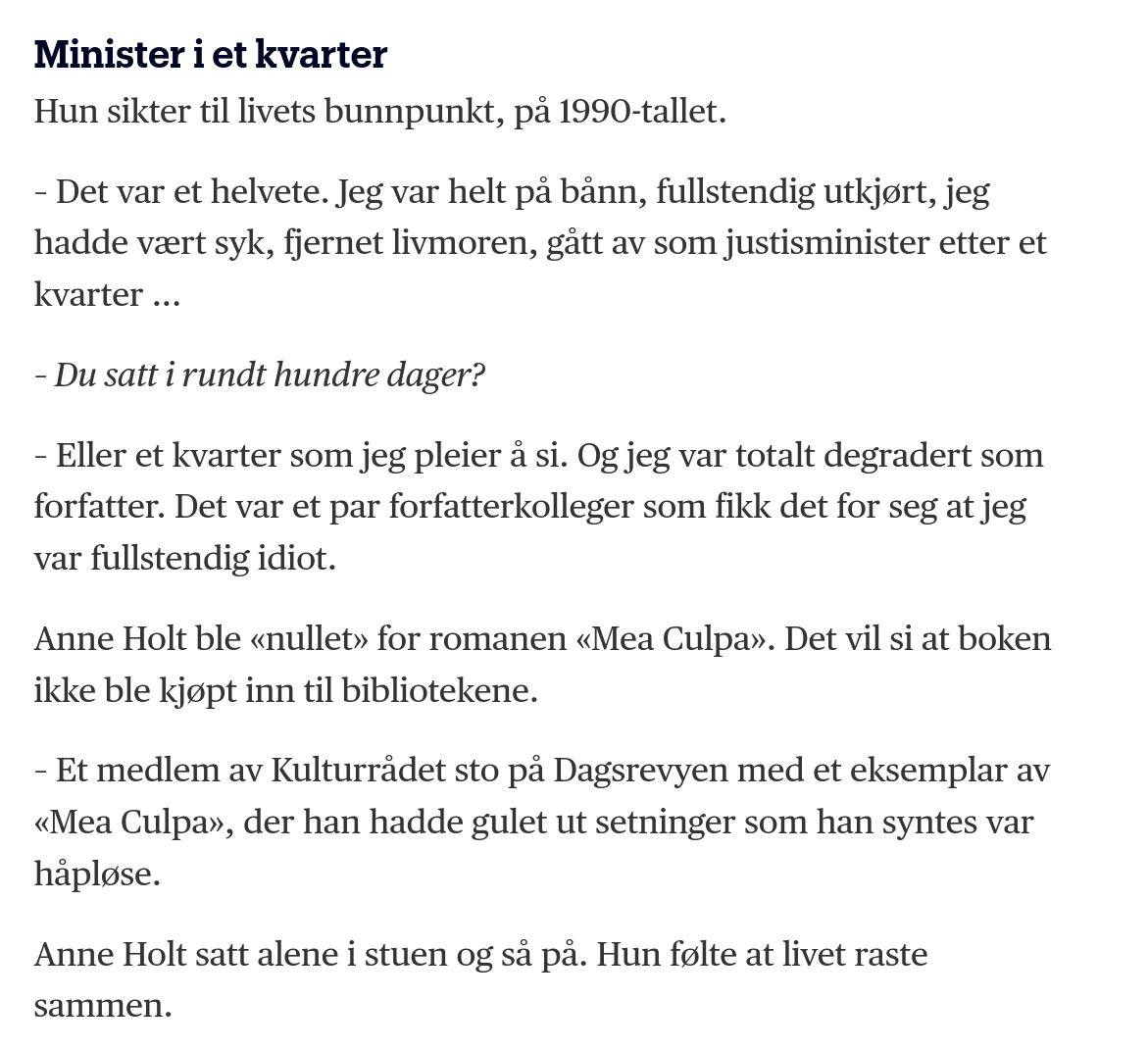

And I was completely degraded as a writer. A couple of my fellow writers thought I was a complete idiot.

Anne Holt was “zeroed out” for her novel “Mea Culpa”. That is, the book was not purchased for libraries.

– A member of the Arts Council stood at Dagsrevyen with a copy of “Mea Culpa”, in which he had yellowed out sentences that he thought were hopeless.

Anne Holt sat alone in the living room and watched. She felt like her life was falling apart.

Which is indeed exactly what the “Hoff” character went through.

The person who started all of this was an author called Øystein Rottem, who died in 2004. Or as Google says:

Øystein Rottem was a Norwegian literary critic and professor, and he is best known for his collaboration with the author Anne Holt. Rottem helped to popularize Holt’s writing and published a biography of her in 2014.

Literary critic: Rottem was a central voice in Norwegian literary criticism, with a strong commitment to contemporary literature.

Collaboration with Anne Holt: He wrote several books and articles about Anne Holt, her writing and her position in Norwegian literature.

Biography: In 2014, Rottem published the book “Anne Holt. A Biography” which provided an in-depth insight into her life and career.

I thought they’d made the Gemini LLM hallucinate less now? But perhaps not for smaller languages?

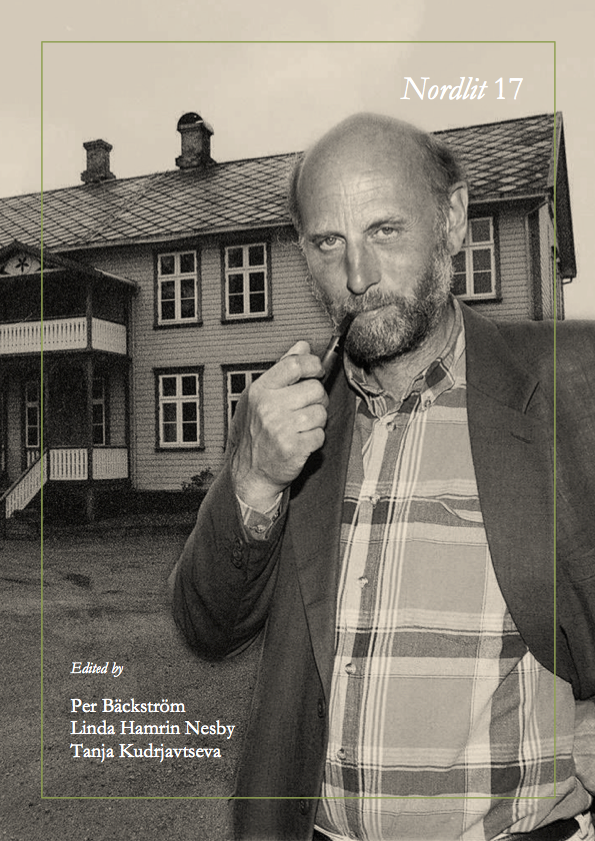

But anyway, the character in the book who is described as having written the harsh critique that almost made Holt’s life fall apart is a bald guy who smokes a pipe:

Like Øystein Rottem, so it’s not like she’s being subtle or anything…

Here’s part of what Rottem wrote about Holt, via Google Translate:

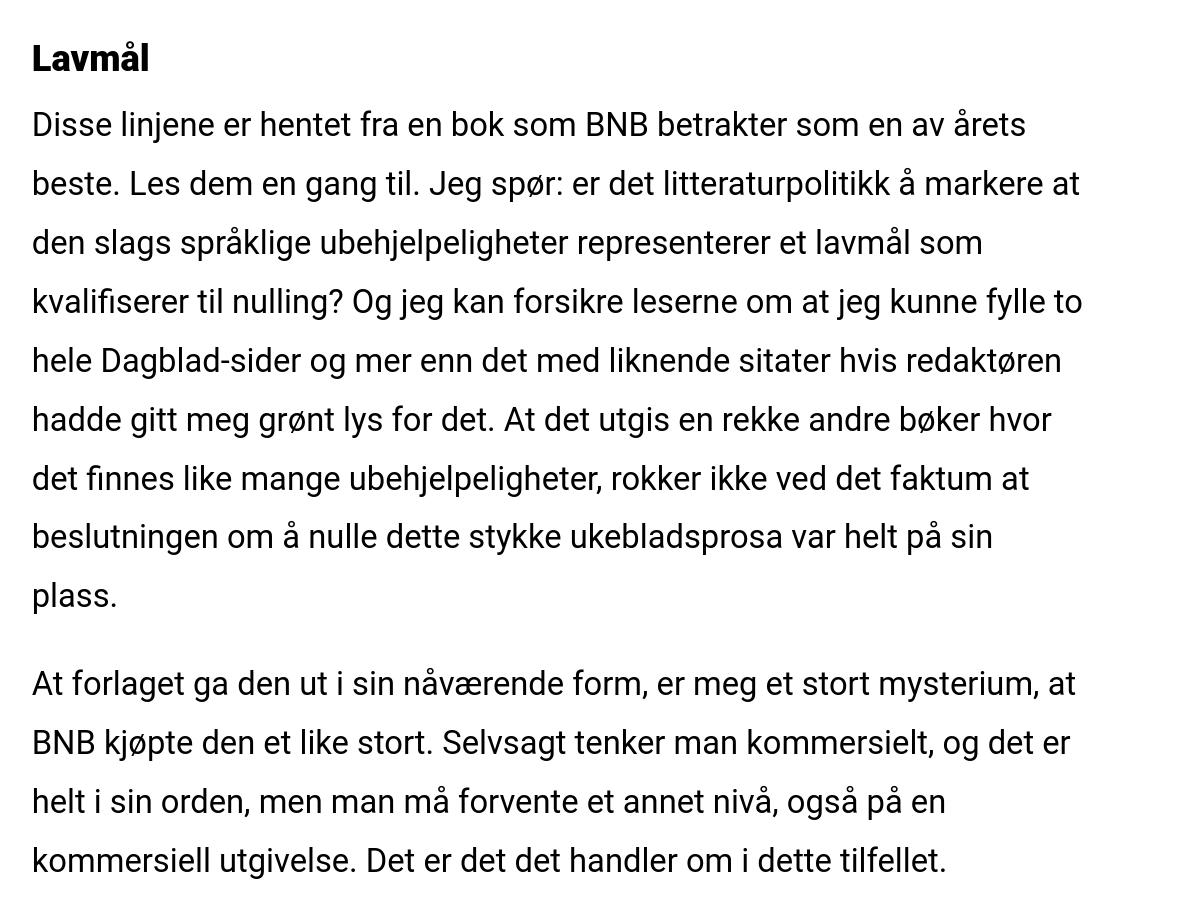

These lines are taken from a book that BNB considers one of the best of the year. Read them again. I ask: is it literary politics to point out that this kind of linguistic inaccuracy represents a low standard that qualifies for zeroing? And I can assure readers that I could fill two entire Dagblad pages and more with similar quotes if the editor had given me the green light to do so. The fact that a number of other books are published in which there are just as many inaccuracies does not alter the fact that the decision to zero this piece of weekly magazine prose was entirely appropriate.

Wow, that’s a bad translation…

Well, all of this is fun in a roman à clef way, but there are problems: 1) Anne Holt has done this before. She’s already written a novel where the deranged killer was a version of herself. This time around, the version isn’t deranged at all, but, er… 2) There’s nobody else, at all, who could be the culprit, so it just feels like bad mystery-writing craft. 3) Googling every new detail while reading isn’t optimal. And 4) the book ends pretty much as I’d expected otherwise, too, so that’s also a letdown.

But it’s otherwise a very well-done mystery. And I guess if you’d never read anything by Holt before, or known anything about her history, the clef-ey bits would have been less distracting.

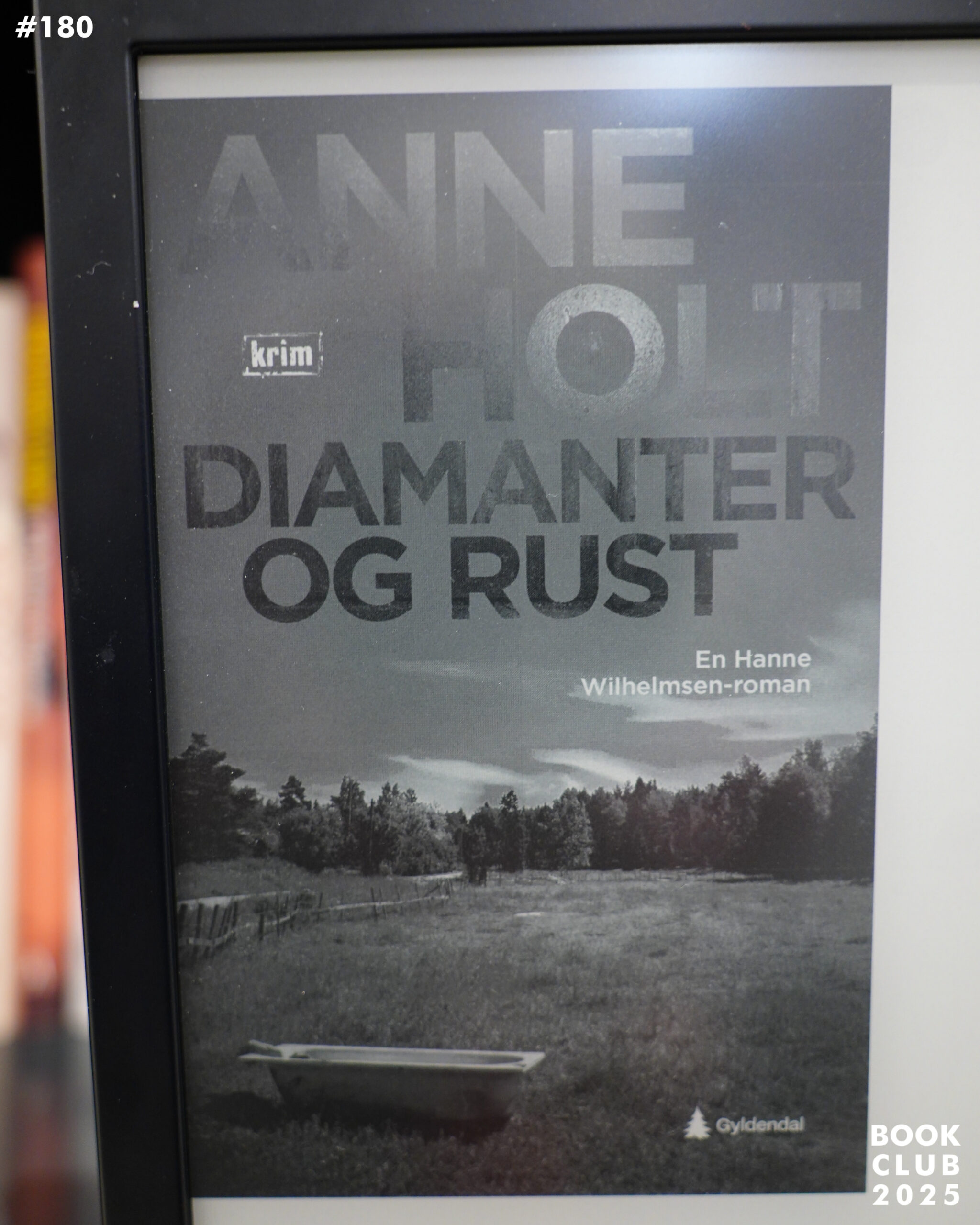

Diamanter og rust (2025) by Anne Holt (3.98 on Goodreads)
