Whenever I write a PHP page that’s somehow resource intensive, I go “I sure wish there was some way to ensure that this page is rate limited”. This usually happens when I’m using the PHP page as a shim around an executable, which is what I’m doing on kwakk.info, for instance.

That search page used to be lightning fast, but now that there are about 150 magazines available for searching, a search takes about one second of CPU time. (This isn’t inherent in the search engine: if it had only one index to search, it would take a few milliseconds, but since I want to allow including/excluding magazines arbitrarily, it has to open 150 index files, and so here we are.)

Unless the site goes viral, there’s no problem, but what with all the exciting scrapers these days, sooner or later somebody is going to point something at you and then you have 1000 concurrent hits to a CPU intensive page, and then the server will be sad. Allowing external parties to give you arbitrarily high loads without them even trying (so asymmetric) is never a good idea.

Limiting concurrency for a specific page seems like such a basic, basic thing to want to have, so I thought that PHP surely had grown something like this by now, but apparently not.

If you Google this, you’ll find suggestions like altering the Apache limits — but that’s a really bad idea, because you want to have plenty of Apache processes to serve out static resources and the like. You don’t want the fast pages to be stuck behind the slow pages.

The other helpful suggestions from Google are to use a framework like Laravel, or use a queue runner, or to install Varnish in front of the site, or to use Kafka, or to set up a Kubernetes cluster on AWS, or…

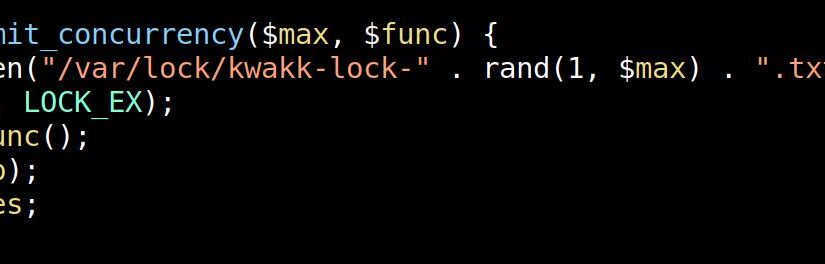

But what if you just have a stupid little server for your stupid little project, and don’t want it to become a full time job? What’s the simplest thing one can do? Well, flock. That works. Let’s look at the documentation. Nice example:
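The manual’s example goes roughly like this. This is a paraphrased sketch rather than a verbatim copy, and the /tmp/lock.txt path and the "c+" open mode are choices made here: open a file, take an exclusive lock, do the work, release the lock.

```php
<?php
// Rough sketch of the flock() pattern from the PHP manual (paraphrased).
$fp = fopen("/tmp/lock.txt", "c+");  // create the file if it doesn't exist yet
if ($fp === false) {
    exit("Couldn't open the lock file!\n");
}

if (flock($fp, LOCK_EX)) {           // acquire an exclusive lock, waiting if needed
    ftruncate($fp, 0);               // safe to truncate now that we hold the lock
    fwrite($fp, "Write something here\n");
    fflush($fp);                     // flush output before releasing the lock
    flock($fp, LOCK_UN);             // release the lock
} else {
    echo "Couldn't get the lock!\n";
}

fclose($fp);
```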

Excellent! Let’s do that! And… Whut? No lock files were created in /tmp!?

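Because Apache/PHP on Linux is now so safe that it doesn’t get to write to the “real” /tmp (or /var/tmp) directories. On many distributions, the systemd unit that starts Apache sets PrivateTmp=true, which gives the service its own private /tmp and /var/tmp; on disk, they end up somewhere like /tmp/systemd-private-*-apache2.service-*/tmp.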

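But the code still works, because all the PHP processes share the same redirected /tmp, so they still see each other’s locks!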

If you want to see the lock file, you can use /var/lock instead, since that isn’t intercepted by this, er, safety mechanism.

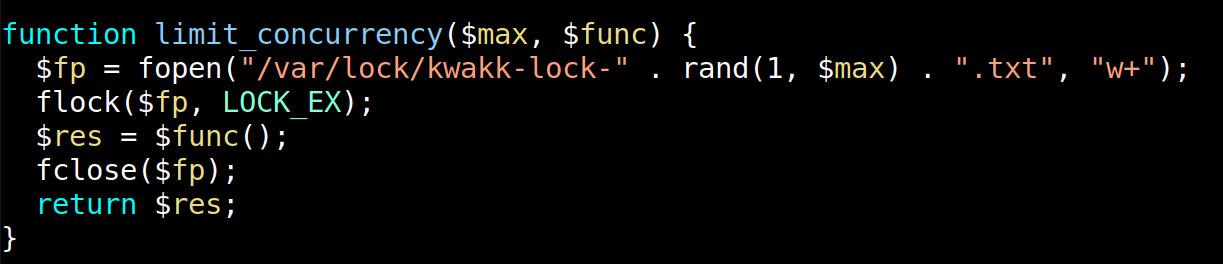

So what’s our minimal code to reduce concurrency to one instance of the search command running at a time? This:

```php
# Ensure that we don't have more than one search at a time.
$fp = fopen("/var/lock/kwakk-lock.txt", "w+");
flock($fp, LOCK_EX);
$results = do_search($string);
fclose($fp);
```

(flock will block until the lock has been freed, and fclose will free the lock.)
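If you’d rather turn visitors away than have them queue up behind the lock, you can pass LOCK_NB, which makes flock return false right away instead of blocking. A sketch of that, where the helper name try_exclusive and the Retry-After value are made up for illustration:

```php
<?php
// Hypothetical helper: run $work only if nobody else holds the lock;
// otherwise return null right away instead of waiting.
function try_exclusive(string $lockname, callable $work) {
    $fp = fopen($lockname, "c");
    if ($fp === false) {
        return null;             // can't even open the lock file: fail closed
    }
    try {
        // LOCK_NB makes flock() return false at once instead of blocking.
        if (!flock($fp, LOCK_EX | LOCK_NB)) {
            return null;         // somebody else is busy searching
        }
        return $work();
    } finally {
        fclose($fp);             // closing the handle releases the lock
    }
}

// Example page logic: answer "503, try again later" while a search is running.
$results = try_exclusive("/var/lock/kwakk-lock.txt", function () {
    return "search results";     // stand-in for do_search($string)
});
if ($results === null) {
    http_response_code(503);
    header("Retry-After: 5");
    echo "Busy, please try again in a few seconds.\n";
}
```

Since null doubles as the “busy” signal here, the wrapped function shouldn’t return null itself.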

What if we want to wrap it up in a function and add some sanity checks for hung processes and stuff? (A process that dies releases its flock automatically, so only a hung one can hold on to the lock. That really shouldn’t happen these days on a PHP/Apache installation with the normal defaults, which should make a hung PHP process impossible, but anyway.)

Let’s see… my PHP is rusty. Does PHP have anonymous functions now? Yes! What about closures? Eh… Kinda.
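The “kinda” is mostly about capturing: an anonymous function only sees outer variables you import with use, and it captures them by value at the moment it’s created, unless you ask for a reference with use (&$x). Arrow functions (PHP 7.4 and later) capture automatically, also by value. A small illustration with made-up variable names:

```php
<?php
$string = "duck";

// Classic anonymous function: outer variables must be imported with `use`,
// and they are copied when the closure is created.
$byValue = function () use ($string) {
    return "searching for $string";
};

// `use (&$string)` captures a reference instead, so later changes show up.
$byRef = function () use (&$string) {
    return "searching for $string";
};

// Arrow functions capture outer variables automatically, by value.
$arrow = fn() => "searching for $string";

$string = "goose";
echo $byValue(), "\n";   // searching for duck
echo $byRef(), "\n";     // searching for goose
echo $arrow(), "\n";     // searching for duck
```

So with an arrow function, the call further down could also be written as limit_concurrency(1, fn() => do_search($string)).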

OK, here’s something:

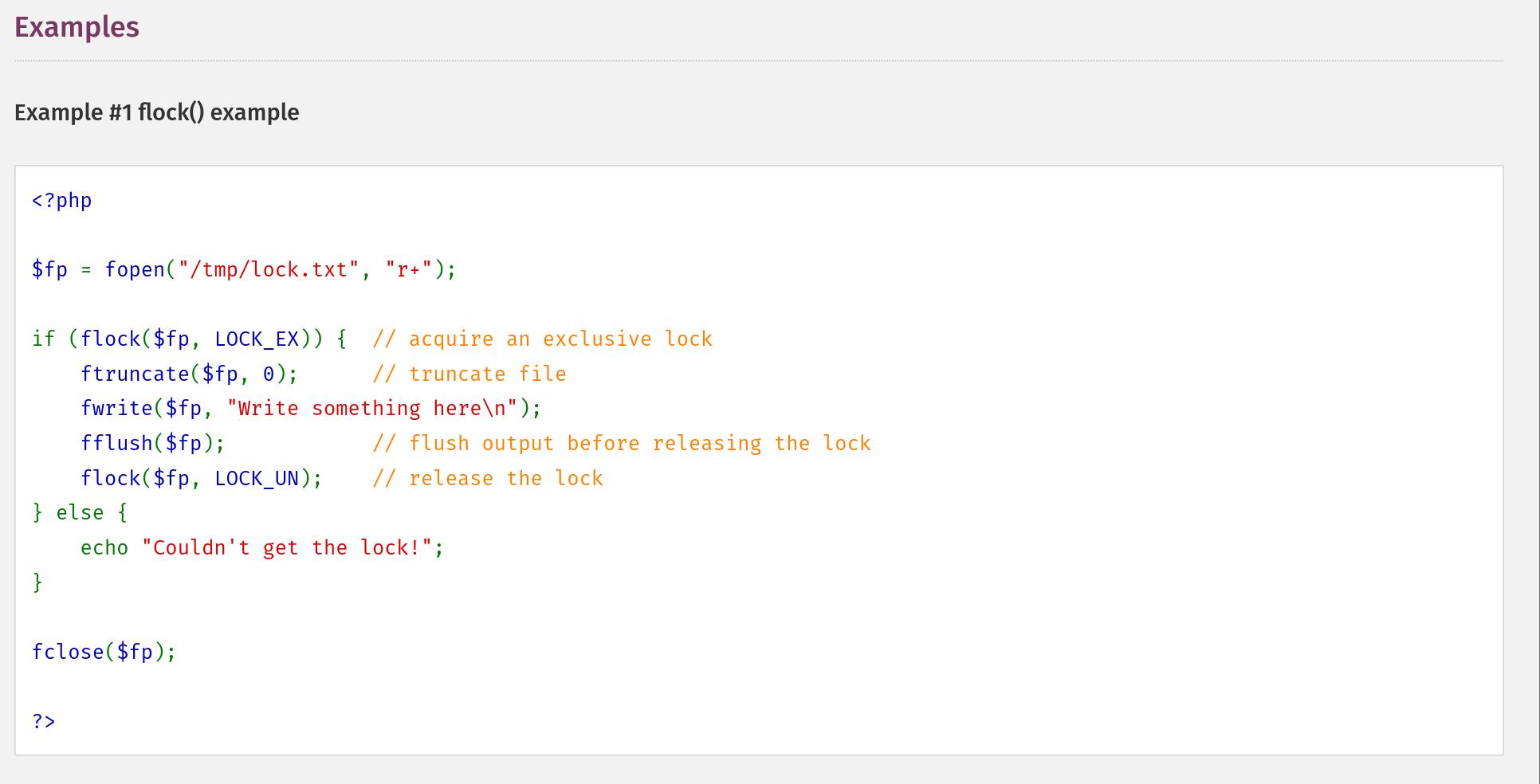

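```php
function limit_concurrency($max, $func) {
    # Pick one of $max lock files at random and wait for that one.
    $lockname = "/var/lock/kwakk-lock-" . rand(1, $max) . ".txt";

    # "c" creates the file if needed but, unlike "w+", doesn't truncate it,
    # so merely opening the file doesn't bump its mtime.
    $fp = fopen($lockname, "c");

    while (!flock($fp, LOCK_EX|LOCK_NB)) {
        # Sanity check: If the lock has been held for a very, very long
        # time, then something is wrong somewhere, so delete the file and
        # try again.
        clearstatcache(true, $lockname);  # don't trust PHP's cached stat() info
        if (file_exists($lockname) &&
            time() - filemtime($lockname) > 120) {
            unlink($lockname);
            fclose($fp);
            $fp = fopen($lockname, "c");
        }
        sleep(1);
    }

    # Got the lock: write our PID, which also updates the mtime that the
    # sanity check above looks at.
    ftruncate($fp, 0);
    fwrite($fp, getmypid() . "\n");
    fflush($fp);

    try {
        return $func();
    } finally {
        # Release the lock even if $func() throws.
        flock($fp, LOCK_UN);
        fclose($fp);
    }
}
```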

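The concurrency level here is sloppy: it ensures that we never have more than $max searches running, but it doesn’t ensure that we reach $max, since each request picks a random lock file and waits for that one even if another one is free. It doesn’t really matter much. To use it, say something like: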

```php
$results = limit_concurrency(1, function() use ($string) {
    return do_search($string);
});
```

Gorgeous! PHP is the best language.
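If the sloppiness ever does matter, one option is to try every lock file without blocking and take the first free one, only sleeping when all of them are busy. A sketch of that, where the function name limit_concurrency_any_slot and the $lockdir parameter are made up here:

```php
<?php
// Hypothetical variant: instead of waiting on one random lock file, try
// every slot without blocking and take the first free one, so up to $max
// searches can actually run at once. Assumes $max >= 1.
function limit_concurrency_any_slot(int $max, callable $func, string $lockdir = "/var/lock") {
    while (true) {
        // Start at a random slot so concurrent requests spread out.
        $start = rand(0, $max - 1);
        for ($i = 0; $i < $max; $i++) {
            $slot = ($start + $i) % $max + 1;          // slots are numbered 1..$max
            $fp = fopen("$lockdir/kwakk-lock-$slot.txt", "c");
            if ($fp === false) {
                throw new RuntimeException("Cannot open lock file in $lockdir");
            }
            if (flock($fp, LOCK_EX | LOCK_NB)) {
                try {
                    return $func();
                } finally {
                    flock($fp, LOCK_UN);
                    fclose($fp);
                }
            }
            fclose($fp);                               // slot is busy; try the next one
        }
        usleep(200000);                                // all $max slots busy; wait 0.2 s
    }
}
```

It’s called the same way as limit_concurrency, and it leaves out the stale-lock sanity check for brevity.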

So there you go. It’s not complicated to achieve, but it really should be even simpler than this.