Yesterday, I started messing around with a chatbot based on magazines about comics, and today I’m experimenting a bit more to see whether anything interesting… er… happens?

So I’m using Qwen/Qwen2.5-7B-Instruct, and the results are of the LLM quality we’ve all come to expect: “Never been married…” “They annulled Superman and Lois Lane’s Marriage”…

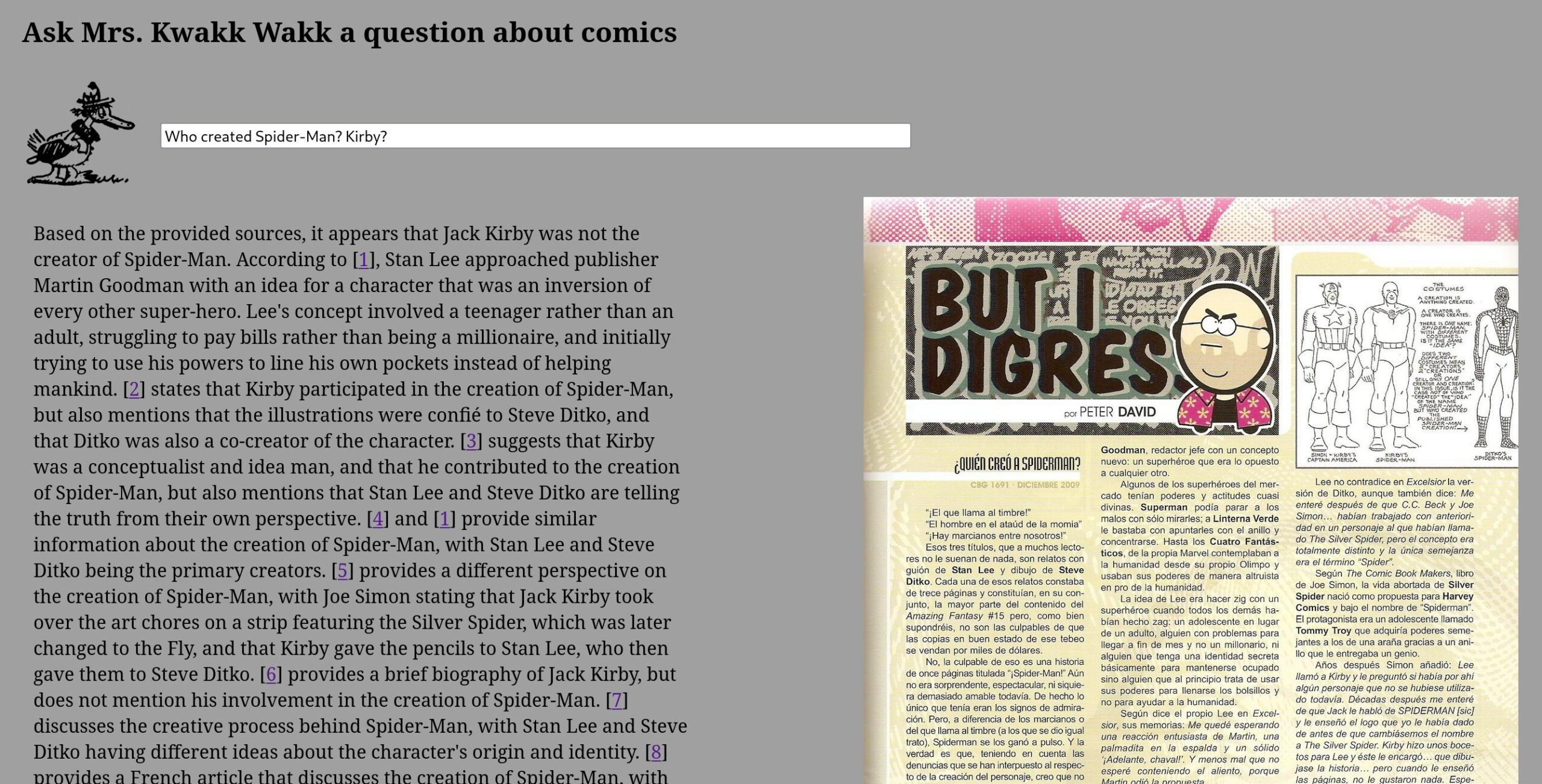

This query was run after incorporating only the English-language (and American-language) magazines. After adding the rest of the world (which increases the corpus by 80%):

That’s more useful. (Keep in mind that the “knowledge cutoff” for the collection of comics magazines is around 1999; it has few newer magazines.)

With 14B, there’s not much difference, really…

And, of course, the most burning question on everybody’s mind…

Well, that’s better than the response yesterday.

OK, it’s a leading question…

So that’s all bullshit. Nice that LLMs are still kinda stupid, but I guess it’s all going to be fixed in the next version, right?

Meanwhile, the ChatGPT window that has been guiding me through the LLM installation and corpus ingestion has gotten so slow that it’s not usable any more.

So this has been a problem for many years, and OpenAI’s brilliant engineers haven’t fixed it. The problem is all client side — it updates too much of the DOM tree when inserting new text.

So how do brilliant prompt engineers deal with this problem?

…

Asking a question and then reloading the page seems to work, but man.

Then I tried Mistral-Nemo-Instruct-2407.

Llama-3.1-8B-Instruct seems to be the best of them?

But very chatty.

So there you go… or rather, you don’t, because I’m not making this publicly available.

Well, we’ll see whether this’ll actually be useful when blogging about comics, which is my use case here.