I’ve been watching a buttload of 40s movies over the past few months, and I was thinking about watching something really modern next.

And then it occurred to me: How about if I watch all “Netflix Original” movies released in 2019? Sizzling fresh movies!

And if you’ve ever read this blog before, you’ll know there’ll be complications, right? Prepare to scroll for days!

According to Wikipedia, there were about 70? 80? of these movies in 2018, so presumably there’ll be a similar number this year.

What counts as a “Netflix Original” varies a bit, apparently. Some of these are produced (i.e., financed) by Netflix directly, and others are picked up for exclusive distribution by Netflix after they’ve already been made. So perhaps I’ll be adjusting the criteria after having watched a few of the movies… or not? We’ll see.

But how to watch these movies? I have an Apple laptop now, so I could plug the laptop into the TV and watch it that way, but it turns out that Netflix limits the resolution on real computers to 1.4K (or, as some people quaintly call it, “720p”). That’s annoying, because the majority of Netflix Originals are available in 4K. I’d be getting, what, 15% of the pixels I could be getting?

(/ (* 4000 2000) (* 1400 720)) => 7

Sad.
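That sum uses rounded-off numbers. Here is a quick sketch with the standard frame sizes instead, assuming 3840×2160 for consumer “4K” and 1280×720 for “720p” (those sizes are assumptions, not anything Netflix reports). The function name is made up for illustration.

```bash
#!/bin/bash

# Integer ratio between the pixel counts of two frame sizes.
# Usage: pixel_ratio WIDTH_A HEIGHT_A WIDTH_B HEIGHT_B
pixel_ratio() {
    echo $(( ($1 * $2) / ($3 * $4) ))
}

# Standard UHD "4K" versus standard "720p" (assumed frame sizes).
pixel_ratio 3840 2160 1280 720
```

That prints 9, so “720p” would be one ninth of the pixels, or about 11%. Even sadder.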

My TV is “smart”, but I’m not insane enough to ever let it go on the interwebs. I don’t want a 30kg brick in my TV room after an update or virus has had its fun with it.

But! I have a Chromecast Ultra! And Netflix allows 4K media on the Chromecast!

And I mean, I have to blog this thing, so I have to screenshot, right?

Right.

And you’re not supposed to be allowed to do that because that’s the whole point of Netflix limiting the resolution to devices that they control, and the HDCP 2.2 line to the TV is supposed to be unhackable OOOH DRM WOO WOO.

But of course people have been making devices to defeat all this nonsense.

First of all, I got an Ezcoo 4K HDMI splitter. It promises to be able to take a 4K input source and pass the HDCP 2.2 woo from a 4K TV to the device, thereby fooling it into thinking that it has a secure line.

Meanwhile, there’s another output on the splitter (that can either be 4K or 2K (some people quaintly call this “1080p”)) and you can put an HDMI recording device there.

I got an HDML Cloner Box Evolve, which promises to take a 2K input and has a remote that you can screenshot with. It can also record the entire video, but that’s not really what I’m interested in… And it’s probably called “HDML” to avoid having the HDMI people sue them? I’m just guessing.

Let’s plug all this in!

So pretty.

And the Chromecast is apparently satisfied: It allows 4K to pass to the TV, according to the TV.

But!

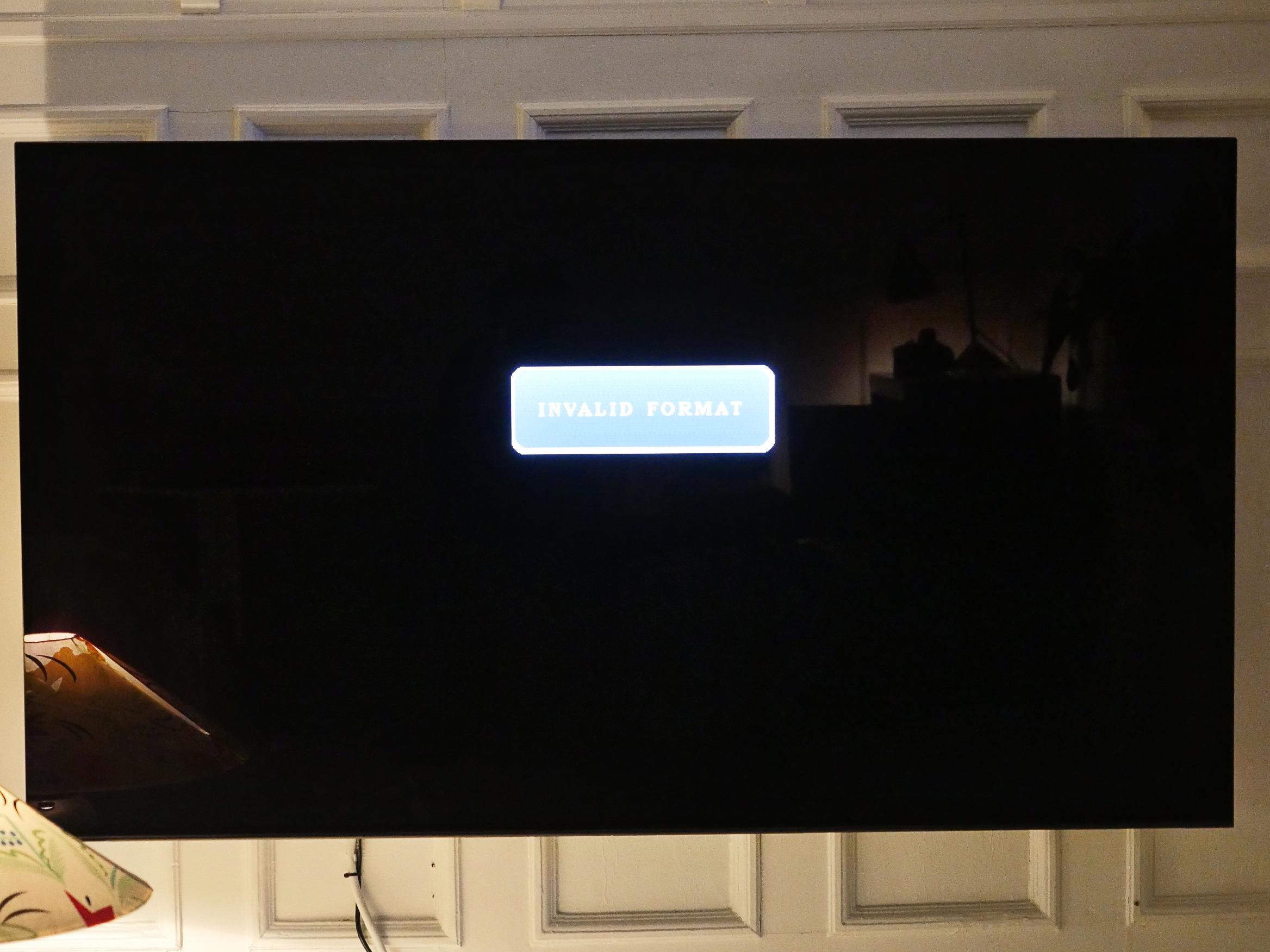

When looking at the output from the HDML Cloner Box Evolve, all the screenshots just said “INVALID FORMAT”.

And the passthrough HDMI output on the Cloner Box says the same thing.

Is that coming from… the Cloner Box, the Ezcoo HDMI splitter, or from the Chromecast? And what’s invalid? The format on the SD card it’s writing to? The … HDMI format? What!??!!

But then I noticed this dip switch on the Ezcoo splitter. Downscaling to 2K is apparently not the default setting. The Cloner Box claims to be able to take a 4K signal (even if it only records in 2K), but perhaps this is the problem anyway?

Yes! Now it’s screenshotting!

OK, now I have a 4K path from the Chromecast to the TV, while being able to screenshot in 2K. Let’s see what the Netflix app thinks about all this!

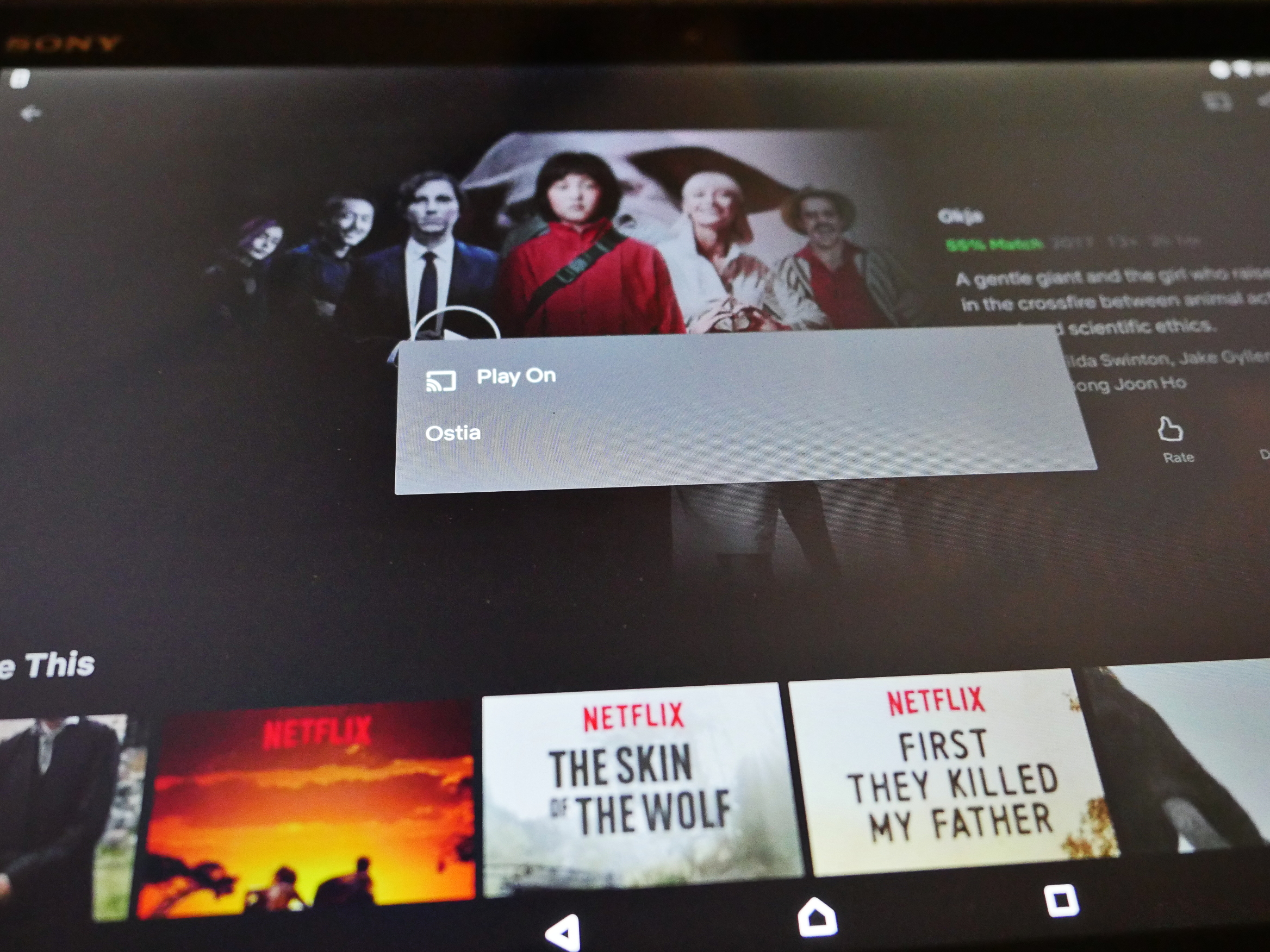

As the control device, I’m using an Android tablet, and as the test movie, let’s go with Okja, because it’s in 4K and I’ve already seen it.

Let’s see… there it is…

I tap the Chromecast icon, and it offers to connect to it and stream from it.

Aha! Once I tapped the screencast logo, the “Ultra HD” (code word for 4K) popped up in the Netflix app.

And there’s Okja! Well, that’s not actually Okja but it’s Jake Gyllenhaal and that’s close enough.

And there’s Okja! On the screenshot from the Cloner Box. The image looks quite nice… The screenshot is 2K, of course, but whatevs.

And the TV says that whatever it’s getting from the Chromecast is still in 4K. But… is it?

Apparently, there’s no way to make the Netflix app say what resolution it’s streaming in, or what the bitrate is. Both are important for the video quality, of course: If you have a 4K video with a bitrate of nothing, you’ll get a really ugly picture. (I’m looking at you, Amazon Prime Video!) This looks pretty fine, I think? No obvious issues? And it’s at least 2K, I think.

Telling the difference between 2K and 4K can be pretty difficult. It’s just that when you’re watching in 4K you go “whoa” a lot more, but a lack of “whoa” doesn’t necessarily mean that it’s not 4K: It might just be the director not going for “whoa”.

(Cue Dear Reader going “if you can’t tell the difference, whyyyy!” and to you I just have to say: Phooey.)

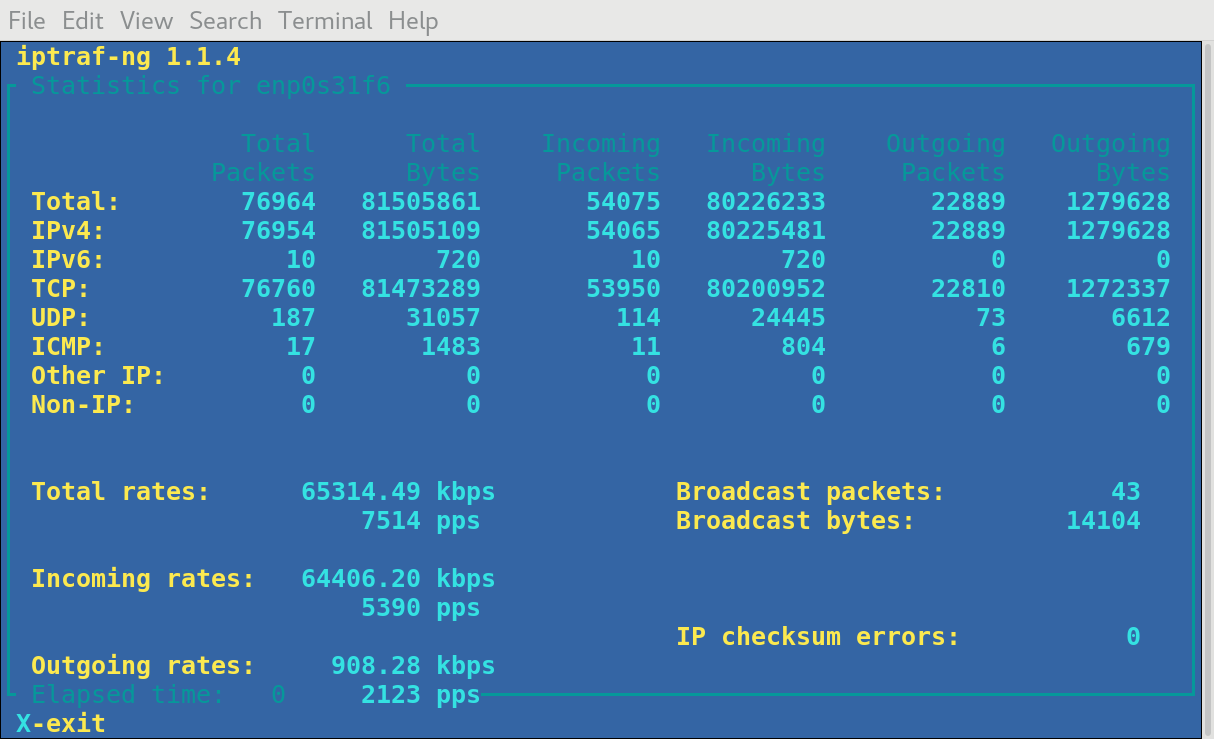

What does the firewall say?

Hm…

It seems to vary between 3MB/s and 60MB/s? Oh, yeah, it’s buffering and then playing, so it’ll vary a lot… And I’m usually used to thinking about this as bitrates, not byte rates…

OK, let’s write a simple script:

#!/bin/bash

duration=$1

device=enp1s0

direction=TX

times=0

start=$(ifconfig $device | grep "$direction packets" | awk '{ print $5; }')

while [ $times -lt $duration ]; do

sleep 1

times=$(( $times + 1 ))

end=$(ifconfig $device | grep "$direction packets" | awk '{ print $5; }')

echo $(( (($end - $start) / 1000 / $times) * 8 ))

done
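The same measurement can be sketched without parsing ifconfig output, since Linux also exposes the raw byte counters under /sys/class/net. This is a variation, not the script that produced the numbers in this post. The function names are made up for illustration, and enp1s0 and the TX direction are just carried over from the script above.

```bash
#!/bin/bash

# Read the cumulative byte counter for a device from sysfs.
# direction is "tx" or "rx".
read_bytes() {
    local device=$1 direction=$2
    cat "/sys/class/net/$device/statistics/${direction}_bytes"
}

# Average bitrate in kbit/s between two counter readings.
# Multiplies before dividing to lose less to integer rounding.
avg_kbps() {
    local start=$1 end=$2 seconds=$3
    echo $(( (end - start) * 8 / 1000 / seconds ))
}

# Print the running average once per second for DURATION seconds.
measure() {
    local duration=$1 device=${2:-enp1s0} direction=${3:-tx}
    local start elapsed=0
    start=$(read_bytes "$device" "$direction")
    while [ "$elapsed" -lt "$duration" ]; do
        sleep 1
        elapsed=$(( elapsed + 1 ))
        avg_kbps "$start" "$(read_bytes "$device" "$direction")" "$elapsed"
    done
}

# Example: measure 600
```

The output is in kilobits per second, so a line saying 5088 reads as roughly 5Mbps.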

And then compare an “HD” thing to an “Ultra HD” thing. As the “HD” thing I chose the Teen Titans TV show.

Ermagerd. I’ve never used the Chromecast before… why is everything so washed out? The blacks are supposed to be black, not totally gray.

Oh, well, I’ll have to investigate that too, but not while doing the bitrate thing.

Ok, I got 778… er… so that’s less than 1MB/s?

Let’s try it again but multiply by 8 to get bitrate.

OK, now it says 24560, which means that it’s a bitrate of 24Mbps? Hm. Perhaps one minute is just too short a time to measure, what with all the buffering. Let’s give it ten minutes.

And while I’m waiting I can try to investigate the washed out/low saturation thing going on. Apparently there’s a lot of people on Google having the problem… Hm… One internet says that it’s a problem with TVs and Chromecast not managing to convey to each other what HDR format to use. OK, on my Sony A1E OLED TV I switched HDR from “auto” to “HDR10”, aaaand…

Yowza! That’s some black blackness and some super-saturated colours. But… is it right? The colours are way crazy…

Aaand… it says “5088”, so I guess 5Mbps. That sounds about like what I expected.

Let’s try with some “Ultra HD”. Like the Punisher TV series.

HDR10 seems to do the right thing there too. But is that 4K grain?

The script says that it’s streaming in 9064, i.e., 9Mbps. That’s not a lot for 4K media: A 4K bluray is about 35-50Mbps.

Let’s try to reproduce our findings!

- Okja (UHD): 9.5Mbps

- Men in Tights (HD): 7.6Mbps

- Police Academy (HD): 7.6Mbps

- Ready Player One (HD): 5.7Mbps

- Bird Box (UHD): 6.6Mbps

- Okja (again) (UHD): 6.1Mbps

Well… that was… inconclusive? When the bitrate varies by 50% on the same movie when playing at different times, that’s not very… helpful.
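To put a number on that spread, here is a small sketch using the two Okja readings from the list above. Which reading counts as the baseline is a choice, so it prints both. The function name is made up for illustration.

```bash
#!/bin/bash

# Percentage difference between two readings, relative to the first one.
# Uses awk because shell arithmetic is integer-only.
percent_diff() {
    awk -v base="$1" -v other="$2" \
        'BEGIN { printf "%.0f\n", (other - base) / base * 100 }'
}

percent_diff 6.1 9.5   # second Okja run as the baseline
percent_diff 9.5 6.1   # first Okja run as the baseline
```

That prints 56 and -36: the first run was 56% higher than the second, or the second was 36% lower than the first, depending on how you look at it.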

Perhaps I should have a device other than a Chromecast to do this. Or… perhaps the Chromecast should use ethernet and not wifi? The wifi network should do 30Mbps easily (and does when I test with a laptop), but who knows what the Chromecast is able to do?

Unfortunately the Chromecast can’t be on ethernet and wifi at the same time, and the wifi is on a separate “secure” network.

Everything is so complicated!

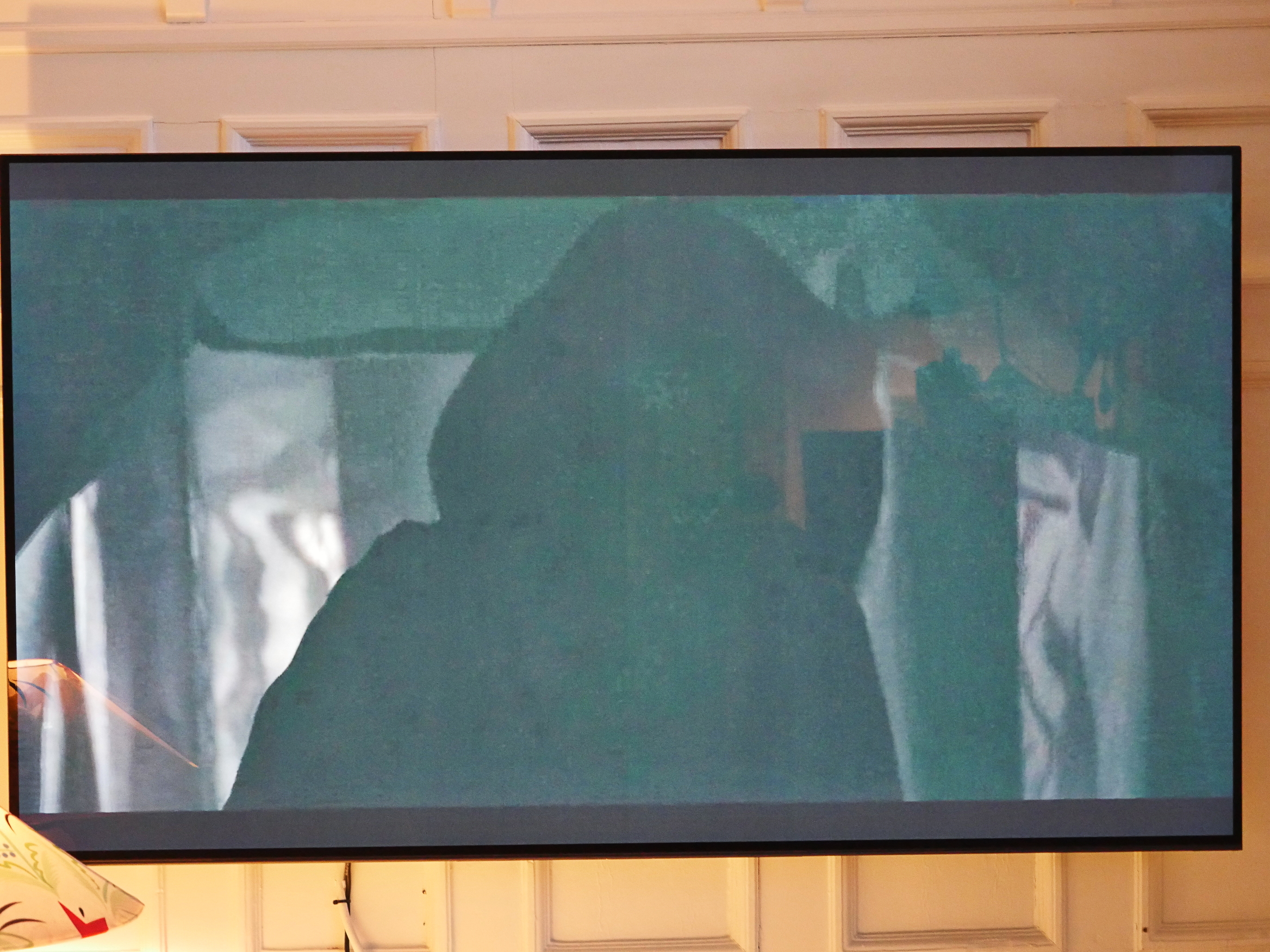

OK… what I should do is something I’ve wanted to do for years: Get some VLAN switches and create two separate wired networks, too.

I’ve had these dumb HP Procurve 1400-8G switches for more than a decade (as you can tell by the dust), and they’ve worked flawlessly. And I’ve had some flaky ones before that…

So what to buy? I’m extremely satisfied with the Procurve switches, so getting similar ones, but managed (i.e., with VLAN) instead would be an idea.

My additional requirements: They absolutely have to be fanless, and it would be nice if the power supply was internal, or at least small. I’ve had some HP switches where the power adapter is a huge monster, bigger than the switch, and that’s… just so… cumbersome? I mean, my network isn’t physically star-shaped, and involves three switches in series, and they’re all placed in pretty small spaces, so small and uncomplicated is better than big and complicated.

The first requirement should be a no-brainer: Virtually all of these small switches are fanless. But! HPE has this weird tendency to create lineups of switches with the same model name, and then there’s 8 port, 24 port, 48 port versions (in different form factors). And the larger ones have fans. So just going through all the spec sheets (because HPE helpfully just does one per series) is a hassle. And some of the models don’t explicitly say “no fan”, while others say “fan”, so…

And determining whether they have internal power supplies is impossible. There’s no pictures of the backs of the switches, and absolutely no pictures of the (possible) adapters.

But then I stumbled on this Netgear 8-Port Gigabit Web Managed Click Switch With 4-Port PoE+ (phew). The specs say that it has an internal power supply! The specs say “fanless”! *click* *buy*

See what doing proper specs does for sales, HP? SEE?!?!?!

So now I have three of these Netgears. I’m not sure whether Netgear makes good net gear or not, but I guess we’ll see.

Look! I’m being all proper and stuff and labelling the ports!

Setting up the VLAN stuff is really nice and trivial:

Couldn’t be simpler: The switch responds to a static IP address, so it’s easy to find, and has a built-in web server (which is nice and fast). Setting up a VLAN has never been this easy. Perfect.

The only thing that seems a bit janky here are the actual power connectors. They feel rubbery and don’t “click” in place, so it feels like they could, like, slide out without wanting to. It’s probably not a problem in practice, but that could have an improved feel to it.

Anyway! Chromecast now plugged into the “wifi” VLAN, so it can stream via ethernet while I control it from a WIFI tablet. Let’s see what bandwidth it uses now…

- Okja (UHD): 17Mbps

- Men in Tights (HD): 7.8Mbps

- Police Academy (HD): 8Mbps

- Ready Player One (HD): 8.1Mbps

- Bird Box (UHD): 16Mbps

Well, that’s better! The highest I had with WIFI was 9Mbps, so using ethernet it’s almost doubled. That’s a lot more bits to look at!

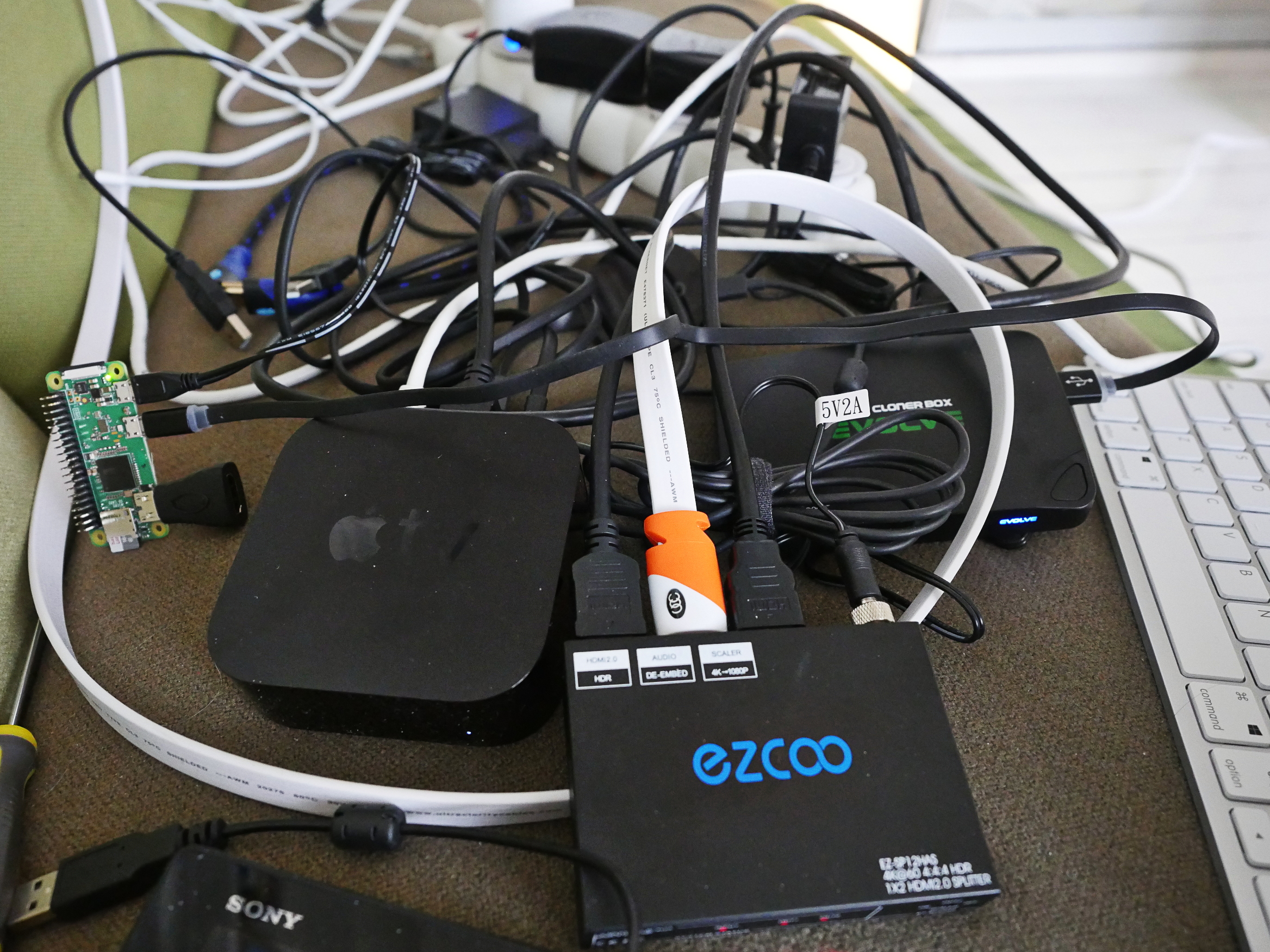

Meanwhile!

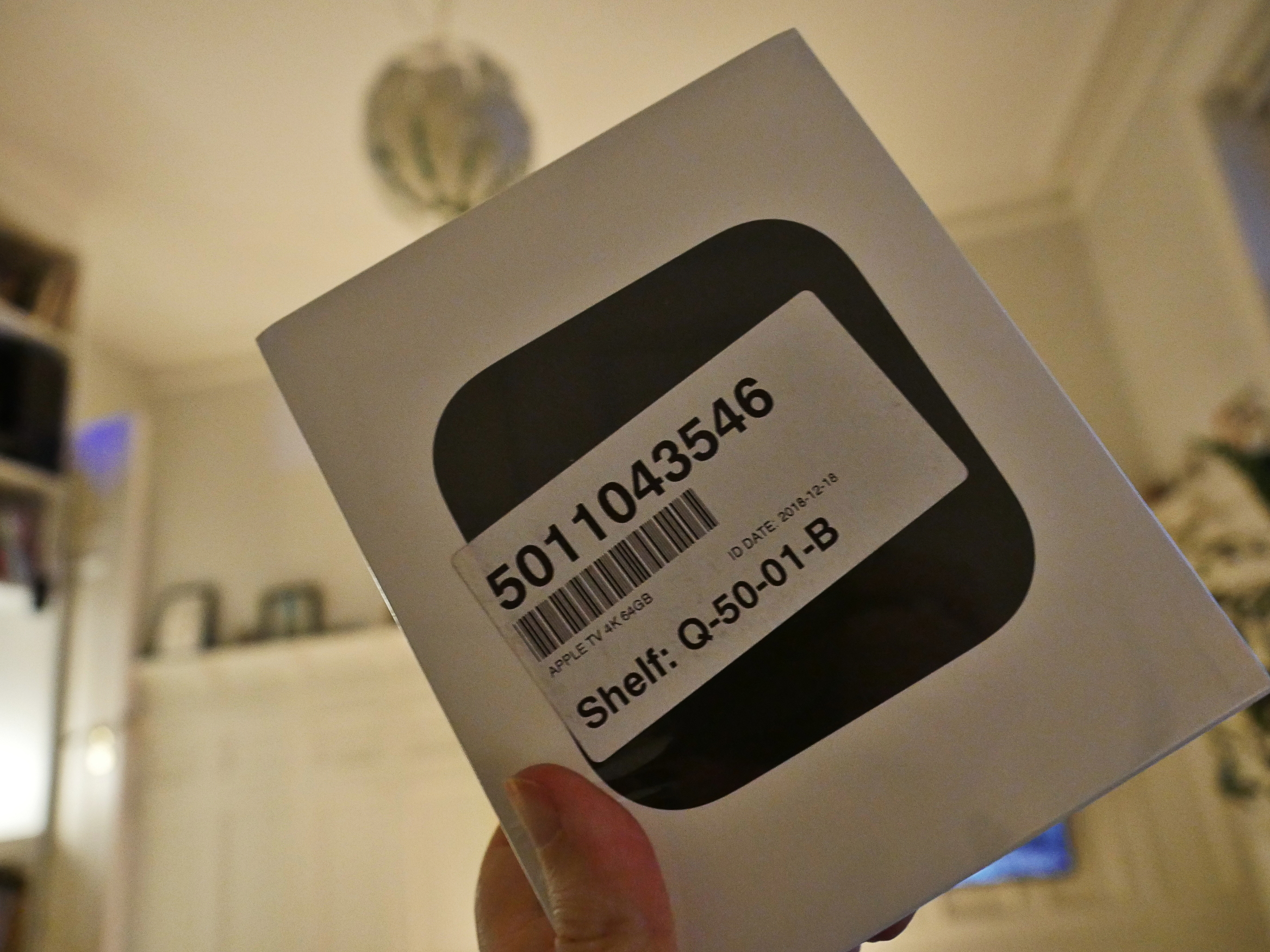

I bought an Apple TV because I just don’t trust Google not to fuck up. Especially since the Chromecast doesn’t seem to want to switch HDR on on my Sony A1E TV. Let’s see whether the Apple TV manages…

Hey, the Apple TV 4K isn’t as small as the first-generation Apple TVs used to be…

Let’s see whether it’s easy to set up…

Er… what? Uhm. *five minutes pass* Oh, you can click the top of the remote control! Apple UX discoverability is on point: Unless you know what you’re supposed to do, there’s absolutely no hint about how these devices are supposed to work…

Oh, never mind. There’s a manual included here. Oops. Yet another anti-Apple rant derailed by facts!

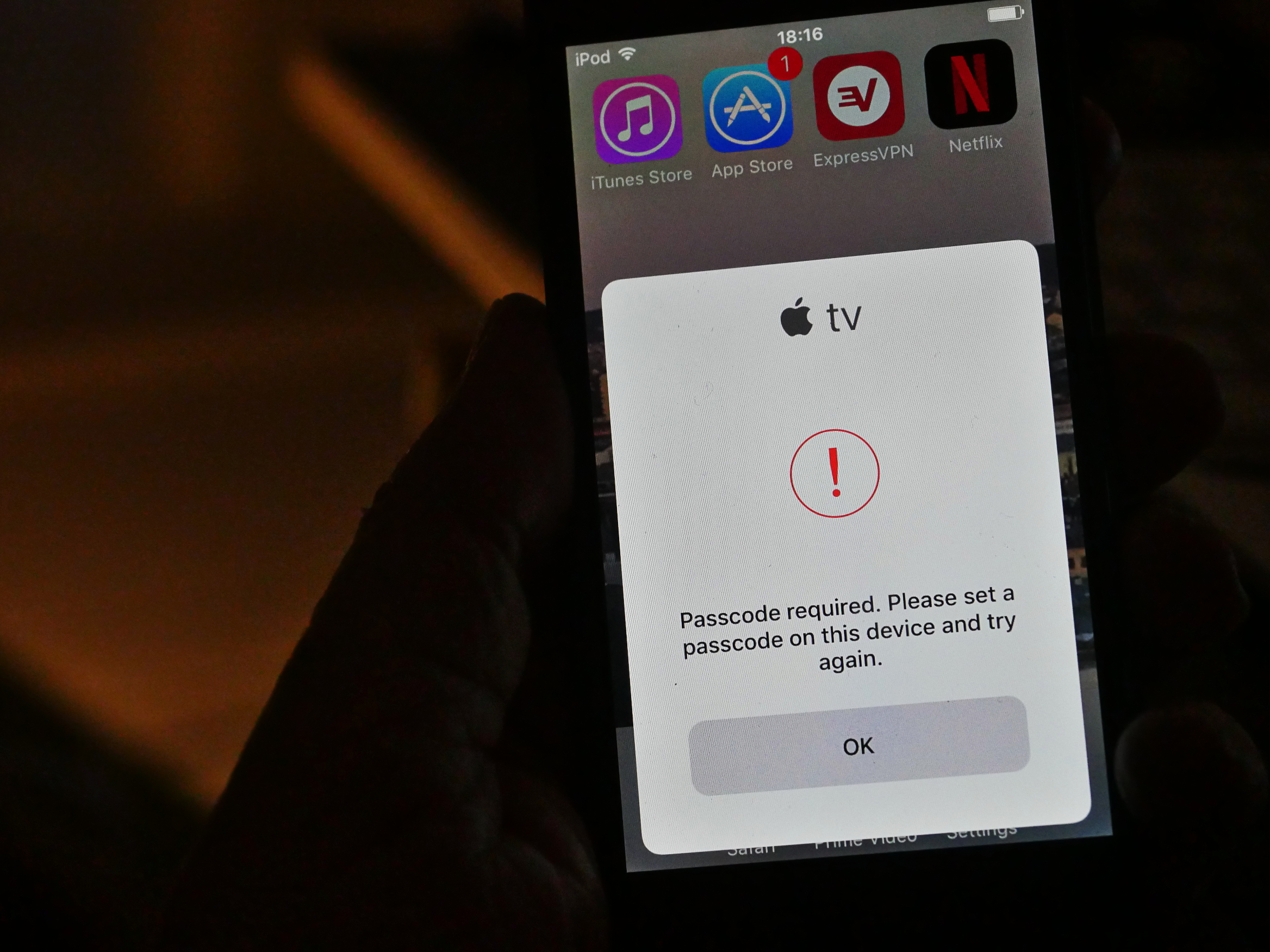

Uhm… it wanted to use an existing device to get the WIFI parameters etc, so I pulled out my iPod Touch, which helpfully just said the above. Do you think “OK” takes you to the passcode setting? No? Correct.

After some googling I found out where it was hiding (in the obvious place), and we’re off!

And after getting the credentials transferred, I could switch the passcode on the iPod Touch off again. So UX!

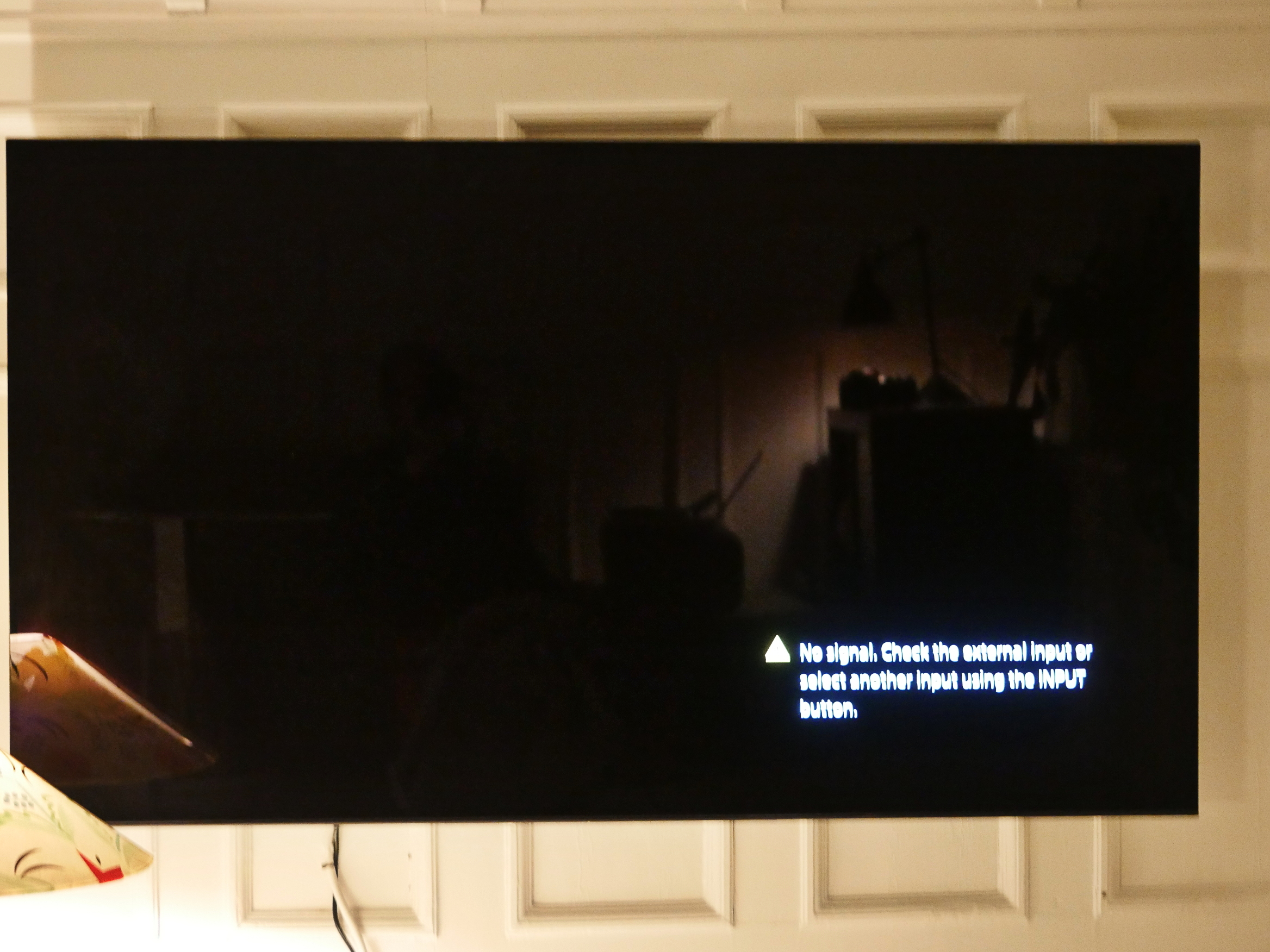

Hm… when it switches HDR on, the TV goes black? That’s not good…

As with the Chromecast, on “HDR Auto” everything is super-washed out, and when I switch HDR10 on, the blacks become properly black, but the colours are…. too much…

Hm… Oh! If I try to do 4K @ 60Hz HDR via the Ezcoo splitter box, it complains that the cable doesn’t have sufficient bandwidth. But if I do 4K @ 30Hz HDR, then it works fine, and the TV automatically detects the HDR and everything. Nice!
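A back-of-the-envelope sketch suggests why 30Hz gets through and 60Hz doesn’t. It rests on several assumptions: that the splitter tops out at the 18Gbps of HDMI 2.0 (not taken from its spec sheet), that HDR here means 10 bits per colour channel with no chroma subsampling, and that the standard 4K timings apply, with pixel clocks of 594MHz at 60Hz and 297MHz at 30Hz. The function and variable names are made up for illustration.

```bash
#!/bin/bash

# Approximate HDMI (TMDS) link rate in Mbit/s for video without chroma
# subsampling: three channels, 10 link bits per 8 bits of pixel data,
# scaled up for deeper colour.
# Usage: tmds_mbps PIXEL_CLOCK_MHZ BITS_PER_CHANNEL
tmds_mbps() {
    echo $(( $1 * 3 * 10 * $2 / 8 ))
}

LIMIT_MBPS=18000   # assumed ceiling (HDMI 2.0); not from the splitter's specs

# Print the link rate for one mode and whether it fits under the limit.
report() {
    local label=$1 rate
    rate=$(tmds_mbps "$2" "$3")
    if [ "$rate" -le "$LIMIT_MBPS" ]; then
        echo "$label: ${rate}Mbps, fits"
    else
        echo "$label: ${rate}Mbps, too much"
    fi
}

report "4K @ 60Hz, 10-bit" 594 10
report "4K @ 60Hz, 8-bit" 594 8
report "4K @ 30Hz, 10-bit" 297 10
```

Under those assumptions, 60Hz with 10-bit colour needs 22275Mbps, which is over the limit. Even 8-bit at 60Hz lands at 17820Mbps, within a whisker of it. 30Hz with 10-bit colour needs about 11137Mbps, which leaves plenty of room.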

The Apple TV is certainly easier to deal with than the Chromecast which is extremely

But let’s do some benchmarking! Let’s do some of the same UHD movies and add some new ones:

- Okja (UHD): 15Mbps

- Bird Box (UHD): 18Mbps

- Alex Strangelove (UHD): 18Mbps

Well, that’s not too bad. A normal 4K Bluray usually is somewhere around 40-50Mbps, and taking into account that Netflix is probably using a slightly more efficient codec, I’m getting an acceptable product.

Well, OK, this seems to be the way to go: Apple TV 4K @ 30Hz HDR. See, it’s simple!

So now I just have to have a convenient way to get the screenshots onto the computer. I mean, I don’t want to have to futz around with an SD card that I have to plug into the HDML Cloner Box and then transfer to the laptop…

I mean, I’m bone lazy.

I thought I had a solution here: Use a Flashair SD card. I use them in my cameras, and I’ve set it up so that I snap a picture and then the picture is pulled down into this Emacs buffer I’m typing in now. It’s great.

But unfortunately, I can’t use that with the HDML Cloner Box: First of all, the cards use the exFAT file system, and the box only understands FAT and VFAT. And I can’t reformat the Flashair card, because then the OS on the card goes AWOL and there’s no longer any WIFI on it.

The second problem is that there’s a partition table on Flashair cards, and the Cloner Box wants to write to it partitionless (i.e., the difference between /dev/sda1 and /dev/sda).

So that’s no go.

I was momentarily excited about the Sandisk Connect Wireless Stick, but it turns out that you can’t talk to it wirelessly while it’s plugged in as a USB memory stick, so that’s a pretty useless thing.

Other WIFI SD cards exist, and I guess I could give them a go, but during my last investigation into those, I found all the non-Flashair ones to suck in one way or another.

So I started thinking about whether Linux can emulate a USB mass storage device via a USB-A to USB-A cable somehow.

And the answer is… sort of? Normal USB chipsets in normal computers do not support this kind of tomfoolery, but the Raspberry Pi Zero W does via its USB on-the-go (OTG) port, which is wired directly to the CPU, so it can do whatever it wants. Or something.

So I’ve got a USB-A to USB-OTG adapter cable…

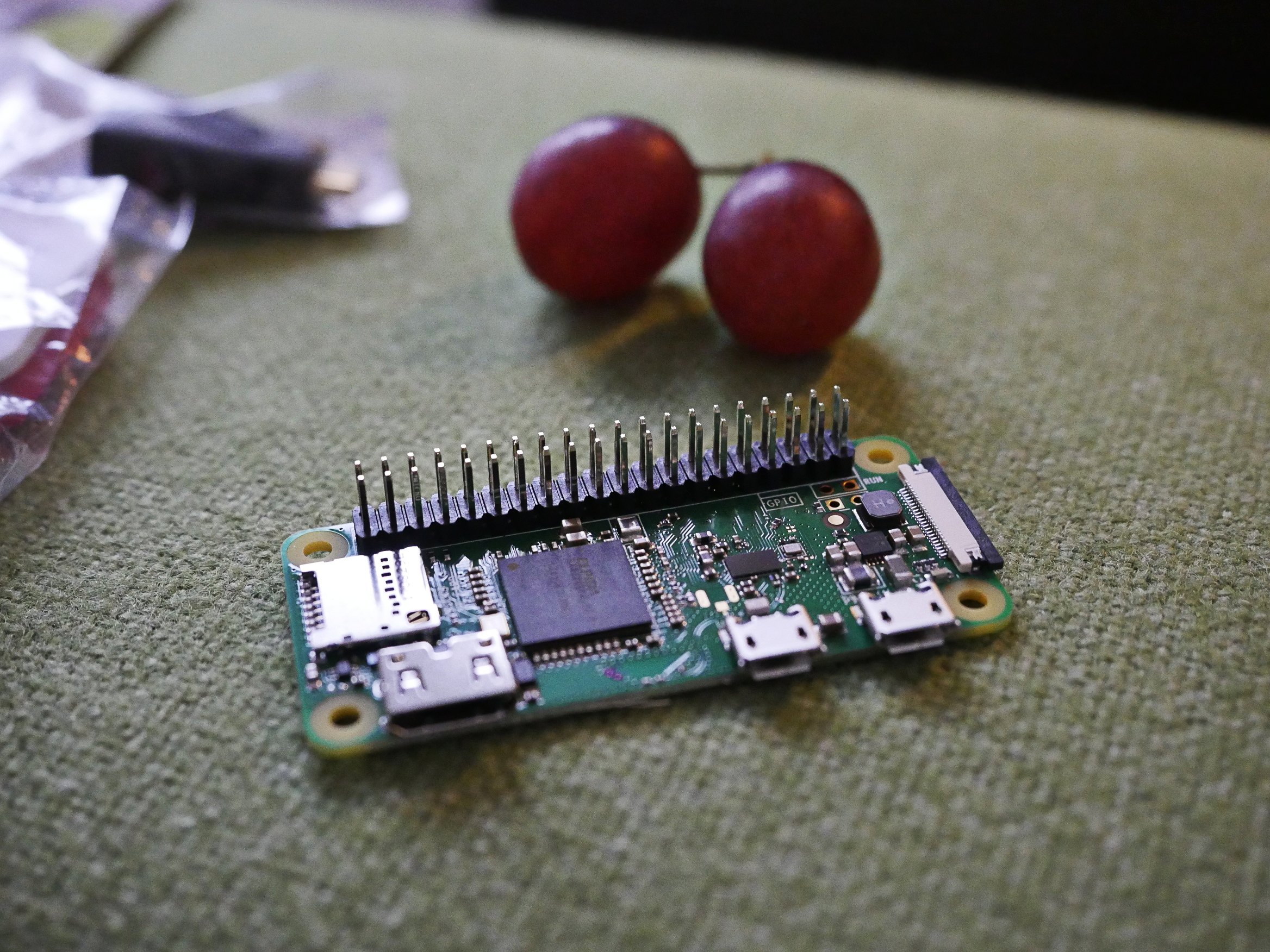

And the Zero W.

It’s so tiny! (Grapes for scale.)

And I got a case for it, and a mini-HDMI to HDMI adapter.

The problem is that the case makes the mini-HDMI too recessed, so I can’t get any HDMI signals out of it. I wondered whether the Zero was broken or something, but…

So then I got HDMI and could see what I was doing. The HDMI seems to drop out whenever it’s doing something strenuous, but it’s good enough to do some setup stuff, at least.

Getting the thing to speak wifi took me hours and hours. I followed all of the how-tos on the net, but no matter how I tweaked the wpa_supplicant.conf file, iwconfig said that it hadn’t tried to connect to the access point.

Finally, I tried to do the simplest thing possible:

# wpa_supplicant -i wlan0 -c <(wpa_passphrase Beige PASSWORD)

And then it paired and got DHCP and everything!

So I just

# wpa_passphrase Beige PASSWORD > /etc/wpa_supplicant/wpa_supplicant.conf

and then it works after reboot.

This is with Debian Jessie Raspbian, because one of the million discussion threads about wifi not working on Raspberry Pis said that it was marginally less non-working.

So, after all that wasted time, it’s time to get USB gadget mode working! Should be a breeze!

I’m using this guide.

Let’s see… this isn’t, like, working…

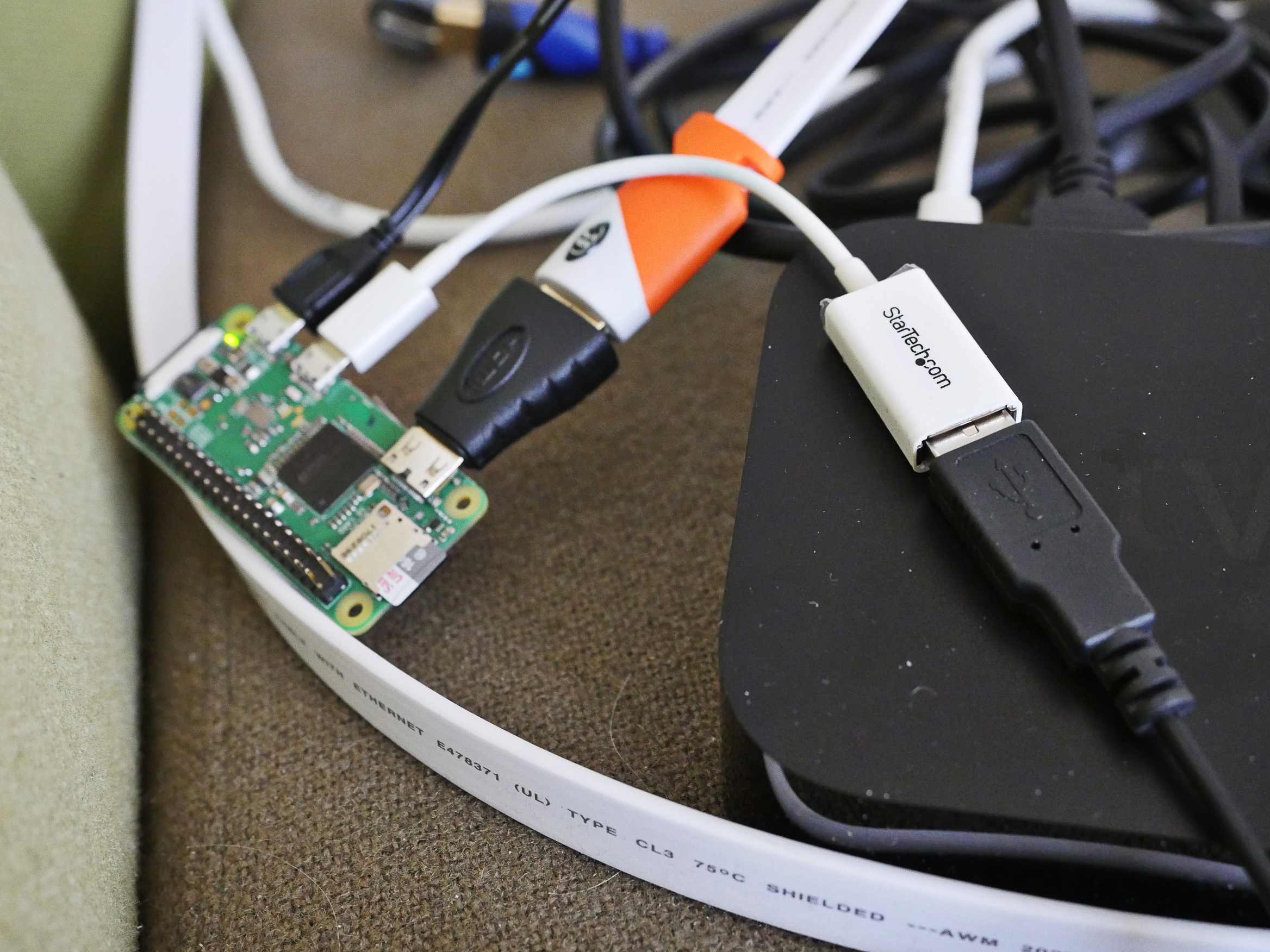

But then a wise person on irc asked me “why are you using an OTG adapter if the Pi has an OTG port?” That was a very good question. I switched to a normal USB-A to Micro-USB cable, and immediately:

Feb 14 14:26:25 marnie kernel: [1271626.095533] usb 1-2: New USB device found, idVendor=0525, idProduct=a4a5

Feb 14 14:26:25 marnie kernel: [1271626.095540] usb 1-2: New USB device strings: Mfr=3, Product=4, SerialNumber=5

Feb 14 14:26:25 marnie kernel: [1271626.095545] usb 1-2: Product: Mass Storage Gadget

Feb 14 14:26:25 marnie kernel: [1271626.095549] usb 1-2: Manufacturer: Linux 4.9.35+ with 20980000.usb

Feb 14 14:26:25 marnie kernel: [1271626.095553] usb 1-2: SerialNumber: deadbeef

Feb 14 14:26:25 marnie kernel: [1271626.096757] usb-storage 1-2:1.0: USB Mass Storage device detected

Feb 14 14:26:25 marnie kernel: [1271626.097256] usb-storage 1-2:1.0: Quirks match for vid 0525 pid a4a5: 10000

Feb 14 14:26:25 marnie kernel: [1271626.097412] scsi host1: usb-storage 1-2:1.0

Feb 14 14:26:26 marnie kernel: [1271627.123226] scsi 1:0:0:0: Direct-Access Linux File-Stor Gadget 0409 PQ: 0 ANSI: 2

Feb 14 14:26:26 marnie kernel: [1271627.124775] sd 1:0:0:0: Attached scsi generic sg1 type 0

Feb 14 14:26:26 marnie kernel: [1271627.125370] sd 1:0:0:0: [sdb] 4194304 512-byte logical blocks: (2.15 GB/2.00 GiB)

Success! The Pi shows up as a mass storage device.

But having the file system open on the Pi while the other host is writing to the backing file isn’t a good idea: Things get very confused. So only one thing that writes should have it open at a time.
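For reference, here is a rough sketch of the Pi side of this. It isn’t a copy of the guide: the path /piusb.bin and both function names are placeholders, the 2GiB size is just what the kernel log above reports, and it assumes the dwc2 overlay is already enabled so that the g_mass_storage module can load. Formatting the file directly, with no partition table inside it, should give the Cloner Box the partitionless FAT layout it wants.

```bash
#!/bin/bash

# Create a sparse backing file of the given size in MiB (no root needed).
make_backing_file() {
    local path=$1 size_mib=$2
    truncate -s "${size_mib}M" "$path"
}

# Format the file as one bare FAT32 file system and expose it over the
# OTG port. Needs root on the Pi, so it is defined here but not called.
attach_gadget() {
    local path=$1
    mkfs.vfat -F 32 "$path"
    modprobe g_mass_storage file="$path" stall=0 removable=1
}

# On the Pi, as root:
#   make_backing_file /piusb.bin 2048
#   attach_gadget /piusb.bin
```

2048MiB is 4194304 blocks of 512 bytes, which matches the size in the log.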

So let’s try it with the HDML Cloner Box:

You can clearly see the USB-A cable from the Cloner Box going to the Pi Zero, right? Right.

And… it works! I got two screenshots over!

Uhm… I hope that status line goes away…

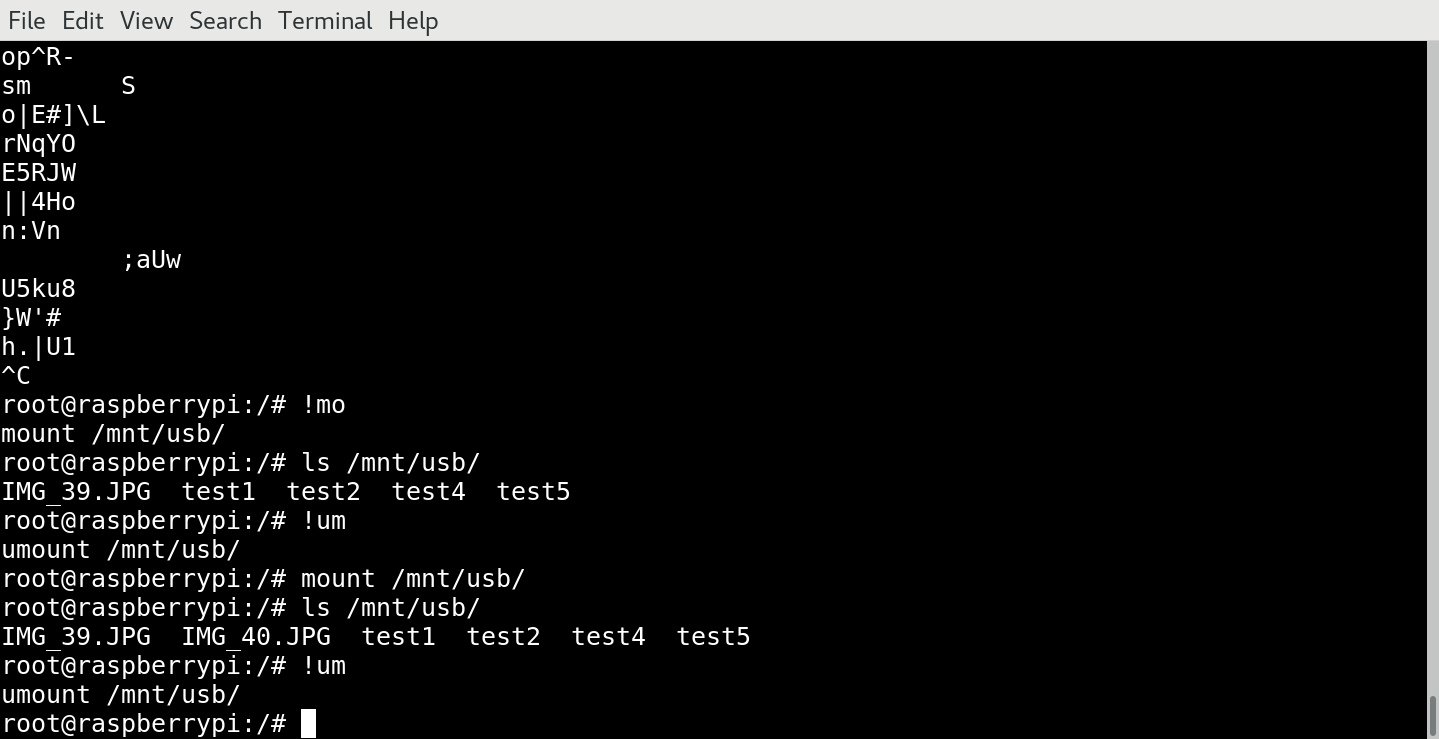

So my script is basically:

ssh $host mount /mnt/usb

rsync -av $host:/mnt/usb/ ~/films/"$film"/

ssh $host umount /mnt/usb

And that seems to work pretty reliably? At least during the ten minutes I’ve been testing.
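A slightly more defensive sketch of the same idea, so that the Pi’s copy of the file system gets unmounted even when the rsync fails halfway. The function name and the SSH and RSYNC variables are additions for illustration. They default to the real commands and exist mainly so the sequence can be dry-run, and the host name pi in the last comment is a placeholder.

```bash
#!/bin/bash

SSH=${SSH:-ssh}
RSYNC=${RSYNC:-rsync}

# Mount the gadget's backing file on the Pi, copy the screenshots over,
# and unmount again whether or not the copy succeeded.
# Usage: sync_screenshots HOST FILM
sync_screenshots() {
    local host=$1 film=$2 status=0
    $SSH "$host" mount /mnt/usb || return 1
    $RSYNC -av "$host:/mnt/usb/" "$HOME/films/$film/" || status=$?
    $SSH "$host" umount /mnt/usb || status=$?
    return "$status"
}

# Dry run: SSH="echo ssh" RSYNC="echo rsync" sync_screenshots pi "Okja"
```

The unmount matters because of the one-writer-at-a-time problem: if the Pi still has the file system mounted when the Cloner Box writes the next screenshot, things get confused again.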

Let’s see… Click on the remote, run the script and then this is here:

But… that looks really, really washed-out? Hm… Oh, yeah. It’s HDR? So I should probably run it through something to get to a better colour space?

Perhaps if I do

convert -contrast-stretch 0.20x0.10% IMG_53.JPG norm.jpg

Well, that’s better! But is that the right transform for HDR-screenshot-to-8bit? Probably not? I tried googling this pressing, universal problem, but didn’t find any ready-made solutions…

Excuse me?

Well, that went well! See how easy it is to watch 4K content from Netflix?

I mean, if you’re me?

Anyway, in conclusion:

I’m looking forward to watching some new movies, and I think it’s annoying that Netflix makes it so difficult to see if you’re getting what you pay for.

I realise that this makes things much easier for customer support: They don’t have to field calls from customers saying “I’m on the 4K plan, and I’m watching a Star Wars movie, but the bitrate says 6Mbps”. Understandable! But if you sold a product in a different arena, and made it virtually impossible for any normal customers to check whether they’re getting what they’re paying for, then there’d be consequences. Perhaps?

Perhaps not.

[Edit several months later: Somebody wondered whether I went on to actually watch Netflix and write about them? I did, and you can read about it here! I’ve suffered through all 2019 “Netflix Originals”. When the year is over I’ll even write a recap, but SPOILERS: It doesn’t turn out well for Netflix.]

A perfectly reasonable chain of events, thank you for a detailed run through.

haha had a good laugh. Thanks for sharing the odyssey. I enjoyed the read.

Niiice. A truly worthy pile of cables.

If you search Netflix for “Test Patterns” you will find some useful videos.

That say what bitrate the test patterns are streamed at?

Out of curiosity, have you tried playing something in the Android TV Netflix app on your Sony TV, and then pressing the [i+]-button on your TV remote? It’ll show the currently streaming resolution and (target) bitrate!